OpenSource Ghost

Limp Gawd

- Joined: Feb 14, 2022
- Messages: 233

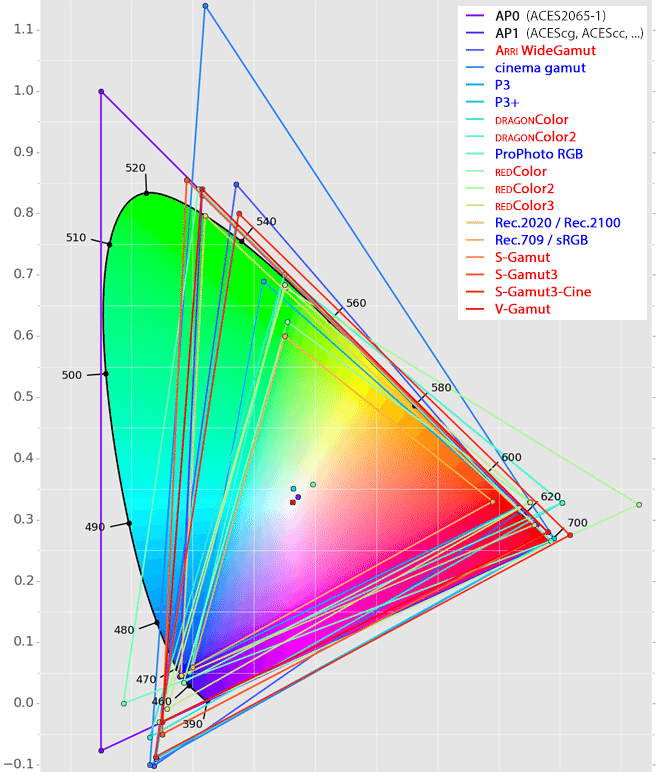

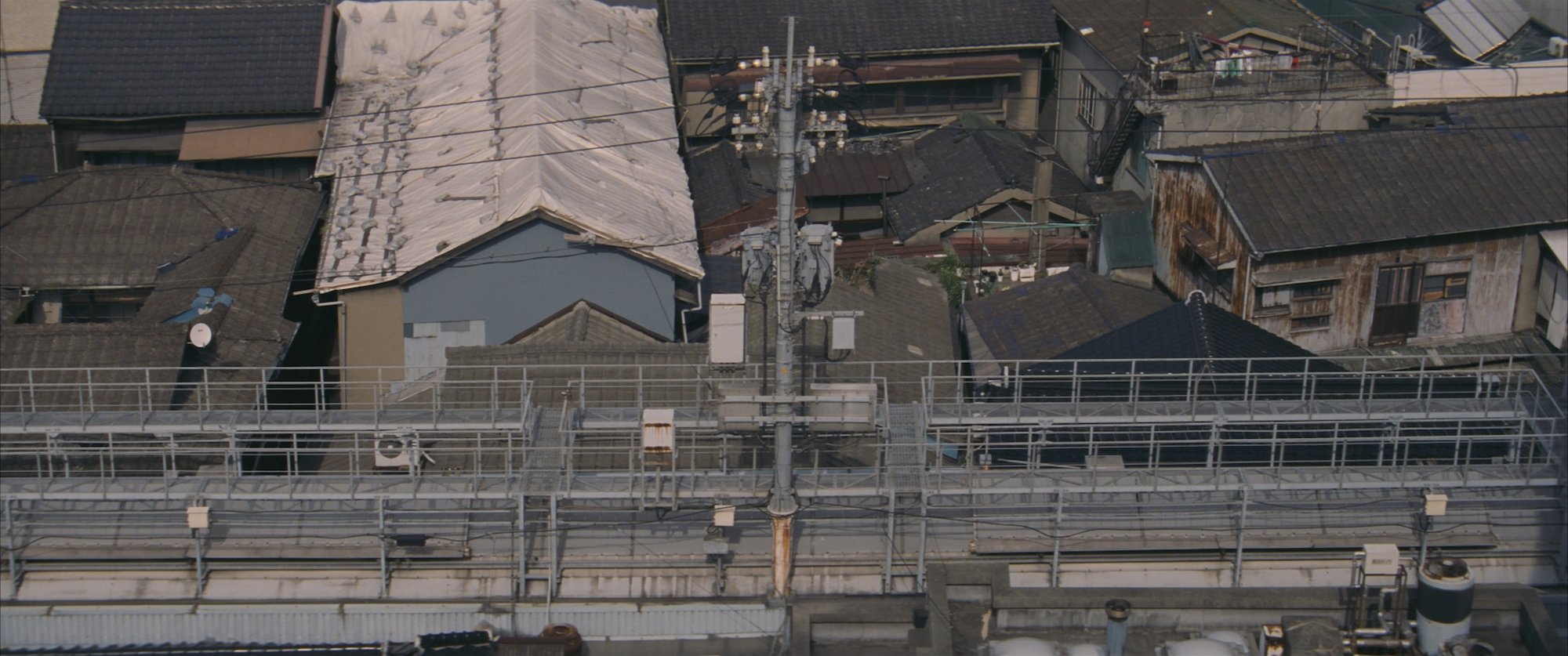

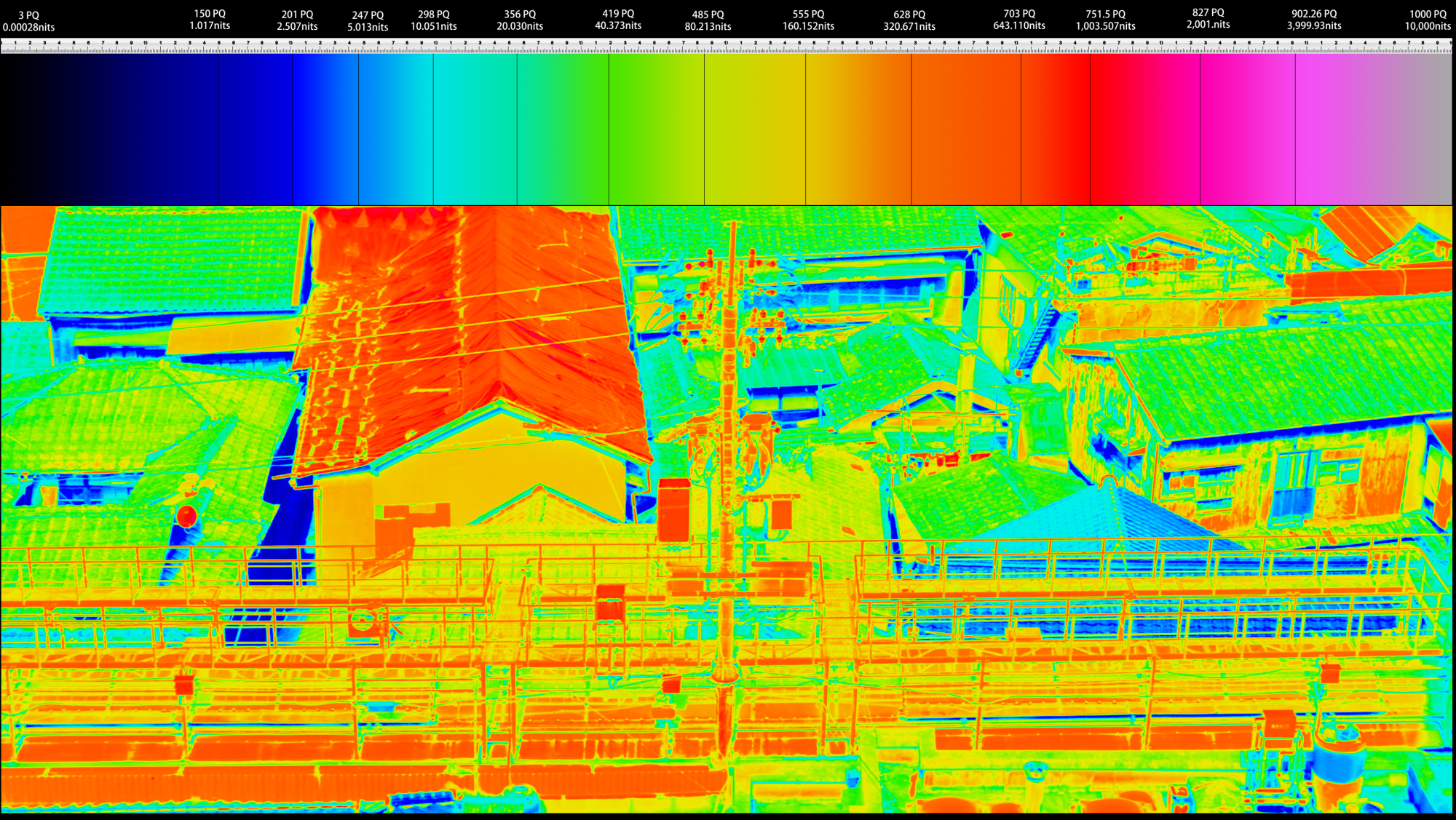

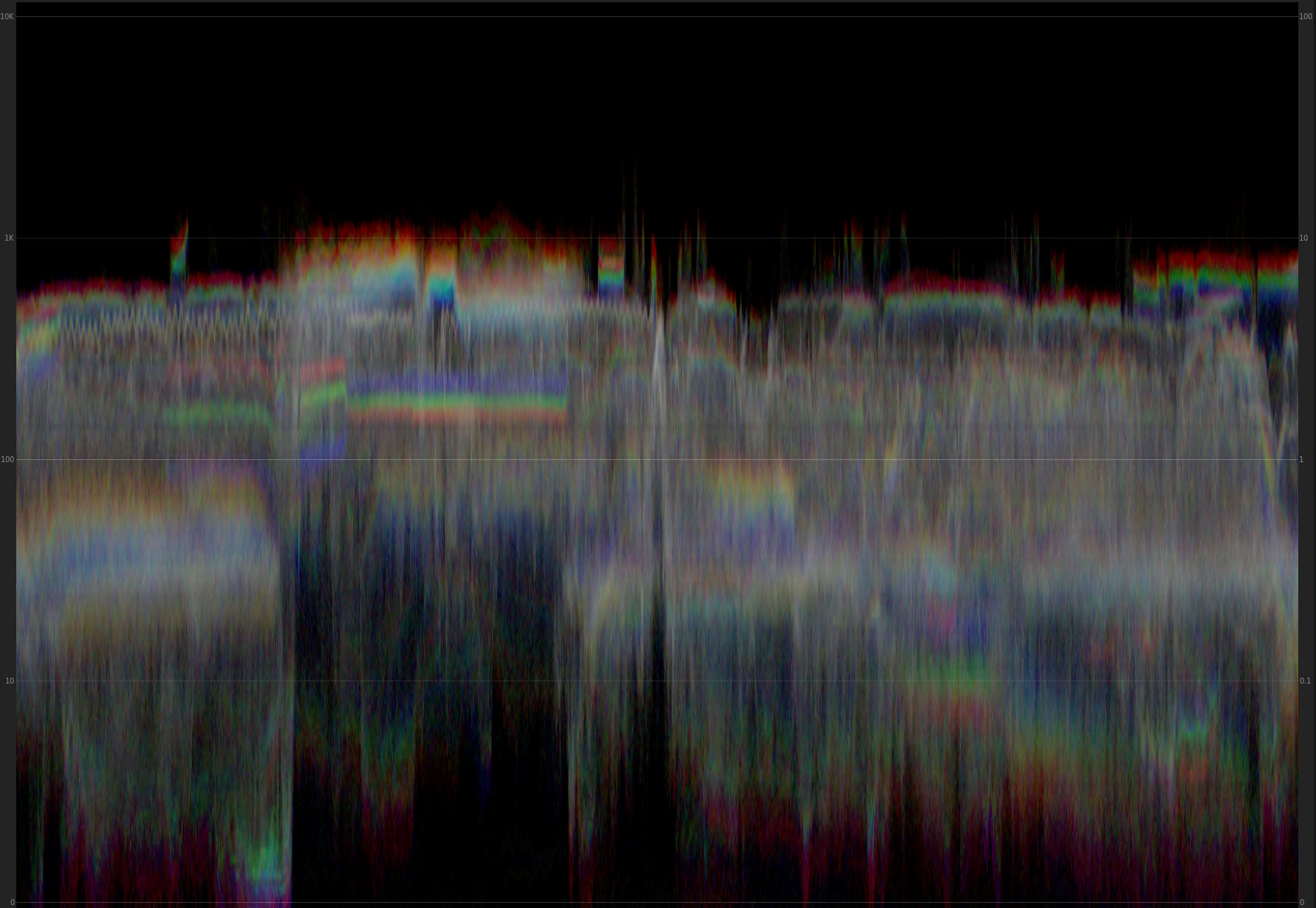

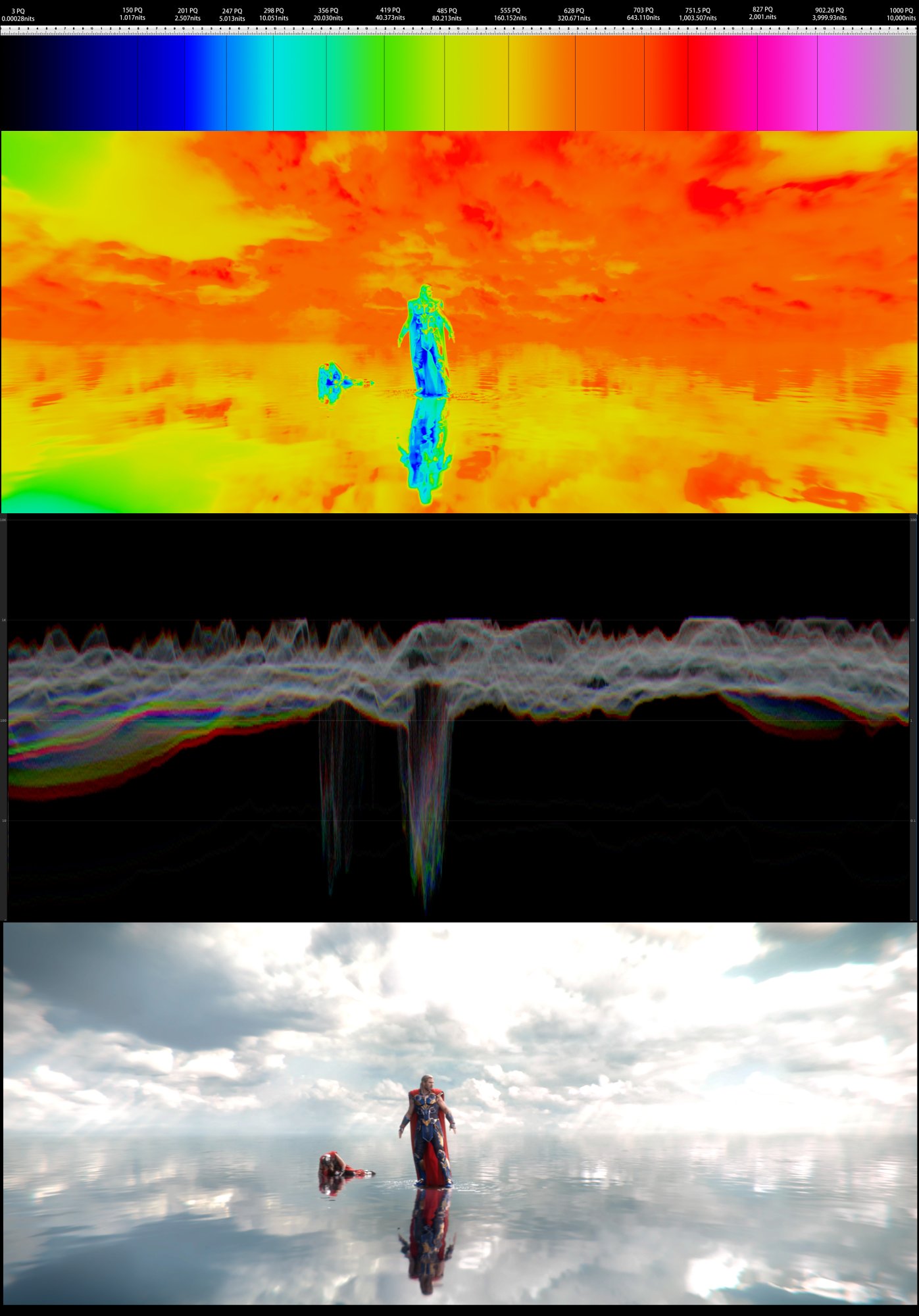

I have been out of the display calibration world ever since HDR came out. Has HDR been standardized in any way? Is there actually a reference HDR image that consumer displays are capable of producing after calibration? I remember arguments that consumer HDR displays are not capable of the tone mapping used by studio/reference HDR displays. Is that still the case? There are also sub-types of HDR and color gamut differences... What is the actual reference HDR standard?
