Zion Halcyon

2[H]4U

- Joined: Dec 28, 2007
- Messages: 2,108

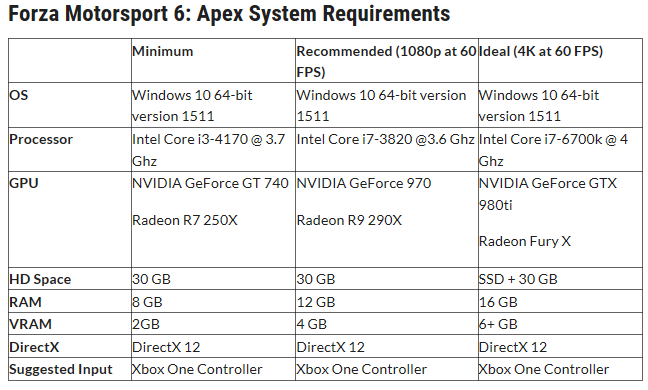

Just browsing and saw these system requirements for the open beta:

Read more: http://wccftech.com/forza-motorsport-6-apex-windows-10-open-beta-5-system-requirements-revealed/#ixzz478eLkOHT

This appears to be the first game NOT dependent on DX11 (it's DX12 only). VERY curious to see how it performs.