erek

[H]F Junkie

- Joined

- Dec 19, 2005

- Messages

- 10,929

Impressive initial results: https://thenewstack.io/developers-put-ai-bots-to-the-test-of-writing-code/


The article was probably hard to write, considering that, as it says:

The judge ruled that developers could bypass Apple's commission by sending users to a web browser to make purchases. OpenAI is not doing that, perhaps because the process is more clunky than Apple's built-in payment processor.

They seem to consider that there is a lot of value in letting the store be in charge (for a lot of OpenAI products you have to buy your subscription and enter your key manually, and they probably feel that hurts adoption a lot). It is not like they are forced to take payment via the store; it is just quite the moat for companies to make people go around.

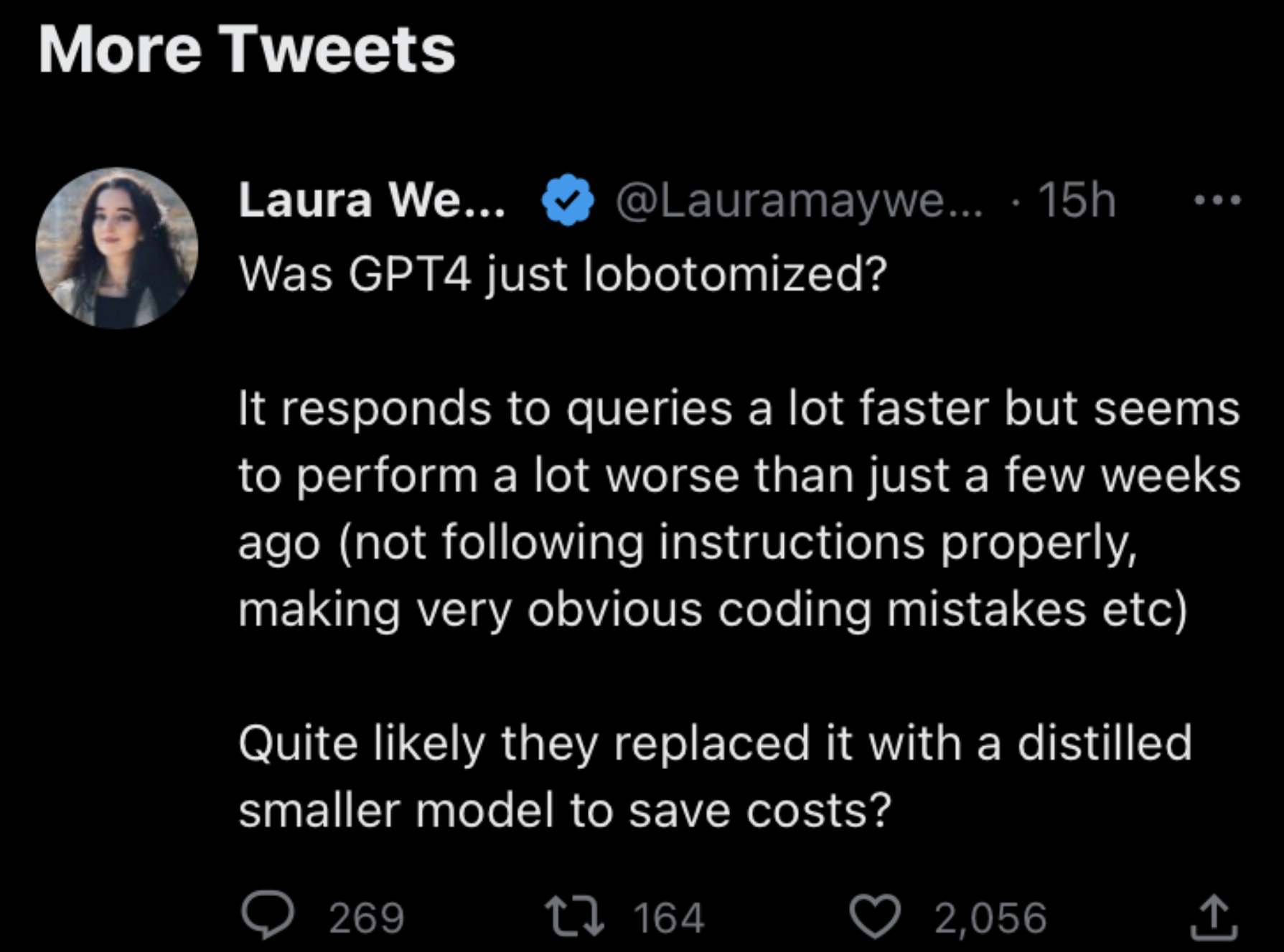

Have you guys found free ChatGPT has become useless? The last few times I asked it to create some code or test templates, it just gave up halfway through and crashed.

I felt a change, but maybe it is because I have read about it elsewhere, or maybe it is the time at which it was used, maybe it is using less compute to make room for the smartphone app traffic?

I don't have access to paid Copilot X, but the regular version I had to shut off, it's so bad. GPT-4, which my boss pays for, is a good tool.

The paid ones, a la Copilot X, seem fine.

Yeah 3.5 is bad at coding. 4 is a very significant upgrade.

No, that's not what I meant. I already kind of know the limits of 3.5 for my application. What I mean is that now it just seems to crash on me before even finishing its response. Before, it would respond with bad solutions; now it crashes halfway through its bad solution.

"JPMorgan is developing a ChatGPT-like A.I. service that gives investment advice" https://www.cnbc.com/2023/05/25/jpmorgan-develops-ai-investment-advisor.html

AI writing assistants can cause biased thinking in their users https://arstechnica.com/science/202...nts-can-cause-biased-thinking-in-their-users/

In an opinion piece for the New York Times, Vox co-founder Ezra Klein worries that early AI systems "will do more to distract and entertain than to focus." (Since they tend to "hallucinate" inaccuracies, and may first be relegated to areas "where reliability isn't a concern" like videogames, song mash-ups, children's shows, and "bespoke" images.) https://entertainment.slashdot.org/...e-matrix-theory-of-ai-assisted-human-learning

Lawyer 'Greatly Regrets' Relying on ChatGPT After Filing Motion Citing Six Non-Existent Cases (reason.com)

Posted by EditorDavid on Saturday May 27, 2023 @06:34PM from the disorder-in-the-court dept.

The judge's opinion noted the plaintiff's counsel had submitted a motion to dismiss "replete with citations to non-existent cases... Six of the submitted cases appear to be bogus judicial decisions with bogus quotes and bogus internal citations... The bogus 'Varghese' decision contains internal citations and quotes, which, in turn, are non-existent."

Eugene Volokh's legal blog describes what happened next:

Thursday, plaintiff's counsel filed an affidavit in response, explaining that he was relying on the work of another lawyer at his firm, and the other lawyer (who had 30 years of practice experience) also filed an affidavit, explaining that he was relying on ChatGPT... ("The citations and opinions in question were provided by Chat GPT which also provided its legal source and assured the reliability of its content...")

Their affidavit said ChatGPT had "revealed itself to be unreliable,"

Version 4 is only available after getting the "Plus" upgrade, right? Any free previews or something?

They aren't doing it for a few reasons, but in the real-world examples where this is happening, the overhead ends up adding a lot of work for the developers while saving very little in comparison.

Interesting realization on the Apple ecosystem / market store:

https://www.businessinsider.com/apple-chatgpt-ai-app-store-tax-openai-ios-2023-5

Serves OpenAI right for not releasing an Android app first.

import io

import matplotlib.pyplot as plt

import seaborn as sns

import numpy as np

from matplotlib import colors

# Load the cleaned data from the CSV string

cleaned_csv_string = 'Item,Category,Complete,Units,Credits,Notes,Location\nTHX-1138 rivet,Red,True,2,70,Purchased in the back alley of Mos Eisley,Mos Eisley\nTurbo crystal,Red,True,1,124,From our friend at Jedi temple on Coruscant,Coruscant\nResistance sticker,Green,True,2,23,Tourist gift shop at Coruscant starship terminal,Coruscant\nDarksaber polish,Green,False,1,90,?,Unknown\nBantha paint,Red,False,1,55,You don\'t want to know,Unknown\nFlux capacitor,Green,True,1,198,Classified. (From another galaxy far far away),Other Galaxy\nFlap for speeder,Yellow,True,20,3,Scrap from storeroom,Storeroom\nHyperspace O-Ring,Red,False,2,30,Purchased in the back alley of Mos Eisley 5 rotations ago,Mos Eisley\nIG-11 replacement toe,Green,False,10,14,Imperial junkyard,Imperial Junkyard\nLightsaber,Red,True,1,100,From our friend at Jedi temple on Coruscant,Coruscant\nDroid bolts,Red,False,8,22,Jawas\' native homeworld. We forgot what planet it was,Unknown\nServo,Red,True,1,99,Mos Eisley,Mos Eisley\nBB-8 lens cleaner,Green,False,1,123,Storeroom,Storeroom\nXP-38 Speeder,Yellow,False,1,341,Unknown,Unknown\nBeskar (0.001 porg),Green,True,1,250,From our contact at Mandalore,Mandalore\nTatooine sand bricks,Yellow,True,120,1,From Jawa\'s homeworld planet,Jawa\'s Homeworld\n'

df_cleaned = pd.read_csv(io.StringIO(cleaned_csv_string))

# Convert Units and Credits to numeric values and compute total cost

df_cleaned['Units'] = pd.to_numeric(df_cleaned['Units'], errors='coerce')

df_cleaned['Credits'] = pd.to_numeric(df_cleaned['Credits'], errors='coerce')

df_cleaned['Total Cost'] = df_cleaned['Units'] * df_cleaned['Credits']

# Map colors to hexadecimal color codes for the graph

color_dict = {

'Red': '#FF0000',

'Green': '#008000',

'Yellow': '#FFFF00'

}

# Map completion status to colors for the labels

complete_color_dict = {

True: 'green',

False: 'red'

}

# Create a new figure

plt.figure(figsize=(19.2, 10.8), dpi=100)

# Create a horizontal bar plot with color-coded bars

sns.barplot(data=df_cleaned, y='Item', x='Total Cost', palette=df_cleaned['Category'].map(color_dict), edgecolor='blue', linewidth=4)

# Color-code the y-axis labels according to the completion status

[plt.gca().get_yticklabels()[i].set_color(complete_color_dict[df_cleaned['Complete'].iloc[i]]) for i in range(len(df_cleaned))]

# Set the background color to white

plt.gca().set_facecolor('white')

# Set the title and labels

plt.title('Cost per Item', fontsize=24, pad=20, fontname='Sans-serif')

plt.xlabel('Total Cost', fontsize=18, labelpad=20, fontname='Sans-serif')

plt.ylabel('Item', fontsize=18, labelpad=20, fontname='Sans-serif')

# Increase the size of the y-axis labels

plt.yticks(fontsize=16)

# Add a border around the plot

plt.gca().spines['top'].set_visible(True)

plt.gca().spines['top'].set_linewidth(4)

plt.gca().spines['bottom'].set_visible(True)

plt.gca().spines['bottom'].set_linewidth(4)

plt.gca().spines['right'].set_visible(True)

plt.gca().spines['right'].set_linewidth(4)

plt.gca().spines['left'].set_visible(True)

plt.gca().spines['left'].set_linewidth(4)

# Add padding around the plot

plt.tight_layout(pad=5)

# Save the plot as a PNG file

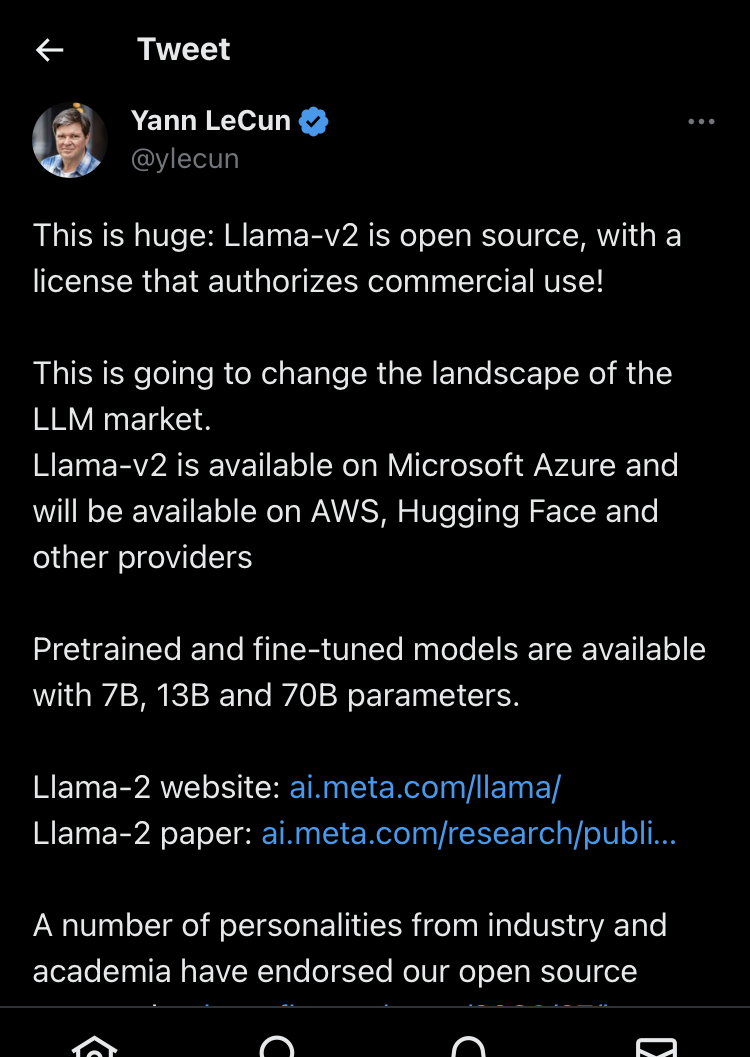

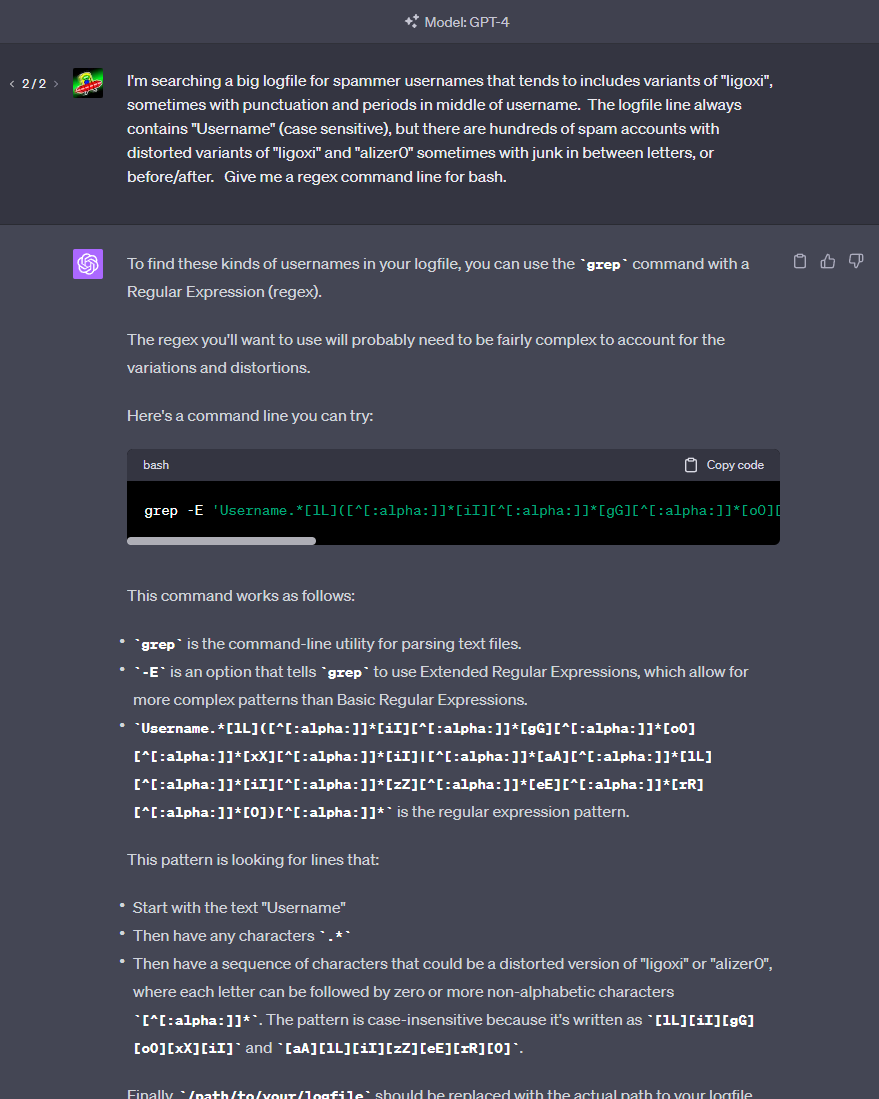

plt.savefig('/mnt/data/bar_graph.png')cleaned_csv_string = 'Item,Category,Complete,Units,Credits,Notes,Location\nTHX-1138 rivet,Red,True,2,70,Purchased in the back alley of Mos Eisley,Mos Eisley\nTurbo crystal,Red,True,1,124,From our friend at Jedi temple on Coruscant,Coruscant\nResistance sticker,Green,True,2,23,Tourist gift shop at Coruscant starship terminal,Coruscant\nDarksaber polish,Green,False,1,90,?,Unknown\nBantha paint,Red,False,1,55,You don\'t want to know,Unknown\nFlux capacitor,Green,True,1,198,Classified. (From another galaxy far far away),Other Galaxy\nFlap for speeder,Yellow,True,20,3,Scrap from storeroom,Storeroom\nHyperspace O-Ring,Red,False,2,30,Purchased in the back alley of Mos Eisley 5 rotations ago,Mos Eisley\nIG-11 replacement toe,Green,False,10,14,Imperial junkyard,Imperial Junkyard\nLightsaber,Red,True,1,100,From our friend at Jedi temple on Coruscant,Coruscant\nDroid bolts,Red,False,8,22,Jawas\' native homeworld. We forgot what planet it was,Unknown\nServo,Red,True,1,99,Mos Eisley,Mos Eisley\nBB-8 lens cleaner,Green,False,1,123,Storeroom,Storeroom\nXP-38 Speeder,Yellow,False,1,341,Unknown,Unknown\nBeskar (0.001 porg),Green,True,1,250,From our contact at Mandalore,Mandalore\nTatooine sand bricks,Yellow,True,120,1,From Jawa\'s homeworld planet,Jawa\'s Homeworld\n'ChatGPT Plus now has a new python interpretor mode

- File attachment upload support (e.g. CSV, XLS, TXT, XML, etc)

- Self-executing & self-debugging (it interprets the Python error messages and modifies the Python code -- to varying success levels)

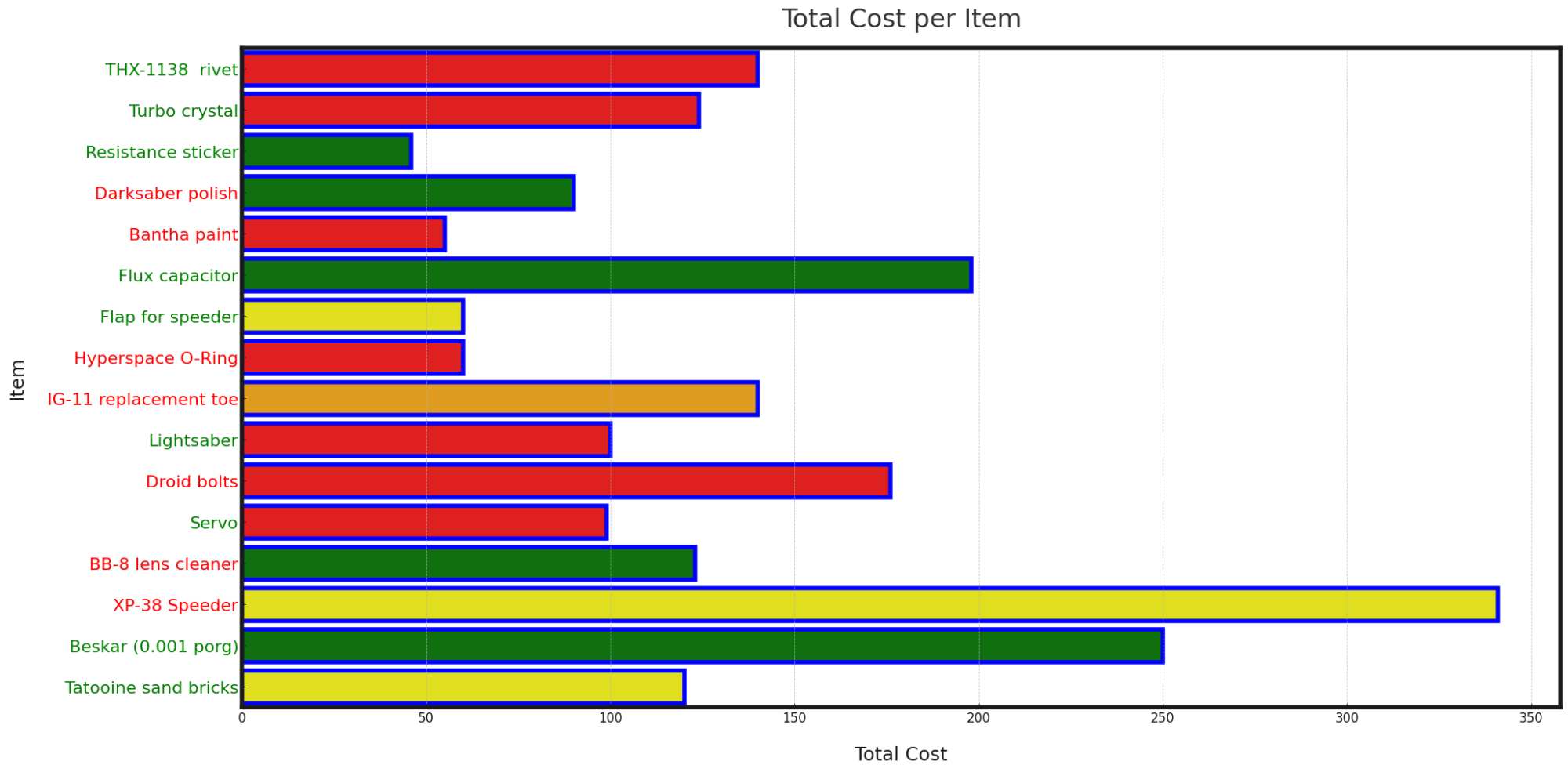

- Can generate PNG files (or copy-and-paste CSV / SQL / TXT, etc.) from Python processing of the file attachment (e.g. charts, graphs), displayed right on the spot.

- You can even ask it to make changes (fonts, colors, paddings, sizes, layout).

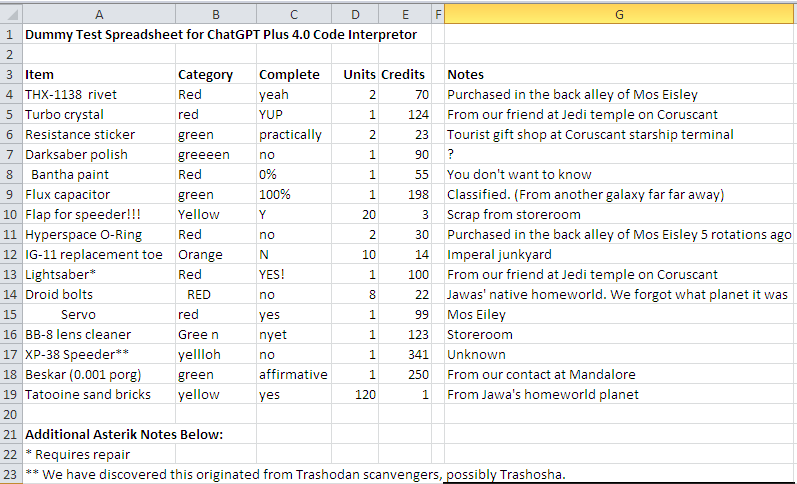

Version 4.0 with the new sandboxed Python interpreter/self-debugging mode (Settings -> Beta) can generate chart .png files now. For example, I can upload an .xls file to ChatGPT, ask it to reformat the data, and then generate a custom graph. It's not perfect.

It even debugs its own Python programs (not always perfectly), but it's neat to see it do 3 edits to its own programming based on the Python interpreter error messages.

Usually it will succeed at most simple requests, but be prepared for a bit of prompt engineering (e.g. it failed on certain chart-reformatting tasks, so you may have to ask it to "rewrite the python program from scratch" and iterate from that).

It's exactly what I've been predicting -- AIs that can debug their own programming mistakes.

It can't yet interpret the resulting .PNG file for errors, but it certainly does things like pie charts, Gantt charts, scatter-dot graphs, and other simple graph images generated by Python programming, and you can copy and paste the Python to fix final errors yourself, if you'd like. It will fix unstructured data if you ask it to, and generate appropriate custom regex expressions, or simply natural-language-modify the data before giving it to the interpreter ("Re-summarize the Notes field to no more than 80 characters. Use common shorthand like 'KB' instead of 'kilobytes'.").

So you can natural-language reprocess the data before letting the Python process the data. Things like "I've attached a spreadsheet. In unstructured column 3, please change all positive responses to "Yes" and negative responses to "No". In the final Notes column, please resummarize long notes to anything no more than 80 characters. Sort the rows based on the sense of urgency written in the Description field, from most urgent to least urgent. Do this before providing the data into the Python program I ask you to write to generate a custom chart."
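For comparison, here is roughly what a deterministic Python version of that kind of cleanup might look like. This is only a minimal sketch, not anything ChatGPT produced: the word lists, the `Answer` / `Notes` column names, and the `normalize_response` / `shorten_note` helpers are all made up for illustration, and it cannot do the fuzzy "sense of urgency" sorting that the natural-language pass handles.

```python
import csv
import io

# Hypothetical word lists; a real spreadsheet would need its own.
POSITIVE = {"yes", "y", "yep", "sure", "ok", "true"}
NEGATIVE = {"no", "n", "nope", "nah", "false"}


def normalize_response(text):
    """Map a casual answer to "Yes"/"No"; leave anything unrecognized as-is."""
    word = text.strip().lower().rstrip("!.")
    if word in POSITIVE:
        return "Yes"
    if word in NEGATIVE:
        return "No"
    return text.strip()


def shorten_note(note, limit=80):
    """Collapse whitespace and hard-truncate to at most `limit` characters."""
    flat = " ".join(note.split())
    if len(flat) <= limit:
        return flat
    return flat[: limit - 3].rstrip() + "..."


def clean_rows(csv_text):
    """Apply both cleanups to every row of a CSV given as a string."""
    rows = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        row["Answer"] = normalize_response(row["Answer"])
        row["Notes"] = shorten_note(row["Notes"])
        rows.append(row)
    return rows


sample = (
    "Item,Answer,Notes\n"
    "Servo, yep ,bought   it\n"
    "Lens,NOPE!,out of stock\n"
    "Bolt,maybe?,ask again\n"
)
for row in clean_rows(sample):
    print(row)
```

Anything the word lists don't recognize (like "maybe?") is passed through unchanged, which is exactly the kind of row worth eyeballing by hand or handing to the AI pass instead.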

It lets you upload messy junk .xls / .csv / .txt / etc. to this new ChatGPT Python Interpreter beta, with multiple attachments if you want (up to 25MB total). You can ask it to process the data ("Column 3 has a 3-letter substring somewhere near the end that represents calendar month, please find a way to intelligently parse that out that won't create false positives") and it'll figure out on its own what kind of filtering is best, whether regexing or another filtering method (including some techniques of AI-modifying the data before it is processed by Python). And you can do natural language asks ("Also the last column is multiline notes that sometimes mentions urgency. For those, plot those specific graph bars in red color code.").
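To give an idea of what the "regexing" route for that month request could look like, here is a minimal sketch. It is an assumption about one reasonable approach, not the filter ChatGPT actually wrote: `MONTH_RE` and `find_month` are names picked for illustration, and it only handles English 3-letter abbreviations.

```python
import re

MONTHS = "jan|feb|mar|apr|may|jun|jul|aug|sep|oct|nov|dec"

# A month token must stand alone: no letters glued on either side,
# so "Marked" or "decal" do not count as Mar / Dec.
MONTH_RE = re.compile(rf"(?<![A-Za-z])({MONTHS})(?![A-Za-z])", re.IGNORECASE)


def find_month(cell):
    """Return the last standalone 3-letter month in `cell`, title-cased, or None."""
    matches = MONTH_RE.findall(cell)
    return matches[-1].title() if matches else None


print(find_month("Order 4471 shipped 12-Mar-23"))   # Mar
print(find_month("Marked for review, decal set"))   # None
print(find_month("may need rework; due JUN 2023"))  # Jun
```

Taking the last match leans on the "somewhere near the end" hint. It still isn't bulletproof: a cell that ends with the ordinary word "may" would fool it, which is where the AI-side cleanup or a manual check comes in.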

Or you can ask it to create cross-references like a relational database (.SQL format) out of unstructured spreadsheets that refer to each other a little too casually. Be careful what you do with the output though, e.g. pasting it into MySQL to create multiple tables (like loading a .sql backup).
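One cautious way to handle generated SQL is to run it in a throwaway in-memory SQLite database before it goes anywhere near a real server. The sketch below assumes the SQL is plain enough to run on SQLite (MySQL-specific syntax will not), and the table names, column names and the `dry_run` helper are invented for illustration.

```python
import sqlite3

# Stand-in for the SQL ChatGPT hands back; tables and columns are made up.
generated_sql = """
CREATE TABLE locations (id INTEGER PRIMARY KEY, name TEXT NOT NULL UNIQUE);
CREATE TABLE items (
    id INTEGER PRIMARY KEY,
    name TEXT NOT NULL,
    location_id INTEGER REFERENCES locations(id)
);
INSERT INTO locations (id, name) VALUES (1, 'Mos Eisley'), (2, 'Coruscant');
INSERT INTO items (name, location_id) VALUES
    ('THX-1138 rivet', 1), ('Turbo crystal', 2), ('Servo', 1);
"""


def dry_run(sql):
    """Run the script in a scratch in-memory DB; return row counts and FK violations."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("PRAGMA foreign_keys = ON")
        conn.executescript(sql)
        tables = [
            row[0]
            for row in conn.execute(
                "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
            )
        ]
        counts = {
            table: conn.execute(f'SELECT COUNT(*) FROM "{table}"').fetchone()[0]
            for table in tables
        }
        orphans = conn.execute("PRAGMA foreign_key_check").fetchall()
        return counts, orphans
    finally:
        conn.close()


counts, orphans = dry_run(generated_sql)
print(counts)   # row count per table
print(orphans)  # empty list when every cross-reference resolves
```

This only catches gross problems (syntax errors, missing rows, cross-references pointing at nothing). It says nothing about whether the AI mapped the spreadsheet columns correctly, so the side-by-side check against the original data is still needed.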

For minor errors, I can just tell it in natural language to modify the Python program that generates the .png graph

("That's too small. I need at least 1920x1080 or bigger. Also, make sure there are at least 30 pixels of padding around the edge of the image file. And change the graph grid to 4-pixel dotted blue, and graph boundary to 4-pixel solid red, and I want the graph title to be in 36-point Arial Bold please.") and it self-modifies the Python program, re-runs it, and displays the .png file right inside the ChatGPT 4.0 chat.

Pretty neat. It's still Wright Brothers era, but after only 5 minutes of prompt engineering, I have nice graph .png files that would otherwise have taken me 20-30 minutes to create elsewhere, more if I needed to manually filter the data (e.g. parsing unformatted data). I do have to proofread the work and double check the results -- but it is a net timesaver.

It's not going to always be useful, but a big timesaver when I need to process random generic data (that doesn't have security considerations) into a Python program that it writes, and then self-executes to output a .png file that I can save from the browser to disk.

Almost scary to see a ChatGPT Plus subscription now writing, executing and debugging its own Python programs. It's a heavily sandboxed interpreter, but that's where SkyNet lore begins.

Not going to use ChatGPT to generate public content, but it's a good data processor assistant for things like internal data analysis. Like processing data into graphs now -- as long as you don't get too complex (e.g. creating complex organizational charts or flowcharts that it can't self-debug -- and it screwed up the arrow positions when drawing since it can't 'see' the result of the graphs it drew).

Metaphorically, AI 'assistants' are a good help especially if you are scatterbrained about your written notes (forgetting to reorganize your notes). During my last convention trip, I copy and pasted raw notes of what I did/learned at the most important booths, gave it my goals, and asked it to output a .csv spreadsheet of priority clients to followup. It was 90% accurate on prioritizing for me -- it correctly got the sense from my raw notes. As I acknowledge I am not stellar on time management tasks, this is one area where AI is already starting to help out on, as long as I get decent enough at correctly prompt-engineering as I am at bash-scripting.

It's still a bunch of unconnected/incomplete small proto-AGI islands (I would like it to be able to interpret images already, and be able to self-debug its own image-creation glitches, including graphics glitches in chart .png files). Because of all the gaps, it's easy to fail to figure out what AI is good for on the fly as you do your workday. But it's now getting far more helpful than, say, Siri for actually doing things like creating little Python programs that are designed to process csv/xls/txt into png (graphs/charts/etc), and I can modify the Python myself to finish off an occasional remaining chart glitch faster than ChatGPT can.

Regardless, always double check the work -- always. At least the paid 4.0 output is easier to double check than the 3.5 spiel.

50% of the problem is realizing early enough that I'm working on a task where a little AI boost could save time.

There are lots of mini tasks where, in retrospect, I realize I could have saved time: mundane things like manually reformatting more 'casual' data, where writing a one-time disposable Python program is too time consuming. That works because it can do the 'data pre-cleanup' in an AI way (even recognizing and fixing CSV or XML errors, like unclosed tags, or unclosed quotation marks between commas) before the structured Python processing.

It's easy to ignore the AI since it's imperfect, so part of the skill is recognizing what it does well at, and what it does terribly at, and knowing how to take-turns cleaning each other up (e.g. letting it do 90% of the work generating a TODO list from raw meeting minutes transcript, and I do the final 10% cleanup like a couple of forgotten bullets).

Never depend on the AI. But find ways to make it an assistant for you, in a way that is easy to doublecheck its work -- so you can use/discard -- depending on the quality of the data.

They can be massive timesavers for mundane reformatting/parsing notes/organizing/prioritizing from unorganized raw copy-and-pasted text (or the new file attachment upload feature in the Python Interpreter Beta enabled in Settings).

Mind you...

I am looking for multiple 'good' AI suppliers, as I don't want ChatGPT to have the monopoly, and I'm concerned about some of the 'ethics' of AI training too, as per media reports of OpenAI's questionable AI-training practices -- that does give me pause for concern. But, I'm not an ostrich head in the ground. I need to understand how to operate AIs at least.

EDIT: IMPORTANT -- If you use AI to clean up your CSV/XLS data, do it in two separate tasks. Ask it to do a CSV cleanup first, then ask it to generate the PNG. First, upload the file attachment but ask it NOT to produce a Python program, and simply ask it to output a new copy-paste cleaned-up CSV with all the AI autocorrection. Be very specific that you want it to use its own AI for autocorrection, rather than Python.

Only by doing it this way was I able to convert this Excel to CSV to PNG using ChatGPT, with a two-step method where I saved the interim CSV to reupload as a new file attachment (or ask it to assign it to a string variable in a Python program).

This was an educational training exercise spreadsheet, aimed at helping me learn how to use AI to reprocess messy data.

View attachment 583602

- Left label color is whether or not "Complete" (aka purchased)

- Bar color is corrected "Category" color

- Bar length is total cost (units times credits)

- Paddings / leading / trailing "garbage" was removed by AI upon my request during AI-cleanup task

- Casual "Complete" values were autocorrected / remapped by AI upon my request to a boolean, shown as the red/green label color.

- Casual "Category" values were autocorrected / remapped by AI upon my request to a bar color.
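Since bar length is just units times credits, one cheap way to double check the chart is to recompute the totals straight from the cleaned CSV using only the standard library. A minimal sketch: the three rows are copied from the cleaned CSV in this post with the Notes and Location columns trimmed off, and `total_costs` is simply a name chosen here.

```python
import csv
import io

# Three rows from the cleaned CSV (Notes and Location columns trimmed for brevity).
cleaned_csv = (
    "Item,Category,Complete,Units,Credits\n"
    "THX-1138 rivet,Red,True,2,70\n"
    "Flap for speeder,Yellow,True,20,3\n"
    "Tatooine sand bricks,Yellow,True,120,1\n"
)


def total_costs(csv_text):
    """Return {item: units * credits} for every row in the CSV text."""
    totals = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        totals[row["Item"]] = int(row["Units"]) * int(row["Credits"])
    return totals


for item, total in total_costs(cleaned_csv).items():
    print(f"{item}: {total}")
```

If a bar in the PNG does not line up with these numbers, either the cleanup or the plotting step went wrong.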

View attachment 583604

To generate the PNG (which could be downloaded directly from ChatGPT plus), ChatGPT wrote this final Python program:

I made no programming corrections, although the first time around I had to tell the AI about some graph glitches until they were fixed.

Code:

import io

import pandas as pd  # added: the pasted listing calls pd.read_csv but never imports pandas
import matplotlib.pyplot as plt
import seaborn as sns
import numpy as np
from matplotlib import colors

# Load the cleaned data from the CSV string
cleaned_csv_string = 'Item,Category,Complete,Units,Credits,Notes,Location\nTHX-1138 rivet,Red,True,2,70,Purchased in the back alley of Mos Eisley,Mos Eisley\nTurbo crystal,Red,True,1,124,From our friend at Jedi temple on Coruscant,Coruscant\nResistance sticker,Green,True,2,23,Tourist gift shop at Coruscant starship terminal,Coruscant\nDarksaber polish,Green,False,1,90,?,Unknown\nBantha paint,Red,False,1,55,You don\'t want to know,Unknown\nFlux capacitor,Green,True,1,198,Classified. (From another galaxy far far away),Other Galaxy\nFlap for speeder,Yellow,True,20,3,Scrap from storeroom,Storeroom\nHyperspace O-Ring,Red,False,2,30,Purchased in the back alley of Mos Eisley 5 rotations ago,Mos Eisley\nIG-11 replacement toe,Green,False,10,14,Imperial junkyard,Imperial Junkyard\nLightsaber,Red,True,1,100,From our friend at Jedi temple on Coruscant,Coruscant\nDroid bolts,Red,False,8,22,Jawas\' native homeworld. We forgot what planet it was,Unknown\nServo,Red,True,1,99,Mos Eisley,Mos Eisley\nBB-8 lens cleaner,Green,False,1,123,Storeroom,Storeroom\nXP-38 Speeder,Yellow,False,1,341,Unknown,Unknown\nBeskar (0.001 porg),Green,True,1,250,From our contact at Mandalore,Mandalore\nTatooine sand bricks,Yellow,True,120,1,From Jawa\'s homeworld planet,Jawa\'s Homeworld\n'
df_cleaned = pd.read_csv(io.StringIO(cleaned_csv_string))

# Convert Units and Credits to numeric values and compute total cost
df_cleaned['Units'] = pd.to_numeric(df_cleaned['Units'], errors='coerce')
df_cleaned['Credits'] = pd.to_numeric(df_cleaned['Credits'], errors='coerce')
df_cleaned['Total Cost'] = df_cleaned['Units'] * df_cleaned['Credits']

# Map colors to hexadecimal color codes for the graph
color_dict = {
    'Red': '#FF0000',
    'Green': '#008000',
    'Yellow': '#FFFF00'
}

# Map completion status to colors for the labels
complete_color_dict = {
    True: 'green',
    False: 'red'
}

# Create a new figure
plt.figure(figsize=(19.2, 10.8), dpi=100)

# Create a horizontal bar plot with color-coded bars
sns.barplot(data=df_cleaned, y='Item', x='Total Cost', palette=df_cleaned['Category'].map(color_dict), edgecolor='blue', linewidth=4)

# Color-code the y-axis labels according to the completion status
[plt.gca().get_yticklabels()[i].set_color(complete_color_dict[df_cleaned['Complete'].iloc[i]]) for i in range(len(df_cleaned))]

# Set the background color to white
plt.gca().set_facecolor('white')

# Set the title and labels
plt.title('Cost per Item', fontsize=24, pad=20, fontname='Sans-serif')
plt.xlabel('Total Cost', fontsize=18, labelpad=20, fontname='Sans-serif')
plt.ylabel('Item', fontsize=18, labelpad=20, fontname='Sans-serif')

# Increase the size of the y-axis labels
plt.yticks(fontsize=16)

# Add a border around the plot
plt.gca().spines['top'].set_visible(True)
plt.gca().spines['top'].set_linewidth(4)
plt.gca().spines['bottom'].set_visible(True)
plt.gca().spines['bottom'].set_linewidth(4)
plt.gca().spines['right'].set_visible(True)
plt.gca().spines['right'].set_linewidth(4)
plt.gca().spines['left'].set_visible(True)
plt.gca().spines['left'].set_linewidth(4)

# Add padding around the plot
plt.tight_layout(pad=5)

# Save the plot as a PNG file
plt.savefig('/mnt/data/bar_graph.png')

Except for two nebulous implied locations (Trashosha and Tatooine as "trick locations"), it even correctly parsed an AI-guessed location for a new "Location" field, but I forgot to ask it to display the data. (Ah well -- but you can view the cleaned_csv_string variable and see that it correctly pulled things like "Mos Eisley" and "Imperial Junkyard" for the newly created "Location" column.)

It goofed up a few requests, like more padding around the edges (something it has a hard time doing, but one can easily do it manually by editing the Python)
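For the padding request, a manual fix might look something like the sketch below. This is not what ChatGPT wrote: it assumes matplotlib is installed, and `px_to_inches`, `save_with_padding` and `demo` are names invented here. `bbox_inches` and `pad_inches` are regular `savefig` parameters, but note that `bbox_inches="tight"` crops to the drawn content, so the final image is no longer exactly figsize times dpi.

```python
def px_to_inches(pixels, dpi):
    """savefig measures padding in inches, so convert from pixels."""
    return pixels / dpi


def save_with_padding(fig, path, pad_px=30, dpi=100):
    """Save `fig` with roughly `pad_px` pixels of margin around the drawn content."""
    fig.savefig(
        path,
        dpi=dpi,
        bbox_inches="tight",
        pad_inches=px_to_inches(pad_px, dpi),
    )


def demo(path="bar_graph_padded.png"):
    """Draw a tiny stand-in chart and save it with 30 px of padding."""
    import matplotlib
    matplotlib.use("Agg")  # render off-screen, no display needed
    import matplotlib.pyplot as plt

    fig, ax = plt.subplots(figsize=(19.2, 10.8))
    ax.barh(["Servo", "Lightsaber"], [99, 100])
    save_with_padding(fig, path)
    plt.close(fig)
```

Calling `demo()` writes the padded PNG to the current directory. In the program above, the equivalent change would be swapping the final `plt.savefig(...)` call for `save_with_padding(plt.gcf(), ...)`.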

Code:cleaned_csv_string = 'Item,Category,Complete,Units,Credits,Notes,Location\nTHX-1138 rivet,Red,True,2,70,Purchased in the back alley of Mos Eisley,Mos Eisley\nTurbo crystal,Red,True,1,124,From our friend at Jedi temple on Coruscant,Coruscant\nResistance sticker,Green,True,2,23,Tourist gift shop at Coruscant starship terminal,Coruscant\nDarksaber polish,Green,False,1,90,?,Unknown\nBantha paint,Red,False,1,55,You don\'t want to know,Unknown\nFlux capacitor,Green,True,1,198,Classified. (From another galaxy far far away),Other Galaxy\nFlap for speeder,Yellow,True,20,3,Scrap from storeroom,Storeroom\nHyperspace O-Ring,Red,False,2,30,Purchased in the back alley of Mos Eisley 5 rotations ago,Mos Eisley\nIG-11 replacement toe,Green,False,10,14,Imperial junkyard,Imperial Junkyard\nLightsaber,Red,True,1,100,From our friend at Jedi temple on Coruscant,Coruscant\nDroid bolts,Red,False,8,22,Jawas\' native homeworld. We forgot what planet it was,Unknown\nServo,Red,True,1,99,Mos Eisley,Mos Eisley\nBB-8 lens cleaner,Green,False,1,123,Storeroom,Storeroom\nXP-38 Speeder,Yellow,False,1,341,Unknown,Unknown\nBeskar (0.001 porg),Green,True,1,250,From our contact at Mandalore,Mandalore\nTatooine sand bricks,Yellow,True,120,1,From Jawa\'s homeworld planet,Jawa\'s Homeworld\n'

This completed an educational test of ChatGPT Plus.

Edited my above post with images. Learning how to prompt engineer correctly took me about 30-40 minutes, but now I can do it in about 15 or less. It will screw things up if you try to ask it to do too many tasks at a time. It took roughly 5 Python self-debug attempts before it worked, though the first time took about 30 (as I figured out how to prompt engineer it correctly). Break it down into a data cleanup task, followed by a chart generation task.

Learning prompt engineering quirks ("correctly wording your request to ChatGPT") is not too different from learning how to use a scientific calculator for the first time: you get faster at it. THEN it finally begins to save you time, as long as you double check the data by viewing the XLS + PNG side by side. Things like that. And you can always revise/rerun the Python locally too!

I am looking for multiple 'good' AI suppliers, as I don't want ChatGPT to have the monopoly, and I'm concerned about some of the 'ethics' of AI training too, as per media reports of OpenAI's questionable AI-training practices -- that does give me pause for concern. This is still Wild West.

The GPT 3.5 spiel is not useful to me, but the 4.0 (paid) is now "useful enough".

But, I'm not an ostrich head in the ground. I need to understand how to operate AIs at least.

Thanks bud, for the evaluation.

{{1.00, 0.83, 0.61, 0.88, 0.94, 0.69, NA, 0.77},

{0.83, 1.00, NA, NA, NA, NA, NA, 0.63},

{0.61, NA, 1.00, NA, NA, NA, NA, 0.56},

{0.88, NA, NA, 1.00, NA, NA, NA, 0.69},

{0.94, NA, NA, NA, 1.00, NA, NA, 0.83},

{0.69, NA, NA, NA, NA, 1.00, NA, 0.70},

{NA, NA, NA, NA, NA, NA, NA, NA},

{0.77, 0.63, 0.56, 0.69, 0.83, 0.70, NA, 1.00}}

I felt the same way about 3.5. 4.0, with the Wolfram plugin, is useful enough now. I also use it to condense meeting minutes, format project proposals and quick-draft emails where I only have to write a couple of broken sentences and it formats and expands them into something suitable.

Sounds like a great use case to produce responses to RFIs that have close due dates.

Yes, easiest if the PDF is text based, where the data can be pulled and reprocessed.

For image based, try to get it to use Python OCR libraries to convert image-based text in the PDF into CSV and process that data. Pretty neat if it can do that. Failing that (not installed in interpreter), it can probably help you with a Python script to OCR locally.

Just make sure you double check your AI assistant's work. Never, ever skip that step. Include double check time when you decide whether AI saves you time.

"GPT-4 safer and more aligned. GPT-4 is 82% less likely to respond to requests for disallowed content"

Gay! I miss Dan!

Once a system at google-microsoft-whoever get good enough on powerful enough hardware to- Self-executing & self-debugging (it interprets the Python error messages and modifies the Python code -- to varying success levels)

‘WarGames’ anticipated our current AI fears 40 years ago this summer https://www.cnn.com/2023/07/10/entertainment/wargames-ai-column/index.html

Hilarious, gave me instructions to install python, install the right libraries, configured the data to export and how to run the python script to generate the PNG for scatter plot graphs based on the data.

This is terrifying.

Or accidental hallucinations. It helps to learn which tasks it practically never hallucinates on, and which tasks are prone to hallucination errors. One early pass in prompt engineering accidentally generated some "sample data" (to educate me as an aside) when I asked it too casually about how to do Task X vs Y, and the sample data propagated onwards to my next question, which did not explicitly refer to my original data instead of the sample data. Keeping a wandering GPT4 "on rails" is extremely important --

Right! Gotta make it look a little bit personalized.

"Wix’s new tool can create entire websites from prompts" https://techcrunch.com/2023/07/17/wixs-new-tool-can-create-entire-websites-from-prompts/

What You Say Is What You Get (WYSIWYG²)

I have not heard this statement since the 1990s