> Supposedly QD-OLED is supposed to mitigate this when compared to WOLED (white boost pixel) according to this blog article by Samsung itself. Kinda interesting read. How much of it is marketing hype? Probably some.
>
> https://innovate.samsungdisplay.com...with-qd-oled-see-new-details-in-old-classics/

This may actually be an area where QD-OLED is better, since black crush in the darkest shades has long been a problem on WOLED. I've solved it by raising black stabilizer in game mode on my LG C2, and dark HDR movies look much better now.

Alienware AW3225QF 32" 4K 240 Hz OLED

- Thread starter Vega

- Start date

Enhanced Interrogator

[H]ard|Gawd

- Joined

- Mar 23, 2013

- Messages

- 1,429

> RGB(1,1,1) isn't far away from RGB(0,0,0), but it was still distinct from the background. On my FO48U it was too bright, but that was also to be expected, since I mangled the settings to try and get the gamma to look good on my consoles.

Are you using one Picture Mode globally or something? Because the Gigabyte has like 8 different picture modes to choose from and you can edit gamma and brightness in all of them.
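For anyone wondering why RGB(1,1,1) is so sensitive to the gamma setting: the first step above black lands at very different luminance depending on which curve the display tracks. A rough sketch, assuming a 100-nit SDR white level and an ideal display with a true zero black (both are assumptions for illustration, and the function names are made up here):

```python
def srgb_decode(v: float) -> float:
    """Piecewise sRGB curve: encoded value in 0..1 to linear light in 0..1."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


def power_decode(v: float, gamma: float = 2.2) -> float:
    """Pure power-law curve, the usual 'gamma 2.2' target."""
    return v ** gamma


def near_black_nits(code: int, white_nits: float = 100.0) -> dict:
    """Luminance of the 8-bit gray RGB(code, code, code) under both curves.

    white_nits is an assumed SDR white level, not a measurement.
    """
    v = code / 255.0
    return {
        "srgb": srgb_decode(v) * white_nits,
        "gamma22": power_decode(v) * white_nits,
    }


if __name__ == "__main__":
    for code in (1, 2, 5, 10):
        nits = near_black_nits(code)
        print(f"RGB({code},{code},{code}): "
              f"sRGB {nits['srgb']:.5f} nits, gamma 2.2 {nits['gamma22']:.5f} nits")
```

Under these assumptions the first gray step comes out roughly 60 times darker on a pure 2.2 power curve than on the piecewise sRGB curve, which is one reason the same test pattern can look fine in one picture mode and crushed in another.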

Self Emissive

n00b

- Joined

- May 15, 2022

- Messages

- 11

My gen1 aw34 qd-oled shows that lagom black levels test fine, all squares clearly visible.

It is a good pattern for seeing how dirty/film-grainy/quantum-foamy the gen1 is when showing these low-luminance colours though, has that been improved on the gen3's?

Also dumb question about how Dolby Vision works. Does that have its own tonemap/processing for plain old hdr10 content as well, distinct from the hdr10 true black and peak modes, or does it only kick in when a dolby enabled app flips to exclusive/fullscreen mode or something?

> It is a good pattern for seeing how dirty/film-grainy/quantum-foamy the gen1 is when showing these low-luminance colours though, has that been improved on the gen3's?

According to reviewers it's the same coating, so still slightly dirty and veiled, somewhat like semi-glossy.

Self Emissive

n00b

- Joined

- May 15, 2022

- Messages

- 11

That's a shame. Does that include it being responsible for the impression that it's squirming/crawling?

cvinh

2[H]4U

- Joined

- Sep 4, 2009

- Messages

- 2,103

So I've had this monitor for a few days now. I really wanted to like it, but after getting used to a 42" C2 I just can't make the switch. Its main use is for gaming and the 42" is just way more immersive. The 32" AW is just there in front of me, but with the 42" I feel like I'm in the game. Most AAA games aren't hitting 240hz anyway, so it didn't matter much. The C2 just feels better. Anyway, I'll be returning this and waiting for a 240hz 40-42" monitor to replace the C2.

cvinh

2[H]4U

- Joined

- Sep 4, 2009

- Messages

- 2,103

> so the colors don't wow you compared to the C2 eh? nor the pixel density? I have a 48 C1 and I find it a little too large, was hoping the 32 would be my next.

The PPI made it a little sharper, but I could see more detail on the C2 just due to the size. The colors seem more vibrant on the AW but nothing that made me go crazy vs the C2. I loaded up all my favorite games and everything just felt tiny which meant less noticeable detail. I had the AW like 20 inches from my face too but didn't really make a difference. I guess I'm just used to the larger screen. Honestly 38-40" would be perfect.

MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,528

> The PPI made it a little sharper, but I could see more detail on the C2 just due to the size. The colors seem more vibrant on the AW but nothing that made me go crazy vs the C2. I loaded up all my favorite games and everything just felt tiny which meant less noticeable detail. I had the AW like 20 inches from my face too but didn't really make a difference. I guess I'm just used to the larger screen. Honestly 38-40" would be perfect.

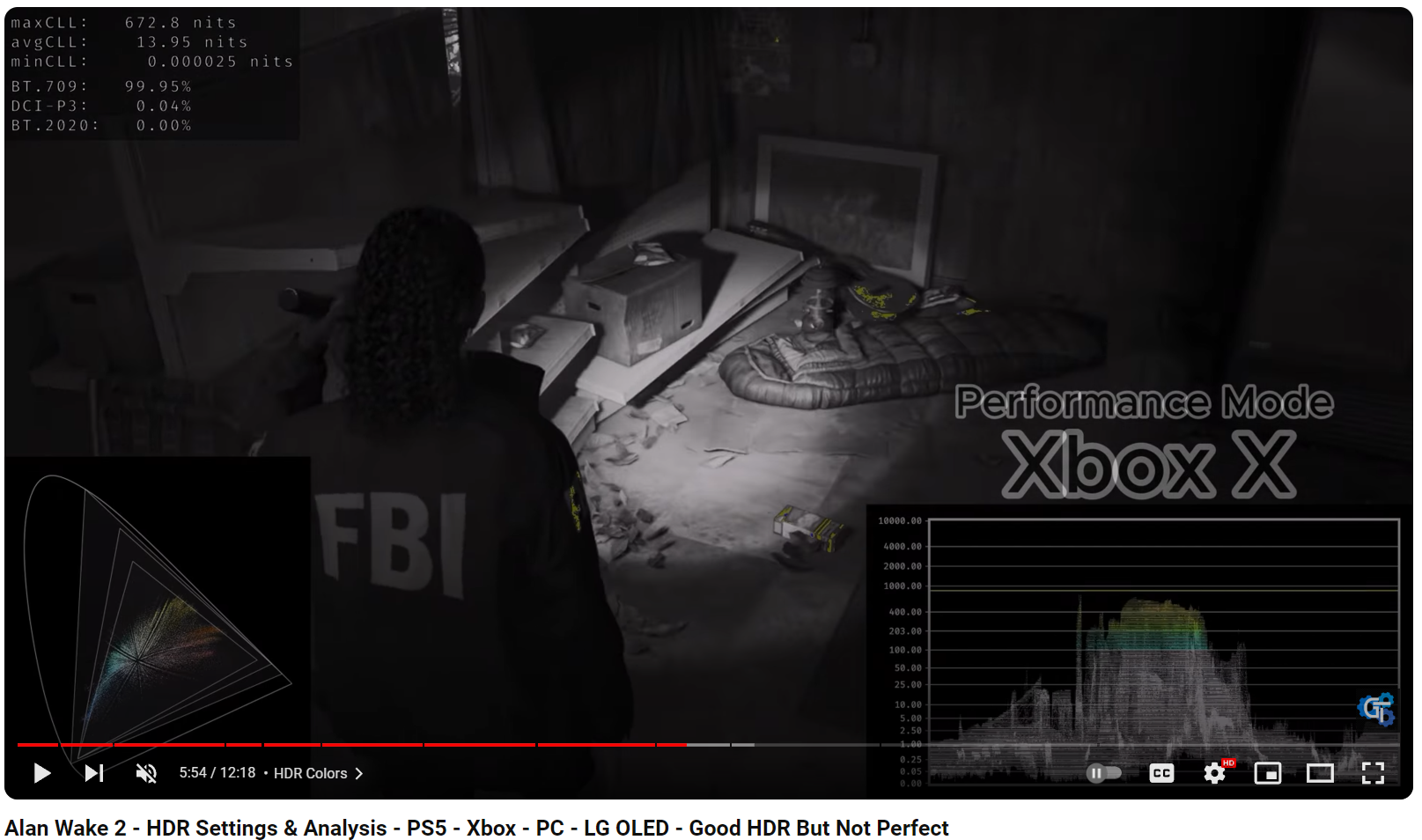

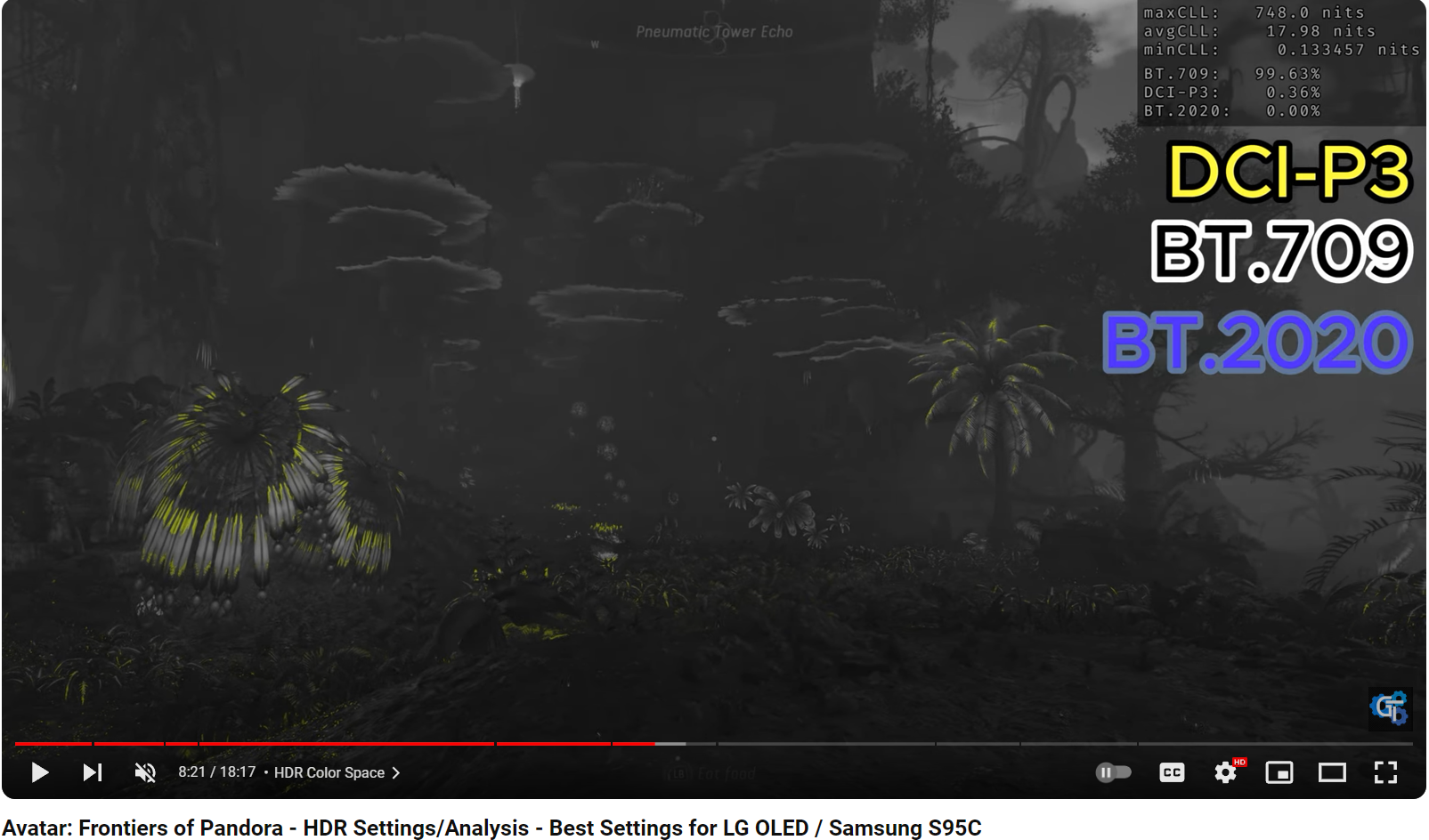

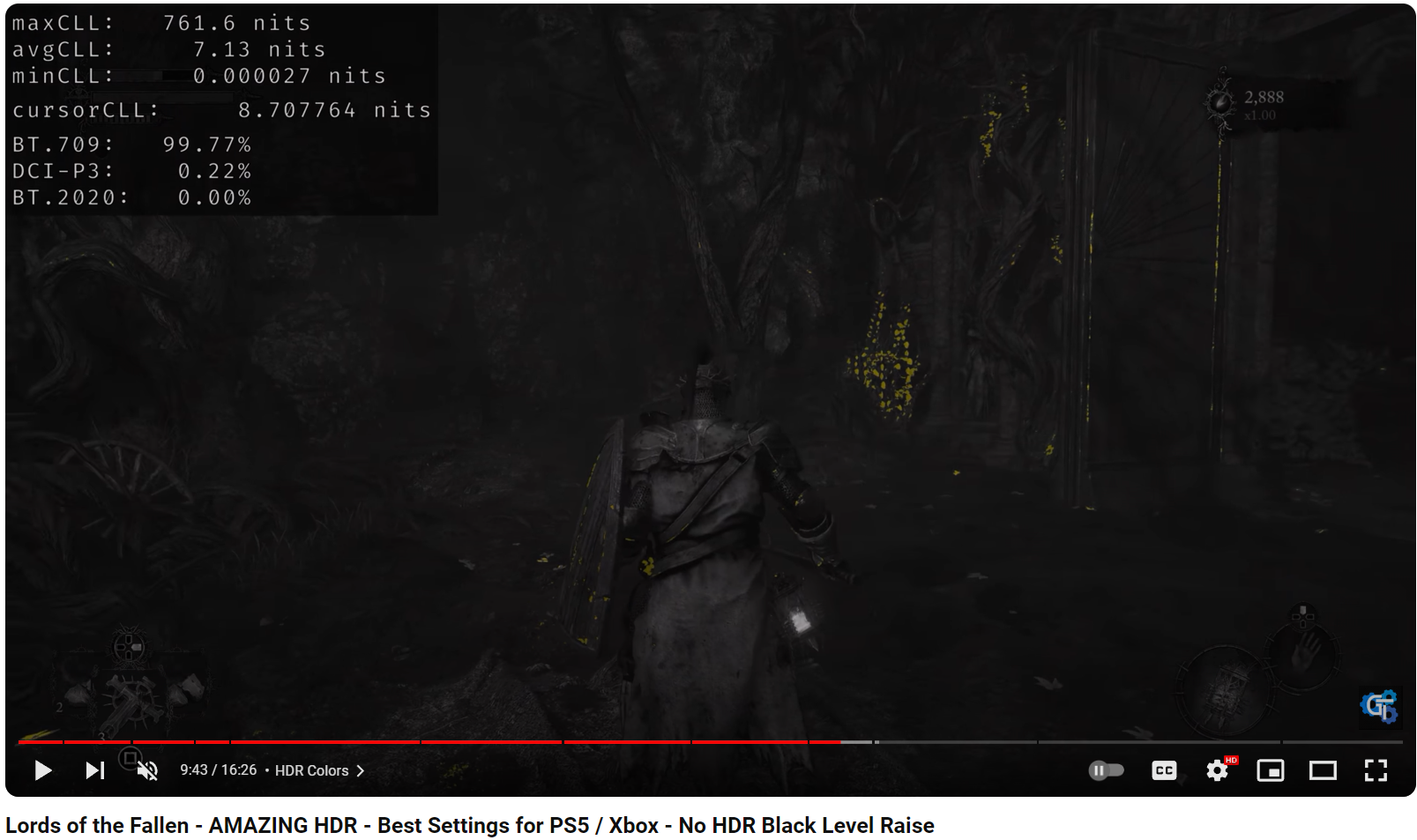

I mentioned this in another post but it seems like most games barely use any colors beyond BT.709 when running in HDR, even the latest ones. So really the wider color gamut of QD OLED isn't going to do much if it isn't even being used in the first place. The biggest advantage that QD OLED has over WOLED is the absence of a white subpixel which means colors will not get diluted at higher brightness levels, but again the games themselves are not pushing for super bright colors in the first place. This is probably why some people who have compared WOLED to QD OLED say they can't notice a big difference when it comes to games. It's because the games are not letting the monitors fully stretch their legs.
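As a rough illustration of what a "does this frame go beyond BT.709" check boils down to: convert each linear-light BT.2020 pixel to BT.709 primaries and see whether any channel goes negative. This is only a sketch, not the actual tool used for the screenshots above. The matrix is the rounded BT.2020 to BT.709 conversion from ITU-R BT.2087; the function names and the tolerance are assumptions for illustration.

```python
# Linear-light BT.2020 -> BT.709 conversion matrix (ITU-R BT.2087, rounded).
BT2020_TO_BT709 = (
    (1.6605, -0.5876, -0.0728),
    (-0.1246, 1.1329, -0.0083),
    (-0.0182, -0.1006, 1.1187),
)


def bt2020_to_bt709(rgb):
    """Convert one linear BT.2020 RGB triple to linear BT.709 RGB."""
    return tuple(sum(m * c for m, c in zip(row, rgb)) for row in BT2020_TO_BT709)


def fits_in_bt709(rgb, tol=1e-3):
    """True if the color needs no negative BT.709 component.

    A negative channel means the chromaticity lies outside the BT.709
    triangle. Values above 1.0 are ignored here because they describe
    brightness, not gamut. tol absorbs the rounding of the matrix.
    """
    return min(bt2020_to_bt709(rgb)) >= -tol


def share_within_bt709(pixels):
    """Fraction of pixels (linear BT.2020 triples) that stay inside BT.709."""
    pixels = list(pixels)
    if not pixels:
        return 0.0
    return sum(fits_in_bt709(p) for p in pixels) / len(pixels)
```

For example, `fits_in_bt709((1.0, 0.0, 0.0))` is `False` for pure BT.2020 red, while white and anything that started life as a BT.709 color pass the check.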

> I mentioned this in another post but it seems like most games barely use any colors beyond BT.709 when running in HDR, even the latest ones. So really the wider color gamut of QD OLED isn't going to do much if it isn't even being used in the first place. The biggest advantage that QD OLED has over WOLED is the absence of a white subpixel which means colors will not get diluted at higher brightness levels, but again the games themselves are not pushing for super bright colors in the first place. This is probably why some people who have compared WOLED to QD OLED say they can't notice a big difference when it comes to games. It's because the games are not letting the monitors fully stretch their legs.

I tried comparing the same HDR videos on my 16" M2 MacBook Pro mini-LED vs my LG CX 48" WOLED and, apart from the higher brightness capabilities, I felt that with the settings I had on the OLED, it looked less saturated. Just adjusting the Color setting in HDR mode higher helped make it more similar to the Mac, which I assume would be fairly well calibrated out of the box.

Obviously the brightness difference is there, but just adjusting Color made it look more similar, even if in reality it might be oversaturating some colors.

Baasha

Limp Gawd

- Joined

- Feb 23, 2014

- Messages

- 249

Why are there monitors in 2024 being released with DP 1.4? WTAF? Shouldn't DP 2.1 be standard now? Are there any 4K 240Hz OLED monitors coming out with DP 2.1?

> Why are there monitors in 2024 being released with DP 1.4? WTAF? Shouldn't DP 2.1 be standard now? Are there any 4K 240Hz OLED monitors coming out with DP 2.1?

https://tftcentral.co.uk/articles/when-is-displayport-2-1-going-to-be-used-on-monitors is worth a read for some of the reasons.
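For a sense of scale, here is some back-of-the-envelope arithmetic on why 4K 240 Hz does not fit DP 1.4 uncompressed. It only counts active pixels (blanking is ignored, so real requirements are a bit higher), and the link figures are the commonly quoted usable data rates, hard-coded here as assumptions rather than read from any spec API.

```python
# Commonly quoted usable data rates in Gbit/s (after line-coding overhead).
LINK_RATES_GBPS = {
    "DP 1.4 HBR3": 25.92,
    "DP 2.1 UHBR13.5": 52.22,
    "DP 2.1 UHBR20": 77.37,
}


def uncompressed_gbps(width: int, height: int, refresh_hz: int, bits_per_pixel: int) -> float:
    """Active-pixel data rate only; blanking intervals are ignored."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


def needs_dsc(required_gbps: float, link_gbps: float) -> bool:
    """True if the stream cannot fit the link without compression."""
    return required_gbps > link_gbps


if __name__ == "__main__":
    # 3840x2160 at 240 Hz with 10 bits per channel RGB (30 bits per pixel).
    required = uncompressed_gbps(3840, 2160, 240, 30)
    print(f"Required: {required:.2f} Gbit/s")
    for name, rate in LINK_RATES_GBPS.items():
        verdict = "needs DSC" if needs_dsc(required, rate) else "fits uncompressed"
        print(f"{name}: {rate:.2f} Gbit/s -> {verdict}")
```

With these numbers the 10-bit signal is about 59.7 Gbit/s, so it needs DSC on HBR3 and would only go uncompressed on a full UHBR20 link.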

Baasha

Limp Gawd

- Joined

- Feb 23, 2014

- Messages

- 249

> https://tftcentral.co.uk/articles/when-is-displayport-2-1-going-to-be-used-on-monitors is worth a read for some of the reasons.

Interesting... so that clears it up then. Hmm.. perhaps I should get this monitor then.

l88bastard

2[H]4U

- Joined

- Oct 25, 2009

- Messages

- 3,720

> Interesting... so that clears it up then. Hmm.. perhaps I should get this monitor then.

You're Baaaasha, you don't just get ONE! You get 5 and sandwich them together with no airflow!

stinkytofus

Weaksauce

- Joined

- Apr 11, 2023

- Messages

- 116

looks like im keeping my pg32uqx

l88bastard

2[H]4U

- Joined

- Oct 25, 2009

- Messages

- 3,720

> looks like im keeping my pg32uqx

Pg32uqx is a BRIGHT BEAST!

It would be so awesome if they refreshed that with a faster 240hz and pulse VRR and more dimming zones.

Xar

Limp Gawd

- Joined

- Dec 15, 2022

- Messages

- 227

> looks like im keeping my pg32uqx

The Greatest.

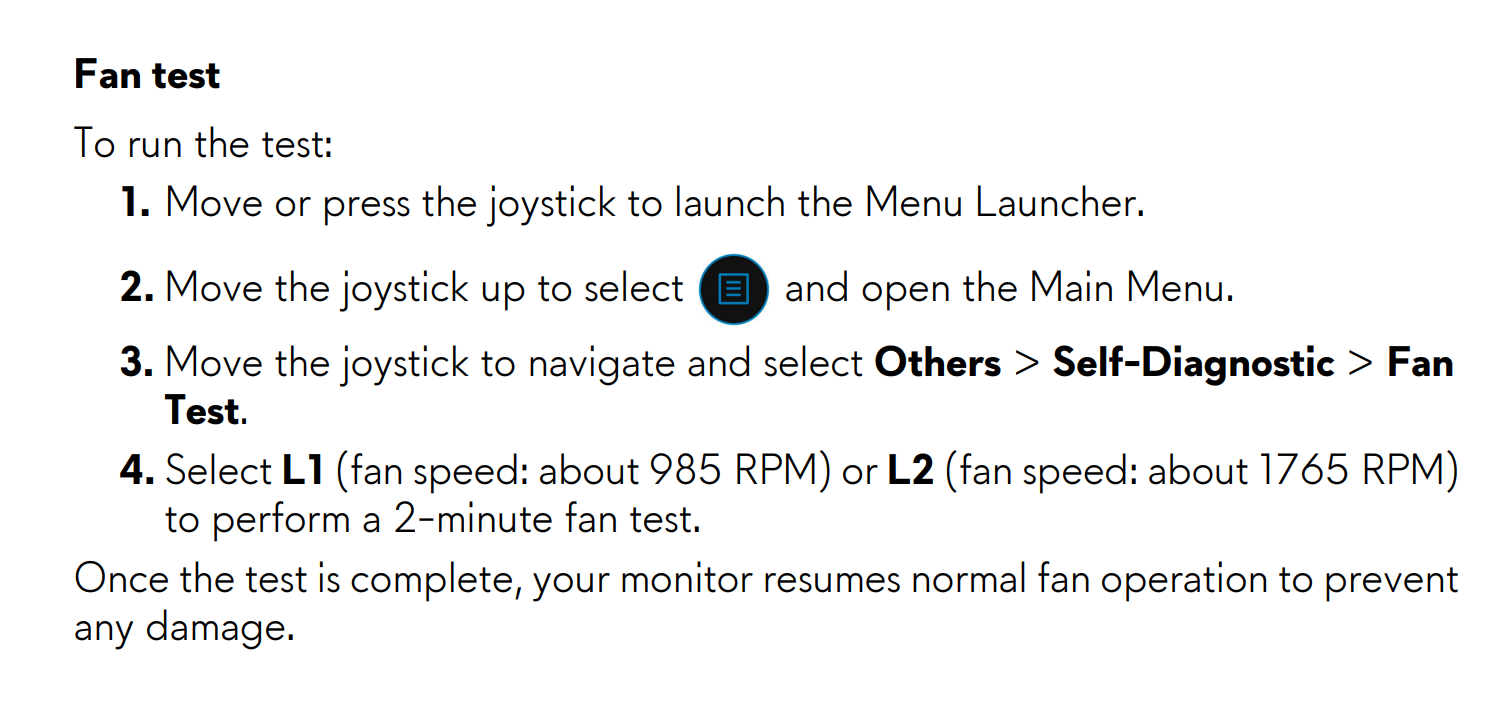

> I saw BadSeed review and he mentions there are two fan speed you can choose. I'm wondering what's the benefit of setting the speed to the highest unless there's a drawback when setting to low. More risk of burn in?

Probably just to account for different climates. I doubt it will have any real effect on durability.

> I saw BadSeed review and he mentions there are two fan speed you can choose. I'm wondering what's the benefit of setting the speed to the highest unless there's a drawback when setting to low. More risk of burn in?

The OSD menu option is just a fan speed test. The fan runs automatically during normal operation. Here is pg 89 of the manual:

> looks like im keeping my pg32uqx

Same here, I have tested many displays next to this including all the modern OLEDs from 2023. Nothing comes close.

MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,528

> Pg32uqx is a BRIGHT BEAST!
>
> It would be so awesome if they refreshed that with a faster 240hz and pulse VRR and more dimming zones.

It's the year 2040, and still the best Mini LED monitor will be the PG32UQX.

l88bastard

2[H]4U

- Joined

- Oct 25, 2009

- Messages

- 3,720

> It's the year 2040, and still the best Mini LED monitor will be the PG32UQX.

Yea I regret selling mine, but I have an Innocn 27 4k160 that is almost as good and does some things better.....for a whole lot less $$$

> I mentioned this in another post but it seems like most games barely use any colors beyond BT.709 when running in HDR, even the latest ones. So really the wider color gamut of QD OLED isn't going to do much if it isn't even being used in the first place. The biggest advantage that QD OLED has over WOLED is the absence of a white subpixel which means colors will not get diluted at higher brightness levels, but again the games themselves are not pushing for super bright colors in the first place. This is probably why some people who have compared WOLED to QD OLED say they can't notice a big difference when it comes to games. It's because the games are not letting the monitors fully stretch their legs.

One thing that I don't think the color analysis in that tool can properly show is desaturation of WOLED at high brightness. Since it is looking at gamut in 2D it isn't considering what happens as things get brighter which, on WOLED, tends to mean they desaturate. So you could potentially have something that is in the BT.709 colorspace, yet looks more saturated on a QD-OLED because it is at 800 nits and is getting desaturated to hit that brightness.

We really should talk about color volume in 3D... but it gets hard to visualize and compare so we generally don't.
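A toy way to see the desaturation effect in numbers: treat the white subpixel as adding the same amount of light to all three channels and watch an HSV-style saturation drop. This is a deliberately simplified model for illustration only. Real WOLED drive algorithms are proprietary, the white subpixel is not a perfect equal-energy white, and the function names here are made up.

```python
def saturation(rgb):
    """HSV-style saturation of a linear RGB triple: 1 - min/max."""
    hi = max(rgb)
    return 0.0 if hi == 0 else 1.0 - min(rgb) / hi


def add_white_boost(rgb, white):
    """Toy model: the white subpixel adds equal light to every channel."""
    return tuple(c + white for c in rgb)


if __name__ == "__main__":
    red = (1.0, 0.0, 0.0)  # fully saturated red at the RGB subpixels' limit
    for white in (0.0, 0.25, 0.5, 1.0):
        boosted = add_white_boost(red, white)
        print(f"white boost {white:.2f}: total light {sum(boosted):.2f}, "
              f"saturation {saturation(boosted):.3f}")
```

In this model the only way to make the red brighter once the red subpixel is maxed out is to add white, and every bit of white pulls saturation down. A 2D gamut plot cannot show that, which is the argument for looking at color volume instead.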

MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,528

> One thing that I don't think the color analysis in that tool can properly show is desaturation of WOLED at high brightness. Since it is looking at gamut in 2D it isn't considering what happens as things get brighter which, on WOLED, tends to mean they desaturate. So you could potentially have something that is in the BT.709 colorspace, yet looks more saturated on a QD-OLED because it is at 800 nits and is getting desaturated to hit that brightness.
>
> We really should talk about color volume in 3D... but it gets hard to visualize and compare so we generally don't.

Yes, that tool just shows the color space being used, not luminance levels. You can analyze that with heatmaps though, and based on the limited sample of games I've looked at, most colors definitely don't go anywhere near 800 nits. They are mostly within SDR range, actually. The ones that tend to go to really high brightness levels would be things like fire, so the orange/red hue would be way more saturated and fuller looking on a QD OLED or Mini LED. I'm sure HDR colors in games will get better over time, so at this point I'm no longer considering anything that's WOLED.
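For reference, a luminance heatmap of an HDR10 frame comes down to decoding the PQ signal to nits and bucketing the result. A minimal sketch of that decode using the SMPTE ST 2084 constants follows. It is not the code of any specific heatmap tool, and `share_above` plus its threshold are hypothetical helpers for illustration.

```python
# SMPTE ST 2084 (PQ) constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_to_nits(signal: float) -> float:
    """PQ EOTF: normalized signal in 0..1 to absolute luminance in cd/m^2."""
    e = min(max(signal, 0.0), 1.0) ** (1.0 / M2)
    return 10000.0 * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1.0 / M1)


def share_above(signals, threshold_nits: float) -> float:
    """Fraction of PQ-encoded samples brighter than threshold_nits."""
    signals = list(signals)
    if not signals:
        return 0.0
    return sum(pq_to_nits(s) > threshold_nits for s in signals) / len(signals)


if __name__ == "__main__":
    for s in (0.25, 0.5, 0.75, 1.0):
        print(f"PQ {s:.2f} -> {pq_to_nits(s):.1f} nits")
```

Running `share_above` over the per-pixel maximum channel of a frame with a threshold of 800 would give the kind of "how much of this scene is actually bright" number discussed above.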

Bigmonitorguy

Limp Gawd

- Joined

- Jan 2, 2020

- Messages

- 277

> Yea I regret selling mine, but I have an Innocn 27 4k160 that is almost as good and does some things better.....for a whole lot less $$$

I have the 32" Innocn and the PG32UQX. The Innocn doesn't get quite as bright, but close enough. I use the Innocn because it has KVM and no fan.

l88bastard

2[H]4U

- Joined

- Oct 25, 2009

- Messages

- 3,720

> I have the 32" Innocn and the PG32UQX. The Innocn doesn't get quite as bright, but close enough. I use the Innocn because it has KVM and no fan.

The pg32uqx would get bright as shit and LAZOOR BEAMZ sizzled my eyeballs in battlefront 2 game!!!

I like the Innocn 27 better though because way better motion clarity and 160hz > 144hz

also for 32" and larger I prefer curved screens.... I think 27" is as big as I like to go with flat screens

Baasha

Limp Gawd

- Joined

- Feb 23, 2014

- Messages

- 249

> You're Baaaasha, you don't just get ONE! You get 5 and sandwich them together with no airflow!

lol those days are long gone! I still have too many monitors though - Neo G9 49", Neo G9 57", PG32UQX, AW5520QF, FI32U - probably will sell the FI32U and use the PG32UQX as the accessory display and put this OLED 240Hz as the main. hmm.. You get yours yet? How're you liking it?

l88bastard

2[H]4U

- Joined

- Oct 25, 2009

- Messages

- 3,720

> lol those days are long gone! I still have too many monitors though - Neo G9 49", Neo G9 57", PG32UQX, AW5520QF, FI32U - probably will sell the FI32U and use the PG32UQX as the accessory display and put this OLED 240Hz as the main. hmm.. You get yours yet? How're you liking it?

In production since Jan 14th

undertaker2k8

[H]ard|Gawd

- Joined

- Jul 25, 2012

- Messages

- 1,990

> lol those days are long gone! I still have too many monitors though - Neo G9 49", Neo G9 57", PG32UQX, AW5520QF, FI32U - probably will sell the FI32U and use the PG32UQX as the accessory display and put this OLED 240Hz as the main. hmm.. You get yours yet? How're you liking it?

Feel free to send some over if you get tired of any

jhinck1414

Limp Gawd

- Joined

- Oct 4, 2008

- Messages

- 428

I got mine last week and I'm having major flickering problems with HDMI. Also seems like the firmware is buggy with keeping color profiles. Mine might be going back.

JohnnyFlash

Limp Gawd

- Joined

- Apr 23, 2022

- Messages

- 154

Am I crazy for only caring about good sRGB performance?

> Am I crazy for only caring about good sRGB performance?

I mean... A little. HDR is pretty amazing and it is part of the reason to get an expensive monitor. However I will say good SDR/sRGB performance matters since there's still lots of games that you'll be playing like that.

JohnnyFlash

Limp Gawd

- Joined

- Apr 23, 2022

- Messages

- 154

> I mean... A little. HDR is pretty amazing and it is part of the reason to get an expensive monitor. However I will say good SDR/sRGB performance matters since there's still lots of games that you'll be playing like that.

That's the thing, it's 99% SDR. Only the newest titles have HDR and fewer actually work correctly.

Armenius

Extremely [H]

- Joined

- Jan 28, 2014

- Messages

- 42,163

> Am I crazy for only caring about good sRGB performance?

Do monitors have poor sRGB performance anymore? Every display I've looked at for the past few years in this price/performance bracket has had no issues with sRGB. The trend is so common that I don't even look at sRGB performance in reviews anymore. I'm most concerned about HDR performance since that still varies wildly.

l88bastard

2[H]4U

- Joined

- Oct 25, 2009

- Messages

- 3,720

> I got mine last week and I'm having major flickering problems with HDMI. Also seems like the firmware is buggy with keeping color profiles. Mine might be going back.

HDMI is borked and needs a firmware update

MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,528

> Do monitors have poor sRGB performance anymore? Every display I've looked at for the past few years in this price/performance bracket has had no issues with sRGB. The trend is so common that I don't even look at sRGB performance in reviews anymore. I'm most concerned about HDR performance since that still varies wildly.

Unless you're looking for a monitor with sRGB clamp mode that does perfect 100% coverage and not 99.999%, 0.000 delta E, and perfectly exact spot on 6500k white point, then yeah nearly every monitor these days does sRGB just fine.

> That's the thing, it's 99% SDR. Only the newest titles have HDR and fewer actually work correctly.

There are getting to be a whole lot more that support it, and most that do look good if your display is good. Games I've played recently with good HDR are Jedi Survivor, the Resident Evils (2, 3, 4, Village), Hitman 3, Dead Space, Hogwarts Legacy. It's far from universal but there are a lot these days that do. Also depending on how much you like to mess around, many games can have HDR added via something like Special K since most games internally render in floating point linear light space and then tone map to sRGB. Injectors can undo that mapping and then put their own pipeline in its place.

Basically my point is that yes, you should care about SDR/sRGB performance. It does matter and will continue to matter. But don't sleep on HDR performance as more games are getting it and it really does look amazing.
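To make the "undo that mapping" idea concrete, here is a toy round trip with the simple Reinhard operator: scene-linear values get squeezed into 0..1 for SDR, and the inverse expands them back out so an HDR pipeline could take over. This is only an illustration of the concept. It is not what Special K or any particular game actually does, and the function names and the `max_scene` clamp are assumptions.

```python
def reinhard(x: float) -> float:
    """Simple Reinhard tone map: scene-linear [0, inf) to display [0, 1)."""
    return x / (1.0 + x)


def inverse_reinhard(y: float, max_scene: float = 16.0) -> float:
    """Undo the Reinhard curve.

    The input is clamped so the result never exceeds max_scene, since the
    inverse blows up as y approaches 1.0. max_scene is an arbitrary
    illustrative limit, not a standard value.
    """
    y = min(max(y, 0.0), max_scene / (1.0 + max_scene))
    return y / (1.0 - y)


if __name__ == "__main__":
    for scene in (0.18, 1.0, 4.0, 12.0):
        sdr = reinhard(scene)
        print(f"scene {scene:5.2f} -> SDR {sdr:.3f} -> recovered {inverse_reinhard(sdr):.2f}")
```

The catch in practice is that the injector has to know, or guess, which tone curve the game applied, and anything the game clipped before tone mapping is gone for good.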

> Do monitors have poor sRGB performance anymore? Every display I've looked at for the past few years in this price/performance bracket has had no issues with sRGB. The trend is so common that I don't even look at sRGB performance in reviews anymore. I'm most concerned about HDR performance since that still varies wildly.

Ya some do. You wouldn't think so but I've seen a bunch of issues looking at reviews (and some in person):

1) Locking out of controls. Some take the "sRGB mode" too literally and clamp brightness at 80 nits (the OG sRGB spec has brightness specified, among other things), lock the white point, and so on. Others just disable crap because their chip can't handle it along with the gamut emulation.

2) Poor gamma performance. You see some displays all over the place with their gamma and that can lead to things looking too dark, or too bright, or having crushed blacks AND blown out highlights at the same time, etc. A good, smooth gamma curve is important to good SDR content, just like good PQ tracking matters to HDR content.

3) Banding or other issues because of the color mapping. To clamp the color space, the monitor has to remap values: when the computer requests pure red 255,0,0, it doesn't send that to the panel, it sends something like 255,11,14 (not a real value from a real monitor, just an example) to get the color you want. If this mapping isn't done well, you can get banding or colors that are off in other ways.

You are right it shouldn't be an issue, but it is something I always check as plenty of monitors screw it up.
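The remapping in point 3 can be sketched in a few lines. The example assumes a panel whose native primaries are Display P3 (D65) and that uses the sRGB transfer curve, which is a stand-in chosen for illustration and not the gamut of any monitor in this thread. The matrix is the rounded linear sRGB to Display P3 conversion; the function names are made up.

```python
# Linear sRGB -> linear Display P3 (both D65), rounded to 4 decimals.
SRGB_TO_P3 = (
    (0.8225, 0.1774, 0.0000),
    (0.0332, 0.9669, 0.0000),
    (0.0171, 0.0724, 0.9108),
)


def decode(v: float) -> float:
    """sRGB transfer curve: encoded 0..1 to linear light."""
    return v / 12.92 if v <= 0.04045 else ((v + 0.055) / 1.055) ** 2.4


def encode(v: float) -> float:
    """Inverse sRGB transfer curve: linear light to encoded 0..1."""
    return 12.92 * v if v <= 0.0031308 else 1.055 * v ** (1 / 2.4) - 0.055


def srgb_to_panel_code(rgb8):
    """Map an 8-bit sRGB triple to the 8-bit value an assumed P3 panel needs."""
    linear = [decode(c / 255.0) for c in rgb8]
    panel = [sum(m * c for m, c in zip(row, linear)) for row in SRGB_TO_P3]
    # Clamp to absorb matrix rounding, then re-encode and quantize to 8 bits.
    return tuple(round(encode(min(max(p, 0.0), 1.0)) * 255) for p in panel)


if __name__ == "__main__":
    for name, rgb in (("red", (255, 0, 0)), ("green", (0, 255, 0)), ("white", (255, 255, 255))):
        print(f"sRGB {name} {rgb} -> panel {srgb_to_panel_code(rgb)}")
```

Under these assumptions pure sRGB red comes out as (234, 51, 35) on the panel. The final rounding to 8 bits is where banding can creep in: if the monitor does this math at low precision, neighbouring input values can collapse onto the same output code.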
