Comixbooks

Fully [H]

- Joined: Jun 7, 2008
- Messages: 22,029

I'll have a chance to play this all week: I'm off for 9 days, unless I call in today, which would be a really good idea.


> She wasn't in the last game...

I'm specifically referring to the last game to reference the Alan Wake "universe", which was Quantum Break. All I'm saying is that the same reason they felt free to recast the character with someone who looks completely different is exactly why I believe they should have kept the casting as similar as possible, to maintain continuity.

> It's a 20 hour game. Can be done in 2-3 days if you commit.

I don't agree with this. Like most things, that's only if you just power through the main story and don't do any of the other things along the way.

I'm sorry, but in the greater context of such a deliberately weird and malleable story, I'm not sure how I could disagree more with your reasons.

> And after finally trying the game, I had an extremely hard time making it past the intro. The game's optimization is crap and it doesn't actually even look that good. Remedy has really lost their touch since the days of Max Payne. Maybe it's a good thing Rockstar made Max Payne 3...

I wish that this game ran better too. I'm with you there. I'd love to disable everything related to ray tracing, because it looks like an awful smeary mess at the lower settings. However, Rockstar has been just as guilty of questionable performance decisions in the recent past.

> Alan Wake 2 uses Remedy's Northlight engine. Wonder if previous games used the same engine; seems like there's tons of detail compared to Control.

Every game Remedy has made starting with Quantum Break, except Alan Wake Remastered, uses the Northlight Engine. That includes Quantum Break, Control, and this game. Alan Wake Remastered still uses the "Alan Wake" engine.

> Even anything less than a 4080 is going to struggle, though.

exactly. That's kinda my point in general, I guess. People are paying $1600+ for the best of the best current-gen video cards to play current-gen games, and it can't even be done without upscaling. I find that absurd. Do you want to run AW2 @ 4K maxed without upscaling? Well, you can do that... 2 years from now, when Nvidia releases the 5000 series... for another $1800. That's right: for just another small fee, you'll be able to run this 2-year-old game truly maxed out at acceptable framerates. Fuck all that BS.

You can turn down the graphics, you know. One of the videos in this thread shows it at low, it still looks great.

> Gamers are saying "I wanna max out the settings; if I can't, the game sucks!" Not evaluating how the game actually looks or runs at a given setting,

It will change fast, I think. It must already be really rare for someone to genuinely think like that; in reality, it must always be a fear that it is a sign the game will not scale down and will never perform that well, because there is a correlation.

> Anyone wishing the game ran better just needs to turn off ray tracing. This is not the game to choose as your battle over one that is not 'optimized' well. It runs perfectly fine given the immense level of detail. If you don't have a 40-series card, you're basically not going to be able to use the ray tracing in this game. Even anything less than a 4080 is going to struggle, though.

No! If I can't do 4K/144/HDR/full RT/no DLSS, it's a piece of unoptimized shit.

> It will change fast, I think. It must already be really rare for someone to genuinely think like that; in reality, it must always be a fear that it is a sign the game will not scale down and will never perform that well, because there is a correlation.
>
> "Games should not have settings that are only useful on future hardware; they would be better off not letting you choose them": that type of talk will not survive,
>
> and "upscaling is stupid" as well. People will ask for and reward good dynamic upscaling real soon.

But people without the highest-end hardware often have to turn down the settings. That has long been a thing. It is going to look OK too, since consoles are basically never able to run at max settings, so they are going to make sure it looks good for them.

Also by all accounts, the non-RT lighting is very good. They did a real good job on it and the RT lighting is a minor upgrade. A noticeable one, but not one that is night and day as it seems to be in Cyberpunk. They seem to have worked hard on making the non-RT lighting really good in this game.

> Why? What's wrong with supporting new technologies?
>
> You still maintain the option you used to have: Turn down the resolution. You now just have an additional one: Turn down the render resolution but use a clever neural net to try and restore as much of the image quality as possible. If upscaling isn't for you, turn down the rez. Or turn down the detail and run at a higher rez. Or deal with a slower frame rate. You have options, choose the one that's right for you.

I am not sure what problem people have with it, but like I said, my prediction is that type of talk will not survive and this will become perfectly accepted. Those views will become quite marginal in the Unreal 5 era, and they will not make sense with path tracing: a game should let people throw 4 to 6 rays per pixel with 5 bounces if they want. How much more code is that? And once RT cores get powerful enough, voilà, better light.

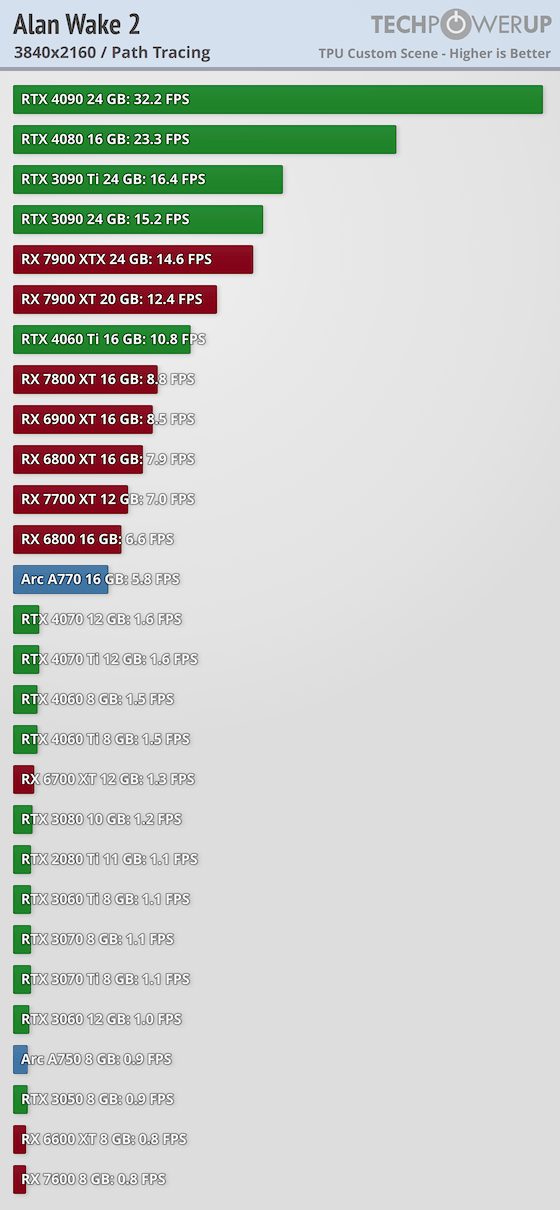

You can run AW2 @ 4k max settings without upscaling on a 4090 just fine currently. Although it would be silly NOT to use upscaling when it looks visually identical but performs better.

> I'll never understand the folks who are absolutely obsessed with "native" resolution.

Because some of us can see and feel the difference, and it's crap.

Depends on what people mean by both "max settings" and "just fine". With the new ray reconstruction affair, doesn't it even look better in some ways to use upscaling now (until Nvidia adds DLAA + RR support)?

> You can turn down the graphics, you know.

people don't spend that kind of money to turn settings down...

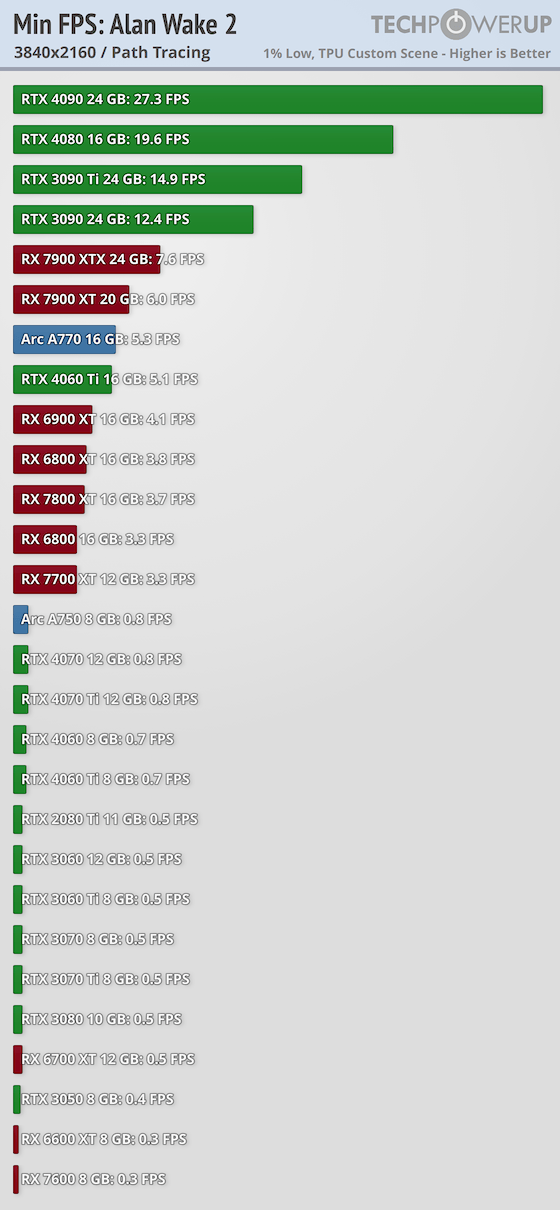

That's minimum fps, not average...


> ^"just fine" rolf
>
> people don't spend that kind of money to turn settings down...

So... That's just ego then. "I spent $X, which means it hurts my ego if I have to turn details down!" What you are really demanding is that they simply don't offer an option, because you don't feel like the performance on your hardware is good enough. You don't have to use it, the game looks and works great without path tracing, but you want it taken away anyhow because it hurts your ego that it is there and the performance isn't what you'd like.

> That's minimum fps, not average...

It's also basically the worst-case scenario. You want 4K, with no upscaling, with everything maxed, with path tracing on... Well, for path tracing, that's pretty unrealistic. If you don't want to use upscaling, it's probably best to stick with raster, particularly if you run a 4K display. There they show a 73 average and a 63 1% minimum at max settings, no upscaling. Sounds quite nicely playable to me. If you want path tracing, you really need to be looking at upscaling, both because more pixels are the hardest thing, performance-wise, for ray tracers (there is a reason old RT demos were always so low-res), and because you need a denoiser ANYHOW, so you might as well use DLSS 3.5 and get your upscaling and denoising combined. With that, it looks like you can get 63 FPS with DLSS Quality, path tracing, maxed settings, at 4K.

> That's minimum fps, not average...

I remember in the Daniel_Owen vid, when he set it to 4K max, it tanked to the 20s.

> "Take away an option, and rename the next level down to maximum."

That's what they probably should've done, tbh. Because you know in a year or so, when they go to sell you the game all over again as the complete version with all the DLCs, they could've included those max settings as a little visual upgrade. They'd be cut and dried, ready to go; they wouldn't even have to do any work. And by that time the next-gen cards could be out, or at least just around the corner.

^"just fine" rolf

people don't spend that kind of money to turn settings down...

and the point about Crysis is not even comparable: flagship cards back then were a fraction of what they cost these days, around $500 or so, which almost anyone could afford back then.

...or they could just offer the option now since:

> That's minimum fps, not average...

Which would tend to be a very important part of whether it runs "just fine" or not; the average is not that different (they say min, but it is not the min, it is the average 1% low).

> perhaps in the Daniel_Owen vid he didn't have frame gen enabled?

Why? The game is stunning, and truly next-gen from a technology standpoint. 32 FPS native w/ max on the 4090 is fine. DLSS Quality gets you 64 FPS, and once you add frame-gen, that's an effective 128 Hz.

To be fair, though, Remedy's recent games have always run a little poorly at launch. yeah dlss

I agree with the comments; it's all good. I have no ego about this. I'm not even running a 4090 (I'm not even playing this game, lol!), but if I were, I would feel a little bitter about the performance, absolutely.

> It has very minimal IQ loss

In this case, higher IQ in many ways, I think (because of ray reconstruction).

Also more fine detail in areas, even in non-ray-reconstruction cases. I've seen a number of games where DLSS Quality reveals fine details in distant geometry (like wires and such) that you don't see as clearly at native res. Of course it introduces some minor artifacts too, but I find that at Quality it ends up being a tradeoff: while there may be some minor downgrades compared to native, there are often some minor upgrades too.