psy81

Gawd

- Joined: Feb 18, 2011
- Messages: 605

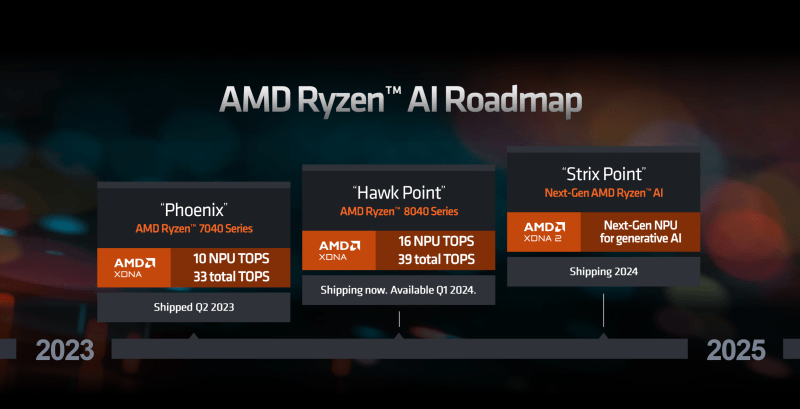

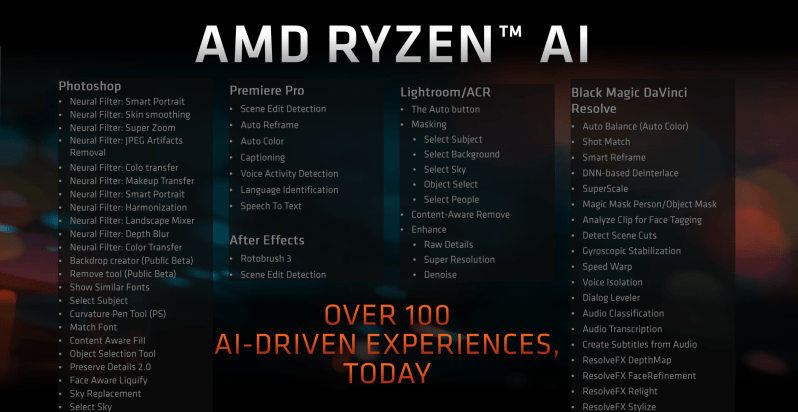

Based on recent developments and Microsoft's move to integrate AI into Windows, it looks like we may have AI accelerator cards in our PCs in the near future. Or not, who knows. Maybe Nvidia will just buy up these startups and integrate the tech into their GPUs.

What do you guys think of this? Personally, this doesn't excite me, but who knows what it may bring.

I would be annoyed if Microsoft required these cards to run Windows.

View: https://youtu.be/q0l7eaK-4po?si=QHdBKS3RdQ6rw4PK

https://www.pcworld.com/article/219...or-cards-from-memryx-kinara-debut-at-ces.html

