Chief Blur Buster

Owner of BlurBusters

- Joined: Aug 18, 2017

- Messages: 430

4K progressive-scan on a CRT! Nice to see, even if it's not resolvable. Basically this card has an insanely fast RAMDAC that goes up to a whopping 655.xxMHz!! Unfortunately it has some really weird limits of horizontal pixels and lower max bandwidth when doing interlaced. Really sad.

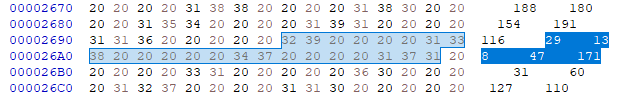

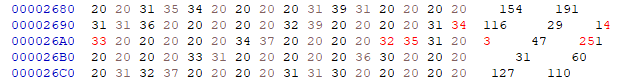

For fun I tried again to display 4K, in progressive, and I was able to squeeze 3840x2160p at 60Hz with this GPU, after a lot of trial and error adjusting the timings in CRU (see photo below). Not the best settings, but it worked, just for a test!
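As a rough back-of-envelope check on why the timings need so much trial and error: pixel clock = horizontal total x vertical total x refresh rate, so a RAMDAC ceiling fixes how many blanking pixels can be spared per frame. The sketch below assumes a round 655 MHz as a stand-in for the quoted "655.xx" figure; the function name is hypothetical and the real CRU timings from the photo are not reproduced here.

```python
def blanking_budget(pixel_clock_hz, h_active, v_active, refresh_hz):
    """Fraction of extra (blanking) pixels per frame that still fits
    under the pixel clock, relative to the active pixel count."""
    # pixel_clock = h_total * v_total * refresh, so this is the largest
    # h_total * v_total the clock allows at the given refresh rate.
    total_pixels_per_frame = pixel_clock_hz / refresh_hz
    active_pixels = h_active * v_active
    return total_pixels_per_frame / active_pixels - 1.0


# Assumption: 655e6 stands in for the quoted "655.xx MHz" RAMDAC limit.
overhead = blanking_budget(655e6, 3840, 2160, 60)
print(f"Blanking budget: {overhead:.1%} of active pixels")
```

Horizontal and vertical blanking together have to fit inside that budget, and a CRT also needs enough blanking time for retrace, which is why the margins get tight.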

I knew it was possible to do 2160i in the bandwidth of 1080p if you jumped through enough hoops -- but I haven't seen anybody do 2160p at the full 3840 width.

You must be using 200-300% DPI zoom at the moment!

Try testing VRR on your CRT!

This won't work with all CRTs, but it reportedly works with a few very-multisync tubes with less aggressive "out-of-range" firmware cops. You should try the ToastyX force-VRR trick (give your output a FreeSync range), and see if it works on your CRT in a tight range. Try testing 800x600 at 55-65Hz using the windmill demo, and slowly expand your VRR range. Not all HDMI-to-VGA adaptors work, but if you've got an onboard DVI-I port with both analog/digital and a RAMDAC on a graphics card that supports VRR, bingo -- try it... This could be fantastic for capped 60fps games, giving the world's lowest latency "60Hz 60fps VSYNC ON lookalike" on a CRT. DVI-D already worked with VRR sometimes with the force-VRR trick ten years ago, on some generic LCD panels, and the DVI-A signal may be along for the ride too.

However, since I actually hardly use VSync cause of the latency, I will certainly look into the modern methods of reducing/eliminating latency. Thanks!

There are many low-lag clones of "VSYNC ON":

- Special K Latent Sync

- RTSS Scanline Sync

- Capped VRR (fixed-framerate), may not work with CRT

- Low-Lag VSYNC HOWTO (use a microsecond-accurate capper like RTSS, set 0.01fps below the refresh rate you see at www.testufo.com/refreshrate)

- VSYNC ON + NULL
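For the Low-Lag VSYNC HOWTO item in the list above, the arithmetic looks roughly like this. It's only a sketch: the measured refresh rate is a made-up reading, and the function names are hypothetical, not anything from RTSS.

```python
def low_lag_vsync_cap(measured_hz, offset_fps=0.01):
    """Frame rate cap slightly below the measured refresh rate."""
    return measured_hz - offset_fps


def seconds_per_repeated_frame(measured_hz, cap_fps):
    """The display refreshes measured_hz times per second but only
    cap_fps new frames arrive, so one refresh repeats the previous
    frame every 1 / (measured_hz - cap_fps) seconds."""
    return 1.0 / (measured_hz - cap_fps)


measured = 59.954  # hypothetical reading from www.testufo.com/refreshrate
cap = low_lag_vsync_cap(measured)
print(f"Cap at {cap:.3f} fps")
print(f"One repeated frame about every {seconds_per_repeated_frame(measured, cap):.0f} s")
```

With a 0.01fps gap, a repeated frame shows up only about once every 100 seconds, which is the trade-off for keeping the frame queue from filling up.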

Whenever you *can* do VRR, it is the easiest low-lag VSYNC ON clone: you simply cap below max Hz, e.g. run VRR range to 60.5Hz-63Hz, and cap to 60fps for your emulators. Ideally you need to cap about 3% below max Hz (3fps-below is an old VRR-specific boilerplate, but there's some give or take).
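The ~3% rule of thumb is easy to sanity-check. A minimal sketch, treating 3% as a fixed margin (it isn't exact, there's give or take); the function names are hypothetical:

```python
def vrr_cap_fps(max_hz, margin=0.03):
    """Frame rate cap about `margin` below the top of the VRR range."""
    return max_hz * (1.0 - margin)


def max_hz_for_cap(target_fps, margin=0.03):
    """Top of the VRR range needed so target_fps sits `margin` below it."""
    return target_fps / (1.0 - margin)


print(f"144Hz VRR -> cap near {vrr_cap_fps(144):.2f} fps")
print(f"60fps cap -> max Hz near {max_hz_for_cap(60):.2f} Hz")
```

For a 60fps cap this lands at a max Hz a little under 62Hz, which sits inside the 60.5Hz-63Hz window mentioned above.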

Capped VRR is the world's lowest-lag "easy VSYNC ON" clone; do not ignore it simply because esports players complain it has more lag than VSYNC OFF. You should use VSYNC OFF for a lot of esports games. But not all games are esports games, and if you simply want to enjoy the amazing TestUFO-smooth motion in games on your display, you can do it. If you ARE HUNTING for the world's lowest-lag VSYNC ON clone, there ya go. Now, if your display doesn't support VRR, then Special K Latent Sync is becoming a quick favourite on Discord, as it seems to be slightly lower lag and slightly easier than RTSS Scanline Sync.

Scanline sync technologies, unfortunately, require enough GPU horsepower to achieve 60fps at only ~50% GPU utilization, for reliable VSYNC OFF raster-interrupt-style tearline steering between refresh cycles (tearingless VSYNC OFF), pushing the tearline above/below the edge of the screen.
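To put a number on that headroom, here's a rough sketch. It simplifies "~50% GPU" to "frame render time is at most about half a refresh period", which is an assumption for illustration; the function names are hypothetical.

```python
def render_budget_ms(refresh_hz, gpu_fraction=0.5):
    """Render time per frame that keeps the GPU at or below gpu_fraction
    of one refresh period."""
    refresh_period_ms = 1000.0 / refresh_hz
    return refresh_period_ms * gpu_fraction


def has_scanline_sync_headroom(frame_render_ms, refresh_hz, gpu_fraction=0.5):
    """True if a frame renders fast enough to leave the assumed headroom."""
    return frame_render_ms <= render_budget_ms(refresh_hz, gpu_fraction)


print(f"Budget at 60Hz: {render_budget_ms(60):.2f} ms per frame")
print(has_scanline_sync_headroom(6.0, 60))   # renders well inside the budget
print(has_scanline_sync_headroom(12.0, 60))  # too slow, tearline will wander
```

At 60Hz that works out to roughly 8.3ms of render time per frame, so a game that barely holds 60fps won't have enough slack to keep the tearline parked off-screen.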

However, the effort is worth it if you actually put the effort into procuring a CRT -- then there is a desire to pamper the visuals properly with a low-lag "VSYNC ON" lookalike technology.
