Zarathustra[H]

Or the local drive to have a partition for it.

Brilliant. Do your backups to the same drive as the original content, so if it dies you lose both your primary source and your backups.

I didn't say it's smart. Not many Apple users are going to go through the trouble of booting to recovery mode, launching Disk Utility, formatting, repartitioning, and reinstalling so they can run Time Machine locally.

They pay through the ass for that storage; they aren’t about to just hand half of it over like that.

Yeah, the Xbox Game Bar is a requirement, especially if you are running the multi-CCD X3D chips.

AMD has a driver hook in there to offset their existing scheduler.

Just glad you fixed it.

That should be it, the game bar toggles on Game Mode when it detects that you are running a game. And the chipset drivers change what ever they do accordingly at least that’s what AMD and Microsoft say.What exactly do you do withe the Xbox gamebar to get it to work its magic?

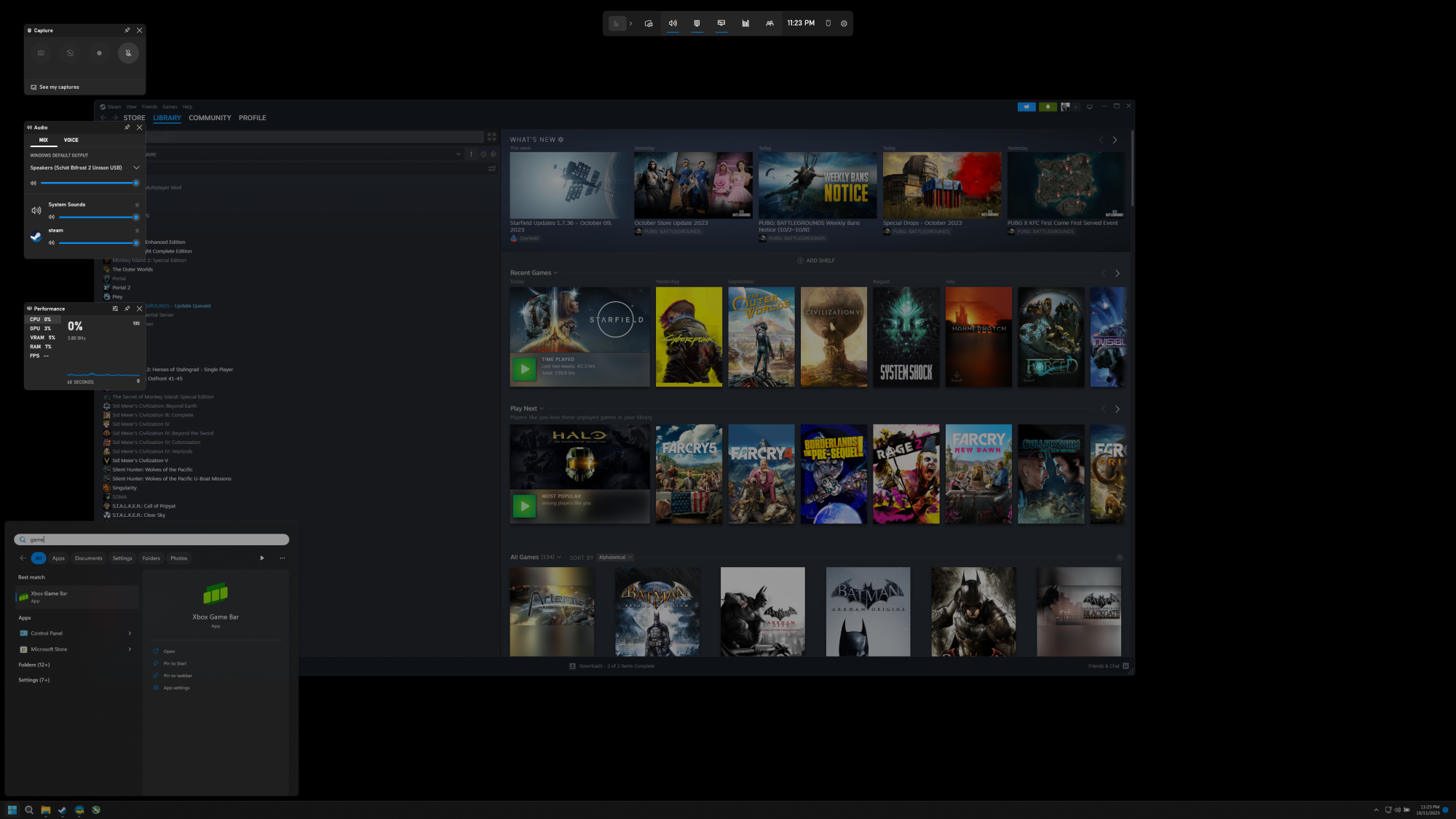

I launched it, and all I got were these UI elements to stay on screen until I clicked on anything, and then they went away:

View attachment 605348

Not quite sure what I am supposed to be doing with it...

1) It's a Game Bar overlay, like the Steam in-game overlay or the overlay you bring up when playing a game on console. Use the Game Bar at the top to toggle widgets (those draggable items to the left), or hit the gear icon on the Game Bar for Settings.

2) There's no setting for the AMD CPU stuff. The program handles it automatically as long as it's installed and running (it's always on; if you can bring it up like that, that confirms it).

Edit: A setting called 'Game Mode', either in the Game Bar or in Windows 11 Settings, might also need to be toggled on if it isn't by default. I just heard you need Game Bar installed, but I recall that setting too off the top of my head.

Double edit: https://community.amd.com/t5/gaming...with-a-new-amd-ryzen-9-7950x3d-or/ba-p/589464
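For anyone who would rather check the Game Mode toggle than trust it, here is a rough Python sketch that reads it from the registry. Treat the details as assumptions, since nothing in this thread confirms them: it assumes the per-user switch lives under `HKEY_CURRENT_USER\Software\Microsoft\GameBar` as a DWORD named `AutoGameModeEnabled`, and that a missing value means Windows is using its default (on). The helper names are made up for illustration.

```python
import sys

# Assumed location of the per-user Game Mode switch; not confirmed by AMD or Microsoft docs.
GAMEBAR_KEY = r"Software\Microsoft\GameBar"
GAME_MODE_VALUE = "AutoGameModeEnabled"


def interpret_game_mode(raw):
    """Turn the raw registry DWORD into a bool.

    None means the value is missing, which this sketch assumes is the default (on).
    """
    if raw is None:
        return True
    return int(raw) != 0


def read_game_mode_raw():
    """Read the DWORD from HKCU; return None if the key or value is absent."""
    import winreg  # standard library, Windows only

    try:
        with winreg.OpenKey(winreg.HKEY_CURRENT_USER, GAMEBAR_KEY) as key:
            value, _value_type = winreg.QueryValueEx(key, GAME_MODE_VALUE)
            return value
    except FileNotFoundError:
        return None


if __name__ == "__main__":
    if sys.platform == "win32":
        print("Game Mode enabled:", interpret_game_mode(read_game_mode_raw()))
    else:
        print("Not running on Windows, nothing to check.")
```

This only reports the Game Mode switch. It says nothing about whether the AMD chipset driver is actually steering game threads.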

I have to be honest. This shit totally rubs me the wrong way, needing to have a resident overlay (named some console bullshit like Xbox at that) running in order to get my CPU to perform its best in game.

I've always been a proponent of keeping as few things as possible running in the background when running a game, and having a game bar running is against everything I believe in.

But if that's what it takes, I guess I'll have to do it. Still cannot express how much I hate this.

I see this updates through the Microsoft Store too, which is bullshit. I never use the Microsoft Store and want nothing to do with it.

This feels like little more than a manipulative scheme to break down resistance and get people to start using the "features" Microsoft wants them to be using, even if they don't like them.

Like, if you need one overlay (Xbox Game Bar) running to get your CPU to function properly, people will probably do everything in that one game bar rather than try Nvidia's or Steam's overlays.

I hate everything about this. I shouldn't need an overlay to make my CPU work properly.

This may even push me to go Intel next round, if they don't also require it.

Yeah Intel Thread Director is dope, this is just a software version of it for AMD.

I’m sure they did it as a cross-promotional gaming collaboration project so they can maximize AMD gaming integration and synergize with the Xbox brand to increase gamer enjoyment and propel retention and engagement numbers to the max.

Any buzzwords I missed??

Well, that is OK. I can start the Xbox Series X, run RDR2, and be playing it far, far faster than on a PC. On the PC: start it, wait for it to boot, run Steam, run the game, it loads Rockstar, which loads the game, finally select to play the game, wait for it to load, and then play. And this is on a very, very fast PC with Windows 11.

"It's just console first it makes sense on PC"

There's that one

I'll take a second to shill for GeForce Experience as an overlay: auto-OC'ing, system/performance monitor, HDR screenshots and video recordings, GameStream (still works; the backend parts of GameStream were never removed, yet, maybe; only the official front-end interfaces were killed at this time, so use the Moonlight front end on whatever device), and then of course driver notification/management. It does a lot more; that's just what I use it for. They forced me into it with GameStream, and it's got enough other stuff that it's useful enough for one app, I think.

You might also want to turn virtualization off in BIOS if you don't use VMs or Windows Sandbox on your machine. Windows 11 has VM/virtualization-based security and OS background features on by default. I think there was like a ~6-10% performance difference or something when gaming.

And I'll take a second to shill for "no overlays ever from any developer".

Yes, I even have the Steam overlay disabled

I also refuse to run background tasks. I don't even have mouse software running in the background. I shut down everything and run only the game.

Except in modern versions of Windows they always have some bullshit background task running that I didn't ask for and have little to no control over, and I hate that.

Some things really were better in the single core era.

We used to use scripts in the IRC chat days and brag about how many free resources (CPU free, memory free) we had. Weird thing to brag about, but it came to mind as I read this.

Old habits die hard. I have a 24C/48T CPU and 64 gigs of RAM, and I still can't bring myself to waste system resources on background tasks. I kill absolutely everything I am not actively using.

In fact, I kind of hate any and all background tasks that I didn't intentionally activate myself. I like being in absolute micromanaging control over my system 100% of the time. Nothing should ever happen without me telling it to, and that's what I hate about modern versions of Windows.

They have gone hog wild on automation, cloud and background tasks, and it drives me up a wall.

I agree. And I hate having to sift through two pages of processes to find something rogue to close down. It was also easy to spot a virus or trojan running because you always knew how many tasks were normally active, so seeing another one would cause you to look it over.
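The old habit of knowing your normal task list and noticing anything extra can be sketched in a few lines of Python. This is only an illustration: the function name and the sample process names are made up, and how you capture the two lists (Task Manager export, `tasklist`, `ps`) is left open.

```python
from collections import Counter


def unexpected_processes(baseline, current):
    """Return {name: extra_count} for processes beyond the known-good baseline.

    Both arguments are lists of process names; duplicates count, so a third
    svchost.exe shows up even if two were already expected.
    """
    extra = Counter(current) - Counter(baseline)  # keeps only positive counts
    return dict(sorted(extra.items()))


if __name__ == "__main__":
    known_good = ["explorer.exe", "svchost.exe", "svchost.exe"]
    right_now = ["explorer.exe", "svchost.exe", "svchost.exe", "svchost.exe", "miner.exe"]
    print(unexpected_processes(known_good, right_now))
```

With two pages of processes this is no substitute for judgment, but it narrows down what to look over.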

I also liked when, if I wasn't doing anything, nothing else was going on. Now the HDD/SSD light is always flickering no matter what's going on. Just seems wasteful to me.

Same. I still expect this, and I am always disappointed.

Like WTF are you doing now? I didn't tell you to do anything. You should be doing NOTHING!

At least Linux still mostly behaves this way. I mean, there are some background tasks, but these are really only those that are explicitly defined in cron, like periodic checks for updates. I can live with that. It's the lack of transparency about what is going on on MY MACHINE, where I should be in control and know everything, that just fills me with fury on a regular basis.

IMHO tech peaked in 2007. Everything since then has just been stupid.

Linux is probably going to be my next step. I run it on my garage PC because then it is always ready to use, no 20-window bullshit "New feature! Please provide your Xbox gamer account to use this!" when I really just need a browser window, haha.
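Since cron came up: a small, hedged Python sketch of listing what a user crontab actually schedules, which is about as transparent as background tasks get. It assumes the plain user-crontab format that `crontab -l` prints (five time fields, then the command, or an `@daily`-style shortcut). The function name is made up, and the system-wide `/etc/crontab` format, which has an extra user column, is not handled.

```python
def scheduled_commands(crontab_text):
    """Return (schedule, command) pairs from user-crontab text."""
    jobs = []
    for line in crontab_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # blank line or comment
        fields = line.split()
        if line.startswith("@"):
            # Shortcut schedules such as @daily or @reboot
            if len(fields) >= 2:
                jobs.append((fields[0], " ".join(fields[1:])))
        elif len(fields) >= 6 and "=" not in fields[0]:
            # Five time fields followed by the command; env lines like MAILTO=root are skipped
            jobs.append((" ".join(fields[:5]), " ".join(fields[5:])))
    return jobs


if __name__ == "__main__":
    sample = "# m h dom mon dow command\n0 3 * * * /usr/bin/apt-get update\n"
    for schedule, command in scheduled_commands(sample):
        print(schedule, "->", command)
```

On systemd-based distros, `systemctl list-timers` is another place to look, since scheduled jobs there do not have to live in cron.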

The part that confused me was that when I go to launch it in start menu those widgets appear, but then as soon as I click on anything they disappear, and I can't quite tell if it is still running or even doing anything anymore.

Is there any way to confirm that it is working and doing its thing?
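One rough way to at least confirm the Game Bar is still resident after its widgets disappear is to look for its processes. The sketch below parses the CSV output of the standard Windows `tasklist /FO CSV /NH` command. The process names `GameBar.exe` and `GameBarFTServer.exe` are assumptions based on what typically shows up in Task Manager, and the function names are made up for illustration.

```python
import csv
import io
import subprocess
import sys

# Assumed Game Bar process names (lowercased); adjust to what your Task Manager shows.
GAME_BAR_PROCESSES = {"gamebar.exe", "gamebarftserver.exe"}


def running_image_names(tasklist_csv):
    """Extract lowercase image names from `tasklist /FO CSV /NH` output."""
    reader = csv.reader(io.StringIO(tasklist_csv))
    return {row[0].lower() for row in reader if row}


def game_bar_processes_found(tasklist_csv):
    """Return which of the assumed Game Bar processes appear in the listing."""
    return sorted(GAME_BAR_PROCESSES & running_image_names(tasklist_csv))


if __name__ == "__main__" and sys.platform == "win32":
    listing = subprocess.run(
        ["tasklist", "/FO", "CSV", "/NH"],
        capture_output=True, text=True, check=True,
    ).stdout
    found = game_bar_processes_found(listing)
    print("Game Bar processes running:", found or "none")
```

Seeing the process only shows that the overlay is loaded. It does not prove the AMD driver is doing its scheduling work.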

My Series X takes longer to boot than my PC. On my PC I just boot it and double-click the shortcut to the game and it starts since I have Rockstar Games Launcher set to boot with my PC.

Why do I find the PC booting faster than the Xbox hard to believe? Plus the fact that you still need to open the launcher, even though it runs in the background, takes time. There are no launchers on the Xbox.

Oh, and I forgot, there is always Quick Resume, something the PC has yet to do.

Edit: I am not saying you are wrong on the boot thing, just that it is nowhere near the norm.

I've had numerous issues with Quick Resume and standby in the past, so I don't use them. I keep my Series X in "power saving" mode, so it's a cold boot every time. My PC did take a long time to boot until I discovered the Memory Context Restore setting in the BIOS. For some reason it was relearning the memory timings on each boot without that setting forced on.

I miss Windows 7 so much, bros.

F Windows 7, it took too much away from what Windows Vista could do, on a personal level, and I wish I could still use Windows Vista today.

My PC boots faster than my PS5 so I don’t think that’s overly difficult.

Everyone's use case is different. I don't recall missing anything when I moved from Vista to 7, but I also only used Vista for a few weeks before upgrading to 7. I was a longtime XP holdout.

I bought Vista Business only a few weeks before 7 was launched, and it came with a free 7 Pro upgrade.

My recollection was that 7 was essentially just a reskin of Vista SP1, and that not much had changed.

I'm curious, what did you miss?

It has been a while, so I only know there were a number of things. The biggest I recall is when they removed the quick boot function. If you recall, this was during the HDD era, when people were complaining that their hard drive was thrashing with Vista. It was, in fact, loading all the programs in use so that they would load fast, and they did. Whereas with Windows 7 they essentially removed it, although some would say neutered it, since it was useless to load the programs in the background many minutes after the computer booted up.

This is because Microsoft is still trying to monetize Windows, since they realize nobody wants to pay $100+ for an OS with minor changes. They can't even give away the OS at this point. The only source of income for Microsoft from Windows is through OEMs. Since Microsoft is a publicly traded company that only has to answer to shareholders, they will find new stupid ways to make money. After Windows 7, Microsoft wanted to get into the tablet market with Windows 8, which pushed desktop users to use a tablet UI. That didn't work, because Android is open source and therefore free, and Apple never charges a direct fee for iOS and macOS. So with Windows 10, Microsoft for the first time didn't charge anyone any money to upgrade. The catch is that Windows 10 is full of data collection that cannot be easily turned off. This was so lucrative that Microsoft forced some Windows 7 and 8 users to upgrade without their consent. Now we have Windows 11, which requires TPM 2.0 and Secure Boot. The purpose of this is so Microsoft can push their form of DRM, enforced by TPM, to software developers to encourage them to sell their software on the Microsoft Store.

At no point have there been any improvements to Windows since Windows 7 beyond security updates and Microsoft locking DX12 to Windows 10, unless you happen to be playing World of Warcraft, where DX12 is allowed on Windows 7. Things like Auto HDR are not going to cut it, especially when most people don't have an HDR monitor.

Windows 7 is just Vista with all the updates included. Windows 7 was more of a marketing ploy to get everyone on board without the tainted name of Vista. It was such a problem to get people onto Vista that Microsoft included a copy of Windows XP in Windows 7.

For my personal experience only, they removed a lot of features that I used daily. Also, I preferred the Vista look, but oh well.

That, and the aforementioned critical mass of Libre (or other open) office document formats, because they are never going to be perfectly compatible with MS Office, and people tend to have poor tolerance for their documents rendering differently on different machines.

I'm gonna take it you know LibreOffice and OpenOffice let you save to MS formats in the save dialog. It's too bad they don't by default, but usually after the first time you change it, it stays on whatever format you chose last. But I'm sure trying to explain that to most normies out there is like trying to teach them rocket science.
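For a pile of existing documents, the same "save as MS format" step can be scripted instead of clicked through. A minimal Python sketch, assuming LibreOffice's `soffice` binary is on your PATH and using its headless `--convert-to` mode; the function names and the sample file name are made up for illustration, and the compatibility caveats raised above still apply to the output.

```python
import shutil
import subprocess
from pathlib import Path


def convert_command(source, target_format="docx", outdir="."):
    """Build the LibreOffice command line for a headless conversion."""
    return [
        "soffice", "--headless",
        "--convert-to", target_format,
        "--outdir", str(outdir),
        str(Path(source)),
    ]


def convert(source, target_format="docx", outdir="."):
    """Run the conversion, failing early if soffice is not installed."""
    if shutil.which("soffice") is None:
        raise RuntimeError("soffice not found on PATH")
    subprocess.run(convert_command(source, target_format, outdir), check=True)


if __name__ == "__main__":
    # Hypothetical file name; prints the command rather than running it.
    print(" ".join(convert_command("report.odt", "docx", "converted")))
```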

Your point was that if something happened to the computer and you couldn't access the drive, you were screwed.

My point was: why would I choose to use a system where I'd lose access to the data even if there is nothing wrong with the storage itself?

This problem is alleviated by having backups.

A problem you wouldn't have without Apple.

If your hard drive failed in a Windows computer, you would also be screwed if you didn't have backups.

But we are not talking about the hard drive failing, are we? You are moving the goalposts. We were talking about the exact opposite, where nothing is wrong with the drive and the issue is with the computer.

Yes, Apple having everything soldered to the motherboard is annoying. But your drastic scenario is simply eliminated by having backups.

No, it is not eliminated. It is much more straightforward to pull the drive and put it into another computer of the same type. Or better yet, just replace the actual hardware that failed in the system and you don't even have to pull drives.

I didn't say what Apple is doing doesn't matter cuz backups. I'm saying your scenario is unrealistic unless you willingly choose not to have a backup plan.

I never said you shouldn't have backups.

Nonsense. It's not impossible. It sounds like you just need a backup method that involves Thunderbolt 4 and NVMe drives. I work with massive data files as well. I'm not going to use that as an excuse to have a crappy backup plan or, even worse, no backup plan at all. Your hard drive(s) could die at any moment. What would your excuse be if you simply lost everything because you think that backing up is impossible?

You literally know nothing of my backup methods, but instead of asking you paint a completely ludicrous image.

There is mirroring software for both Mac and PC. If you had a proper backup method, you wouldn't need to do anything from scratch, as most modern solutions allow for "metal to metal" backup restoration, meaning if you had to get a completely different computer than what you are using now, you could just restore everything from the most recent backup with all your apps, files, and settings being exactly as they were.

Why would I wait hours for a restoration from a backup when I can just pull the drive and plug it into another system in 5 minutes?

That’s irresponsible… Who keeps 100’s of GB on their active system in a working folder set? I can understand 100’s of GB, hell, dozens of TB in a project, but in an active set that’s asking for a bad time.

If a project is multiple TBs, then you must keep it all in the working set. Guess you don't understand that when I say I'm working with large datasets, I mean it.

That should live in a NAS and you check the work in and out, otherwise you are just asking for a bad day.

The projects are backed up on a NAS, plus offline copies as well. But it is not practical to access it from there.

And if you run local encryption, as is recommended by just about every community, then you can’t just pick up and move your storage drive between devices.

The data is virtually worthless to anyone else, so encryption would only make my life harder. Local encryption won't stop a ransomware attack.

So even my basic bitch Windows workstation, take that M.2 out to another machine or a USB enclosure and good luck.

Stop trying to downplay the issue. Especially considering that most Apple users have zero backups.

The backup argument is completely irrelevant. It is Apple breaking people's shins, but instead of condemning them your position is that it's your fault for not wearing shin guards!

This is a whole lot of words to say a whole lot of nothing.

always is...

I have to disagree with the part about most Apple users having zero backups. Apple nags the shit out of you to enable iCloud sync for your home folder, and it also gives you frequent notifications about Time Machine if you aren't using it. It is very, very annoying. Just about every Apple desktop user I know just pays the $1.99 a month for the 100GB or so of iCloud storage to make that go away, so they're no longer receiving the nagging messages about iCloud sync and Time Machine sync, and then come the "your iCloud is full" messages they get after enabling iCloud sync and Time Machine to said iCloud.

Yup. Every time I connect an external HDD/SSD or a big enough USB drive, it's buggin' me to turn it into a Time Machine drive. At least we already have iCloud storage, so it doesn't bug me about that.