TaintedSquirrel

[H]F Junkie

- Joined

- Aug 5, 2013

- Messages

- 12,739


I don't get it. AMD touted a partnership with Watch Dogs 2 8-9 months ago according to that tweet, and then went quiet for 8 months while Ubisoft and Nvidia released a trailer and touted their partnership. I guess if you were making the point that AMD can't compete with Nvidia on game development partnerships, you did a good job. But to bring up a tweet from 8 months ago simply to call someone wrong, when it's obvious Ubisoft decided to switch focus to Nvidia, is pretty...petty? I don't know how to express this with the proper words...I mean, really? You bring up an 8-month-old tweet to call him "wrong" for what reason? Do you have more recent tweets? Did he tout AMD-Watch Dogs 2 cooperation when Nvidia released their trailer?

I don't get this. Did you want him to say, 4-5-6 months after that tweet, "AMD is no longer alongside Watch Dogs 2 development"? I guess if that's your point, to demand AMD cut off its own foot. But why do you have to call him wrong when the evidence seems pretty clear that Ubisoft switched to Nvidia? Did AMD tout it after the switch? Is that what you're calling out?

I mean you have plenty to slam the guy on, but this Watch Dogs 2 DX12 stuff is not one of them.

And those benchmarks aren't using the latest AMD driver.

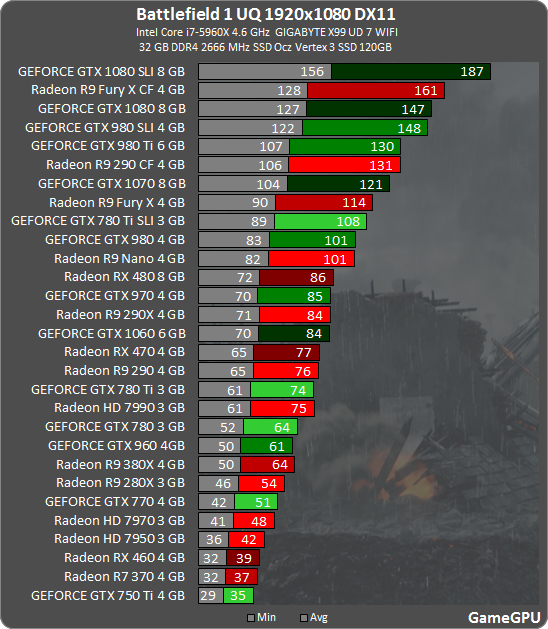

Should you play BF1 in DX11 or DX12?

One of the questions we wanted to ask, and answer, is if you should play BF1 in DX11 or DX12 for the best gameplay experience?

We have discovered that BF1 is highly optimized and runs well in DX11. Performance is very good on an AMD Radeon RX 480 and NVIDIA GeForce GTX 1060 in DX11. We can run the campaign mode at 1440p with the highest possible in-game settings on both video cards and still average above 60 FPS. At 1080p performance is well into the 90’s to 100’s, which is incredible. However, you must use DX11 to experience this bliss in gaming performance.

When switched to DX12, BF1 falls flat on its face. There is no performance improvement, and there are no visual quality improvements. In fact, there is a negative return on framerate. On both the AMD Radeon RX 480 and NVIDIA GeForce GTX 1060 the framerates go south and provide worse performance while gaming, at 1080p and 1440p.

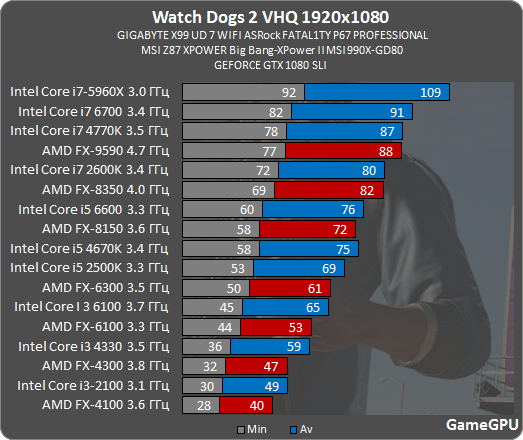

This is why I'm waiting for ZEN.

Well looks like the performance is pretty close to being the same to me. The CPU numbers are awesome also. I like the fact that the 5960X is putting in work!

I was impressed CF and SLI are actually working.

Can't blame tainted for holding a company to their word.

Well if you compare it to other enthusiast chips the picture probably won't be so pretty. The second best CPU on that list is a 6700, which is not an enthusiast chip, not even the high-end mainstream one.



They both do this though.

Look at Nvidia with Doom, presenting how great Vulkan was when they launched Pascal. Yet Doom had greater work done with AMD (in the Vulkan context), as they had the time and engagement with AMD to use the low-level GPUOpen extensions that greatly enhance performance (the same way Nvidia does similar with OpenGL, but not yet fully with Vulkan).

Regarding drivers, PCGamesHardware is using what seems to be the latest; 16.11.5 came out 28/11.

I tend to ignore GameGPU.

Cheers

AMD is notorious for their misleading marketing.

Just because it's been nearly a year doesn't mean they get a pass on this. That's just how I feel, though.

So is Nvidia, Gsync is the most overhyped pos user tax product I can think of, when it rarely works great but they never tested using it. Surprised they haven't had an article written on how bad it is.

Both do this nonsense

I bought a 1070 laptop believing it would be just like desktop as per all their marketing... Well it sucks really really bad.

I bet you bought an HP laptop. GSync is known to be broken on it. (Since you don't bother to address any comments made to you in other threads I'm dropping this here.)

Marketing and reality are never the same.

So true. Reminds me of that Premium VR experience one is supposed to get with a certain brand.


Yeah,

Quick update from them. Nice.

I'm glad you started this thread. Just the other day I came out of my house and saw AMD kick my dog really hard then run away. The other night I was pretty sure it was AMD that was hitting on my wife at the restaurant as well. I've also heard rumors that AMD is aligned with ISIS but nothing has been substantiated yet. I'm glad you can hold such petty grudge so long because we cannot let these atrocities continue. I've never heard of Marketing embellishing their products before so we MUST nip this in the bud...thank goodness for people like you.

Seems like you haven't really been paying attention to the shenanigans AMD marketing has been pulling lately.

Let's not even bring up that on the RX 480 box it says it is VR ready....when it sucks arse.

Couple on Kaveri and Carrizo. The BS is just staggering.

You mean companies hype their product to stupid levels instead of saying "meh, go buy an Nvidia card"? Colour me shocked...

Well it helps that on X99 both 1080s have a full x16 3.0 lane.



Nope, I was in the hospital. AMD cornered me and beat the tar out of me so I have been recuperating ever since.

Looks like my two 1070s will work well with this game; it helps that the game was given for free too with the 2nd 1070. That 9590 just keeps on giving and climbing that game ladder over time.

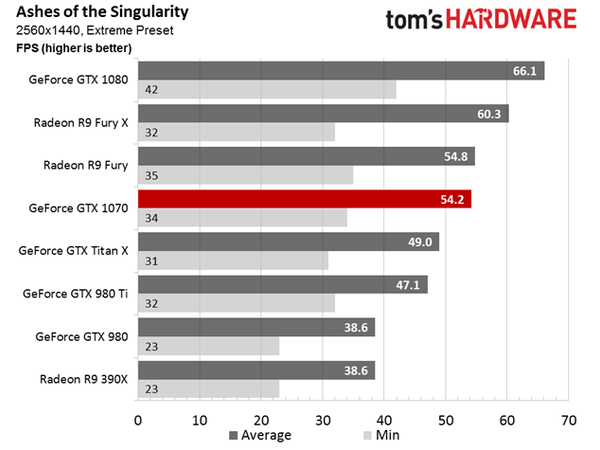

This is why I never take you seriously, a wolf in sheep's clothing if you will.

Also remember how often AMD and Roy went on about the DX12 performance advantage with AotS compared to Nvidia. Now that Nvidia is actually either equal or better (the 980ti outperforms the Fury X now, and the 1060 and 480 are pretty much equal, with just a slight lead for either depending upon resolution and whether it is the benchmark or in-game), they have moved on to other aspects. That is ironic, as this is still the only true full-performance DX12/async 'game benchmark' available (differentiating this from DX12 Time Spy, which is only a benchmark utility, or DX12 games that still do not match its complete development).

Examples where well monitored/benchmarked:

And amusingly notice that Fury X is actually ahead to the reference 980ti in DX11 but not DX12 with async compute

Ok yeah in theory Fury X may still be weaker due to the minimum frames.

Reference 1060 and 480.

Worth noting as well Nvidia's performance relatively improves in game compared to the benchmark albeit not dramatically, so these figures would subtly change again to Nvidia's favor as this is based upon the internal benchmark albeit with PresentMon.

So once it no longer suited their narrative that AoTS shows Nvidia is weak across the model range when using DX12/async compute compared to AMD models, they managed to sweep it under the carpet. If they had not made such a noise regarding AoTS performance and how weak Nvidia was, I would not have minded so much myself.

And yes before anyone singles out the 980 is still weak compared to say 390/390x in AoTS, context though was AMD used the game in general as a point to highlight weakness of Nvidia architecture and solution, when in reality it was nothing to do with the Nvidia's architecture/solution as to why AMD was so outperforming Nvidia back then in AoTS.

Cheers