I was just thinking. It would be nice if some of the top-tier motherboard makers sold models with a socket for the GPU chip and slots for the VRAM. Maybe it would need an EATX form factor. But it would make GPU upgrades simpler, since you could keep your old VRAM. Or you could get a less expensive GPU chip with more VRAM than you get with a "bundled" GPU chip and VRAM.

So why not motherboards with GPU (socket) and RAM slots?

- Thread starter philb2

- Start date

UnknownSouljer

[H]F Junkie

- Joined

- Sep 24, 2001

- Messages

- 9,041

This has been talked about a lot, and I’ve even commented on it multiple times. But the very short answer is that it would require standardizing GPUs, and for technical reasons that’s not really possible. Effectively, what you would have is a GPU socket that only supports a single GPU, and every time you wanted to upgrade that GPU you would have to move to another socket anyway.

The reason for this is that each GPU's pinout is unique to that GPU. Reference boards exist for partners in the first place because of this complexity (e.g. a 4090 board looks nothing like a 4060 board in terms of RAM, power delivery, or any other part of it). If it were as simple as slapping a GPU onto any PCB, a significant portion of the engineering cost of GPU boards would disappear, and obviously both AMD and Nvidia would already be doing that. Engineering and manufacturing costs would go down, so either margins would go up or they could make their cards significantly more price competitive. So if it were possible, they would be doing it.

A long time ago I had a friend who did technical documentation for Nvidia. The long and the short of it is that, even back then, the technical document for a single GPU would run well over 100 pages, and each individual GPU from Nvidia required a new one. The purpose of that document is to give board partners detailed information about how every pin and part of the GPU works, so that they can either use the reference board or design their own. In case it's not painfully obvious, even a single GPU is far from simple.

It would actually likely be significantly more expensive to have any form of socket for a GPU. The whole reason a GPU is placed on a PCI-E card in the first place is that the card is the replaceable part (just like AGP and PCI cards before it). Giving expansion cards removable parts of their own adds really significant complexity, whether you recognize it or not, and as I noted, for no real gain.

Honestly, I didn't know about that. The idea just popped into my head while I was stuck in traffic.

Your explanation shows me that not all ideas are worth doing, and some ideas may be completely impractical.

erek

[H]F Junkie

- Joined

- Dec 19, 2005

- Messages

- 10,929

I was just thinking. It would be nice if some of the top-tier motherboard makers sold models with a socket for the GPU chip and slots for the VRAM. Maybe it would need an EATX form factor. But it would make GPU upgrades simpler, since you could keep your old VRAM. Or you could get a less expensive GPU chip with more VRAM than you get with a "bundled" GPU chip and VRAM.

NamelessPFG

Gawd

- Joined

- Oct 16, 2016

- Messages

- 893

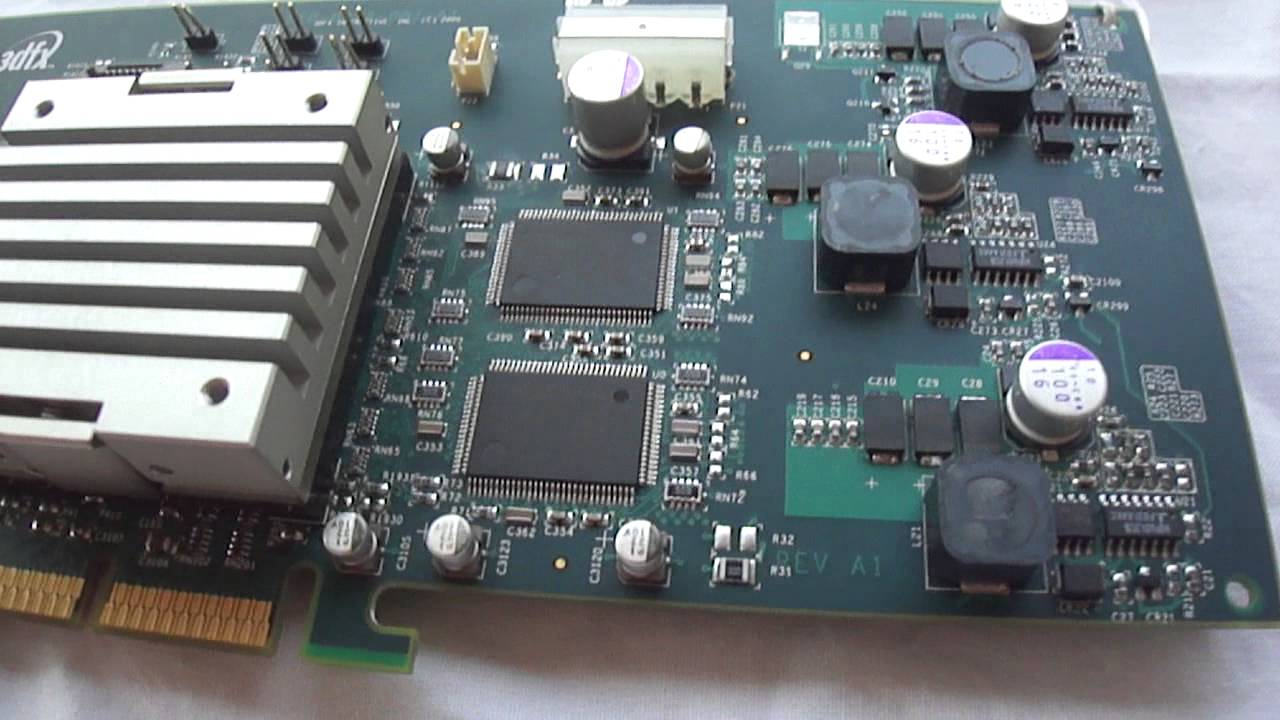

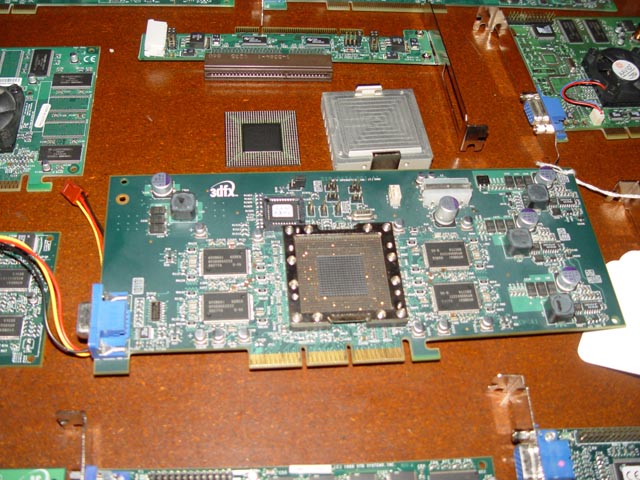

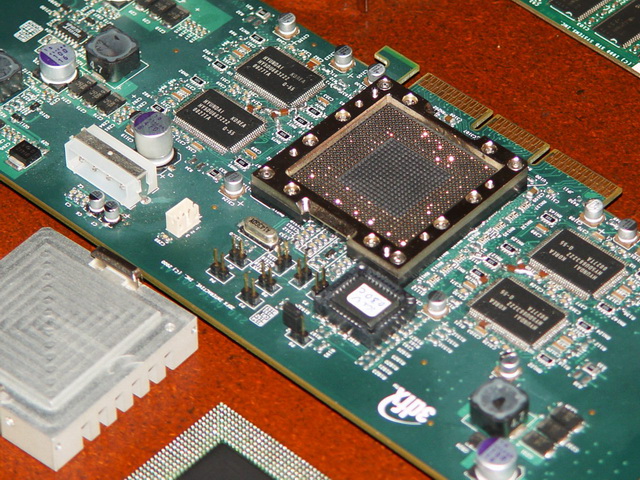

Believe it or not, really old graphics cards from the '90s used to have VRAM SIMMs or other upgrade modules, but that practice died out pretty quickly. Good luck finding VRAM SIMMs now!

Part of the problem with today's GPUs is similar to what laptops are experiencing with DDR5 and the need for CAMMs: the trace routing requirements for the memory bus are simply too tight for typical memory modules to be good enough. Consider the kind of bandwidth that GDDR6X has to handle right now, never mind GDDR7, and how all the chips are packed much more closely to the GPU than older GPU designs (taken to a logical extreme if HBM is used instead).
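To put rough numbers on that, here is a back-of-the-envelope sketch of peak memory bandwidth (bus width times per-pin data rate). The GDDR6X figures are the published RTX 4090 configuration (384-bit bus at 21 Gbit/s per pin); the dual-channel DDR5-5600 setup is only an assumed example of socketed desktop memory for comparison, and the function name is made up for illustration.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, gbit_per_pin: float) -> float:
    """Theoretical peak bandwidth in GB/s: data pins * per-pin rate / 8 bits per byte."""
    return bus_width_bits * gbit_per_pin / 8


# RTX 4090: 384-bit GDDR6X bus at 21 Gbit/s per pin.
gddr6x = peak_bandwidth_gb_s(384, 21.0)

# Assumed comparison point: dual-channel DDR5-5600 in DIMM slots,
# i.e. 128 data bits at 5.6 Gbit/s per pin.
ddr5 = peak_bandwidth_gb_s(128, 5.6)

print(f"GDDR6X: {gddr6x:.1f} GB/s")
print(f"DDR5:   {ddr5:.1f} GB/s")
print(f"Per-pin rate ratio: {21.0 / 5.6:.2f}x")
```

The per-pin ratio is the part that matters for sockets and slots: every individual trace has to carry several times the signaling rate of slotted system RAM, which is the kind of margin a connector eats into.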

PCIe 4.0 and especially 5.0 have much the same problem with signal integrity requirements on the traces, which is a big reason why motherboard prices are going up significantly for recent platforms.
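For a sense of scale, this is a quick sketch of how the per-lane signaling rate doubles each PCIe generation. The GT/s figures and the 128b/130b encoding are from the PCIe specs; the helper name is hypothetical, and the result ignores packet and protocol overhead, so real throughput is somewhat lower.

```python
# Per-lane raw signaling rates in GT/s, per the PCIe specifications.
PCIE_GT_S = {"3.0": 8.0, "4.0": 16.0, "5.0": 32.0}

# PCIe 3.0 through 5.0 use 128b/130b line coding (128 payload bits per 130 sent).
ENCODING = 128 / 130


def link_gb_s(gen: str, lanes: int = 16) -> float:
    """Approximate one-direction bandwidth in GB/s, before protocol overhead."""
    return PCIE_GT_S[gen] * ENCODING / 8 * lanes


for gen in PCIE_GT_S:
    print(f"PCIe {gen} x16: ~{link_gb_s(gen):.1f} GB/s")
```

Each doubling happens over the same slot and roughly the same trace lengths, so the board itself has to make up the difference.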

Speaking of laptops, MXM has died out as a standard, too. That doesn't mean replaceable GPU modules have died out entirely; HP has some weird proprietary format with a one-time-use "beam connector" (looks like what we'd typically call a compression connector, hopefully not as delicate as the SGI XIO variety) in the ZBook Fury G8s, and then there's the Framework 16 with its own new approach.

I know it sounds like it'd be a lot less material waste to cut the PCB out of the equation for a new GPU and its VRAM, but given the technical challenges, it's probably better for that stuff to have its own board with a tailored trace layout and power delivery/VRM setup, even if it does suck that everyone who wants to use full-cover waterblocks has to replace the block with every single new GPU.
