For all we know, though, their interest in GPU investment may just fizzle out entirely.

Why do you say that?

GPU prices — is the worst behind us ?

- Thread starter Marees

Because Intel has a record of entering new markets, not immediately becoming number 1, and then bailing the next time they need to trim costs.

Short-term thinking and/or failure to write a realistic business case. Did they have illusions (hallucinations? drug-induced ideas?) that they could beat Nvidia in the GPU market, or even AMD?

Accountants ran the company for over a decade. They left the mobile market because margins were less than 60%.

Never let accountants run a company.

No kidding. They sold off their ARM (XScale) business to Marvell in the mid-2000s or so, which was just crazy. I had an HP Pocket PC I bought around 2005 that was pretty impressive for its day (an hx4700, IIRC). They'd probably be giving Qualcomm some serious competition if they hadn't bailed.

My recommendation:

Grab the 6700xt/6750xt/6800 before they run out of stock !!

The Jornada and the iPaq were super useful units.

The iPaq hx4705 was like the Falcon Northwest of Pocket PCs.

I don’t know if that’s good or bad…

But from 2002-2007 I basically slept alongside my Pocket PC until I got a BlackBerry.

It was a pretty big step up from stuff like Dell Axims and most of the Compaq PPCs, but it cost a lot more. I just checked: 624 MHz Intel XScale, full VGA display, and generous RAM & flash.

pendragon1

OutOfPhase

Sorry to continue on a bit of a tangent, but, for my needs and sheer instant usability, I actually preferred my old PalmPilot to my iPaq, as cool as it was.

As for GPU prices: no, the worst is not behind us, at least if you want "big iron".

Current GPU perf/$ of cards in the $250 to $400 range:

7600 XT reviews consolidated by 3DCenter_org

https://old.reddit.com/r/hardware/comments/1aicvhe/amd_radeon_rx_7600_xt_meta_review/

https://m-3dcenter-org.translate.go...0-xt?_x_tr_sl=auto&_x_tr_tl=en&_x_tr_hl=en-GB

For what it's worth, they quietly released the 3050 6GB.

And with that, the 1660 series has finally been retired.

My recommendation:

Grab the 6700xt/6750xt/6800 before they run out of stock !!

For cheap gaming? RDNA2 cards suck.

kirbyrj

For cheap gaming? RDNA2 cards suck.

This is beyond ignorant.

OutOfPhase

Could you elaborate? They seem pretty solid for "cheap gaming".

Yes, only gaming, though. I think value is when they're good for 'more' or 'everything.' That's just my opinion, though.

When I was researching used cards, I did look at the RDNA 2 GPUs. The higher-end 6900 XT/6950 XT also have transient spikes and high power consumption during video playback, and they don't have the DLSS/RT blah, blah like the Nvidia GPUs. They only have FSR, and I thought that was inferior? Also, they don't have AV1 encoding. Plus, they're still pretty expensive in my country; some of them cost as much as or more than used 3080s here.

Their performance in Blender, video editing, ML/AI is pretty bland...

OutOfPhase

It seems like it comes down to use case. What do you do most of the day, and what matters to you on the critical path?

I would stand by my original assessment: for most gaming, and doubly so for "cheap gaming", RDNA2 cards are really pretty great. FSR is improving (a LOT, from what I've seen), and we can each dial in our personal quality preference there.

If you're running Blender, or video editing, or ML - things could change. I personally have no experience there.

Also, if you're like me and tweak out on visuals, I personally want better ray tracing performance. But I am not doing cheap gaming.

sfsuphysics

Yes, only gaming, though.

But that was literally what you wrote, and what you seem to refer to in your reply:

"For cheap gaming? RDNA2 cards suck."

Well, in the context of the statement:

My recommendation:

Grab the 6700xt/6750xt/6800 before they run out of stock !!

Then the question “For cheap gaming?” fits, because, as he explains later, for production loads they are somewhat subpar.

I was referring to gaming cards <= $400.

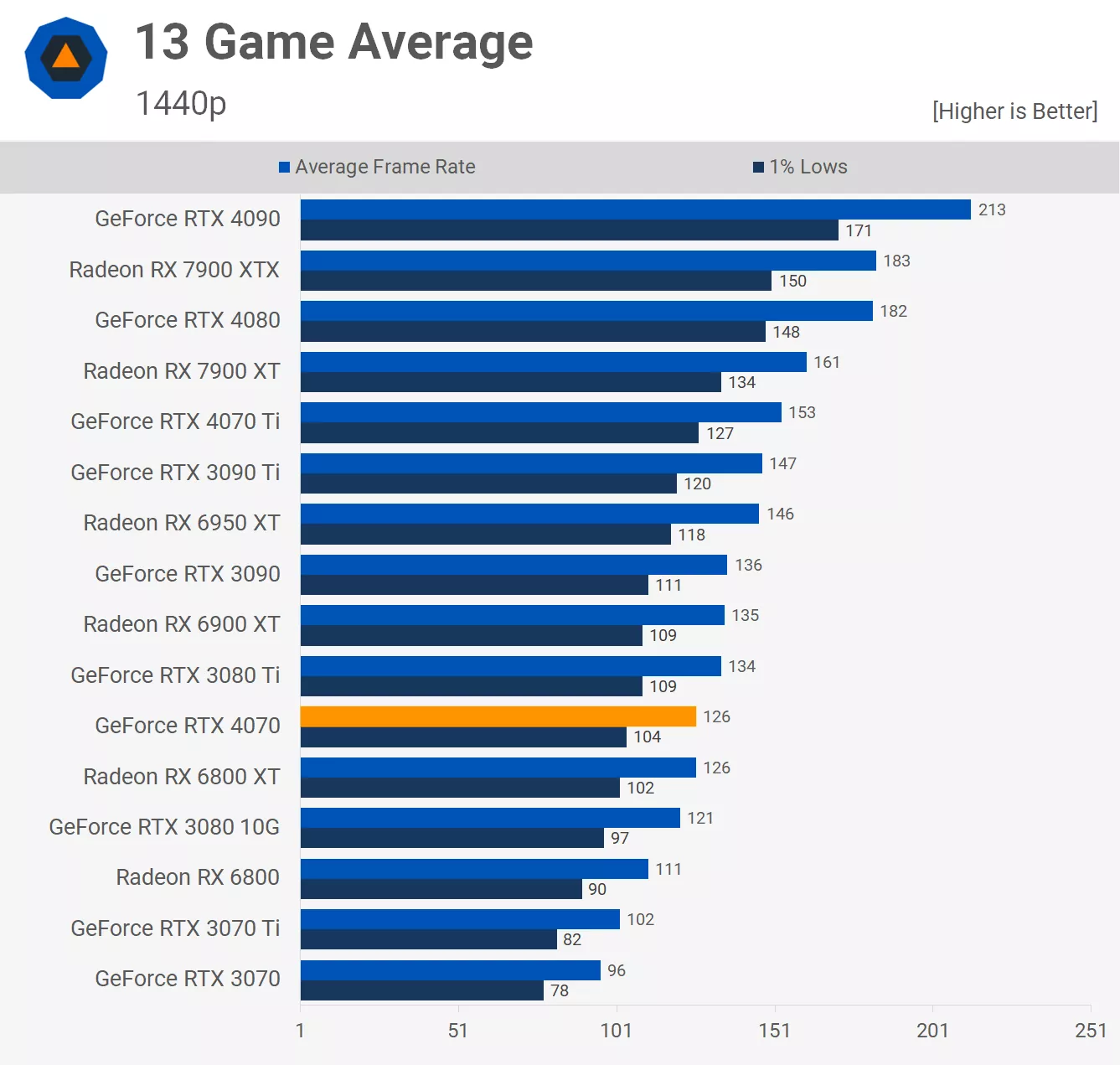

HUB Analysis on ideal GPU prices:

here's what the lineup should have looked like at launch, up to the mid-range:

- The RTX 4070 should have debuted at $500, matched by AMD with the 7900 XT.

- The 7800 XT should have been priced at $460, indicating it was better priced than most without being exceptional.

- Around $400, we should have seen the 7700 XT competing against the RTX 4060 Ti 16GB, with the Nvidia model priced at $370.

- Below that, at $300, there should have been a battle between the RTX 4060 Ti 8GB and RX 7600 XT, presenting an interesting choice between faster performance with less VRAM, and slower performance with more VRAM.

- At $250, the battle should have been between the RTX 4060 and RX 7600.

- Rounding it out, last-generation GPUs like the RX 6600 at $170 and the RTX 3050 6GB at $110 should have provided solid entry points to PC gaming.

Next reset could be around the launch of RDNA 4 (that could happen alongside FSR4 and the PS5 Pro launch, or in June/July if Lisa Su is in a more generous mood).

Source for the HUB analysis: https://www.techspot.com/article/2817-price-is-wrong-gpu-reality-check/#google_vignette

- 6400 XT: < $140

- 6600: $170

- 7600: $220

- 7600 XT: $280

- Navi 44 8GB: $300 (≈ 4060 Ti)

- 7700 XT: $350

- 7800 XT: $420-$450

- Navi 48 cut down: $480-$500

- Navi 48 full: $550-$600 (≈ 7900 XT/4070 Ti Super)

SPARTAN VI

Disagree with this. HUB's rationale is based on the combined RT and raster performance, a standard that they don't uniformly apply to the other tiers. Even their own data (albeit a bit old now) shows the 7900 XT is a closer match to the 4070 Ti. I can't entirely blame them given the RDNA 3 product stack has more holes than Swiss cheese, but it still feels like a misstep to drag the 7900 XT down that far.

Raster performance:

TechSpot didn't aggregate RT performance like they did with raster (above), but we see in their long form review that in 5 out of the 8 RT titles they tested, the 7900 XT was closer to the RTX 4070 Ti's performance.

The:

Feels almost like a typo. The 7900 XT is a "missed" (cut-down) top-of-the-line Navi 31 with 529 mm² of die, a card that can handle many titles at 4K, with around 40% faster raster than a 4070. It would have needed to drop really fast from $900, in a lose-face kind of way, and then how much could you charge for the XTX? $650 max would already be a lot, since the gap between the two is not that big.

Sure, it would be nice if a card that performs like a 7900 XT were not a 500+ mm², 320-bit part and were much cheaper to make, but considering the price it launched at, would they have been selling it at a loss at $500 in early 2023?

A 7800 XT at $460 would make little sense if $40 more gets you the 7900 XT: +30% performance and 4GB more VRAM for under 10% more money... Everything would need to go down more than that.
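The value argument above can be checked with a quick perf-per-dollar calculation. This is a minimal sketch that assumes HUB's suggested prices from the list earlier in the thread ($460 for the 7800 XT, $500 for the 7900 XT) and the roughly 30% performance gap claimed in the post; those relative-performance numbers are assumptions, not benchmark results, and the helper name is hypothetical.

```python
def perf_per_dollar(relative_perf: float, price_usd: float) -> float:
    """Relative performance points per dollar (higher is better)."""
    return relative_perf / price_usd


# Assumed inputs: (relative performance, price in USD).
# Prices are HUB's hypothetical "should have been" prices; the 1.30 is the
# ~30% gap claimed in the post, normalized to the 7800 XT = 1.00.
cards = {
    "RX 7800 XT": (1.00, 460.0),
    "RX 7900 XT": (1.30, 500.0),
}

base = perf_per_dollar(*cards["RX 7800 XT"])
for name, (perf, price) in cards.items():
    ratio = perf_per_dollar(perf, price) / base
    print(f"{name}: {ratio:.2f}x the 7800 XT's perf/$")

# How much more the 7900 XT costs, as a fraction of the 7800 XT price.
price_premium = cards["RX 7900 XT"][1] / cards["RX 7800 XT"][1] - 1
print(f"Price premium: {price_premium:.1%}")
```

Under those assumptions the 7900 XT comes out around 1.20x the perf/$ of the 7800 XT for an 8.7% higher price, which is the gap the post is pointing at.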

learners permit

Yeah, HUB was shitting on the 7900 GRE as well, disregarding it punching the 4070 in the face most of the time. Seems Steve is starting to swing over to the RT hype train.

Sir Beregond

I didn't watch whatever HUB video is being referenced here, but I would instead have liked to see the 4070 Ti release as the 4070, and I would have been OK with that at $600. It really is how each card got branded/priced that got screwed up (the 4080 12GB is probably what screwed up the 70 cards), but for all intents and purposes, the 4070 Ti had all the performance characteristics of what a base non-Ti 70-class card would be in any given gen, i.e. it matches the performance of the previous-gen flagship. So the sudden shift to make that a 4070 Ti, or even what they tried at first (the 4080 12GB), plus tacking on a price hike of $300+ over what the previous two 70 cards MSRP'd for in Ampere and Turing, was just a joke.

Yeah, HUB was shitting on the 7900 GRE as well, disregarding it punching the 4070 in the face most of the time. Seems Steve is starting to swing over to the RT hype train.

They have favored the Nvidia feature set for the past 2-3 years, mostly because of DLSS, less so RT. Given their history with Nvidia, especially when they got flak for not caring about RT during Turing, it is interesting to see the change. C'est la vie, I guess. They and GN went down a notch in my book after last year's drama over power cables and FSR exclusives.

crazycrave

I've been using that cheap gaming card with RDNA 2 that "sucks", and one thing it doesn't suck at is power, with its 100-watt cap.

Nvidia GPU prices rise in China

(Is this a result of the US ban???)

https://videocardz.com/newz/chinese-board-partners-are-adjusting-prices-for-several-rtx-30-40-models

Absalom

I didn't even know the 4090D was a thing.

My guess is some serious FUD going on, maybe a little bit to do with the recent earthquake in Taiwan? The US government has been barring Nvidia from selling these high-end 40-series cards to China for a year now, based on nothing but AI fearmongering. I'm guessing this is why the 4090D exists? The average price of a 4090 (non-D) in the States has been $1799 for months now. The 4090 FE has been a unicorn (sniped by bots at Best Buy) since it launched 18 months ago, and it is the only 4090 SKU holding its MSRP of $1599.

An inflation calculator says there's been about 6% cumulative USD inflation since Oct 2022, when the 40 series launched. So if Nvidia is guiding any price adjustment, they could make any number of excuses. Remember, if Nvidia charges OEMs more per chip, OEMs will typically raise their product prices to maintain their profit margin, whatever that may be.
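To put the inflation point in numbers, here is a minimal sketch that assumes the ~6% cumulative figure from the post and the 4090 prices cited just above ($1599 launch MSRP, ~$1799 average US street price). The helper name is hypothetical, for illustration only.

```python
def inflation_adjusted(price_usd: float, cumulative_inflation: float) -> float:
    """Scale a launch price by cumulative inflation (0.06 means 6%)."""
    return price_usd * (1.0 + cumulative_inflation)


MSRP_4090 = 1599.0            # launch MSRP cited in the post
STREET_4090 = 1799.0          # average US street price cited in the post
CUMULATIVE_INFLATION = 0.06   # ~6% since Oct 2022, per the post

adjusted = inflation_adjusted(MSRP_4090, CUMULATIVE_INFLATION)
street_premium = STREET_4090 / adjusted - 1.0

print(f"Inflation-adjusted MSRP: ${adjusted:,.2f}")
print(f"Street price vs adjusted MSRP: +{street_premium:.1%}")
```

On those numbers, inflation alone would take the $1599 MSRP to roughly $1695, so the ~$1799 street price still sits about 6% above an inflation-adjusted MSRP.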

Hopefully any incoming price increase is a China-only thing. In the Western world, we thought the 4070 Super should have launched with a $500 MSRP anyway.

There's also the conspiracy theory that Nvidia has slowed down production of the 40 series to create artificial demand. One thing that is evident, people will indeed pay any kind of premium for their products.

What's an audiance?

A typo.

GoldenTiger

In a nonsensical meme, at that. Also, it's kind of hard to typo when it's multiple keys away on the keyboard.

Camberwell

NVIDIA board partners expect GeForce RTX 5090 and RTX 5080 to launch in fourth quarter

https://videocardz.com/newz/nvidia-...5090-and-rtx-5080-to-launch-in-fourth-quarter
