erek

[H]F Junkie · Joined: Dec 19, 2005 · Messages: 10,923

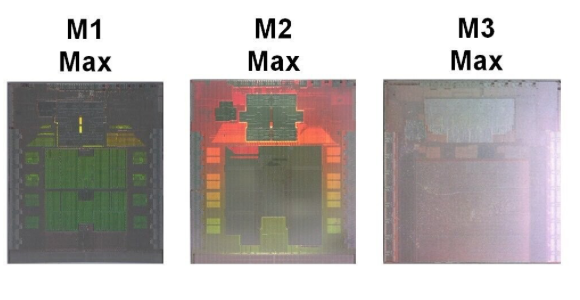

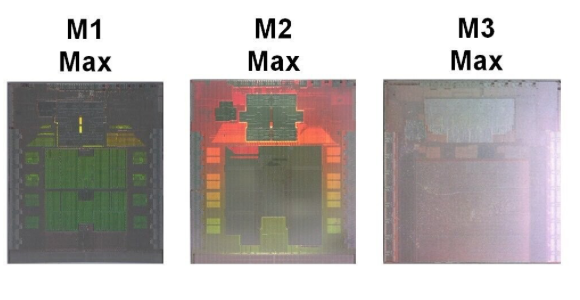

“While the absence of visible interconnect space on early die-shots is not conclusive evidence, as seen with the M1 Max not having visible UltraFusion interconnect and still being a part of M1 Ultra with UltraFusion, industry has led the speculation that the M3 Ultra may indeed feature a monolithic design. Considering that the M3 Max has 92 billion transistors and is estimated to have a die size between 600 and 700 mm², going Ultra with these chips may be pushing the manufacturing limit. Considering the maximum die size limit of 848 mm² for the TSMC N3B process used by Apple, there may not be sufficient space for a dual-chip M3 Ultra design. The potential shift to a monolithic design for the M3 Ultra raises questions about how Apple will scale the chip's performance without the UltraFusion interconnect. Competing solutions, such as NVIDIA's Blackwell GPU, use a high-bandwidth C2C interface to connect two 104 billion transistor chips, achieving a bandwidth of 10 TB/s. In comparison, the M2 Ultra's UltraFusion interconnect provided a bandwidth of 2.5 TB/s.”

Source: https://www.techpowerup.com/321129/...ithic-design-without-ultrafusion-interconnect
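
Quick back-of-envelope on the numbers in that quote, as a rough sketch only. It assumes a hypothetical monolithic Ultra would need about twice the M3 Max die area, which ignores any blocks that would not need duplicating. The 600 to 700 mm² range is the article's estimate, not a measured figure, and the helper names are made up for illustration.

```python
# Figures taken from the quoted article; the die-size range is an estimate.
M3_MAX_DIE_MM2 = (600, 700)        # estimated M3 Max die area, low/high
N3B_MAX_DIE_MM2 = 848              # max die size quoted for TSMC N3B
BLACKWELL_C2C_TBPS = 10.0          # NVIDIA Blackwell chip-to-chip bandwidth
ULTRAFUSION_M2_ULTRA_TBPS = 2.5    # M2 Ultra UltraFusion bandwidth


def doubled_area(range_mm2):
    """Naive assumption: an Ultra needs 2x the Max die area."""
    return tuple(2 * area for area in range_mm2)


def fits_single_die(area_mm2, limit_mm2=N3B_MAX_DIE_MM2):
    """True if the area is within the quoted single-die limit."""
    return area_mm2 <= limit_mm2


def max_scale_factor(area_mm2, limit_mm2=N3B_MAX_DIE_MM2):
    """How far one die of this size could grow before hitting the limit."""
    return limit_mm2 / area_mm2


if __name__ == "__main__":
    low, high = doubled_area(M3_MAX_DIE_MM2)
    print(f"2x M3 Max area: {low} to {high} mm^2")
    print(f"Fits under {N3B_MAX_DIE_MM2} mm^2? "
          f"{fits_single_die(low)} / {fits_single_die(high)}")
    for area in M3_MAX_DIE_MM2:
        print(f"{area} mm^2 die can grow about {max_scale_factor(area):.2f}x")
    ratio = BLACKWELL_C2C_TBPS / ULTRAFUSION_M2_ULTRA_TBPS
    print(f"Blackwell C2C vs M2 Ultra UltraFusion: {ratio:.1f}x")
```

On those numbers a straight doubling lands well past the quoted limit, so a monolithic part would have to be something other than two M3 Max dies' worth of silicon. That is just the arithmetic, not a claim about what Apple will actually ship.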

