Gideon

2[H]4U

- Joined

- Apr 13, 2006

- Messages

- 3,567

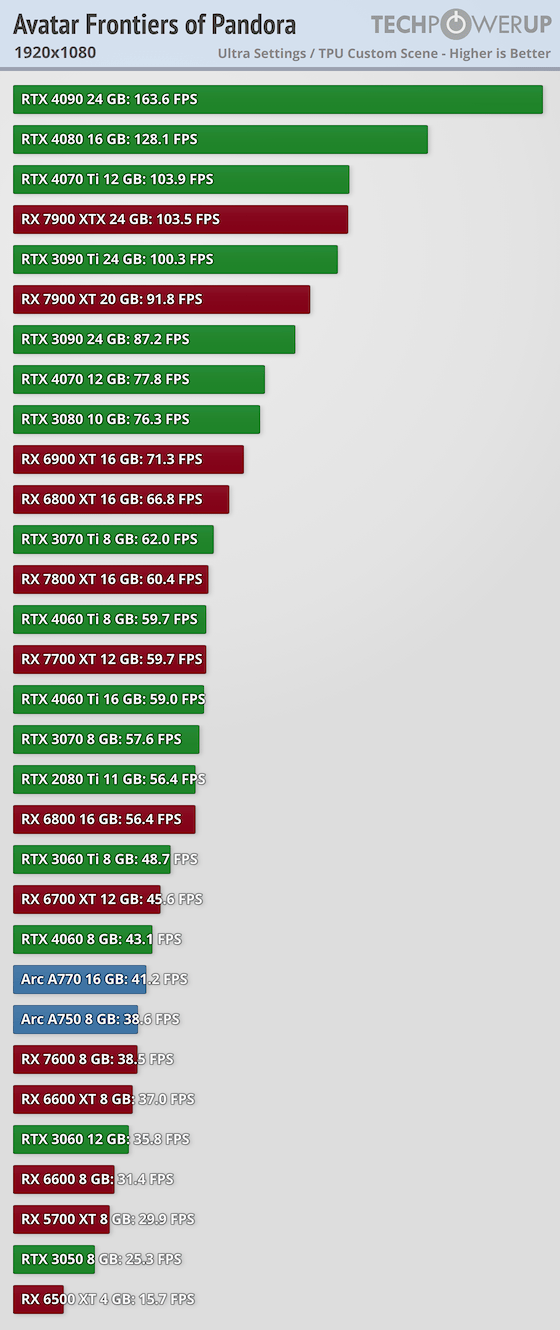

Not every card is going to cost over $1,000, though. The 5080 might see its price raised back up to $1,200 to match the 4080's launch price, but everything below it is going to cost less than $1,000. If Nvidia starts resting on its laurels, that only gives AMD an opportunity to take the performance crown, and I'm sure Mr. Leather Jacket would never allow that to happen.

I feel a 5080 priced over 1,000 bucks will be a dust collector for retailers, sort of like the current 4080. Honestly, I was a bit surprised by how well the 4090 sold, so that card may still do well at a higher price. Profit is far more important to Mr. Leather Jacket right now; otherwise the stock price might drop.
