MistaSparkul (2[H]4U, joined Jul 5, 2012, 3,524 messages)

And yet AMD STILL can't compete..

They are, just not at the top end. Not everyone is a 4090 class buyer.

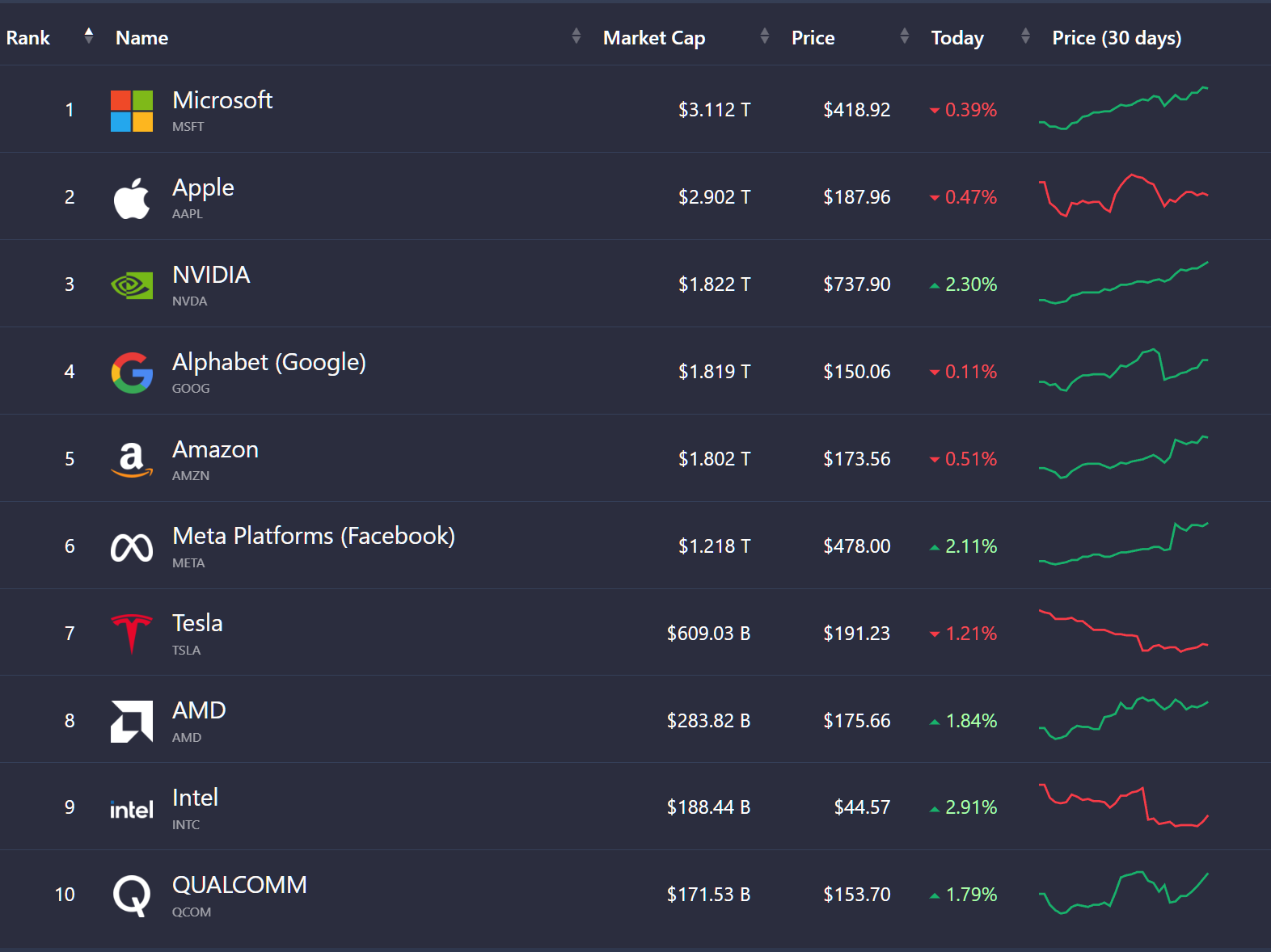

Not really. They're losing market share, throwing out a "7999.999XXXTXXX AWESOME FAST" card that competes with Nvidia's 4080 in raster and 4060 in RT.

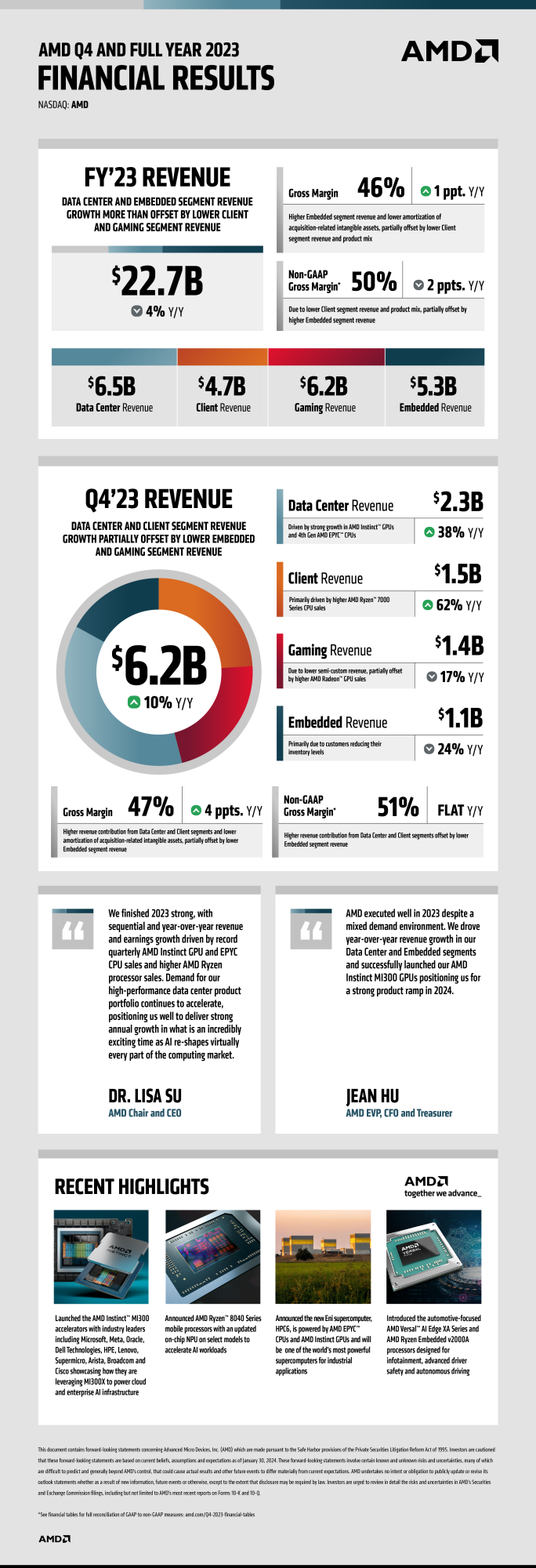

But right now? AMD can't. If they could whip up a 4090 killer they would have already done so. Agreed 100%.

They aren't leaving the high end by choice, no matter what they say. Profit per square millimetre of silicon at the high end is ridiculously high. They would compete there if they could.

I think it's a design choice, not incompetence. AD102 has 30% more transistors than Navi 31, and performs about 30% faster. AMD's wafer starts are even more strained than Nvidia's: they have to fab Epyc, Ryzen, Navi, and Instinct, parts with wildly different markets and margins, all at the same fab on the same node.

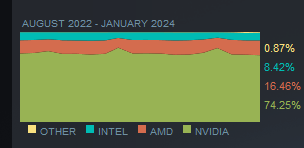

I heard some interesting stats yesterday from a Hardware Unboxed user survey about GPU market share.

I wasn't really referring to market share but to the actual products themselves. The products compete pretty well at their given price points (at least for raster performance, which is the majority of gaming), but it doesn't matter because people tend to buy Nvidia anyway. I've seen people choose a GTX 1050 over an RX 580 in the past despite the RX 580 being way faster at a similar price. Nowadays, though, GPU performance is more about raster, and AMD is quickly losing more and more ground in that aspect, so if they don't make some major changes then yeah, for sure they won't be able to compete at all.

They're targeting the 4070 Super Ti to 4030 range, which is more of a fight against Intel than Nvidia. Good thing Intel's struggling very badly at the moment and doesn't seem like they'll ever go back to the good ol' Sandy Bridge days again.