Zarathustra[H]

Extremely [H]

- Joined: Oct 29, 2000
- Messages: 38,878

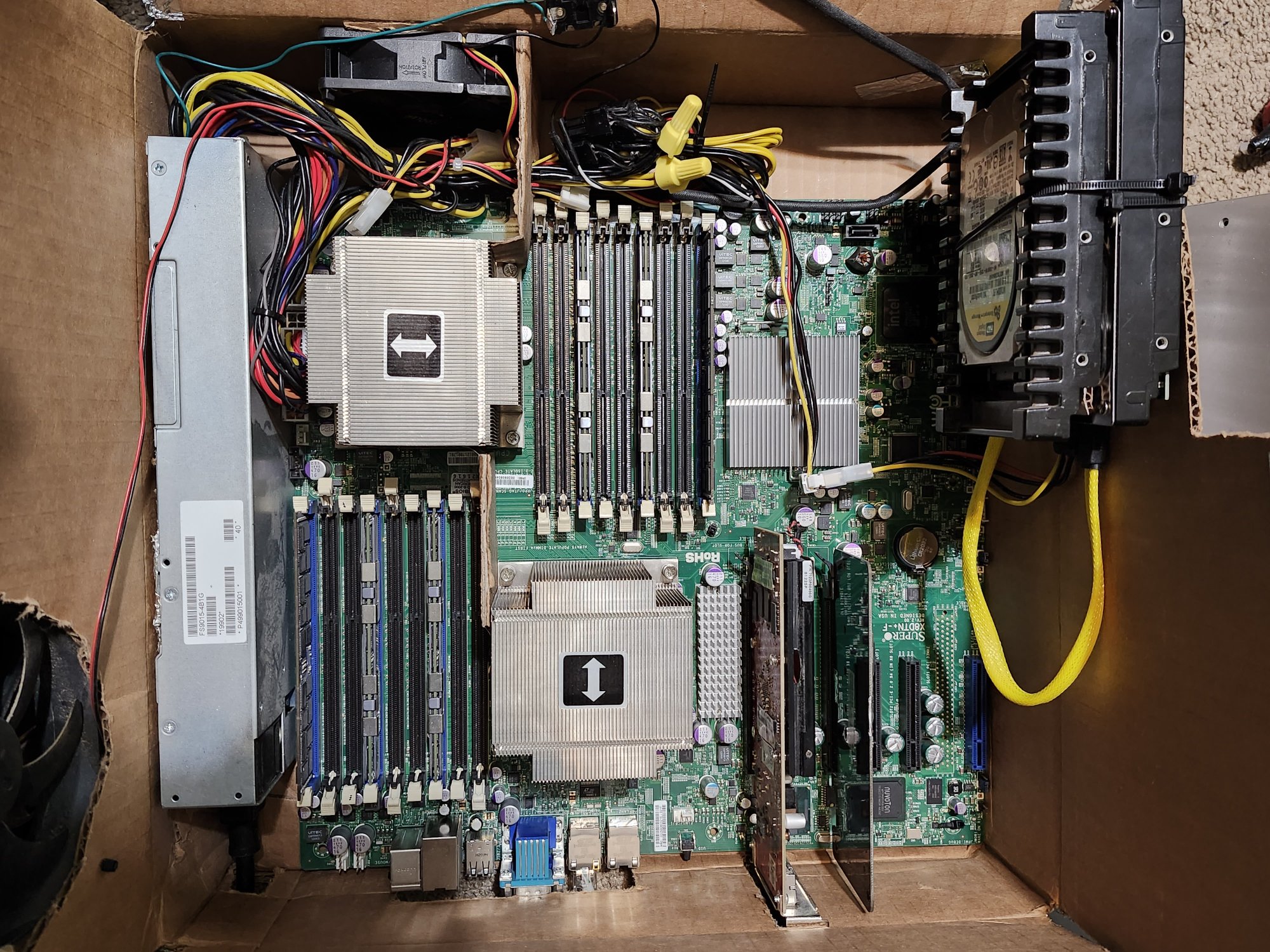

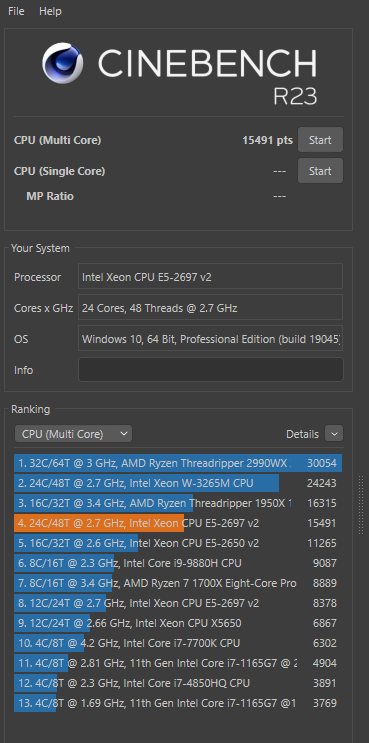

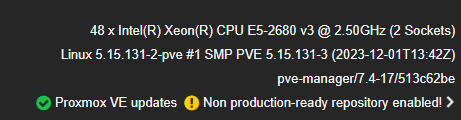

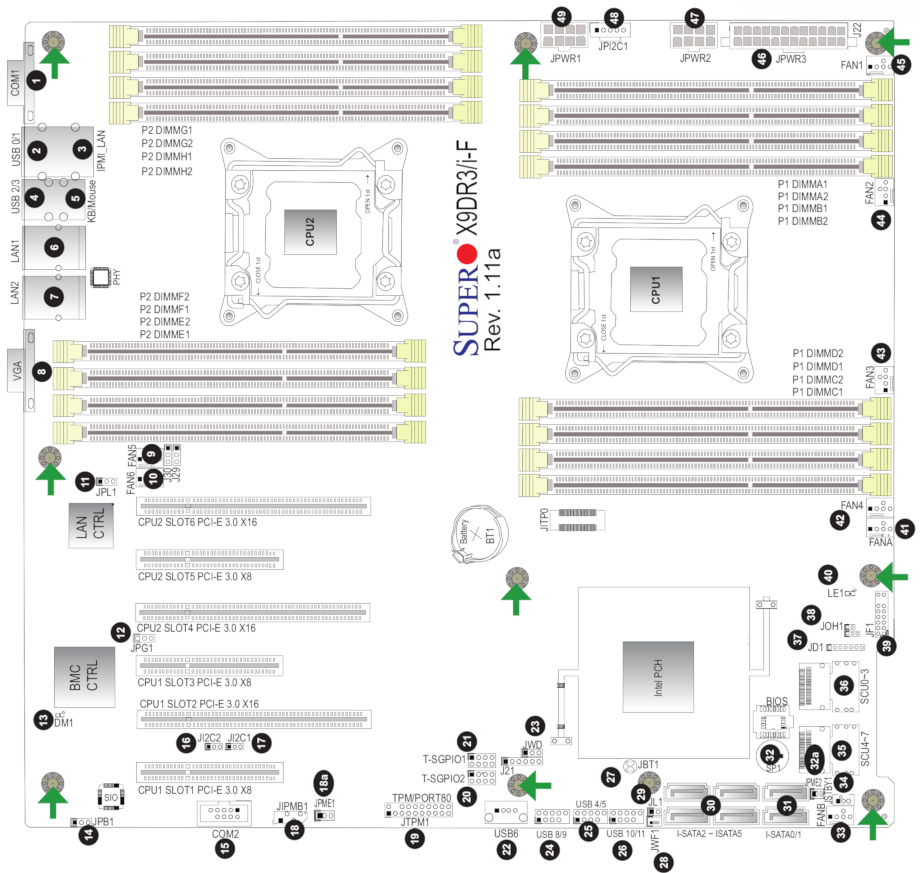

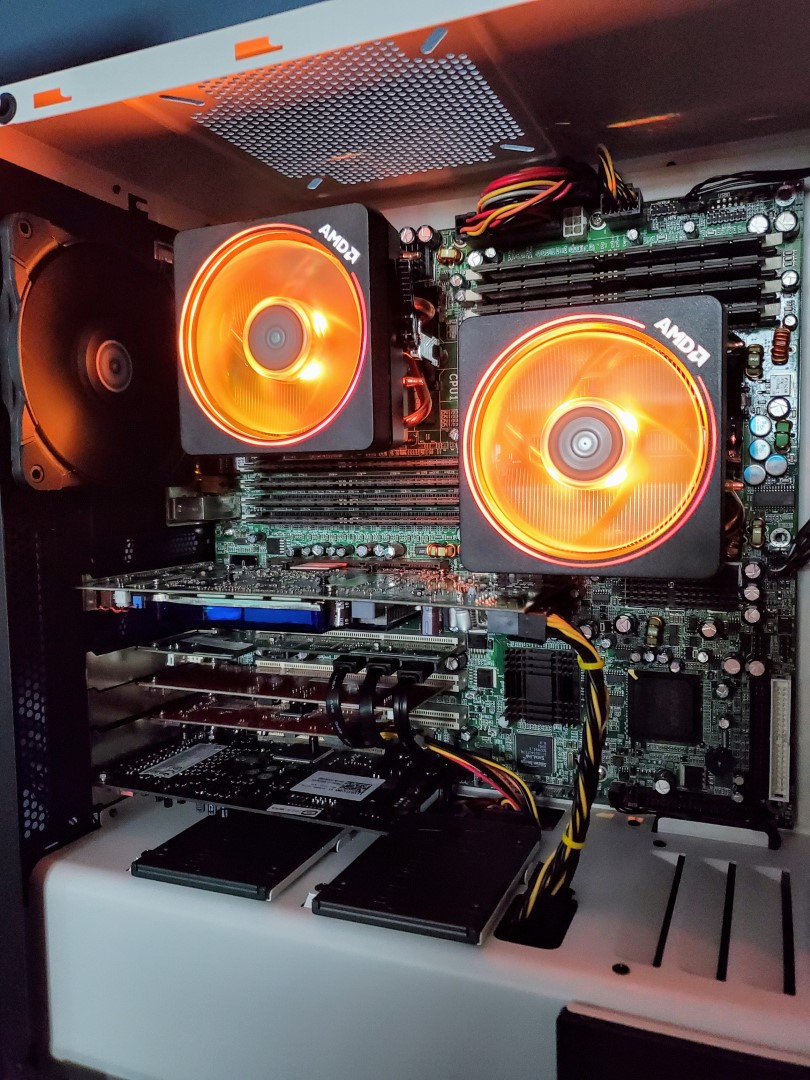

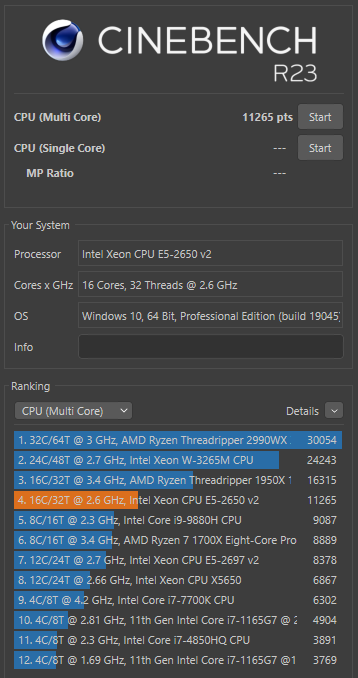

Two 8C/16T Ivy Bridge Xeon E5-2650 v2s with 256GB of ECC RAM. This would have been quite the workstation back when it was new.

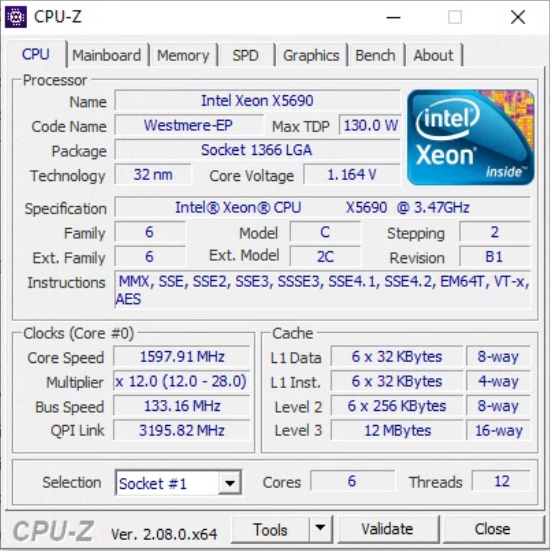

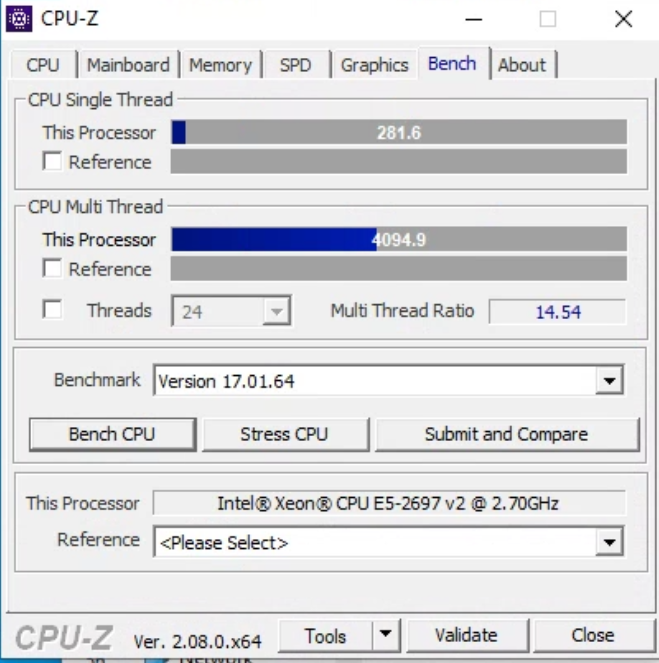

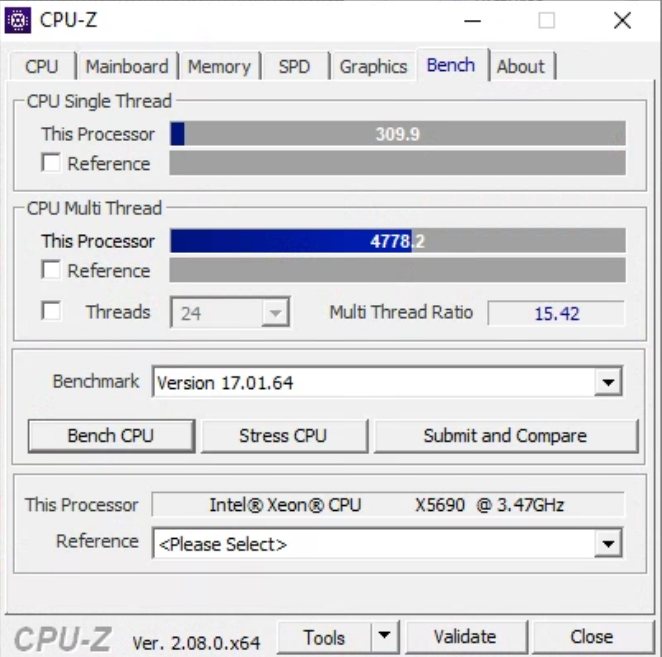

But just to illustrate how long ago that was:
