sabrewolf732

Supreme [H]ardness

- Joined: Dec 6, 2004
- Messages: 4,778

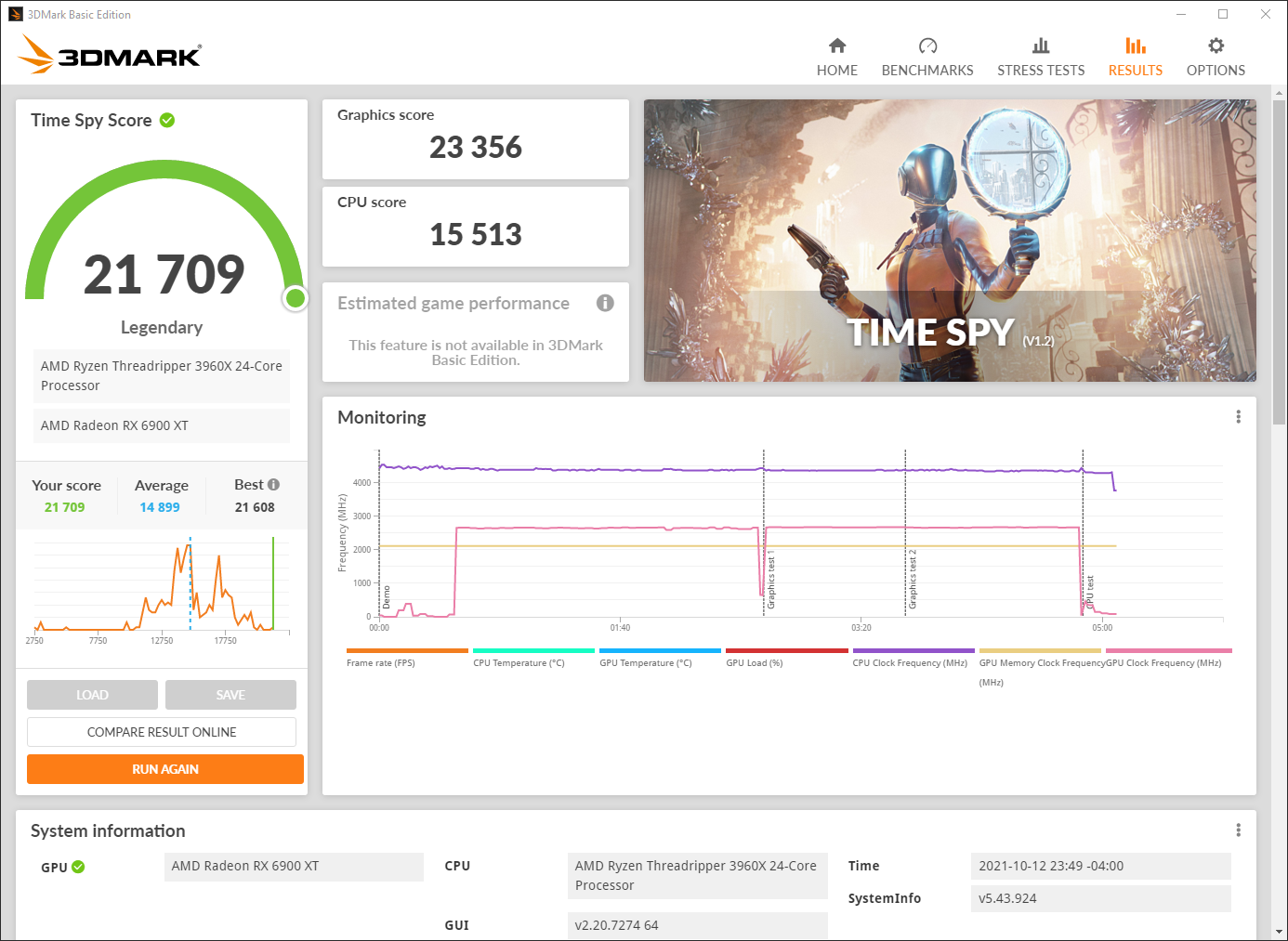

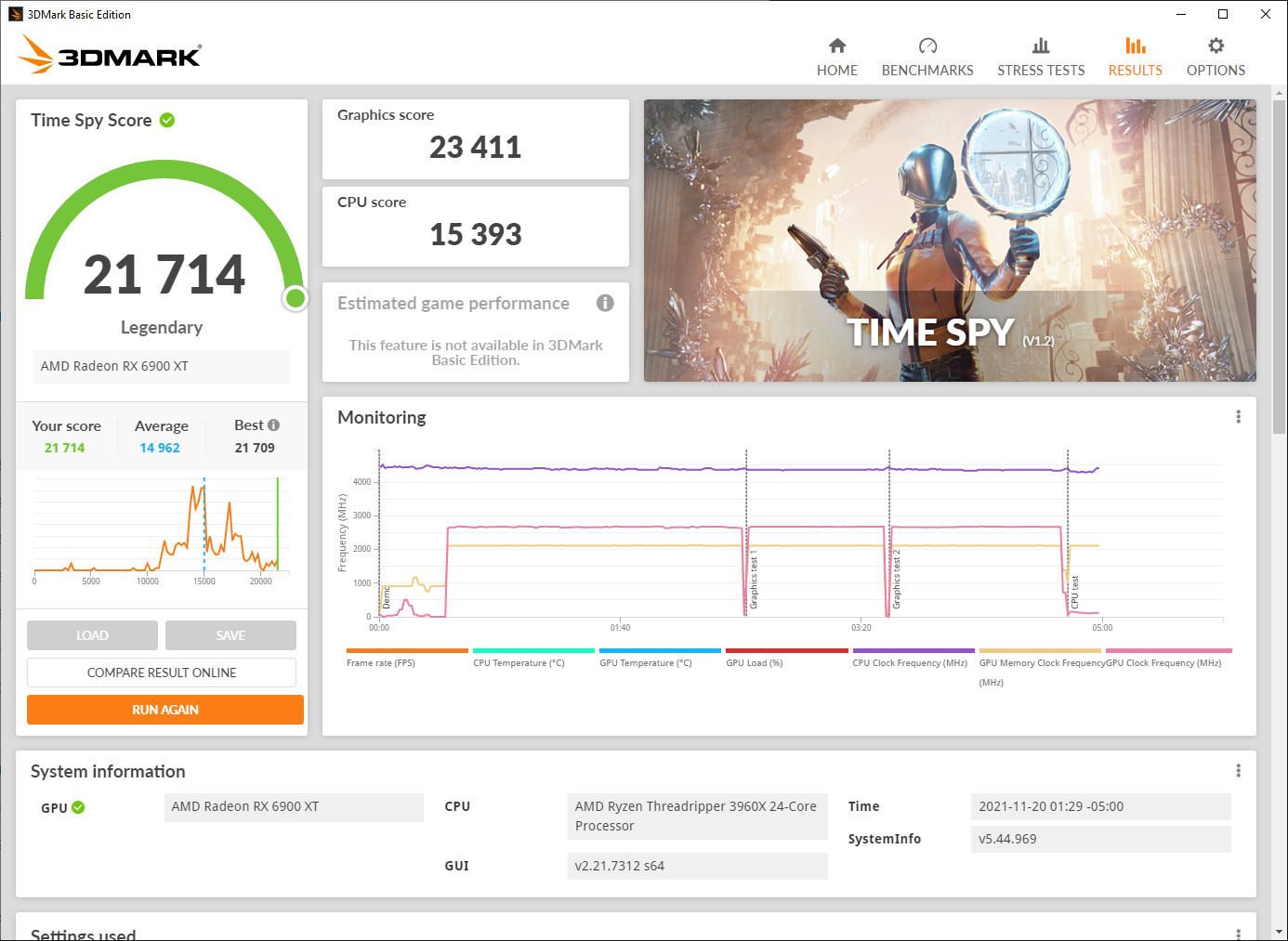

You might want to downclock your memory a bit. I'll post a screenshot when I get home, but I'm pretty sure I'm close to that on the reference cooler, except my clocks are lower.

I can play with it a bit later. From what I remember, the score decreases with lower memory clocks.
