Zarathustra[H]

Extremely [H]

- Joined: Oct 29, 2000
- Messages: 38,850

So,

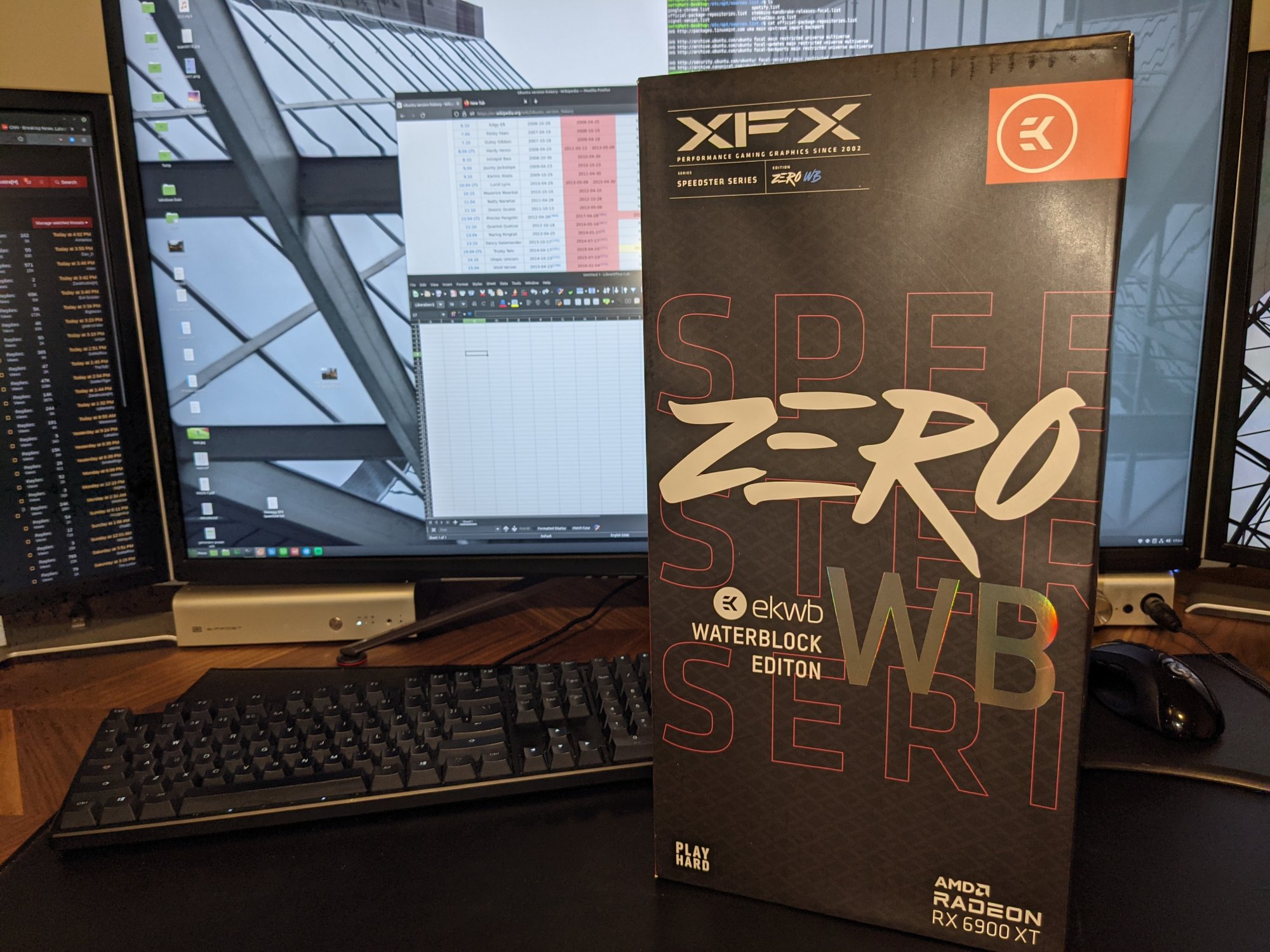

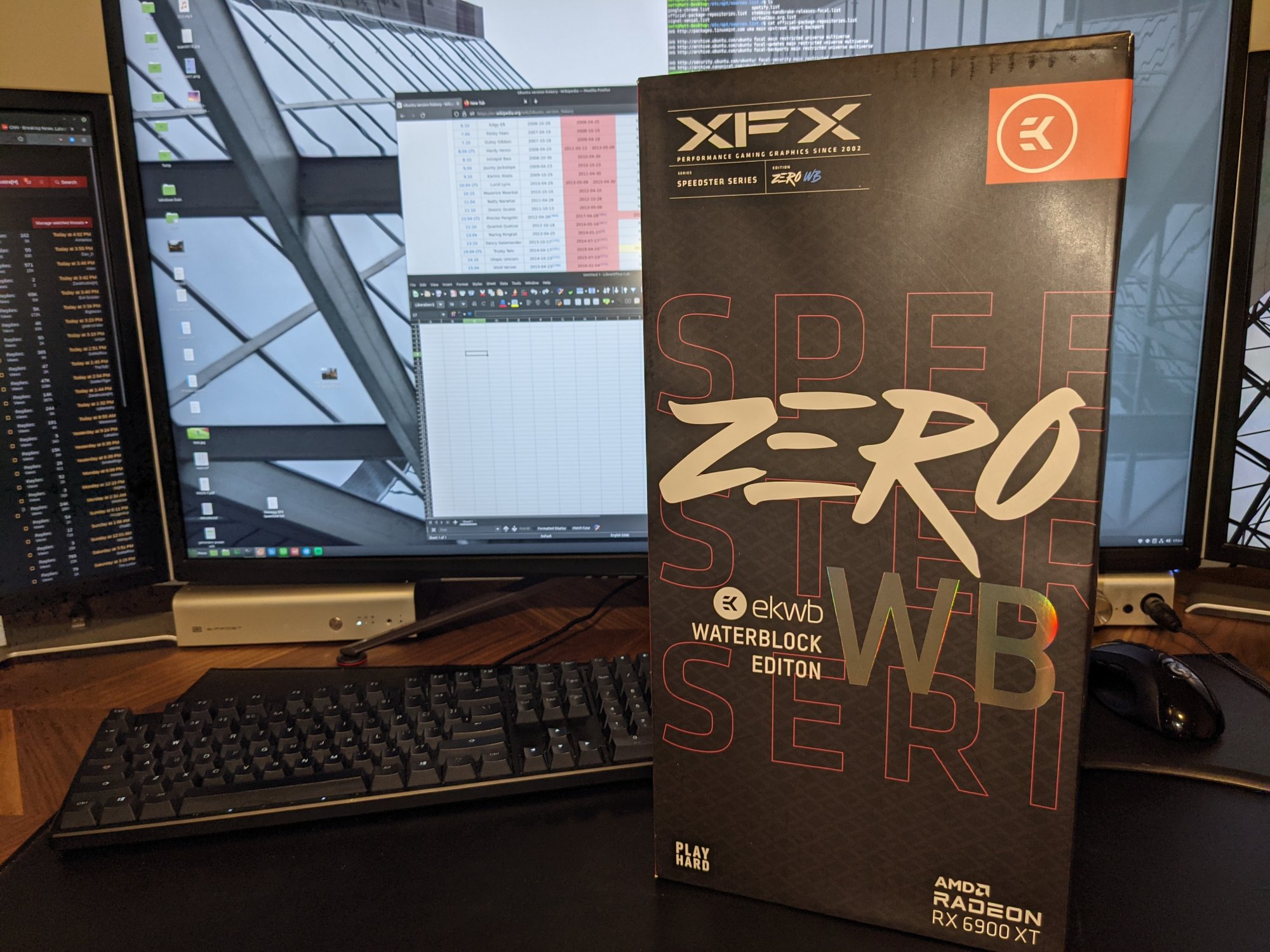

I read about this video card over at TheFPSReview.

I hadn't planned on going AMD this generation, but through some miracle of fortune this one was in stock at AIB MSRP of $1,799 over at Newegg, so after a few seconds of hesitation I bit.

I had really wanted to go with a 3090 or 3080 Ti this time around, but I wasn't willing to pay $3 grand for the 3090, and 3080 Ti availability was spotty at best.

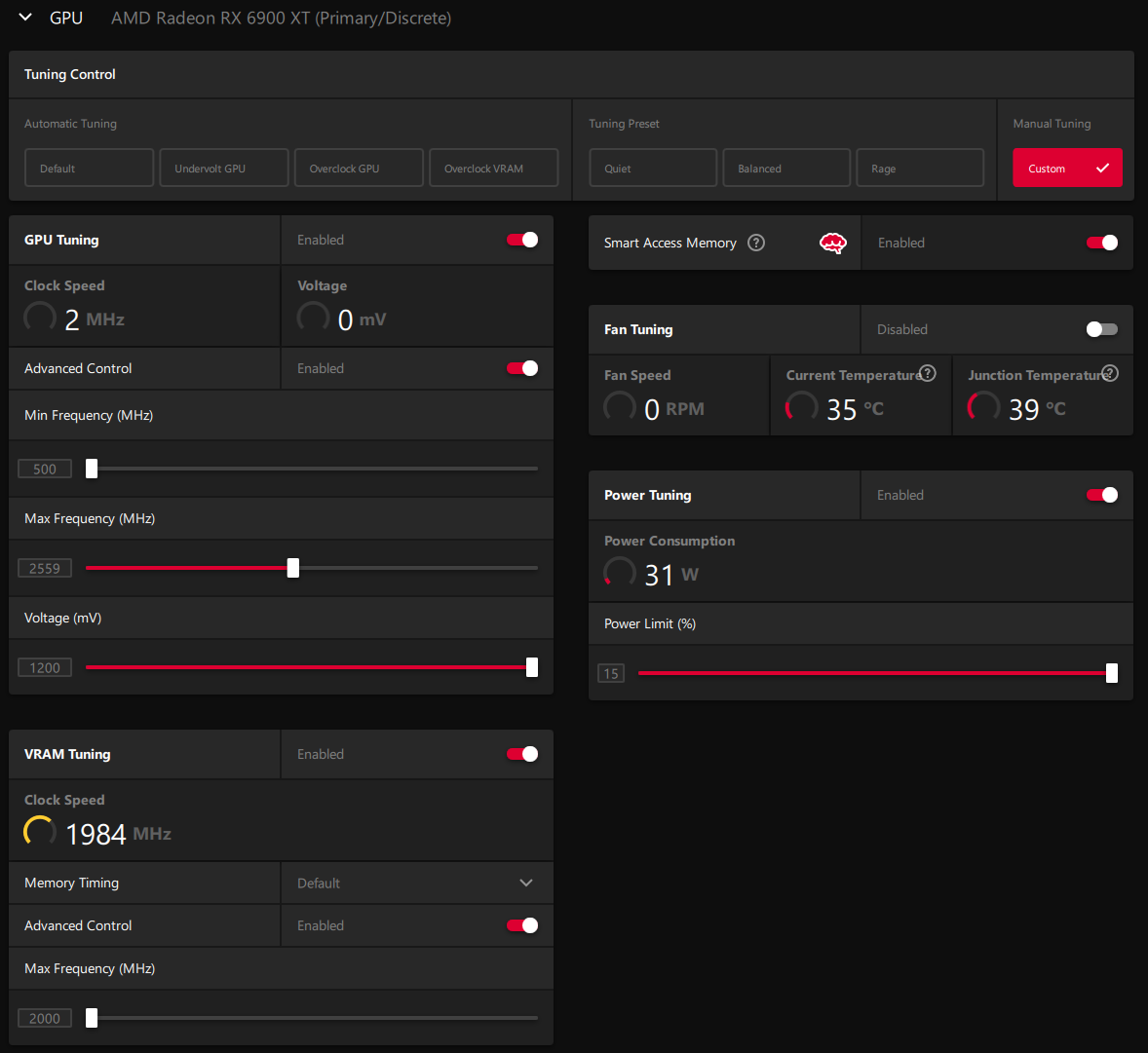

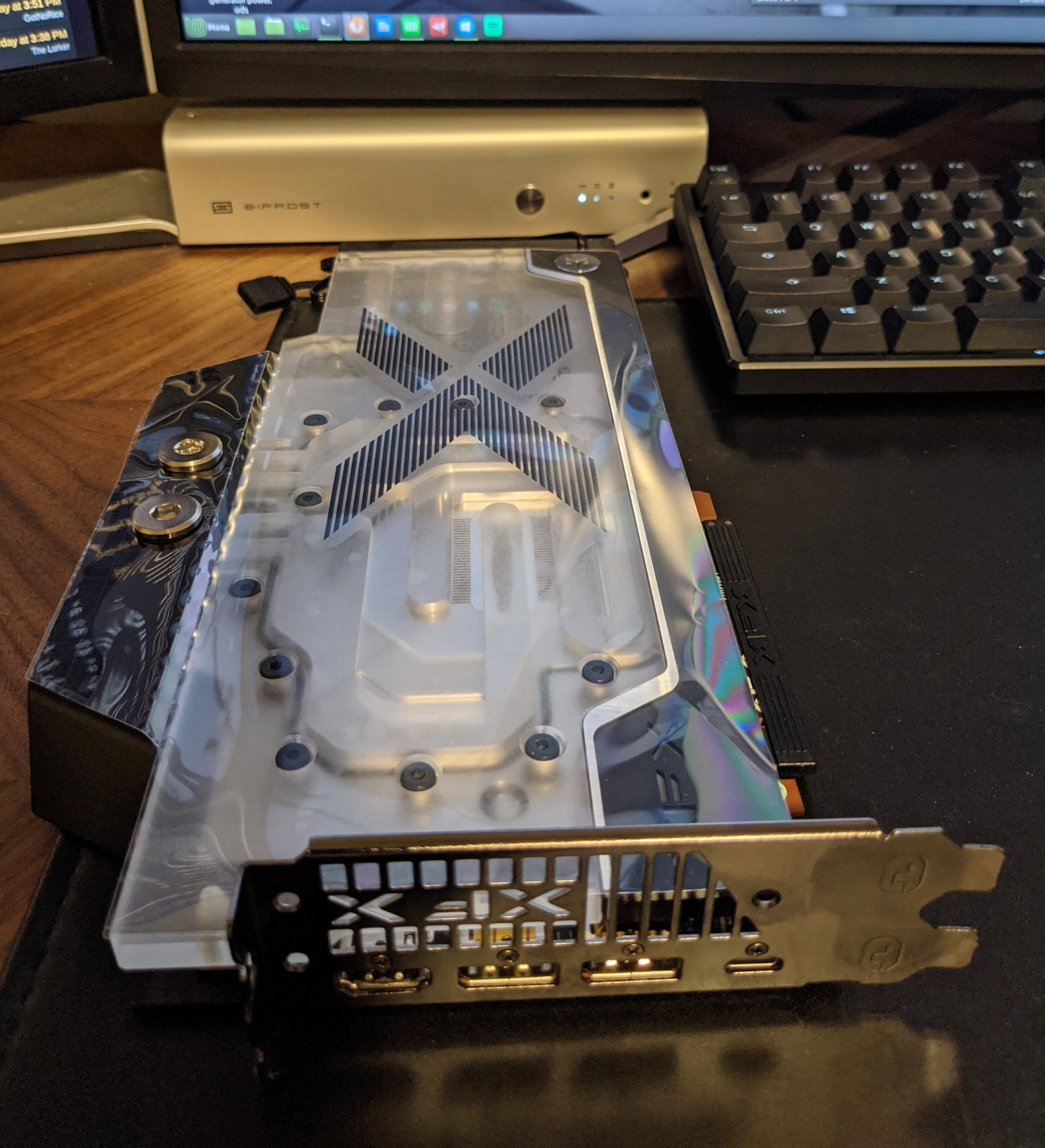

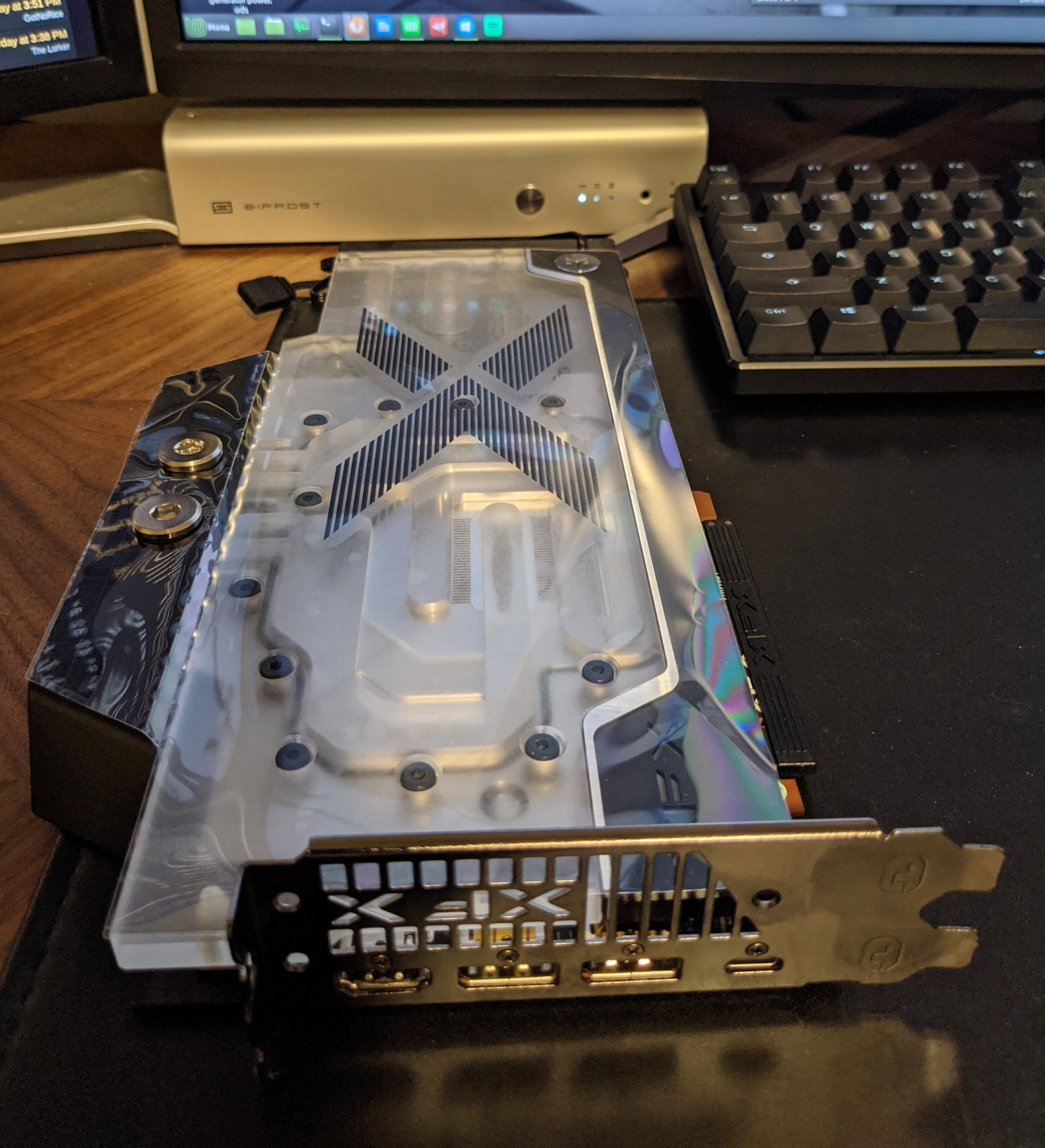

It comes with the specially binned XTX version of the 6900 XT, a totally reworked VRM/power stage optimized for water cooling, and three 8-pin power connectors, so hopefully it will make for a good overclocker. Buzz about the card suggests 3 GHz is possible. I figured if this thing can hit 3 GHz as has been suggested, it might even beat a 3090, at least if you don't factor in ray tracing.

So I bit.

And for some odd reason it is still in stock. Maybe there just aren't enough custom-loop builders among the miner crowd to make this fly off the shelves like the air-cooled models.

It arrived today in a much larger box than expected, and I figured I'd share some details.

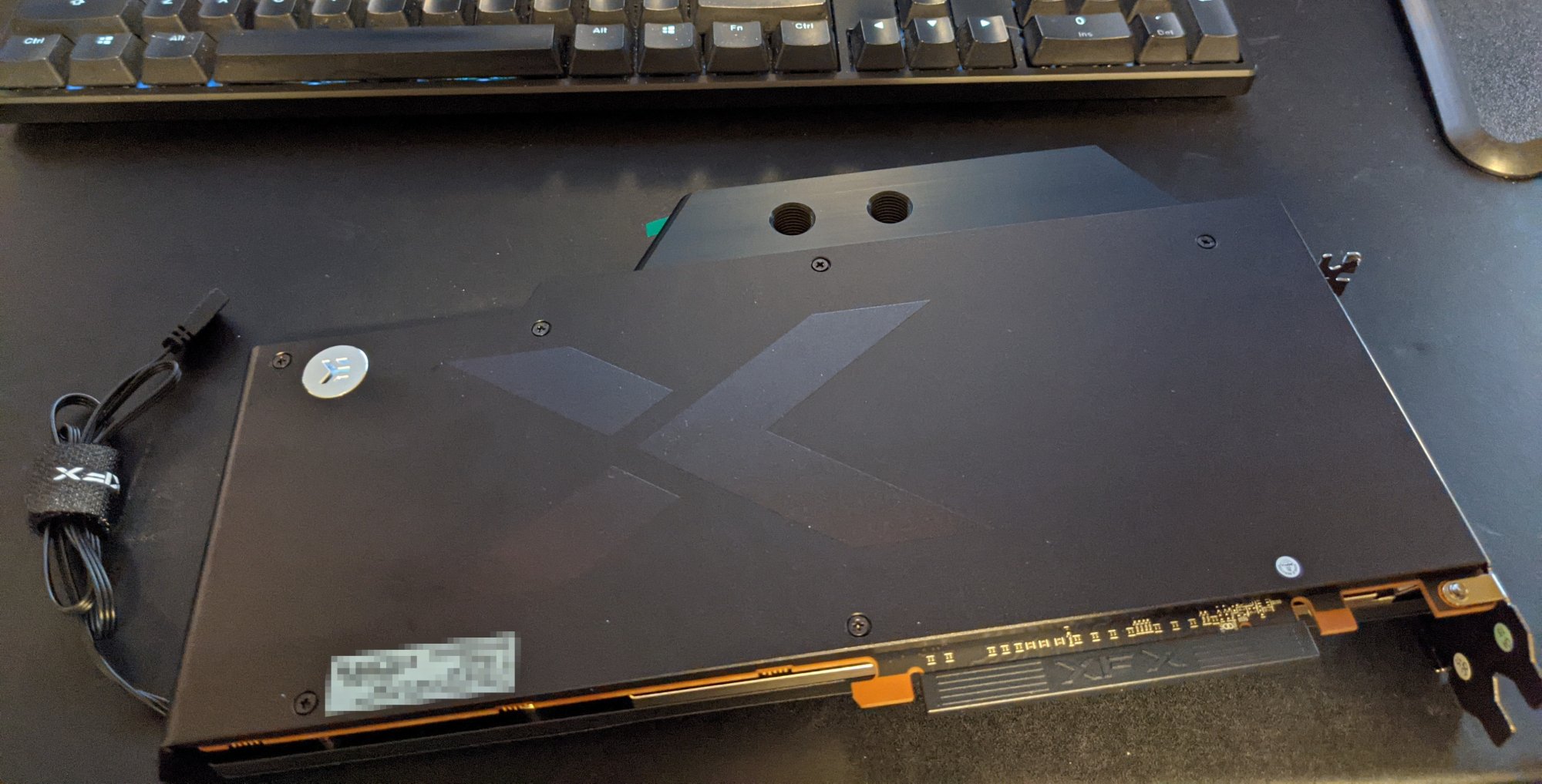

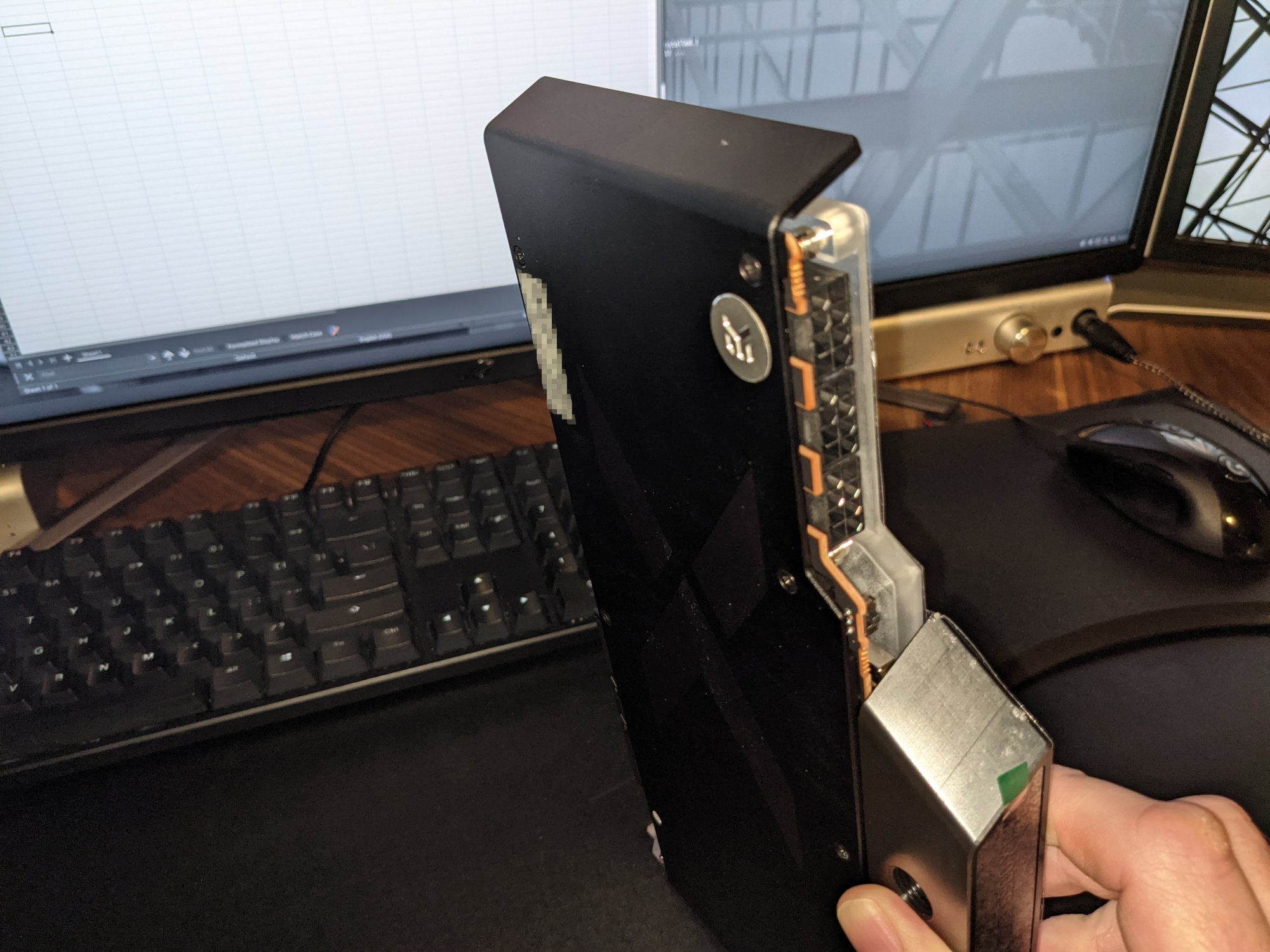

This thing is surprisingly heavy. Much more so than I remember my Pascal Titan X with block installed being...

(Though I haven't done a side-by-side yet, as the Titan X is staying installed until I'm done cleaning the new one.)

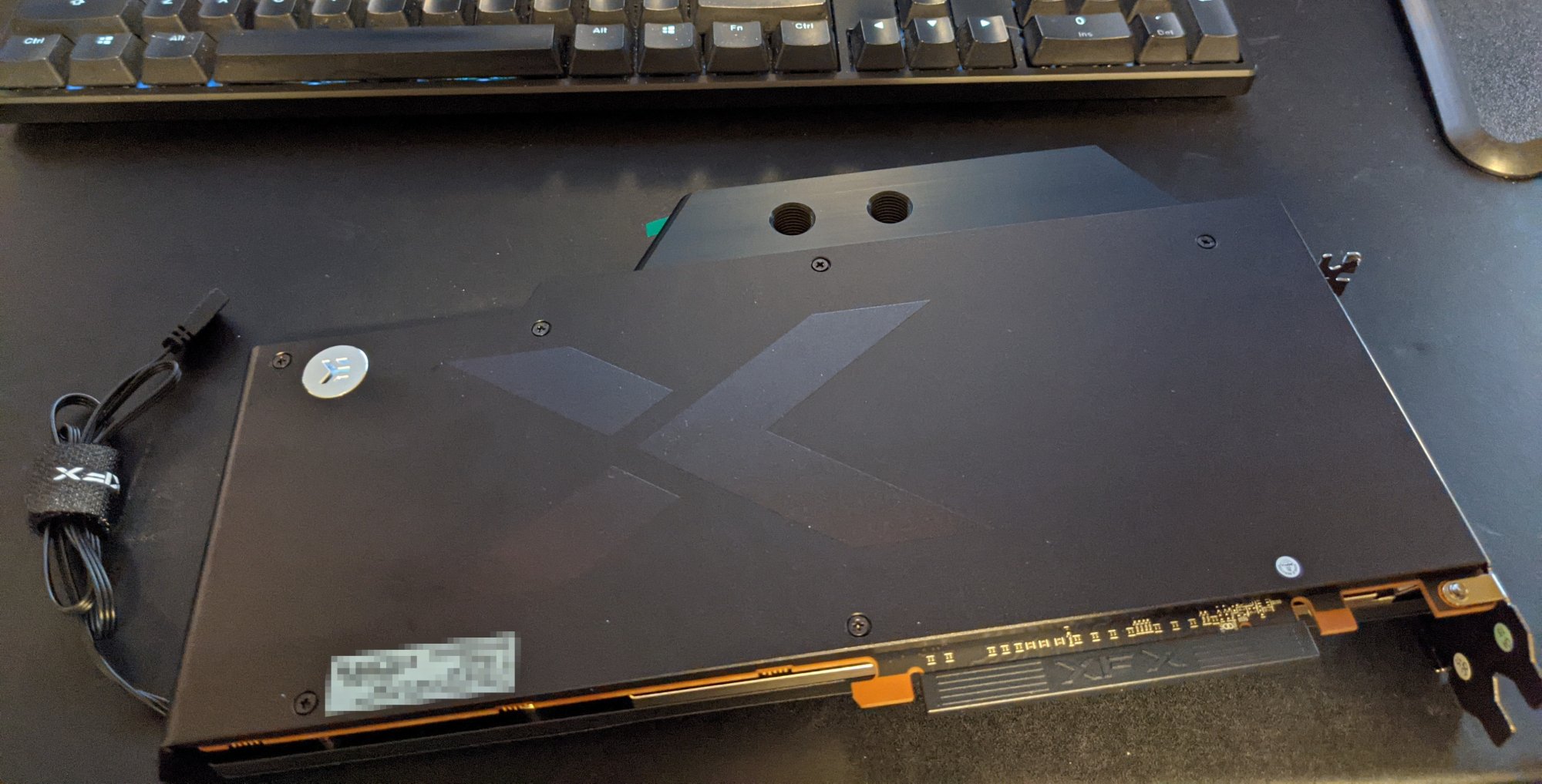

The backplate is metal and like 1/8" thick throughout. It's like the damn thing is armored. I'm guessing that's where most of the excess weight comes from.

Here's hoping the motherboard PCIe slot can handle the weight...

I cannot overstate how surprisingly heavy this thing is.

Normally I like that; it makes it feel sturdy and well built, but this may be a little much, even for me...

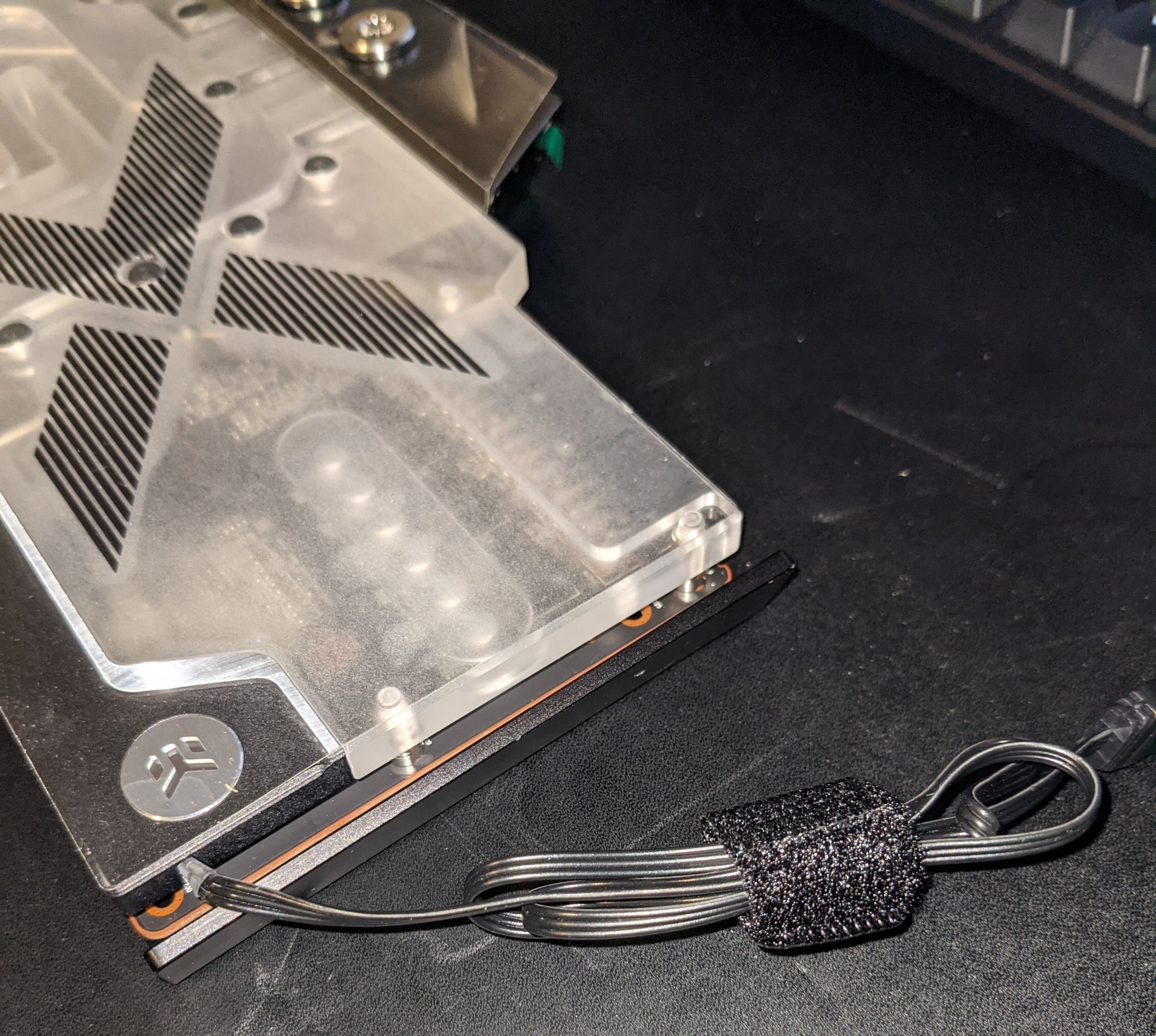

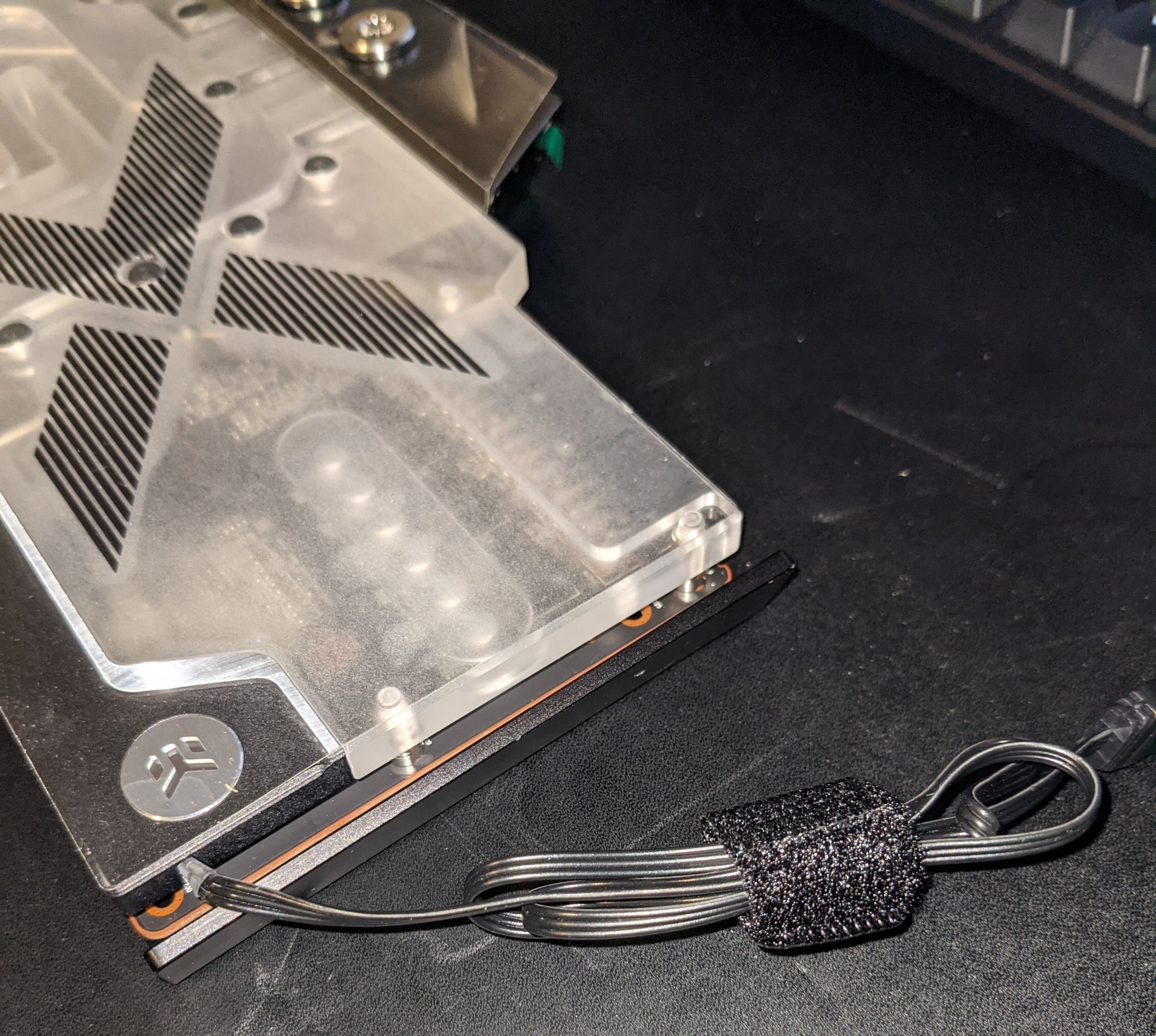

Sadly, the RGB LED cable is not detachable from the block, so you're stuck with it whether you want it or not...

(Unless... You know... <snip> <snip>. But that probably impacts warranty and resale value. I guess I'll have to try to hide it somehow.)

I'm so disconnected from the PC case Christmas tree lighting world that I don't even know what kind of connector this is:

I don't do that Christmas tree lighting BS in my builds... At least it's towards the bottom of the card. I can probably tuck it out of the way to hide it.

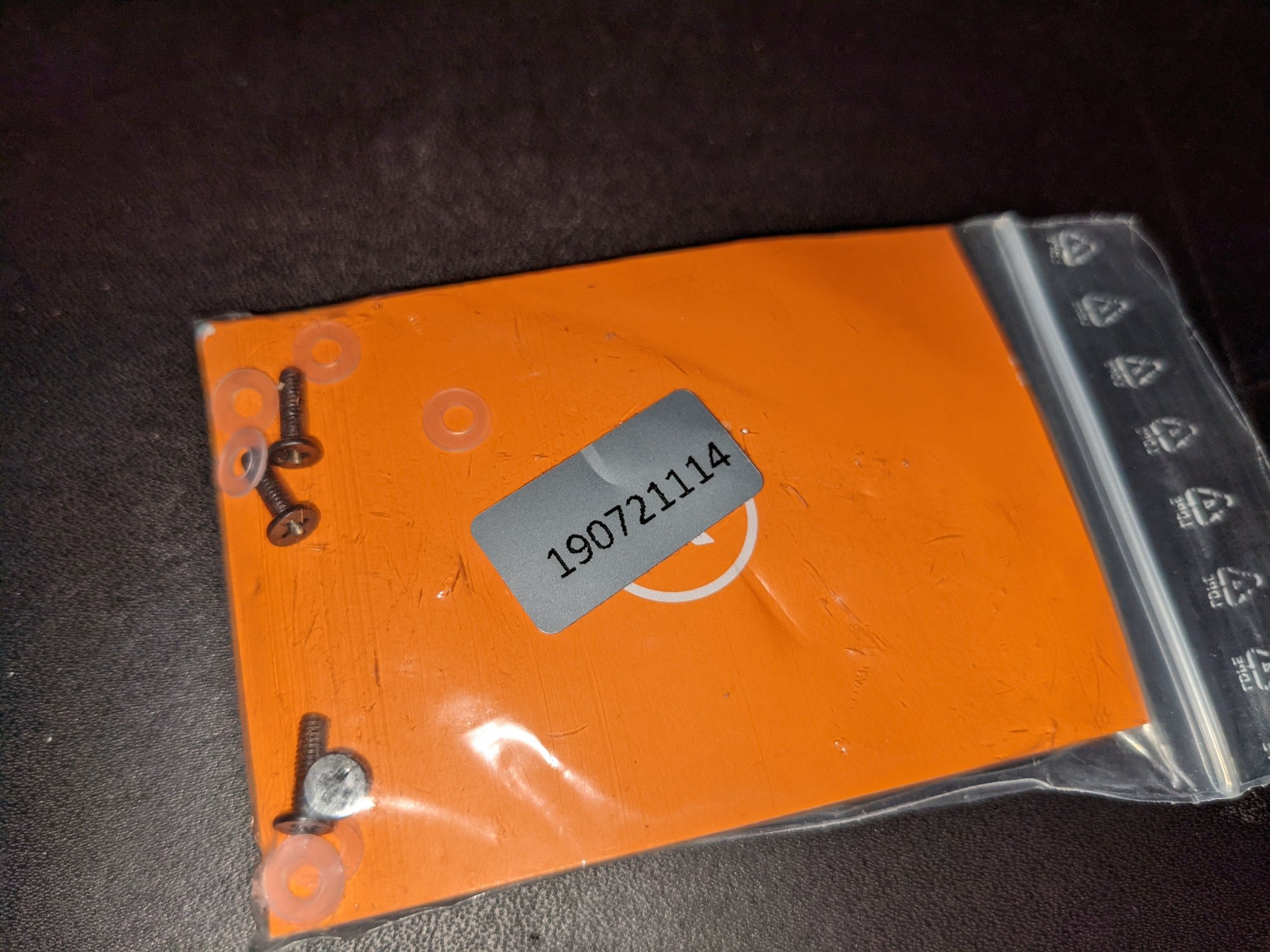

The video card comes with some sort of weird plastic EK hex wrench. I'm guessing it must be for the G1/4 port caps.

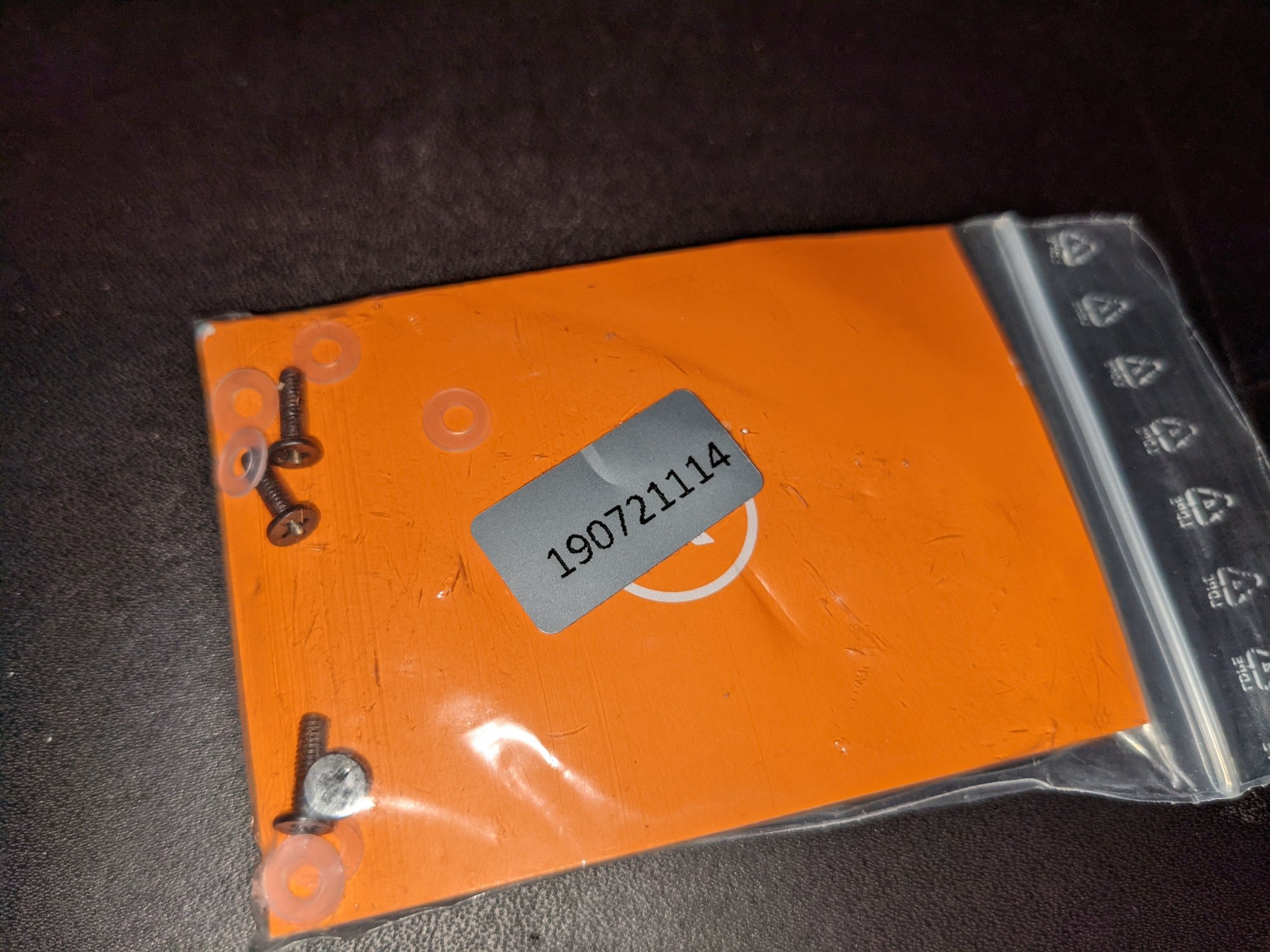

Also not quite sure what all these screws are for. In case you take the block/backplate off and lose some? I'm a fan of extra screws. That shit always gets lost.

Going to have to read the manual...

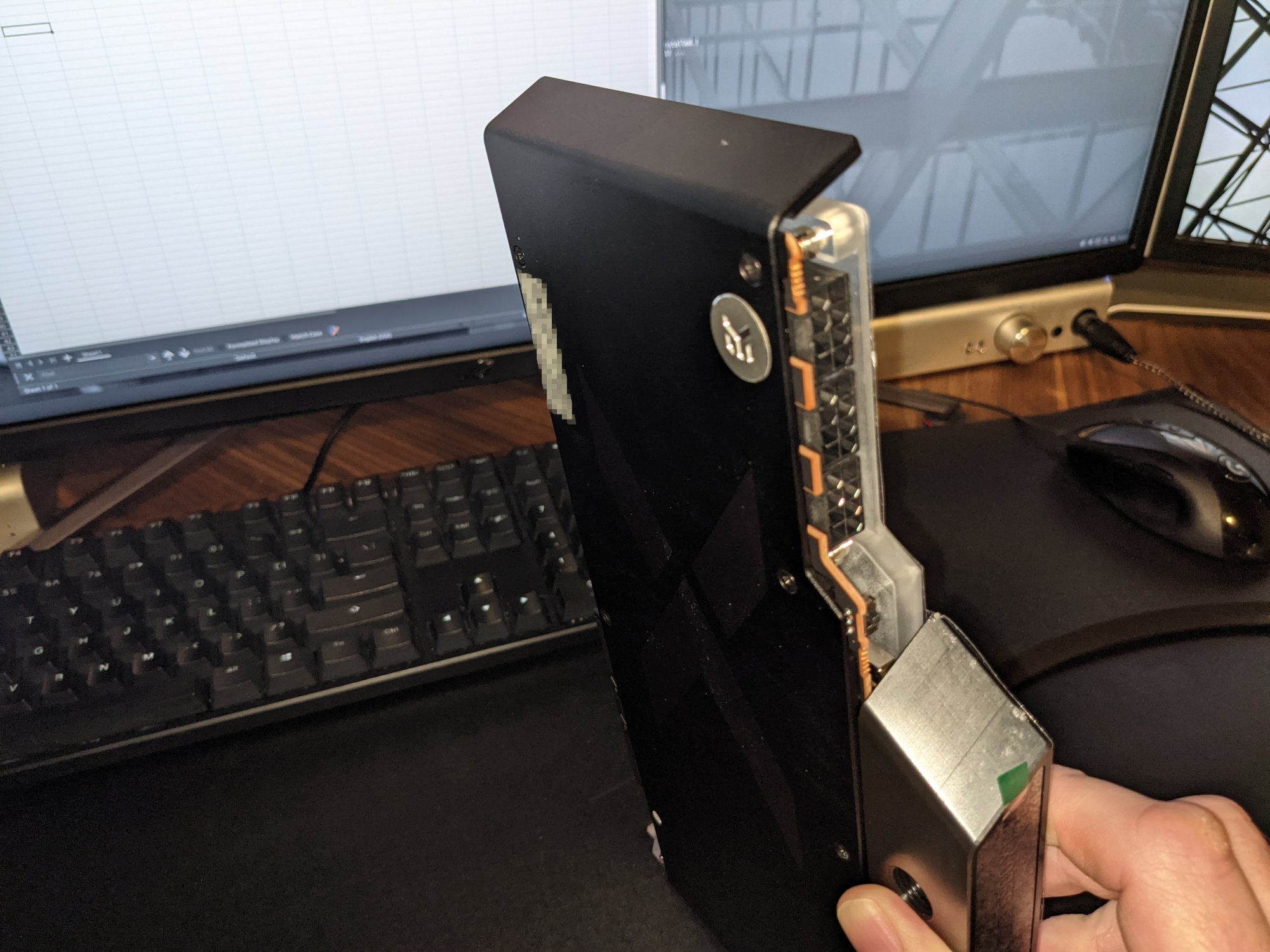

Kind of curious why they didn't make it single-slot. It looks as if it would have fit...

And it's not like there are any ports in the second slot area or anything. Maybe they feel they need the screws in both slots to keep this heavy thing in place?

Anyway, this thing promises to be a real bad boy. Figured you guys might enjoy some pics.

I'm going to integrate it into my existing build here.
