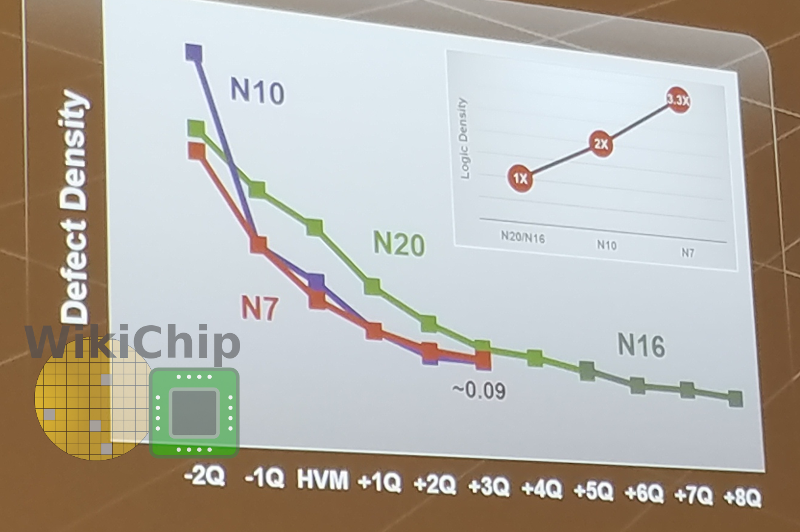

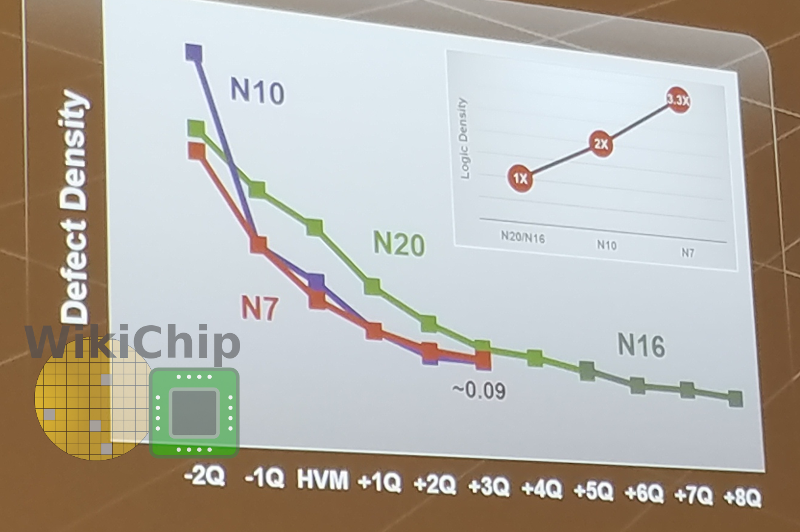

This is pretty big, because previously all we had were rumors and guesses. TSMC put the value right on a recent slide.

https://fuse.wikichip.org/news/2879/tsmc-5-nanometer-update/

7nm is sitting at a defect density of ~0.09 defects per cm². VERY good.

That translates into:

Navi 10: ~80% Yield of fully working parts.

Zen2 Chiplet: ~94% Yield of fully working parts.
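For anyone who wants to check the math, here is a rough sketch of how those numbers fall out. It assumes the simple Poisson yield model, Y = exp(-A × D0), and die areas of roughly 251 mm² for Navi 10 and 74 mm² for the Zen2 chiplet. Neither the model nor the die areas come from the TSMC slide; they are my assumptions, and the function name is just for illustration.

```python
import math

def poisson_yield(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of defect-free dies under the simple Poisson model (an assumption)."""
    area_cm2 = die_area_mm2 / 100.0  # 100 mm^2 per cm^2
    return math.exp(-area_cm2 * defects_per_cm2)

D0 = 0.09  # defects per cm^2, the 7nm figure quoted above

# Approximate die areas in mm^2; assumed values, not taken from the slide.
DIE_AREAS_MM2 = {"Navi 10": 251.0, "Zen2 Chiplet": 74.0}

for name, area in DIE_AREAS_MM2.items():
    print(f"{name}: ~{poisson_yield(area, D0):.0%} fully working")
```

Other yield models (Murphy, negative binomial) give slightly different results, but at a defect density this low they all land close to the same figures.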

For years I have been saying that they don't make lower core count parts because they need to recover bad dies. They make lower core count parts almost entirely out of fully working dies, for segmentation purposes. This should help illustrate that.

The slide:

