If the peak efficiency is around 50-60% load, why do people recommend getting a power supply that has about 5-15% more watts than you actually need? What am I missing? It sounds to me that if my system needs 500 watts, I should be looking for a 1000 watt PSU for peak efficiency, ignoring purchase price and focusing on efficiency.

Power supply efficiency question

- Thread starter kaneO

- Start date

BlueLineSwinger

[H]ard|Gawd

- Joined

- Dec 1, 2011

- Messages

- 1,435

Well, the possible 1-3 points of efficiency aren't really worth the large cost of nearly doubling the capacity. The efficiency curve of modern PSUs isn't nearly as steep as it once was, especially >~20% capacity.

There's also the other side of the curve. A system may take a recommended 500 W PSU to properly handle peak draw from all its components, but in reality will probably spend most of its time far below that. And generally speaking, a PSU's efficiency is at its worst when load is at <20%.
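
To put rough numbers on both sides of the curve, here is a minimal Python sketch. The efficiency points in `CURVE` are made-up illustrative values, not measurements of any real PSU, and the 60 W idle / 500 W peak loads and the 550 W / 1000 W ratings are assumptions chosen only to mirror the question.

```python
# Hypothetical efficiency curve as (load fraction, efficiency) points.
# Illustrative values only; a real PSU review would supply measured data.
CURVE = [(0.05, 0.78), (0.10, 0.85), (0.20, 0.90), (0.50, 0.92), (1.00, 0.89)]


def efficiency(load_fraction):
    """Linearly interpolate the assumed curve, clamping outside its range."""
    if load_fraction <= CURVE[0][0]:
        return CURVE[0][1]
    for (x0, y0), (x1, y1) in zip(CURVE, CURVE[1:]):
        if load_fraction <= x1:
            t = (load_fraction - x0) / (x1 - x0)
            return y0 + t * (y1 - y0)
    return CURVE[-1][1]


def wall_watts(dc_watts, psu_rating_watts):
    """Power drawn from the wall for a given DC load on a given PSU size."""
    return dc_watts / efficiency(dc_watts / psu_rating_watts)


for rating in (550, 1000):
    for dc_load in (60, 500):  # assumed idle and peak DC loads
        print(f"{rating} W PSU, {dc_load} W load: "
              f"{wall_watts(dc_load, rating):.1f} W at the wall")
```

With these assumed numbers the 1000 W unit draws slightly less at the wall at peak and slightly more at idle, which is the trade-off described above.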

Nenu

[H]ardened

- Joined

- Apr 28, 2007

- Messages

- 20,315

Efficiency is not related to load in that way and does not figure in how many watts you need for your system.

* see edit at the bottom, I misread your question.

Getting a PSU with more watts than you need is to get longer life from the PSU.

When you push a PSU hard (i.e. drive it near its maximum output) it will burn out faster.

Burn out: the capacitors degrade over time, with use and with heat. This makes them less capacitive, reducing the maximum power of the PSU.

A good quality PSU when new will be able to supply a bit more current than its rating. But with use, some time later its max power output will drop below its rated max.

If you haven't left any headroom and need almost all that power, that PSU isn't going to last very much longer.

i.e. the nearer to its max output it is pushed, the faster it will wear out. As its max output drops, the required power doesn't, so it continually gets pushed harder and harder.

Also, you might upgrade to more powerful hardware in the future, which would otherwise need a PSU change.

I always buy a PSU with at least 1/3 extra power headroom, i.e. if I need 550W I'll buy a 750W.

This proved sensible last year when I bought a 3090 graphics card and luckily already had enough power to drive it without replacing the PSU, despite it already being 9 years old.
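
The 1/3 headroom rule of thumb above can be sketched in a few lines of Python. The list of ratings is an assumption about typical retail sizes, and `rating_with_headroom` is a hypothetical helper name, not anything from a real tool.

```python
# Assumed list of common retail PSU ratings in watts (illustrative, not exhaustive).
COMMON_RATINGS = [450, 550, 650, 750, 850, 1000, 1200, 1600]


def rating_with_headroom(needed_watts, headroom=1 / 3):
    """Return the smallest assumed rating that leaves the requested headroom."""
    target = needed_watts * (1 + headroom)
    for rating in COMMON_RATINGS:
        if rating >= target:
            return rating
    raise ValueError("no rating in the assumed list is large enough")


print(rating_with_headroom(550))  # 550 W needed plus 1/3 is about 733 W, so 750
```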

Efficiency only affects how much power is drawn from the wall socket for the amount of power your PC is using.

The more efficient it is, the less extra power is pulled from the wall by the PSU for its internal operation. Higher efficiency also generally reduces how hot the PSU gets.

Efficiency will vary from low watts to high watts, peaking somewhere in the middle. It doesn't usually vary that much unless you use very little power or near max power.

This has nothing to do with how much power your PC needs; the power the PSU supplies to the PC is independent of its efficiency.

The only time efficiency can help with a power draw problem (other than cost of power) is if your PC is so powerful that its PSU is maxing out a wall socket's current capability.

Then a more efficient PSU might be able to keep you within the wall supply constraint.
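
As a rough illustration of the wall socket case, the sketch below converts a DC load into mains current. Every number in it is an assumption: a deliberately extreme 1600 W DC load, 120 V mains, a 15 A limit, and a power factor close to 1.

```python
def wall_amps(dc_watts, efficiency, mains_volts):
    """Current drawn from the socket, assuming a power factor close to 1."""
    return dc_watts / efficiency / mains_volts


CIRCUIT_LIMIT_AMPS = 15.0  # assumed socket/breaker limit
DC_LOAD_WATTS = 1600.0     # assumed, deliberately extreme DC load
MAINS_VOLTS = 120.0        # assumed mains voltage

for eff in (0.85, 0.94):   # assumed efficiencies of two different PSUs
    amps = wall_amps(DC_LOAD_WATTS, eff, MAINS_VOLTS)
    status = "within" if amps <= CIRCUIT_LIMIT_AMPS else "over"
    print(f"{eff:.0%} efficient: {amps:.1f} A "
          f"({status} the assumed {CIRCUIT_LIMIT_AMPS:.0f} A limit)")
```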

Although, bear in mind, efficiency will drop a little the hotter the PSU gets, and also with age/use.

It pays to keep your PSU cool to use a little less power and last longer.

edit: I reread your question. Efficiency doesn't vary enough over the power range to matter unless you push the PSU really hard.

Also, if you insist on staying within the peak efficiency zone, you will need to keep your PC running hard, consuming more electricity anyway, defeating the objective.

If you are really concerned about efficiency, get a highly efficient PSU with a bit of power headroom as described above and call it a day; you aren't going to do better.

Kiriakos-GR

Weaksauce

- Joined

- Nov 21, 2021

- Messages

- 99

Sounds to me that if my system needs 500 watts I should be looking for a 1000 watt psu for peak efficiency ignoring purchase price and focusing on efficiency.

I will try to reorganize your thoughts.

a) The watts in question refer to INPUT power drawn from the wall plug.

b) An inexpensive plug-in energy meter (one that sits between the PC and the wall plug) can show you the maximum power your PC actually uses.

Example: My PC uses 300W at extreme load (gaming) and 130W at idle. My PSU of choice is 750W, which means less thermal load on the PSU in summer, lower fan RPM, and less noise from the PSU fan.

Alternatively, my PC could work with a 450W PSU as a minimum, but the PSU would run warmer, and the higher internal temperature would shorten the life of its capacitors.

For such a system a 1000W PSU is the wrong choice. It's true that efficiency improves from about 40% load and peaks at about 70%, so 40-70% is the operating range in which the PSU uses energy most effectively as far as the electricity bill is concerned.
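
Since a plug-in meter reads input power while PSU ratings describe DC output, a small conversion helps when placing the readings above on the load scale. This is only a sketch: the flat 90% efficiency is an assumed placeholder, and the function names are hypothetical.

```python
ASSUMED_EFFICIENCY = 0.90  # hypothetical flat efficiency, for illustration only


def dc_load_watts(wall_watts, efficiency=ASSUMED_EFFICIENCY):
    """Estimate DC output power from a wall-meter reading."""
    return wall_watts * efficiency


def load_percent(wall_watts, psu_rating_watts, efficiency=ASSUMED_EFFICIENCY):
    """Estimated PSU load as a percentage of its rated output."""
    return 100.0 * dc_load_watts(wall_watts, efficiency) / psu_rating_watts


# Wall readings from the post: 300 W gaming, 130 W idle.
for rating in (450, 750, 1000):
    gaming = load_percent(300, rating)
    idle = load_percent(130, rating)
    print(f"{rating} W PSU: gaming about {gaming:.0f}% load, idle about {idle:.0f}% load")
```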

Tsumi

[H]F Junkie

- Joined

- Mar 18, 2010

- Messages

- 13,755

Well, the possible 1-3 points of efficiency aren't really worth the large cost of nearly doubling the capacity. The efficiency curve of modern PSUs isn't nearly as steep as it once was, especially >~20% capacity.

There's also the other side of the curve. A system may take a recommended 500 W PSU to properly handle peak draw from all its components, but in reality will probably spend most of its time far below that. And generally speaking, a PSU's efficiency is at its worst when load is at <20%.

Best and most concise answer. Your system isn't running at 100% load all the time unless you're doing some sort of distributed computing. Idle power consumption is usually around 15-25% of maximum load.

Nenu

[H]ardened

- Joined

- Apr 28, 2007

- Messages

- 20,315

np, I did the same as you.

Last year I chose the most efficient PSU I could find for my other PC, which at the time was the mouthful: Seasonic Prime Ultra Titanium 750W.

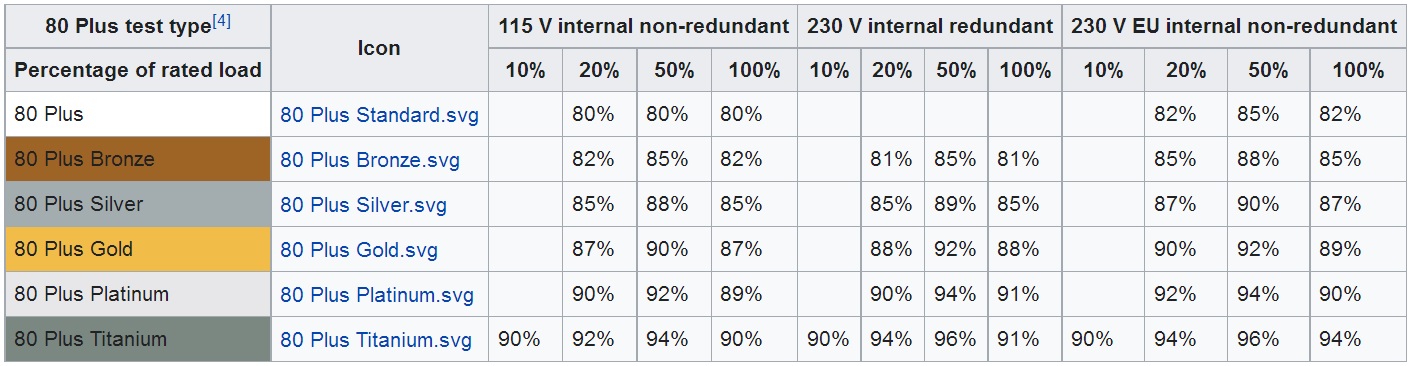

I'll post an image in a moment of the efficiency ratings when I find it...

Kiriakos-GR

Weaksauce

- Joined

- Nov 21, 2021

- Messages

- 99

Just a tip for untrained eyes about the chart above.

We focus on the 20% and 50% load conditions.

At 50% load, which is the most significant real-life condition, the differences between 80 Plus Bronze (2013) and 80 Plus Gold (2018) are insignificant.

Only if you have energy measurements at hand showing how many kilowatt-hours your PC consumes per month can you calculate the difference (the improvement due to electrical efficiency) on the electricity bill.

Electrical efficiency is of the highest importance for an organization with, say, 100 employees using 100 computers in one building.
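
The bill calculation described above could look roughly like this in Python. The monthly reading and the tariff are hypothetical placeholders; the two efficiencies are the 80 Plus Bronze and Gold minimums at 50% load for 115 V units, used here as stand-ins for real measured efficiency.

```python
def kwh_with_other_psu(measured_kwh, eff_current, eff_candidate):
    """Same DC energy delivered to the PC, different conversion efficiency."""
    dc_kwh = measured_kwh * eff_current
    return dc_kwh / eff_candidate


MEASURED_KWH_PER_MONTH = 60.0  # hypothetical meter reading
PRICE_PER_KWH = 0.30           # hypothetical tariff, in your currency
EFF_BRONZE = 0.85              # 80 Plus Bronze minimum at 50% load (115 V)
EFF_GOLD = 0.90                # 80 Plus Gold minimum at 50% load (115 V)

gold_kwh = kwh_with_other_psu(MEASURED_KWH_PER_MONTH, EFF_BRONZE, EFF_GOLD)
saved_kwh = MEASURED_KWH_PER_MONTH - gold_kwh
print(f"Estimated saving: {saved_kwh:.2f} kWh/month, "
      f"about {saved_kwh * PRICE_PER_KWH:.2f} per month")
```

Multiplying the per-machine figure by the number of machines gives the organization-wide estimate.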

kirbyrj

Fully [H]

- Joined

- Feb 1, 2005

- Messages

- 30,693

A company that has 100 employees and 100 computers isn't going to be buying/building DIY computers. I don't remember speccing a Dell that allowed you to select the PSU efficiency of a basic office "Inspiron" except maybe to move from 360W to 500W for $50 extra.

I agree that 50% might be the highest load, and likely it is closer to 1-20% all day, assuming 8 hours of work per day, after which the computer goes into a power-efficient sleep mode or is turned off. I don't know that you'd ever make up the difference in price if you had to pay extra for a Gold or Plat PSU over the ~5-6 year service life of an office computer.
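
A quick payback estimate supports that doubt. Everything below is an assumption made for illustration: a 60 W average DC load, 8 hours a day for 250 working days, the 80 Plus 20% load minimums for 115 V units (82% Bronze, 87% Gold), a 0.15 per kWh tariff, and a price premium of 20.

```python
def annual_wall_kwh(avg_dc_watts, efficiency, hours_per_day, days_per_year):
    """Yearly energy drawn from the wall for a steady average DC load."""
    return avg_dc_watts / efficiency * hours_per_day * days_per_year / 1000.0


def payback_years(price_premium, annual_savings):
    """Years needed for the energy savings to cover the extra purchase price."""
    return price_premium / annual_savings


# All values below are assumptions for illustration.
bronze_kwh = annual_wall_kwh(60.0, 0.82, 8, 250)
gold_kwh = annual_wall_kwh(60.0, 0.87, 8, 250)
savings = (bronze_kwh - gold_kwh) * 0.15   # assumed tariff per kWh
years = payback_years(20.0, savings)       # assumed price premium

print(f"Saves {bronze_kwh - gold_kwh:.1f} kWh/year; payback in about {years:.0f} years")
```

Under these assumptions the payback period is well beyond a 5-6 year service life.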

Format _C:

2[H]4U

- Joined

- Jun 12, 2001

- Messages

- 3,885

Your system isn't running at 100%

unless you are using the video card (oops, I mean GPU, as they seem to be called now) to do that new mining CRAP LoL!

What is internal redundant vs. non-redundant? It seems to be applicable only in the 230V areas as well.

Best and most concise answer. Your system isn't running at 100% load all the time unless you're doing some sort of distributed computing. Idle power consumption is usually around 15-25% of maximum load.

Personally, I'm guessing my system load is typically about 60%, 100% of the time, unless I'm gaming; then it's probably about 80%.

BlueLineSwinger

[H]ard|Gawd

- Joined

- Dec 1, 2011

- Messages

- 1,435

Personally, I'm guessing my system load is typically about 60%, 100% of the time, unless I'm gaming; then it's probably about 80%.

Unless you're constantly doing some kind of processing in the background, or your system is overloaded with crapware or is infected, that load would be highly unusual.

Best way to check is to get a Kill-A-Watt and plug it in between the system and the wall. If you're on a UPS, those will often provide similar functionality (but don't forget to subtract out other connected devices, such as the display).

Tsumi

[H]F Junkie

- Joined

- Mar 18, 2010

- Messages

- 13,755

unless you are using the video card (oops I mean GPU as they seem to be identified as now) to do that new mining CRAP LoL!

What is internal redundant Vs Non Redundant? Seems to be only applicable in the 230V areas as well

If your CPU isn't also maxed out during that, you're not running at 100% load

Personally, I'm guessing my system load is typically about 60%, 100% of the time, unless I'm gaming; then it's probably about 80%.

Gaming loads are anywhere from 60-80% depending on the game. Very few games max out the CPU and the GPU at the same time. For general web browsing, videos, and word processing, today's efficient systems are doing that at 15% of max load.

If your CPU isn't also maxed out during that, you're not running at 100% load

Gaming loads are anywhere from 60-80% depending on the game. Very few games max out the CPU and the GPU at the same time. For general web browsing, videos, and word processing, today's efficient systems are doing that at 15% of max load.

Why wouldn't it be at 90-100%? Assuming heat is not an issue and the game settings are maxed out for 2k gaming.

I usually monitor my setting settings but never really paid much attention to the power draw from the gpu

Tsumi

[H]F Junkie

- Joined

- Mar 18, 2010

- Messages

- 13,755

Why wouldn't it be at 90-100%? Assuming heat is not an issue and the game settings are maxed out for 2k gaming.

I usually monitor my setting settings but never really paid much attention to the power draw from the gpu

Because gaming loads vary wildly depending on the type of game and how it is coded. It also depends on the power of your CPU in relation to your GPU.

Let's use an example where you have a 150 watt CPU, 200 watt GPU, and 50 watts for the peripherals, with a PSU sized appropriately at 500 watts. In this scenario, your idle (non-gaming) power consumption is very likely in the 100 watt range, putting it squarely in the 20% load range for that PSU. If you had an 800 watt PSU, you would be idling in the more inefficient 12% range.

FPS, RPG, and platforming games tend to be heavy on the GPU and light on the CPU. Maxing out the settings, you'll probably pull 200 watts from the GPU and 80 watts from the CPU. This is especially the case when GPU limited. When resolution and quality is dropped in favor of higher framerates, CPU usage goes up.

RTS, city builder, sim games, and emulators tend to be heavy on the CPU. They can be harsh on the GPU as well, but they generally become CPU bottlenecked, causing the GPU to not work as hard. In this case you can be pulling 150 watts on the CPU and only 150 watts on the GPU.

In addition to that, as CPU core count goes up, the percentage of the CPU that a game can utilize decreases. Many older games are designed to run on 2-3 cores at most, and have one main thread that can only be processed by one core that becomes the bottleneck. Newer games can utilize more cores, but they still struggle with balancing the load evenly. This means there's always some core that's partially idling due to waiting for other cores to finish. Newer CPU architectures mitigate this somewhat by boosting and diverting power to the core that's doing more work.
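
The example figures above can be tabulated with a short Python sketch. The component wattages come from the example itself; counting the full 50 W for peripherals in every gaming scenario is an added assumption, and the scenario names are only labels.

```python
PERIPHERALS_WATTS = 50  # from the example; assumed fully drawn while gaming

# Total DC draw per scenario, built from the example's component figures.
SCENARIOS = {
    "idle (non-gaming)": 100,                         # total stated in the example
    "GPU-heavy game": 200 + 80 + PERIPHERALS_WATTS,   # GPU 200 W, CPU 80 W
    "CPU-heavy game": 150 + 150 + PERIPHERALS_WATTS,  # CPU 150 W, GPU 150 W
    "everything maxed": 150 + 200 + PERIPHERALS_WATTS,
}


def load_percent(watts, psu_rating_watts):
    """PSU load as a percentage of its rated output."""
    return 100.0 * watts / psu_rating_watts


for rating in (500, 800):
    print(f"{rating} W PSU")
    for name, watts in SCENARIOS.items():
        print(f"  {name}: {watts} W = {load_percent(watts, rating):.1f}% load")
```

On the 500 W unit the two gaming scenarios land inside the 60-80% range mentioned earlier, while idle sits at 20% (12.5% on the 800 W unit).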

Kiriakos-GR

Weaksauce

- Joined

- Nov 21, 2021

- Messages

- 99

Let's use an example where you have a 150 watt CPU

My example above includes real electrical measurements, and my hardware setup is in my signature.

We have been talking about a 500W PSU as the minimum (SAFE) requirement for the past 20 years, because while the hardware changes, the consumption of a mid-range gaming PC stays the same.

Because gaming loads vary wildly depending on the type of game and how it is coded. It also depends on the power of your CPU in relation to your GPU.

Very interesting and insightful. I'm curious to know what I'm pulling typically during my gaming sessions. If I get a Kill-A-Watt meter I'm gonna report back.

Best way to check is to get a Kill-A-Watt and plug it in between the system and the wall. If you're on a UPS, those will often provide similar functionality (but don't forget to subtract out other connected devices, such as the display).

Think I'm gonna add one of these to my wish list.
