Venturi (Limp Gawd) · Joined Nov 23, 2004 · Messages: 264

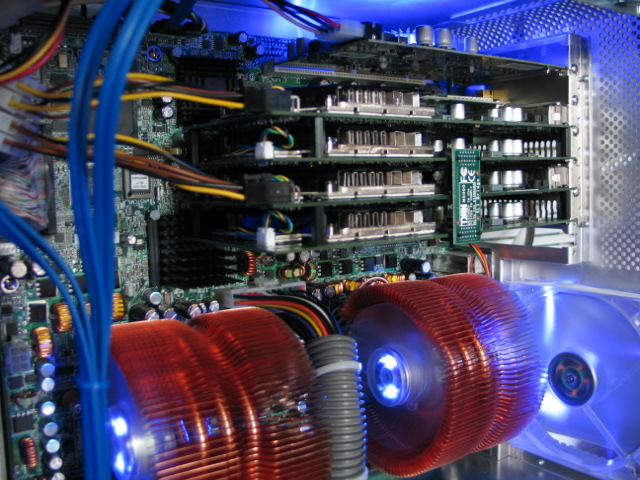

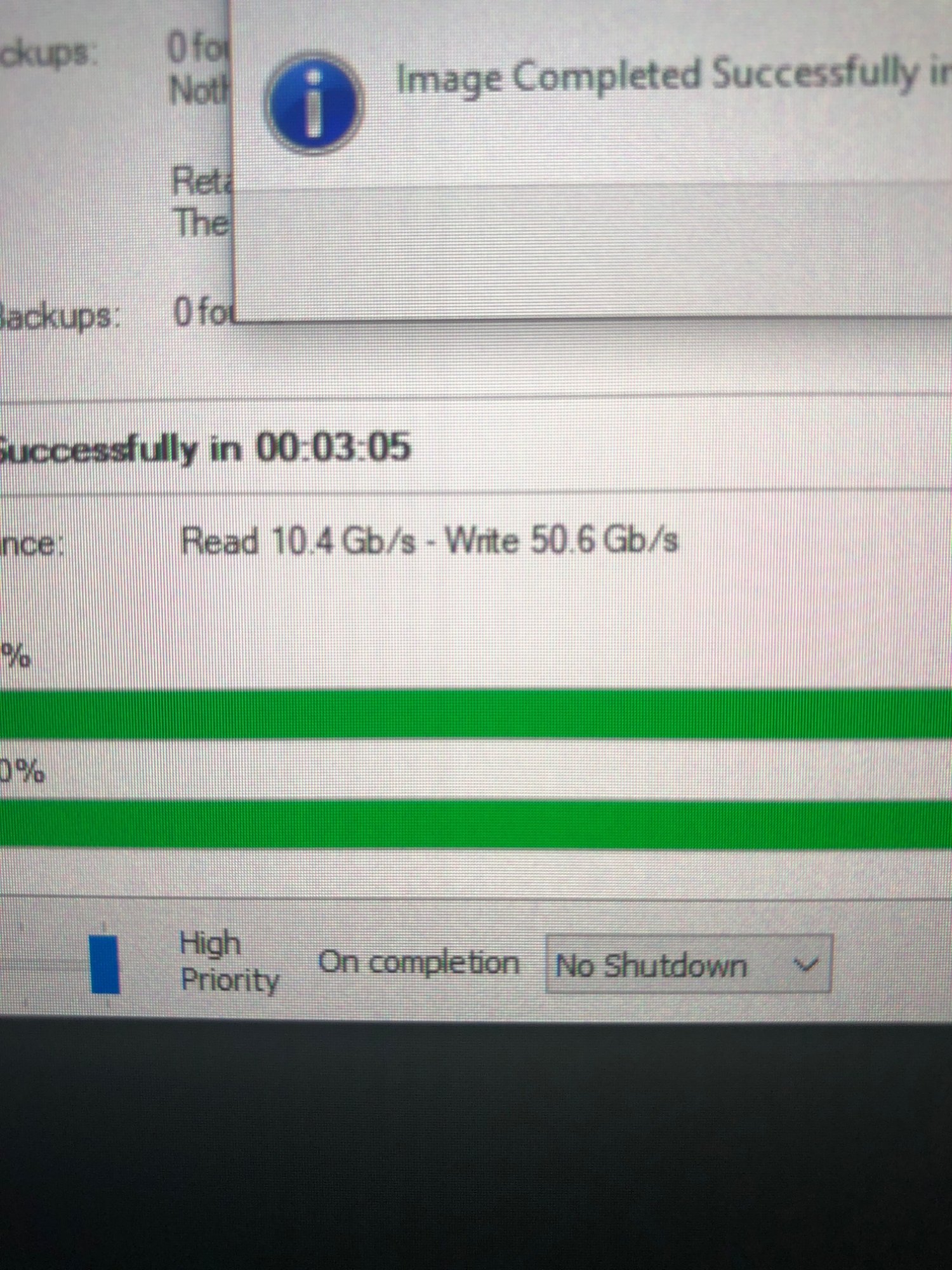

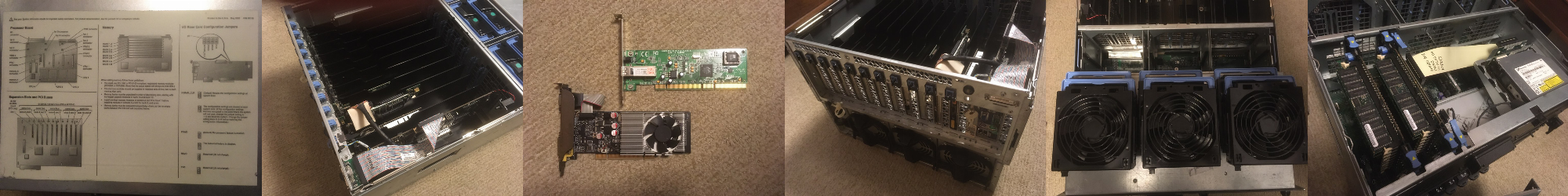

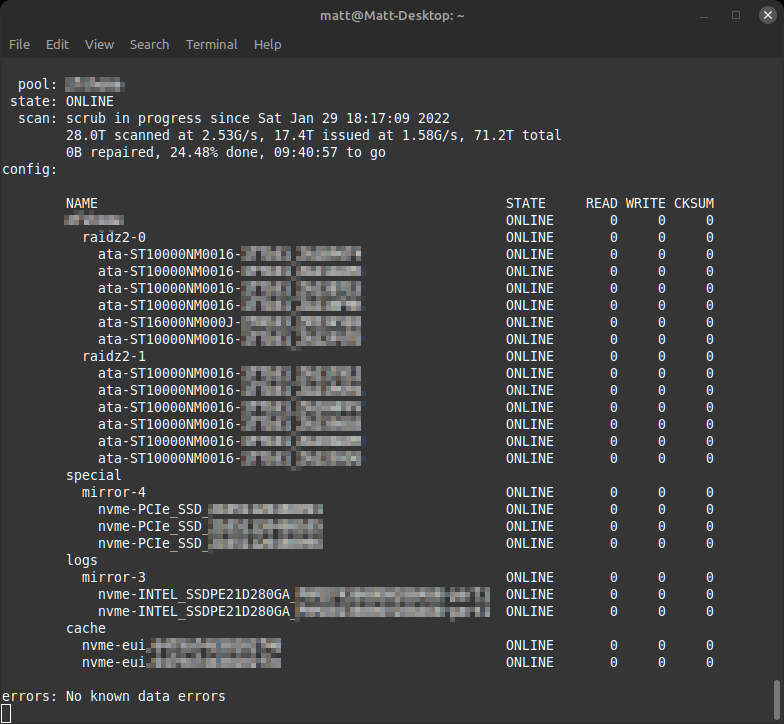

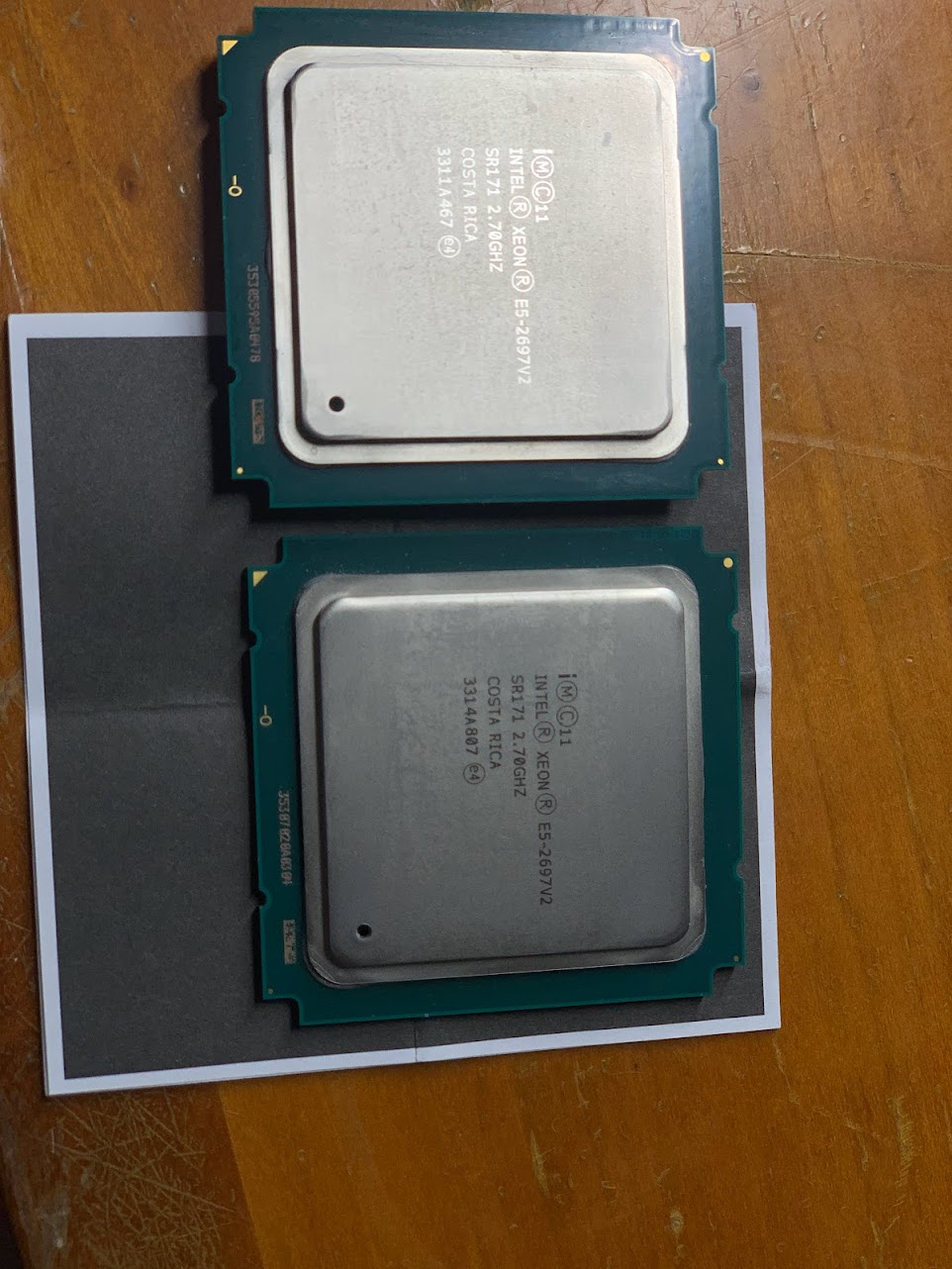

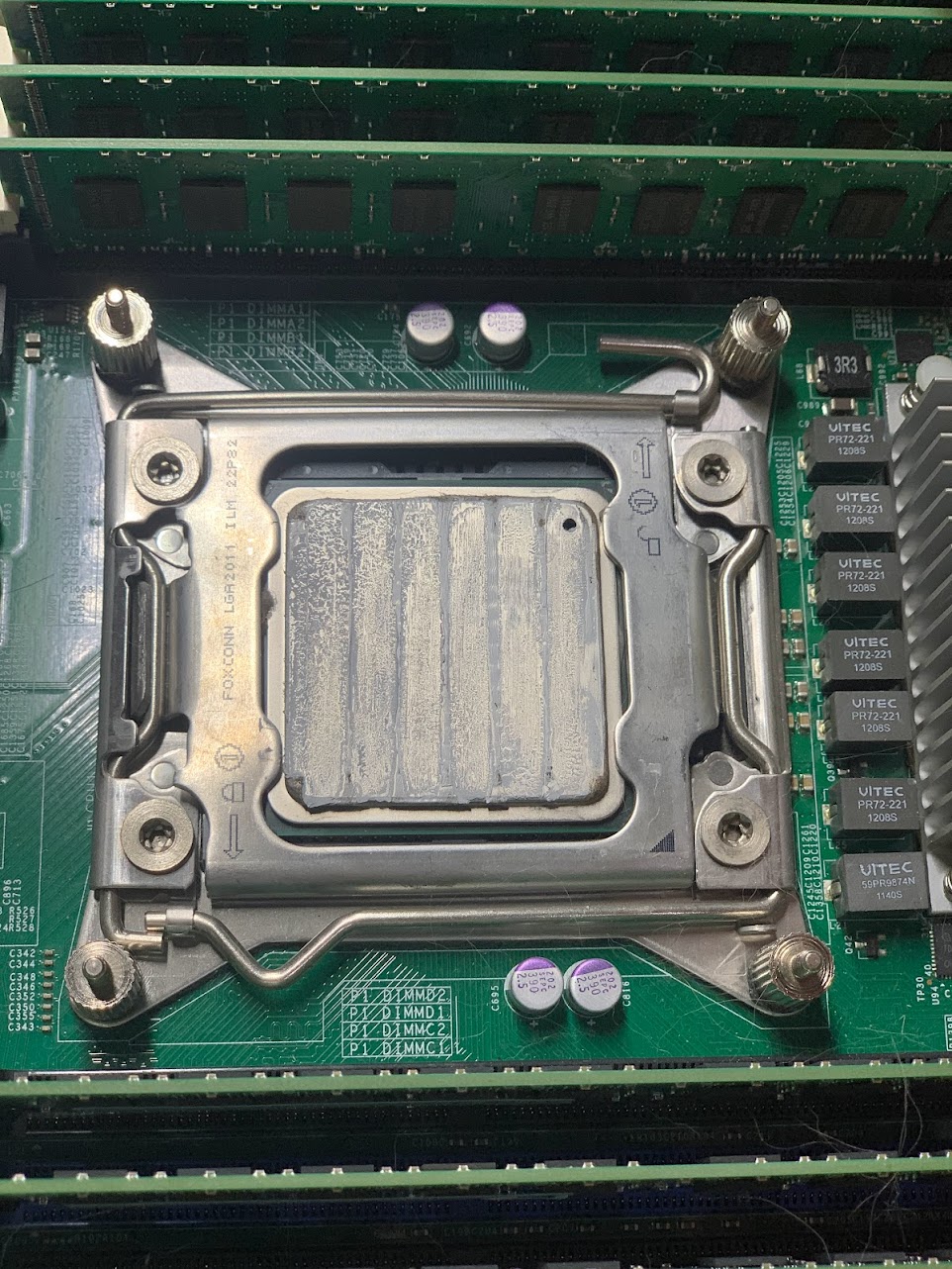

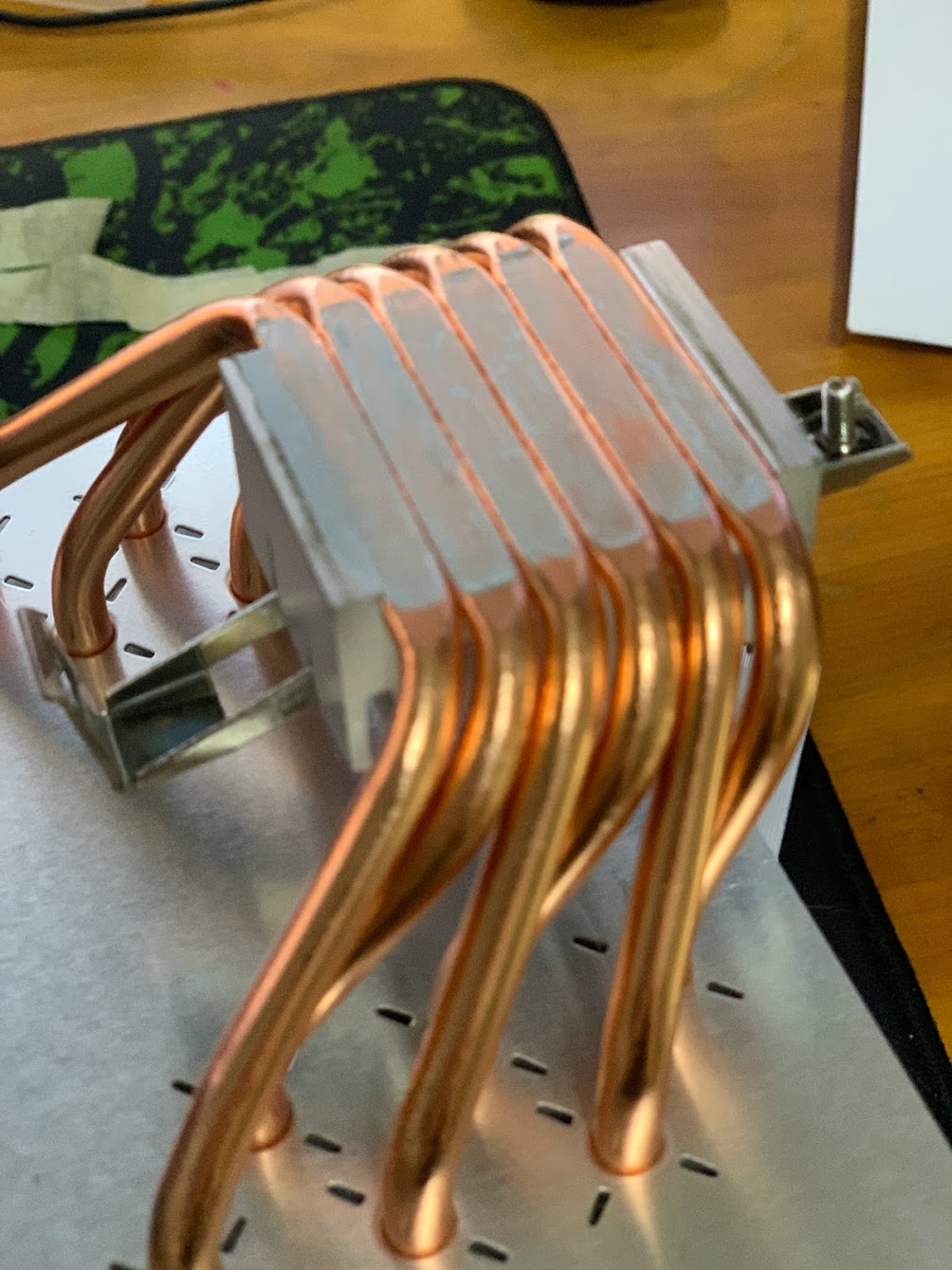

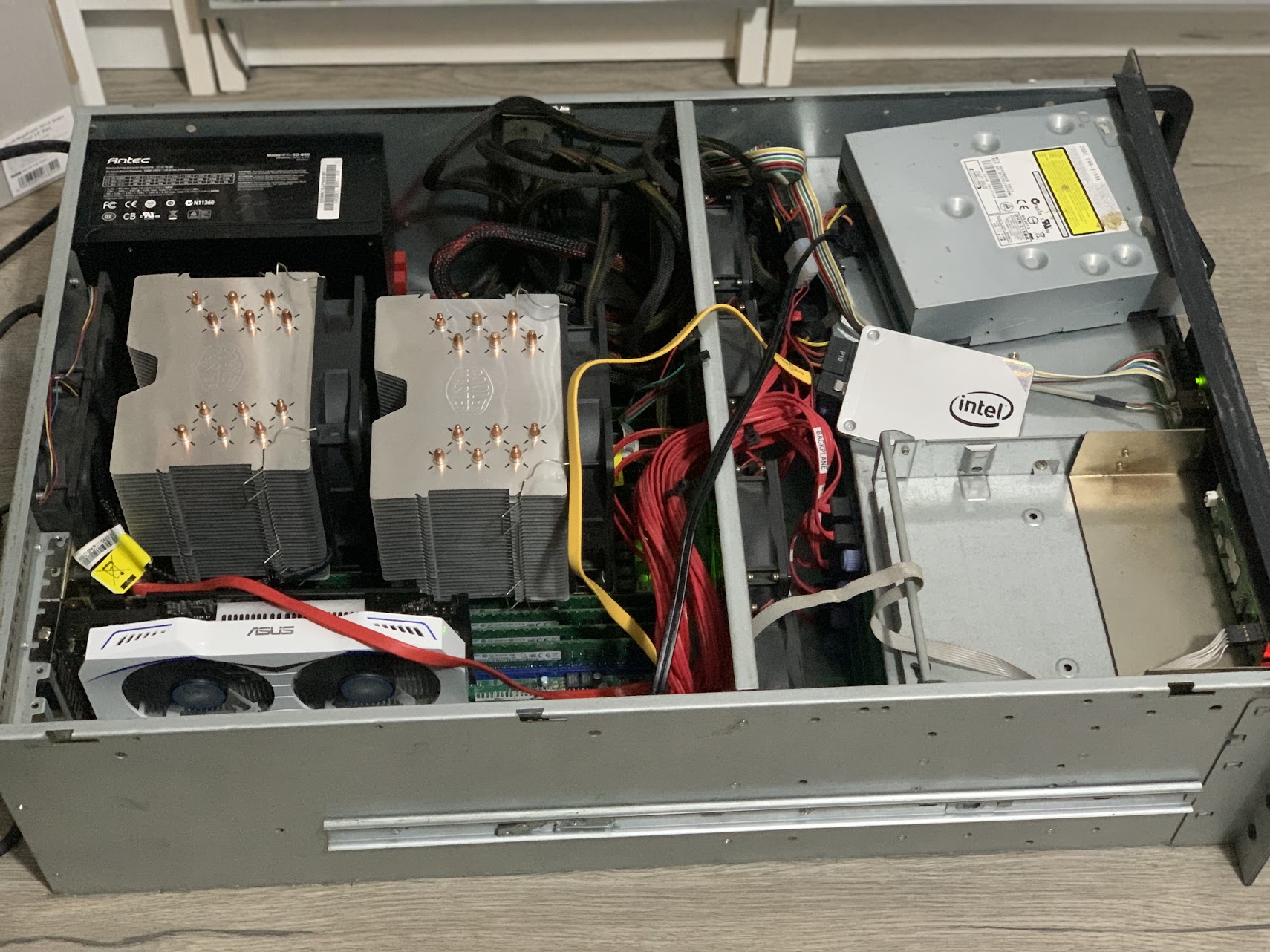

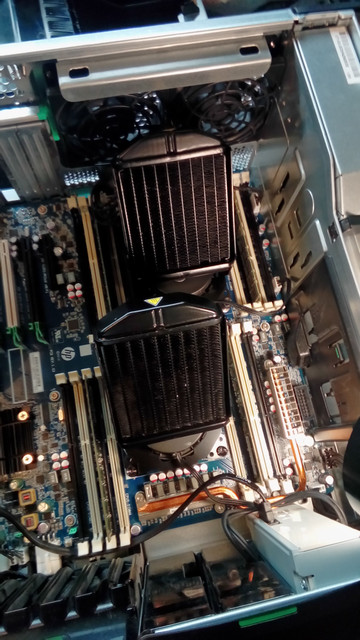

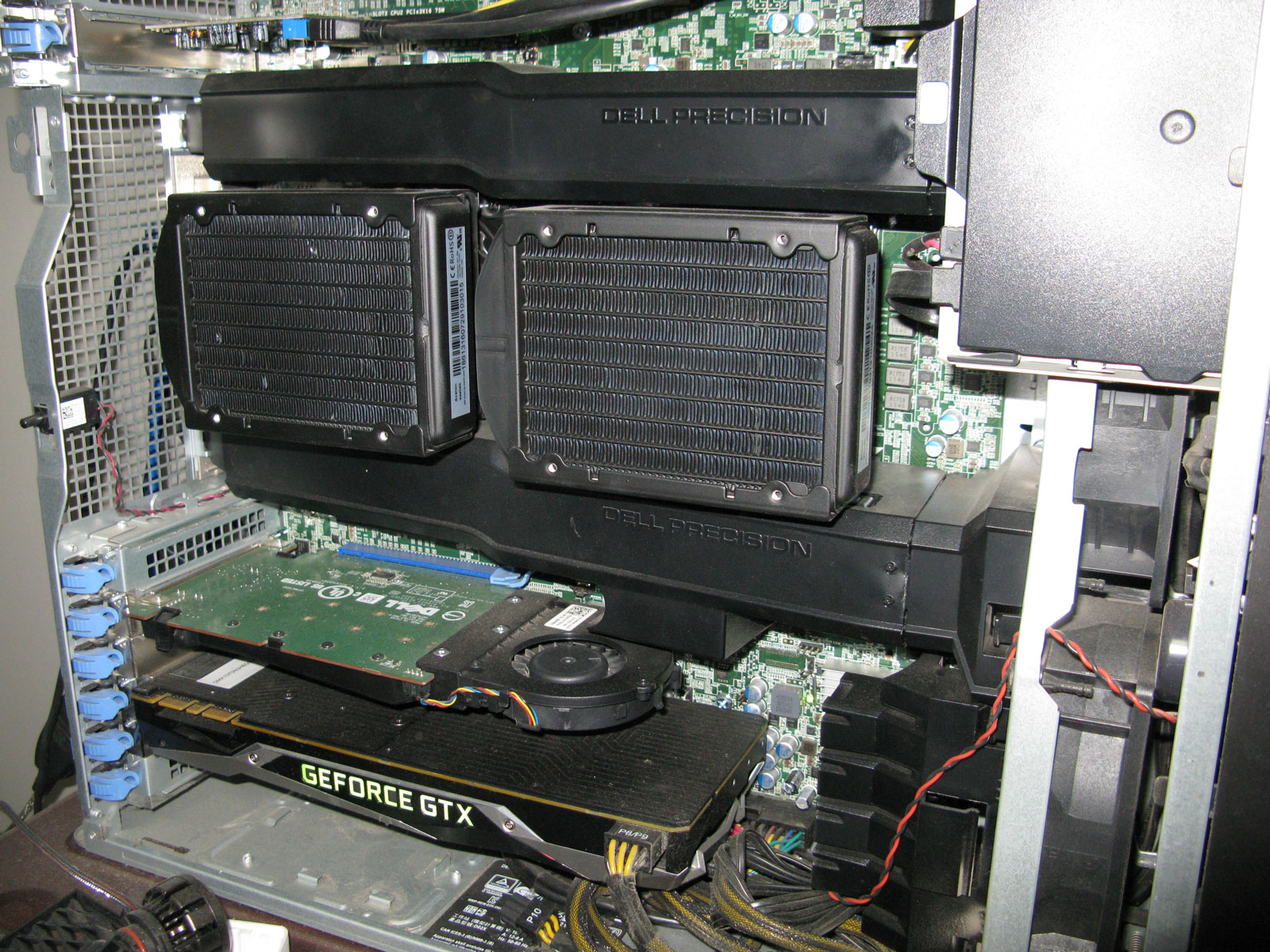

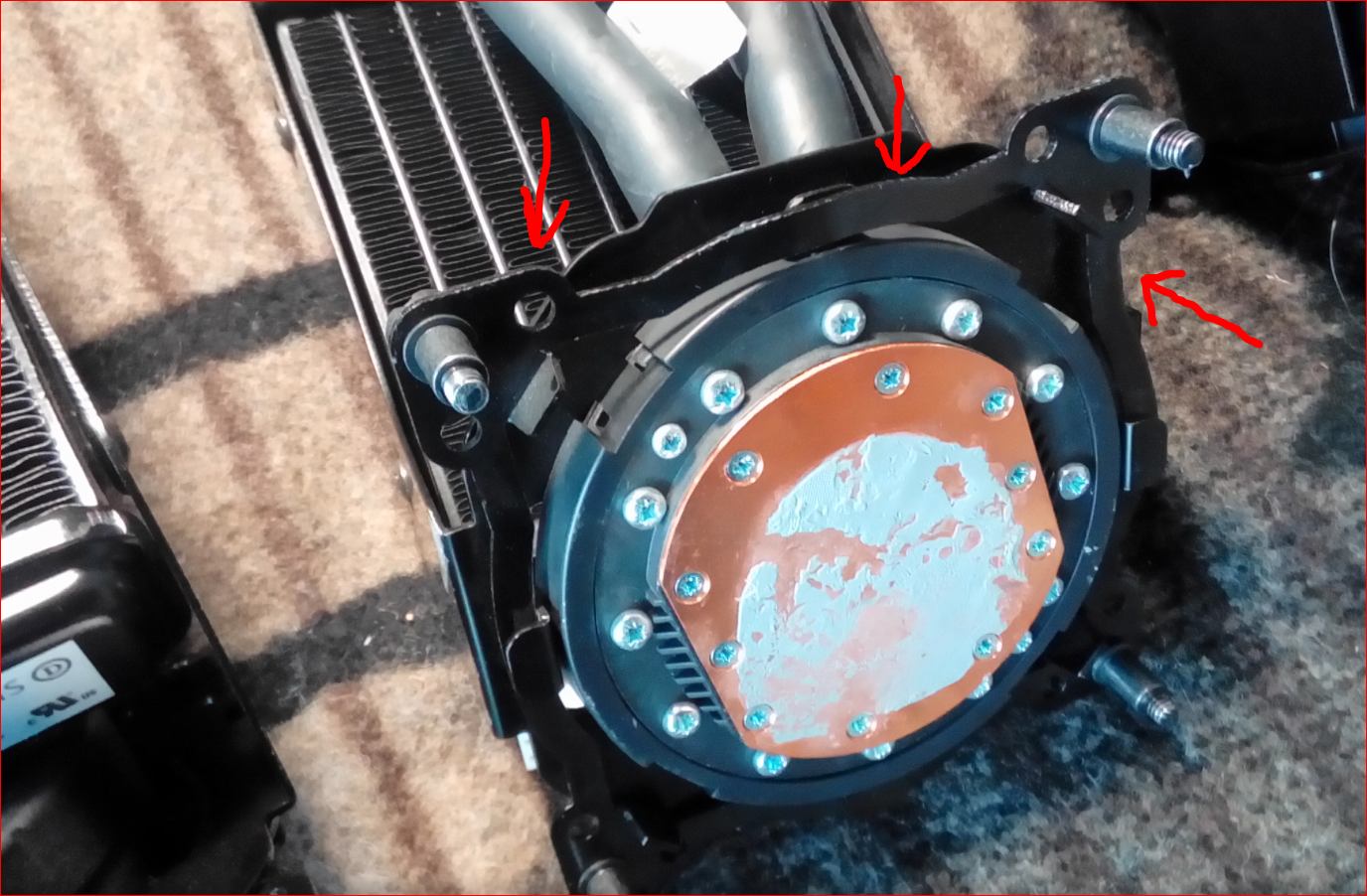

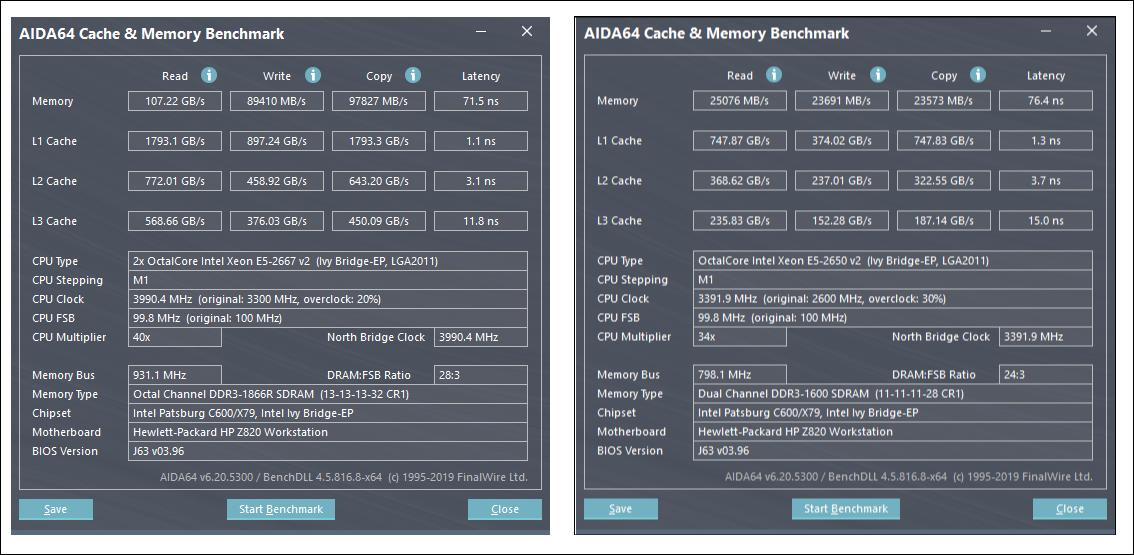

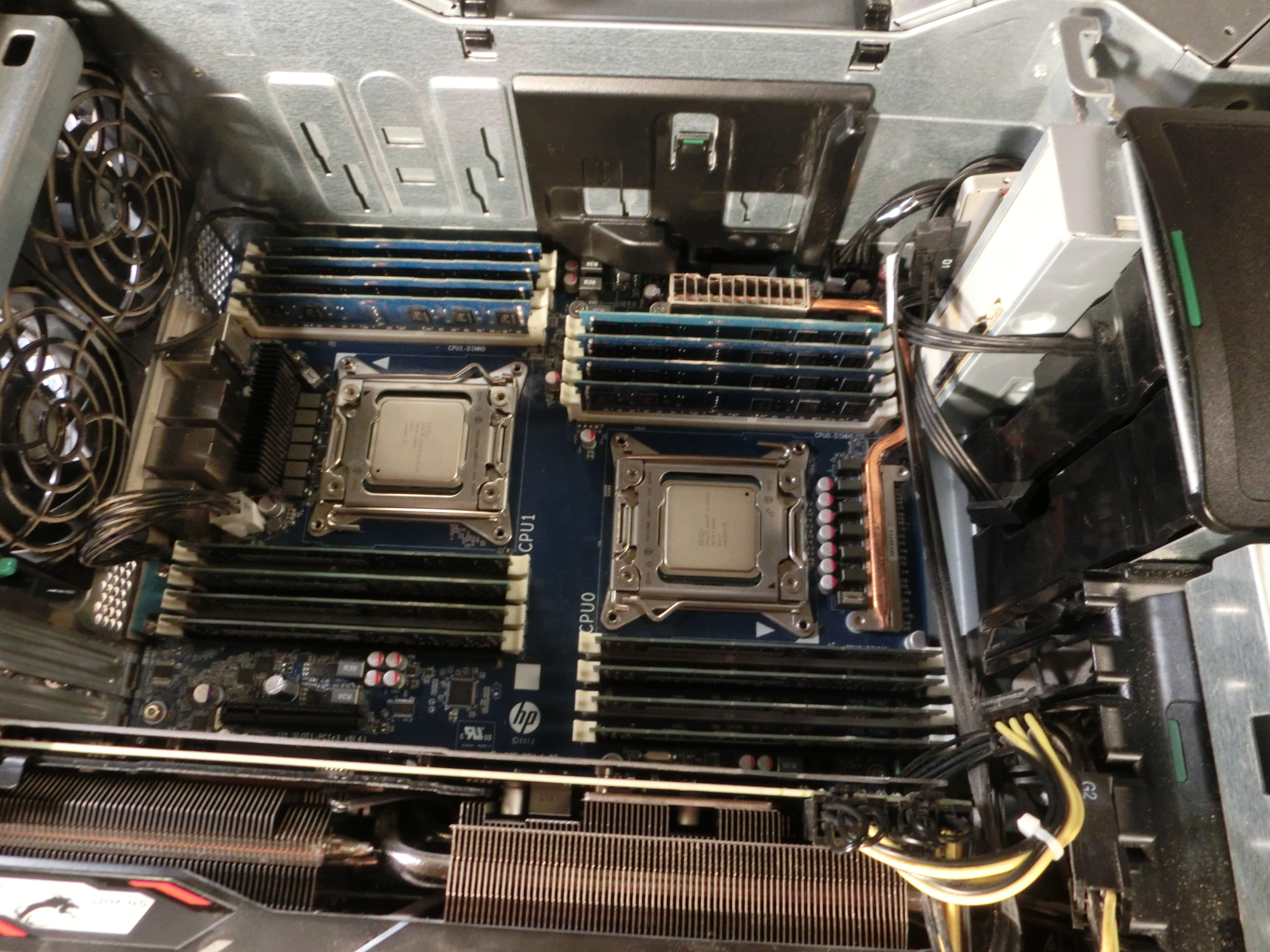

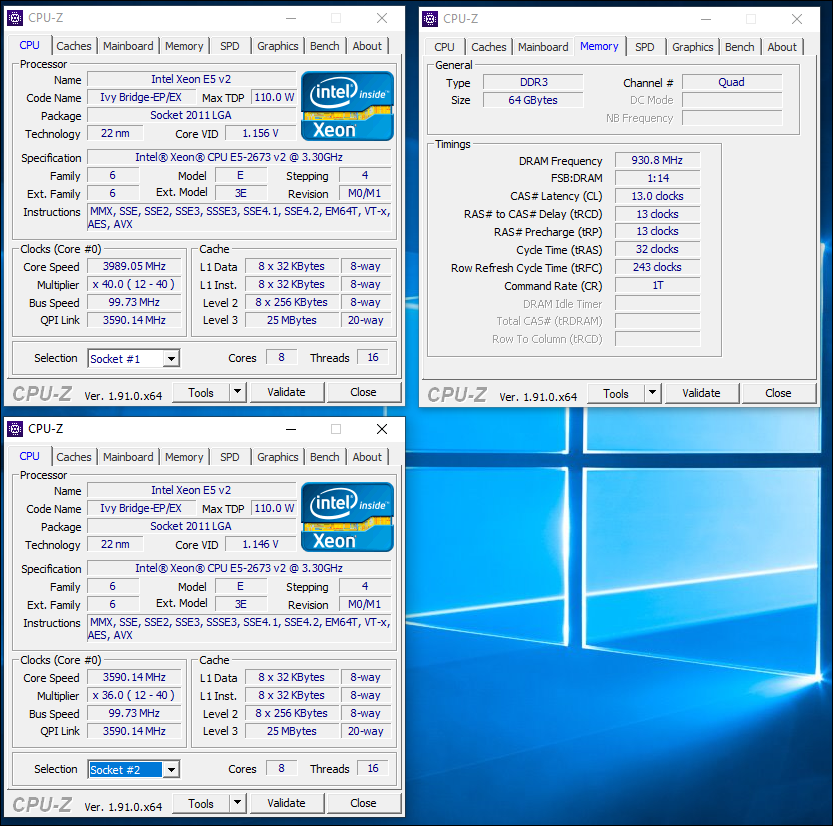

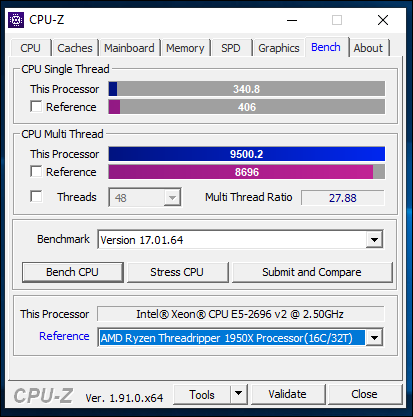

Older pics but you'll get the idea.

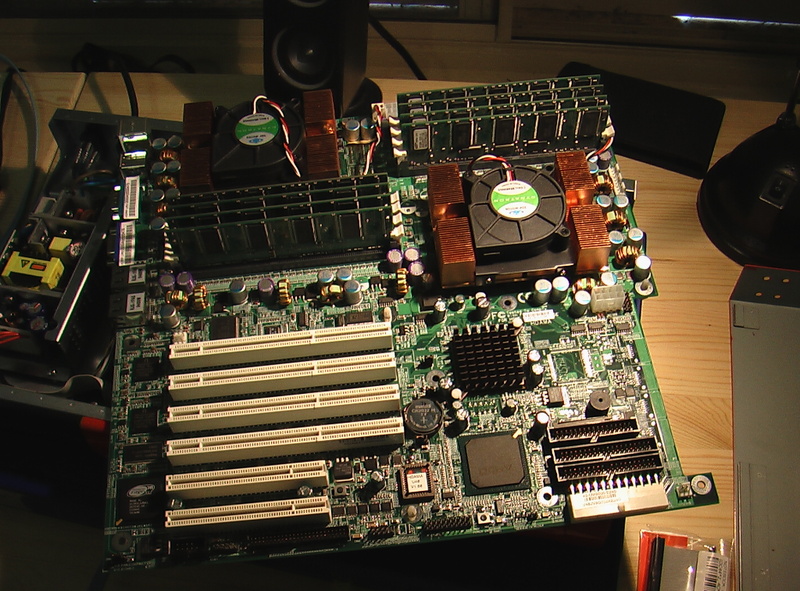

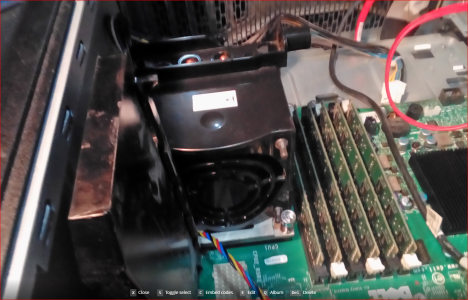

Corsair 900D

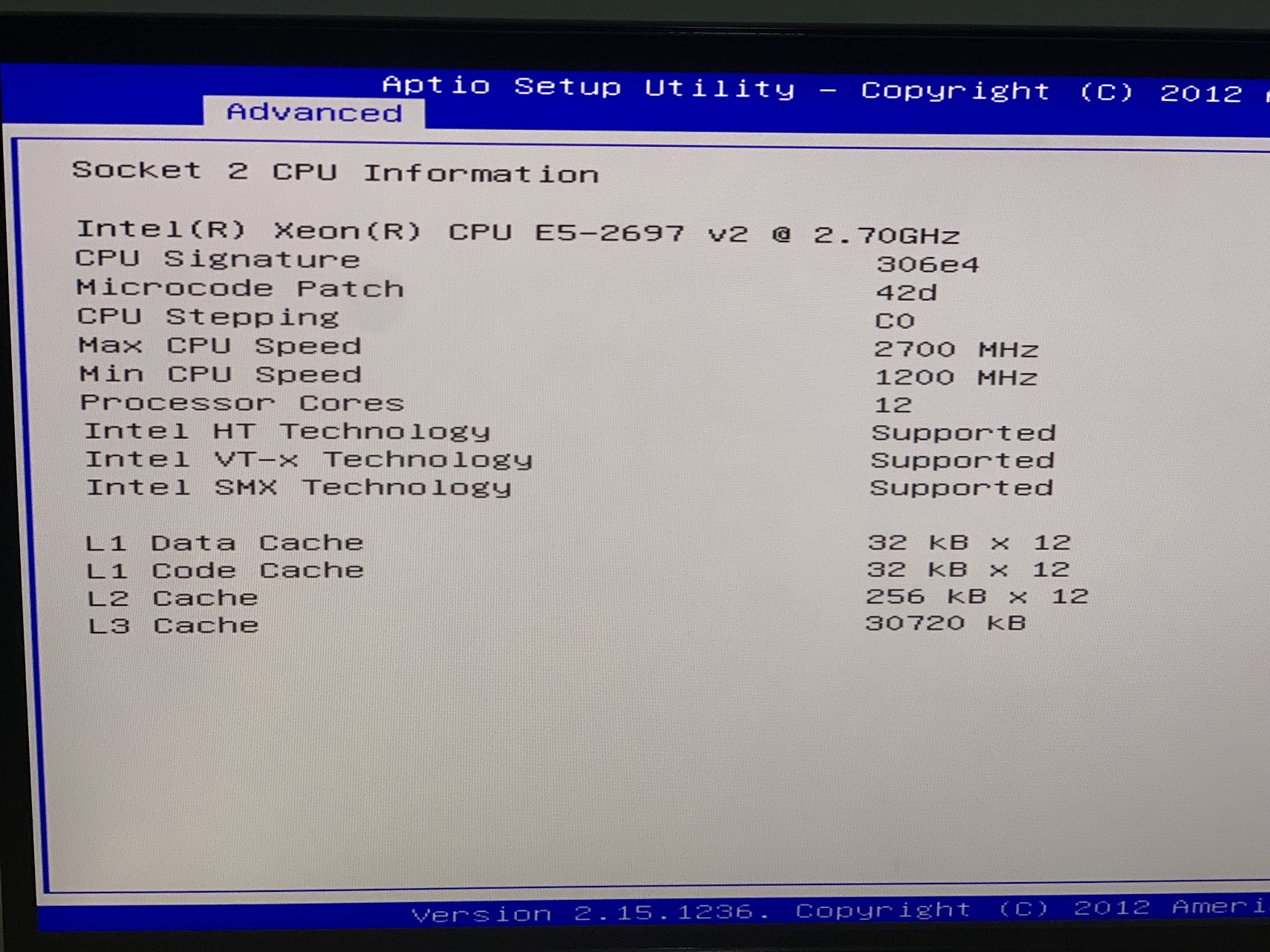

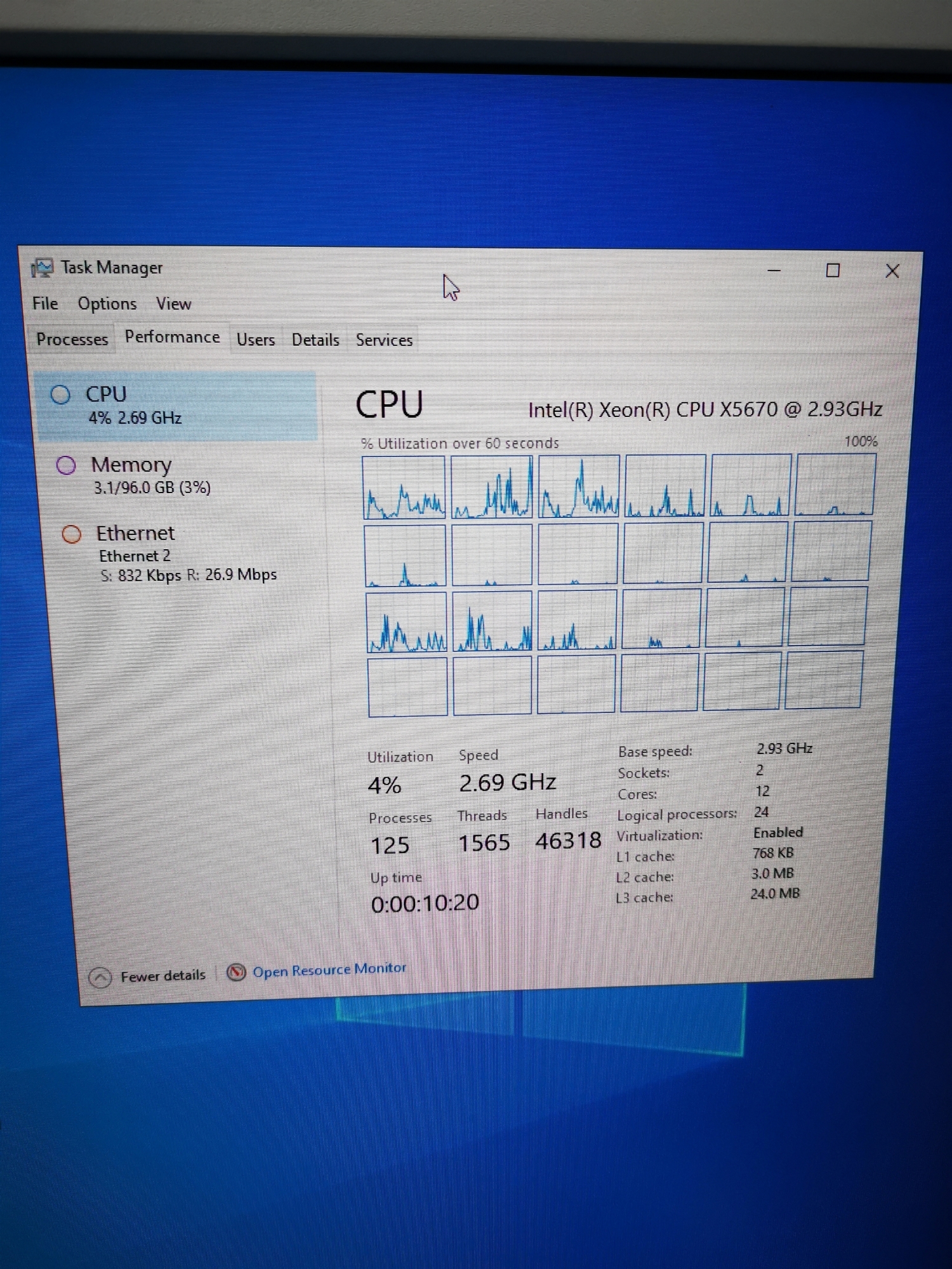

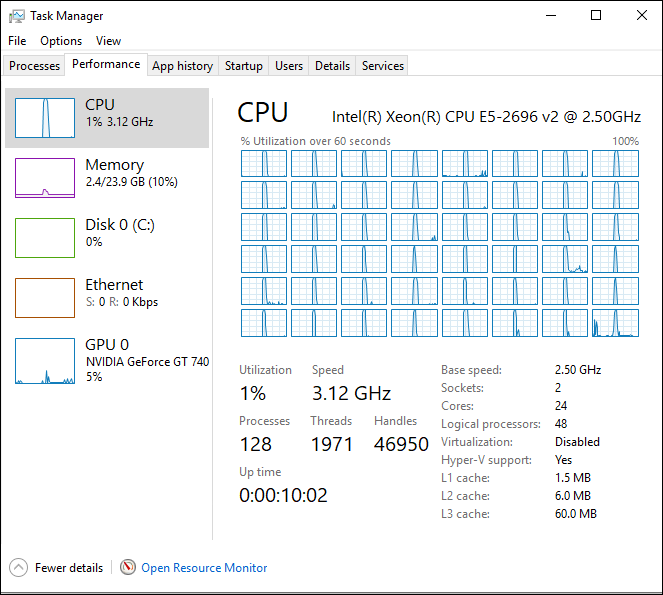

EVGA SR-2

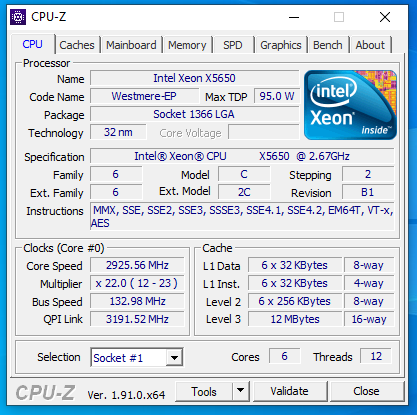

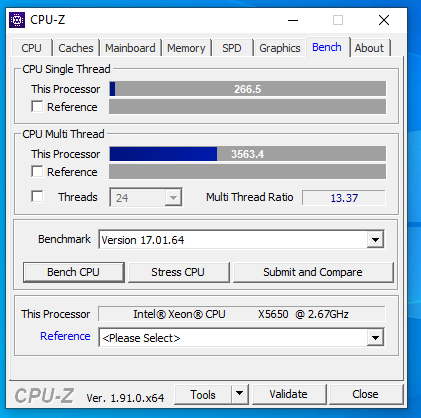

2 x Xeon X5675

48GB DDR3 1600MHz

2 x 120GB PNY SSD RAID0

2 x Titan V

1 x Titan Xp

1 x RTX 3090 KINGPIN

EVGA 1600 G+


I was curious how you make use of such a diverse mix of video cards in the same build. Are you running it in "containers" and vGPU / GRID?

Clean build
