Usual_suspect

Limp Gawd

- Joined

- Apr 15, 2011

- Messages

- 317

And in came the AMD fanboys...

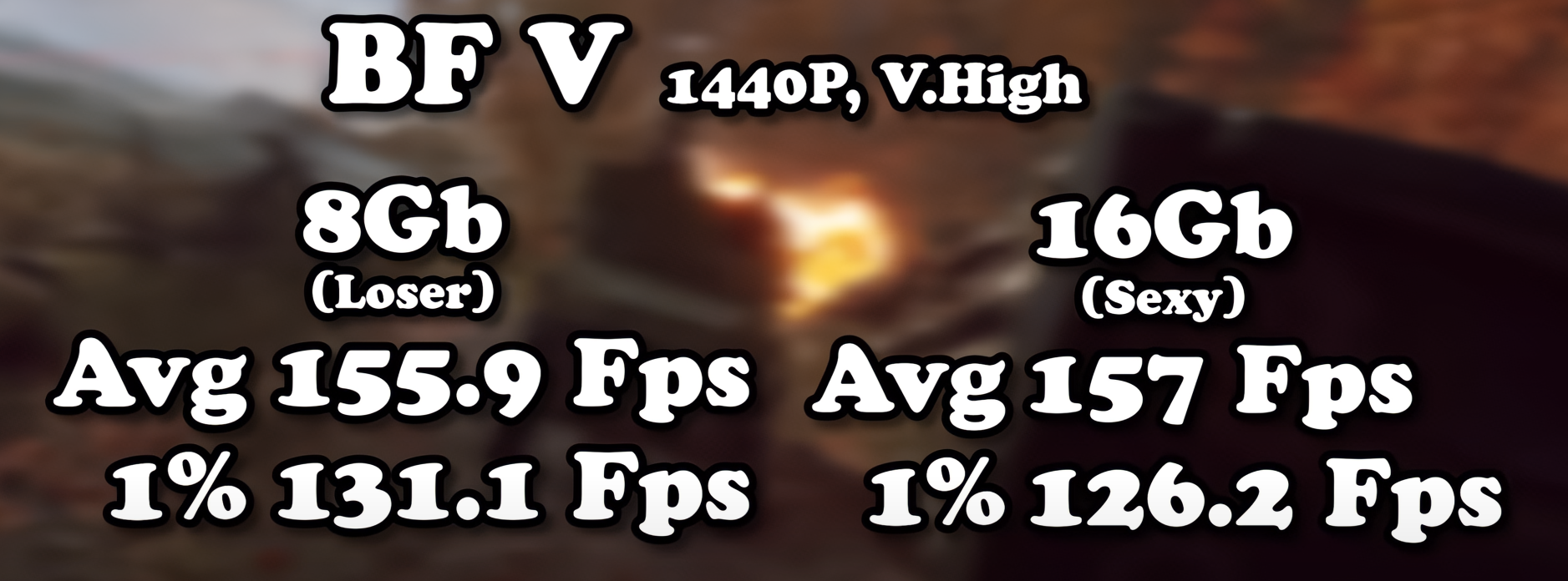

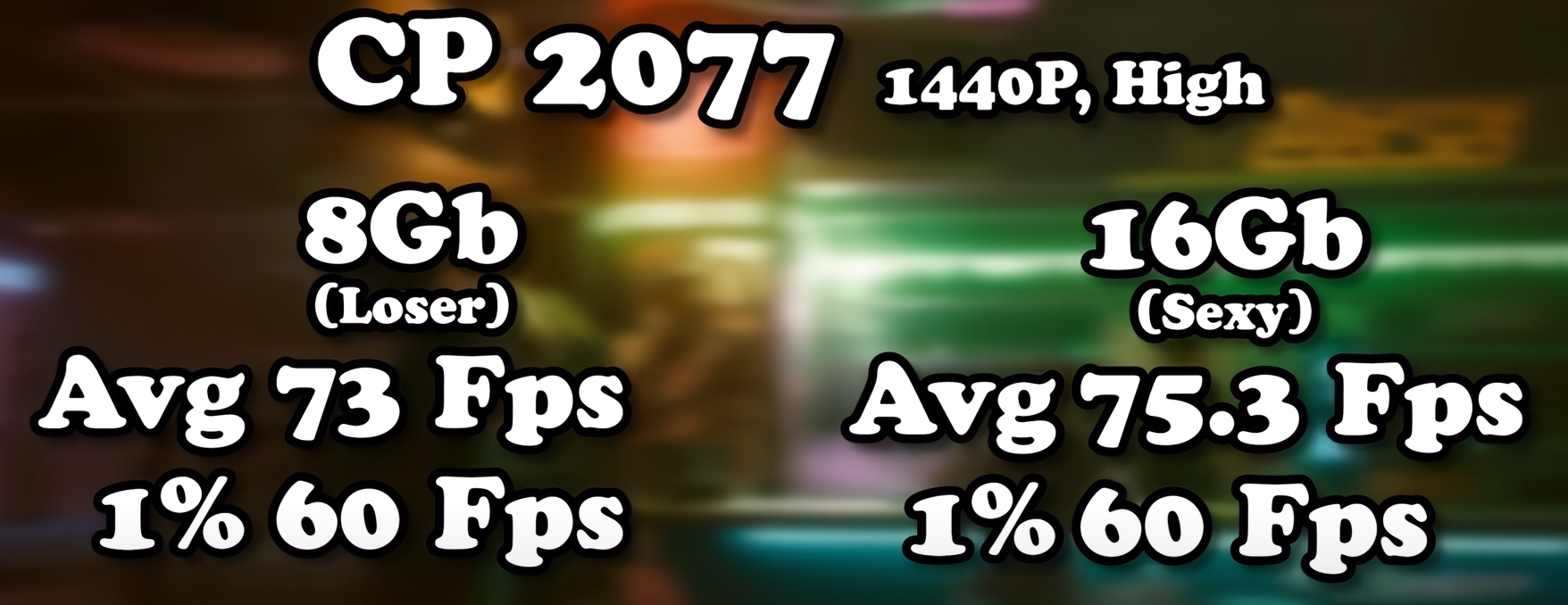

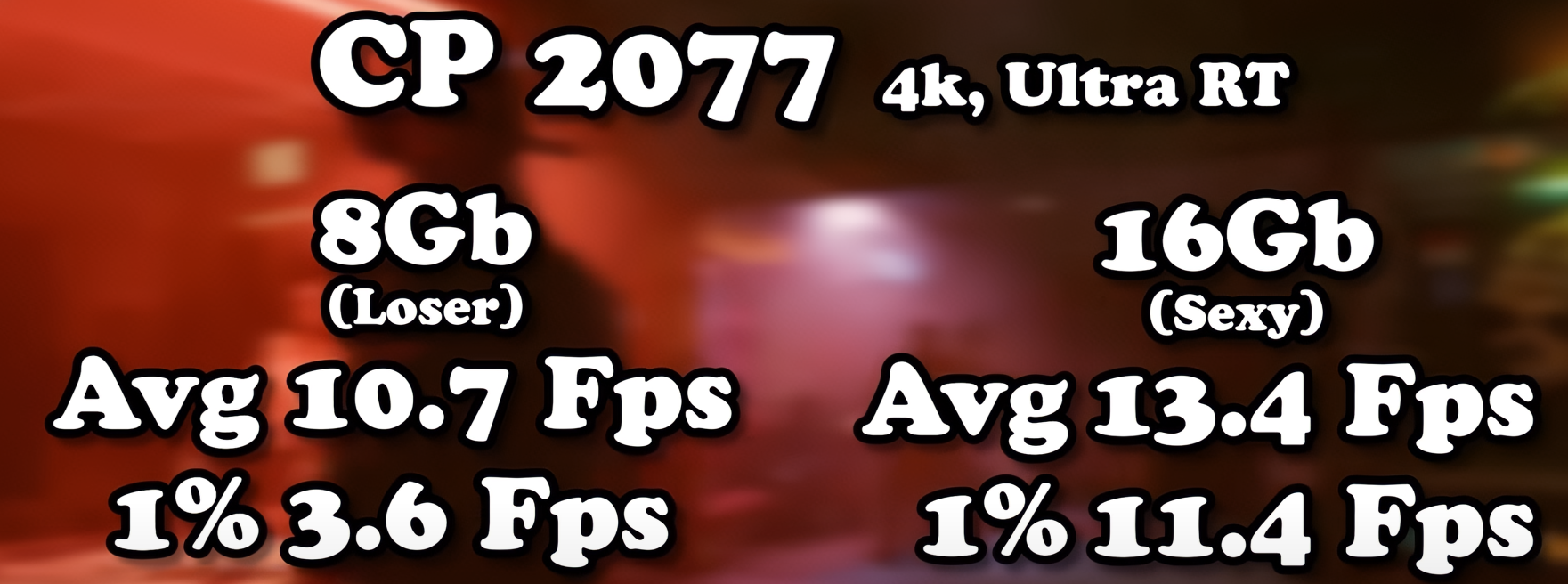

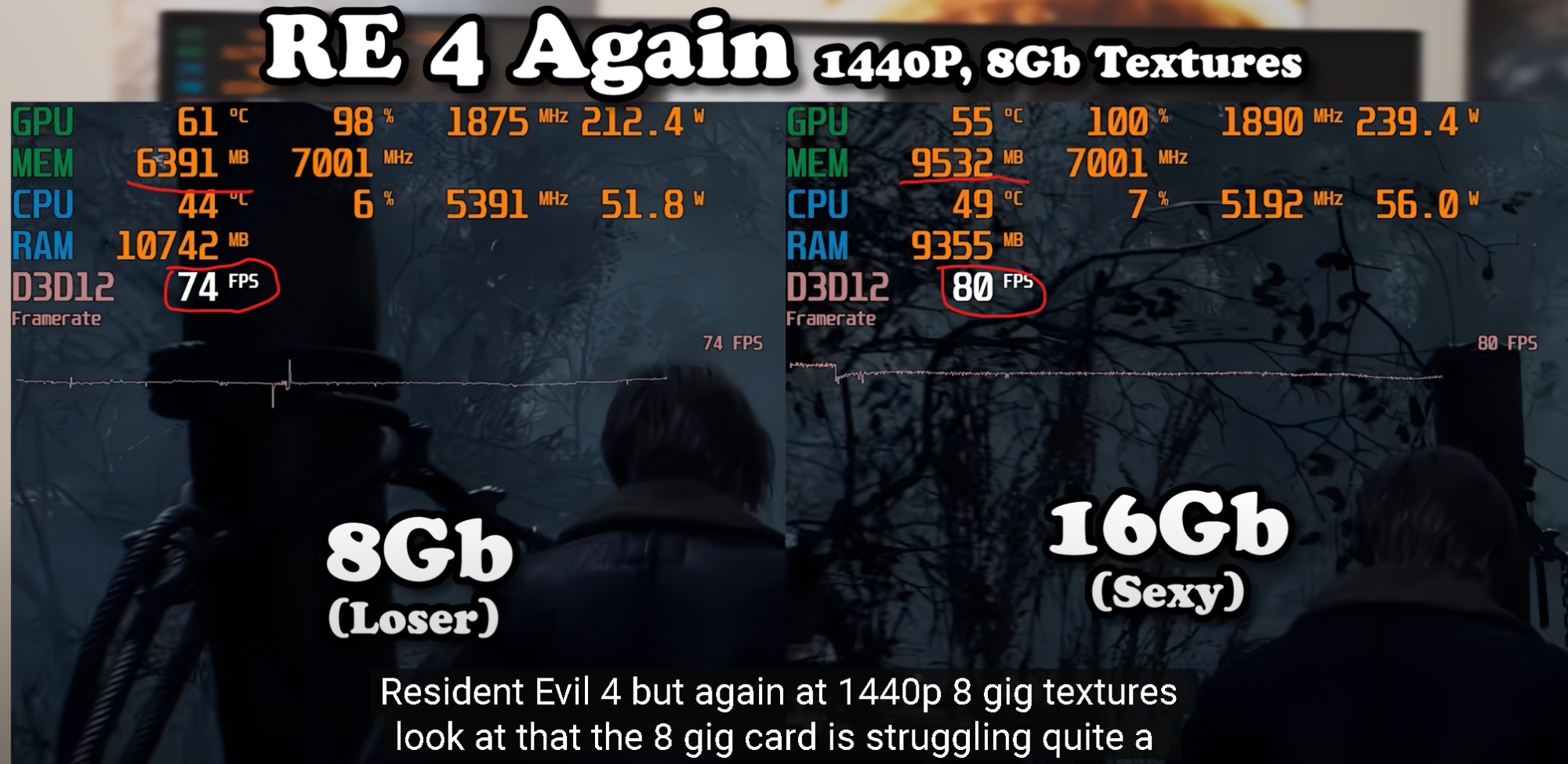

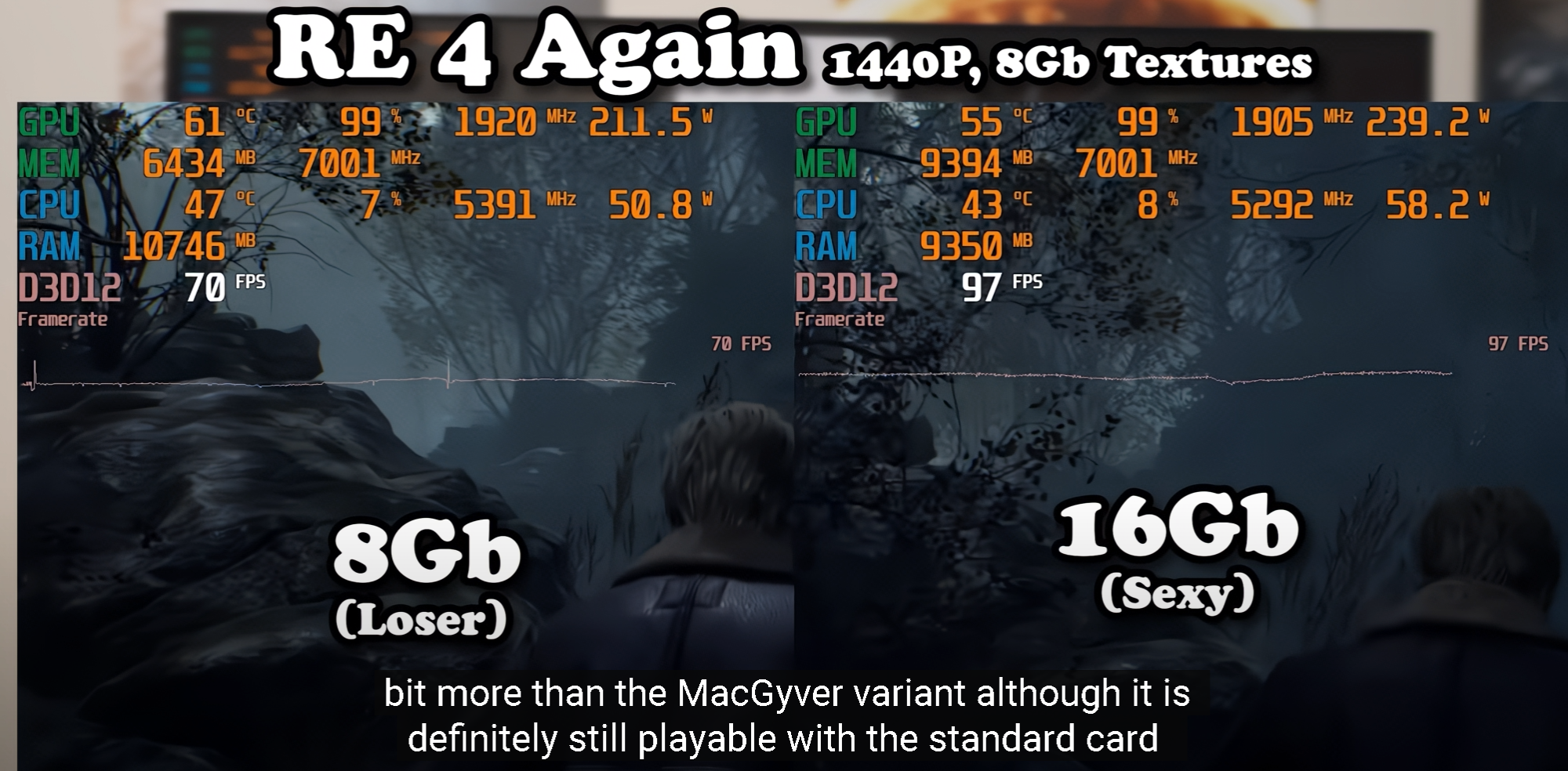

I'll curse Nvidia the day my 4070 Ti actually runs into performance issues due to VRAM limitations, but chances are that won't be for at least another 2 years. By then it's expected that my GPU just isn't going to be able to do Ultra settings at 1440p anymore due to graphical progression. Even then I'll happily drop them down to High, which is still FAR superior to anything a console has to offer, and if Frame Gen is available, I'll just enjoy the nice, smooth experience it provides.

I'll be interested to see how AMD's FG knock-off looks in a couple of years, since by that time Frame Gen will have gotten better on the Nvidia side, like DLSS did.

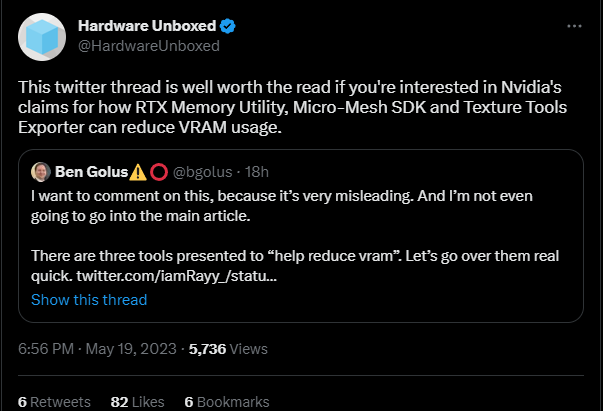

Oh, and to the people putting it on Nvidia when it comes to these broken-ass ports coming out: no. Neither Nvidia nor AMD should give these game publishers any more reason to release broken products. Brute forcing isn't a viable solution, as the issue will only compound. Also, to these very same people: if the publishers care about the revenue generated by PC ports, they should look into releasing games that work, since a majority of the PC graphics market is owned by Nvidia, and the majority of those people use GPUs with 8GB or less. So if they want the market penetration and sales numbers, they need to work with the hardware manufacturers, not against them. Anyone who honestly expects hardware manufacturers to cater to game developers obviously doesn't see how that'll end up hurting the PC gaming industry, given how expensive shit would get real quick. If you think the 40-series is vastly overpriced, imagine if companies like Nvidia and AMD had to release GPUs with a baseline of 24GB and a top end of 48GB... pair that with TSMC's ever-rising costs, and that right there would make the 40-series look like a discount.
