Hi,

I'm looking for opinions on a good RAID controller for RAID 1 in IR mode, and for your experiences with the ones you've used.

I'm currently running ESXi 6.5/6.7 on a 2700X/X470 machine, and a RAID 1 array of Samsung 860 EVO 1TB drives on an LSI 9211-8i is very slow.

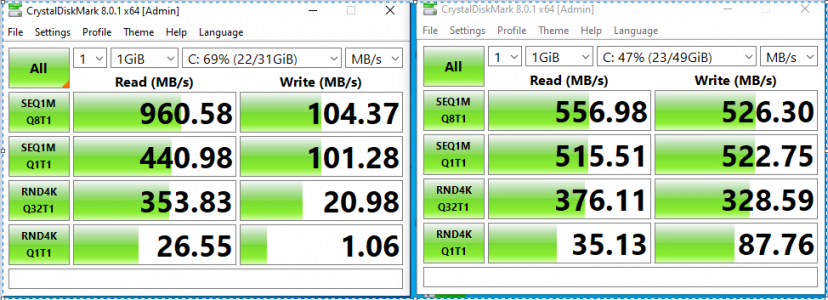

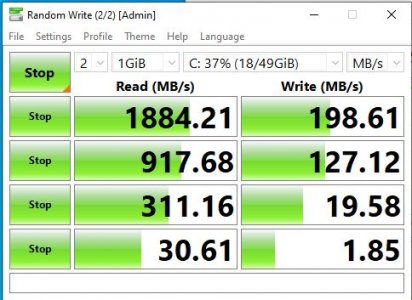

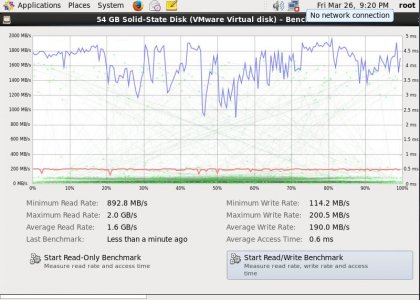

CrystalDiskMark (Windows), hdparm (Linux), and some real-world tests (restoring a MySQL dump) are all significantly slower than a single 850 EVO 250GB on a motherboard SATA port.

I know the RAID card will add some overhead, but I wasn't expecting the array to be 50% slower.
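For anyone who wants to reproduce the comparison on the Linux side, here's a rough Python sketch (my own, not a standard tool) that times a sequential read of a file or block device and works out the percentage slowdown. The function names are just placeholders. Reading a raw device such as a `/dev/sdX` path needs root, and for a regular file the OS page cache can inflate the result, so treat it as a ballpark alongside hdparm or CrystalDiskMark, not a replacement.

```python
import os
import time


def sequential_read_mb_s(path, block_size=1024 * 1024, max_bytes=None):
    """Read `path` front to back in `block_size` chunks and return MB/s.

    Rough sketch only: for a regular file the data may come from the page
    cache instead of the disk, which makes the number look too good.
    If `max_bytes` is set, reading stops once at least that much was read.
    """
    total = 0
    fd = os.open(path, os.O_RDONLY)
    try:
        start = time.perf_counter()
        while max_bytes is None or total < max_bytes:
            chunk = os.read(fd, block_size)
            if not chunk:  # end of file/device
                break
            total += len(chunk)
        elapsed = time.perf_counter() - start
    finally:
        os.close(fd)
    if elapsed == 0:
        return float("inf")  # too small/fast to time meaningfully
    return total / elapsed / 1e6  # decimal megabytes per second


def percent_slower(baseline_mb_s, candidate_mb_s):
    """How much slower `candidate` is than `baseline`, as a percentage."""
    return (1 - candidate_mb_s / baseline_mb_s) * 100
```

For example, `percent_slower(500, 250)` gives `50.0`, which is the kind of gap I'm seeing between the array and the single onboard drive.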

Some questions:

a. Which RAID controller works well for RAID 1/10 under ESXi for database use? I'm currently looking at either the 9440-8i or the 9361-8i, both of which are on the ESXi compatibility list.

b. Why is the RAID array slow? When I run CrystalDiskMark on bare-metal Windows 10 with the LSI 9211 RAID 1 volume formatted as NTFS, the results are close to those of a SATA drive plugged into the motherboard, which is more in line with what I expected.

Thanks!

I'm thinking of trying some Lenovo 530-8i cards, which are MegaRAID 9440-8i cards, to be run purely in IR mode for RAID 1/10.
