
GeForce RTX 2060 VRAM Usage, Is 6GB Enough for 1440p?

- Thread starter happymedium


Modred189

Can't Read the OP

- Joined

- May 24, 2006

- Messages

- 16,318

Thanks everyone for this thread. Very useful to me.

One question: is there any indication whether the performance hit from ray tracing is RAM-limited or core-count-limited? It isn't clear to me.

I'm a 1080p gamer, so the 2060 seems like a good upgrade to my aging 970 FE.

One question: is there any indication that performance hits in ray tracing is ram limited or core-count limited. It doesn't seem clear to me.

I'm a 1080p gamer, so the 2060 seems like a good update to my aging 970 FE

I picked up an MSI Ventus 2060 for benchmarking, and I thought it did pretty well at 1440p with a Ryzen 2600 in the 9 games that I tested.

Now I'm small-time but I do enjoy running benchmarks and sharing/comparing info:

Good work, man, and we all appreciate you not having some weird techno music in it. Your videos might get more attention if you compared the card with at least one other similar card, such as the Vega 56 or GTX 1070 Ti.

OKC Yeakey Trentadue

[H]ard|Gawd

- Joined

- Nov 24, 2021

- Messages

- 1,205

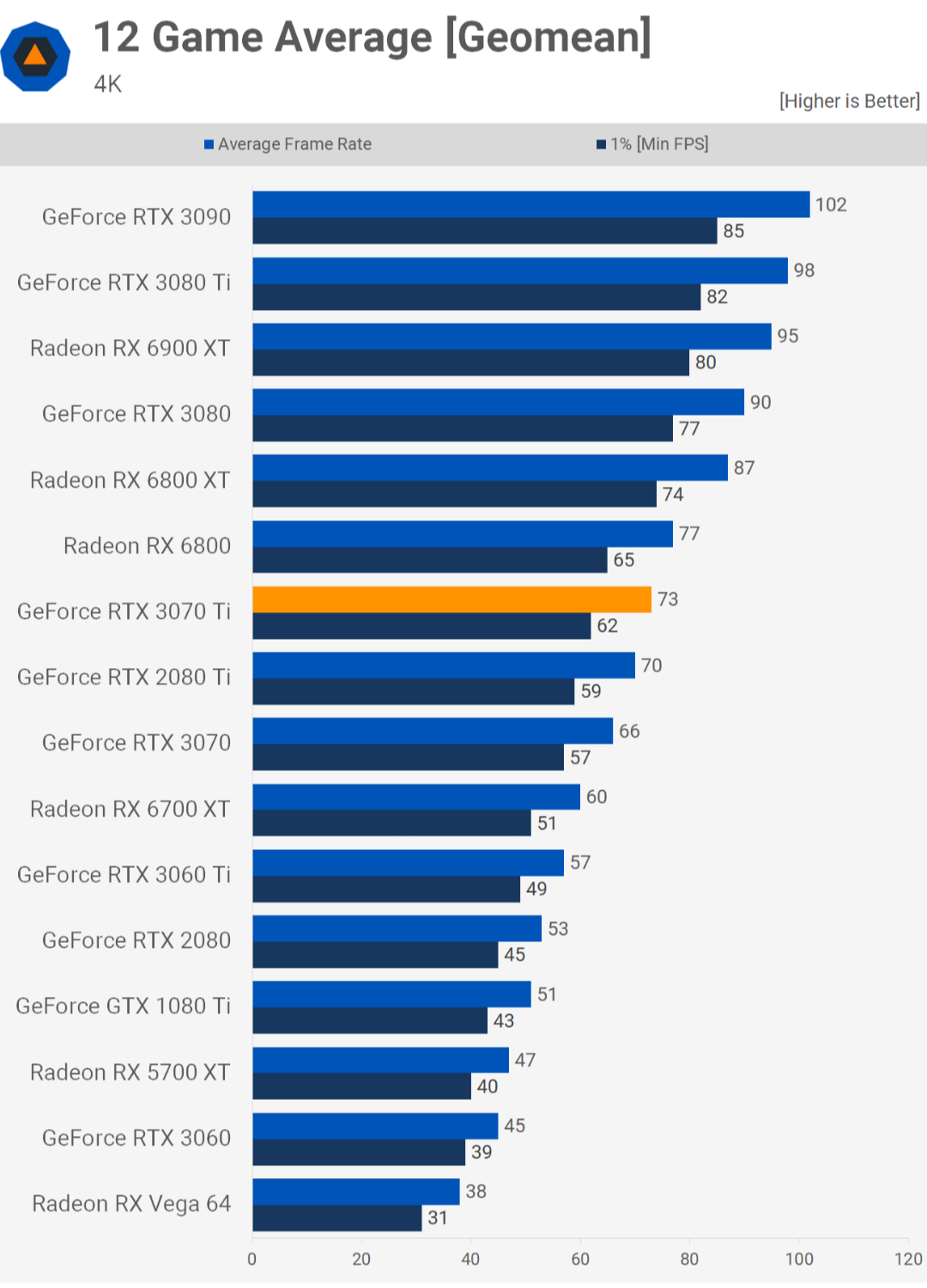

Yes, it is a thread necro, but very relevant today. After three years, it looks like the 6GB frame buffer was not much of an issue for the 2060. It is actually a bit amusing to look back at this thread.

The new card, the RTX 2060 12 GB, has the same memory bandwidth as the old 6 GB model with double the VRAM. However, it has the same core count as the 8 GB Super model.

So Steve tested the "new" card against those cards. The results showed the 12 GB model performing closer to the 6 GB model than to the higher-bandwidth 8 GB model, especially at 1440p, which strongly suggests that bandwidth is the bottleneck for both the 6 GB and 12 GB cards. Had there been a theoretical 6 GB card on a 384-bit bus (yeah, goofy), it is possible that card would have beaten them all.
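For reference, peak memory bandwidth is roughly the bus width in bytes times the per-pin data rate. This sketch assumes every card runs 14 Gb/s GDDR6 (the reference memory speed; factory-overclocked models differ), so only the bus width changes; `bandwidth_gbs` is a hypothetical helper, and the 384-bit card is the theoretical one from the post.

```python
def bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width in bytes x per-pin data rate in Gb/s."""
    return bus_width_bits / 8 * data_rate_gbps


# Assumption: all cards use 14 Gb/s GDDR6, so only the bus width differs.
CARDS = {
    "RTX 2060 6 GB (192-bit)": 192,
    "RTX 2060 12 GB (192-bit)": 192,
    "RTX 2060 Super 8 GB (256-bit)": 256,
    "Hypothetical 6 GB card (384-bit)": 384,
}

for name, bus in CARDS.items():
    print(f"{name}: {bandwidth_gbs(bus, 14.0):.0f} GB/s")
```

Under those assumptions both 2060 variants land at 336 GB/s, the Super at 448 GB/s, and the hypothetical 384-bit card at 672 GB/s.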

One possible caveat: he ran the test with 32 GB of system RAM. I would like to see it done with 16 GB of RAM as well, because the 3 GB GTX 1060 was affected by having less system RAM while the 6 GB GTX 1060 was not. Curious to see if something similar happens at a larger scale.

Furious_Styles

Supreme [H]ardness

- Joined

- Jan 16, 2013

- Messages

- 4,520


I doubt the system memory will matter much. They don't run benchmarks with 100 browser tabs open.

Sir Beregond

Gawd

- Joined

- Oct 12, 2020

- Messages

- 943

VRAM capacity was never the problem for a card of this performance caliber. You don't buy a 60-class card to game well in 4K, especially one with a 192-bit bus. I mean really now. You'll hit plenty of other performance bottlenecks long before VRAM capacity becomes one.

jobert

[H]ard|Gawd

- Joined

- Dec 13, 2020

- Messages

- 1,575

You need to actually watch the Digital Foundry video on Battlefield, because it was indeed limited by VRAM, and that was 2 years ago and only at 1080p. For most games, yes, you would certainly be correct, but there will always be some exceptions. The same goes for 8 GB now, as there are at least 4 games that require lowering settings that could otherwise run fine with more VRAM.

OKC Yeakey Trentadue

[H]ard|Gawd

- Joined

- Nov 24, 2021

- Messages

- 1,205


What games are those, and how do we know the higher settings are too much for the cores and not the VRAM? Right now the RTX 3070 is possibly in a worse position than the RTX 2060 was then.

jobert

[H]ard|Gawd

- Joined

- Dec 13, 2020

- Messages

- 1,575

The easiest and quickest way is to turn down textures, as texture settings have almost zero impact on anything other than VRAM. That is the case for Doom Eternal, Far Cry 6, Wolfenstein: Youngblood and a few other games that I can't think of right now. There are also a couple of games out there that dynamically adjust settings on the fly if you don't have enough VRAM, so you think you're all right because you're not getting stuttering, but you're actually losing some detail. In the end it's not that big a deal, but there's something off about a brand-new product that's already limited on the day it's released.
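To put rough numbers on why texture quality mostly costs VRAM, here is a back-of-the-envelope footprint calculation. It assumes each step down in texture quality halves the texture resolution (not every game works this way), uses 32 bits per pixel for uncompressed RGBA8 and 8 bits per pixel for BC7-compressed textures, and ignores block-compression padding on the tiniest mip levels. `texture_bytes` is a hypothetical helper.

```python
def texture_bytes(width: int, height: int, bits_per_pixel: int, mipmaps: bool = True) -> int:
    """Approximate VRAM footprint of one 2D texture, optionally with a full mip chain.

    Each mip level halves both dimensions (never below 1), so a full chain adds
    roughly one third on top of the base level. Compression padding is ignored.
    """
    total_bits = 0
    w, h = width, height
    while True:
        total_bits += w * h * bits_per_pixel
        if not mipmaps or (w == 1 and h == 1):
            break
        w, h = max(1, w // 2), max(1, h // 2)
    return total_bits // 8


MIB = 1024 * 1024
for label, size, bpp in [
    ("4096x4096 RGBA8", 4096, 32),
    ("4096x4096 BC7", 4096, 8),
    ("2048x2048 BC7", 2048, 8),
]:
    print(f"{label}: {texture_bytes(size, size, bpp) / MIB:.1f} MiB")
```

With mips, that works out to about 85 MiB, 21 MiB and 5 MiB respectively: halving the resolution cuts memory roughly 4x while barely touching the shader workload, which is why the texture slider is a decent VRAM test.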

OKC Yeakey Trentadue

[H]ard|Gawd

- Joined

- Nov 24, 2021

- Messages

- 1,205


Ah yes, id Software games eat up VRAM for sure. Given a set bandwidth, it's hard to get the "perfect" amount of VRAM at certain performance levels. 6 GB is said to be too little for the 2060 and 12 GB is said to be way too much. Same for the 8 GB on the 3070 and 16 GB on the upcoming 3070 Ti.

The only way around this is a hybrid setup with mismatched module sizes, which GPU manufacturers have rarely done.
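A simplified model of such a hybrid (mixed-density) layout: each chip contributes one 32-bit slice of the bus, data is striped across every chip until the smallest ones are full, and whatever is left lives only on the larger chips, with proportionally less bandwidth. The chip sizes and the 56 GB/s per-chip figure (a 32-bit slice at an assumed 14 Gb/s) are illustrative assumptions, `memory_segments` is a hypothetical helper, and real memory controllers may map addresses differently.

```python
def memory_segments(chip_sizes_gb: list[float], chip_bandwidth_gbs: float) -> list[tuple[float, float]]:
    """Split a mixed-density memory layout into (size_gb, bandwidth_gbs) segments.

    Simplified model: data is striped across every chip that still has free space,
    so each segment's bandwidth is the per-chip bandwidth times the chips holding it.
    """
    segments = []
    filled = 0.0
    for level in sorted(set(chip_sizes_gb)):
        chips = sum(1 for size in chip_sizes_gb if size >= level)
        depth = level - filled  # GB per chip that belongs to this segment
        segments.append((depth * chips, chip_bandwidth_gbs * chips))
        filled = level
    return segments


# Hypothetical 192-bit card: three 2 GB chips and three 1 GB chips (9 GB total).
for size, bw in memory_segments([2.0, 2.0, 2.0, 1.0, 1.0, 1.0], 56.0):
    print(f"{size:.0f} GB at {bw:.0f} GB/s")
```

In this model the hypothetical 9 GB card gets 6 GB at the full 336 GB/s and the last 3 GB at half that, which is the trade-off such a layout implies.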

N4CR

Supreme [H]ardness

- Joined

- Oct 17, 2011

- Messages

- 4,947

Same old thing as usual: VRAM typically limits your card more than its speed does. Never skimp on VRAM if you want to use the card for a few years or longer. The only exceptions are those weird, mega-gimped GDDR2(?)-era cards of the old days with lots of slow-ass, useless VRAM.

OKC Yeakey Trentadue

[H]ard|Gawd

- Joined

- Nov 24, 2021

- Messages

- 1,205

What?? Show us one benchmark where a 2060 12 GB beats a 2060 Super 8 GB.

Modred189

Can't Read the OP

- Joined

- May 24, 2006

- Messages

- 16,318

Also, wasn't there an Nvidia GPU series where they played games with bandwidth and VRAM capacity? Was it the 9600s? Bandwidth turned out to be a huge deal over capat...

FlawleZ

[H]ard|Gawd

- Joined

- Oct 20, 2010

- Messages

- 1,691

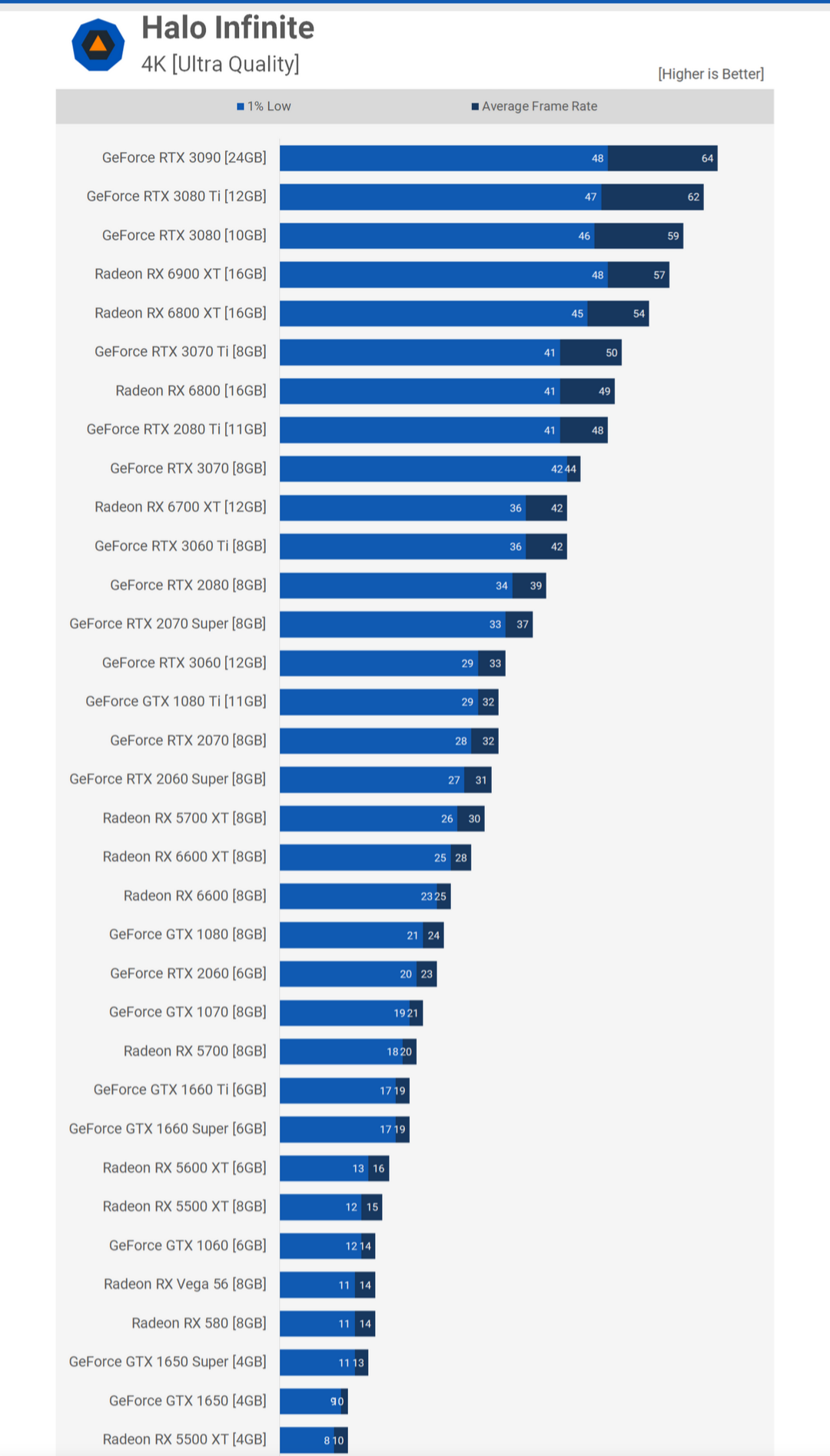

Playing Halo Infinite the other day at 4K max and even on the interior levels I'm seeing 9.2GB of VRAM used. My brother's RX6800 sees closer to 10GB used.

I don't really see the 6GB frame buffer as a problem for an RTX 2060, but for the 2080s and especially the 3070s, the 8GB frame buffer will most certainly be a problem as games use more and more VRAM.

OKC Yeakey Trentadue

[H]ard|Gawd

- Joined

- Nov 24, 2021

- Messages

- 1,205

Most certainly is a strong term. Doom Eternal was the only game I saw where the 2080 Ti scored a decisive victory over the 3070 Ti.

Remember, VRAM used or allocated is not the same as VRAM needed.

FlawleZ

[H]ard|Gawd

- Joined

- Oct 20, 2010

- Messages

- 1,691

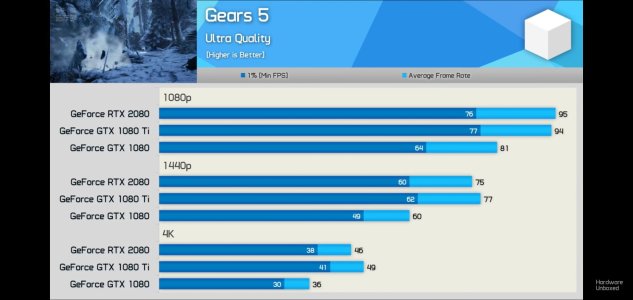

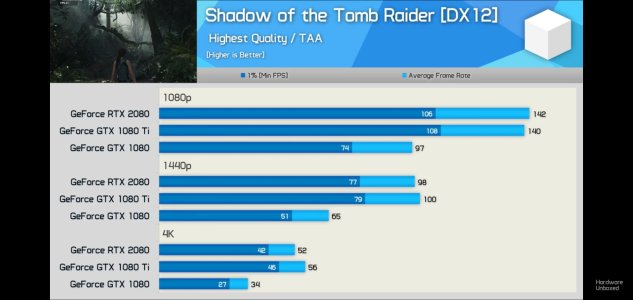

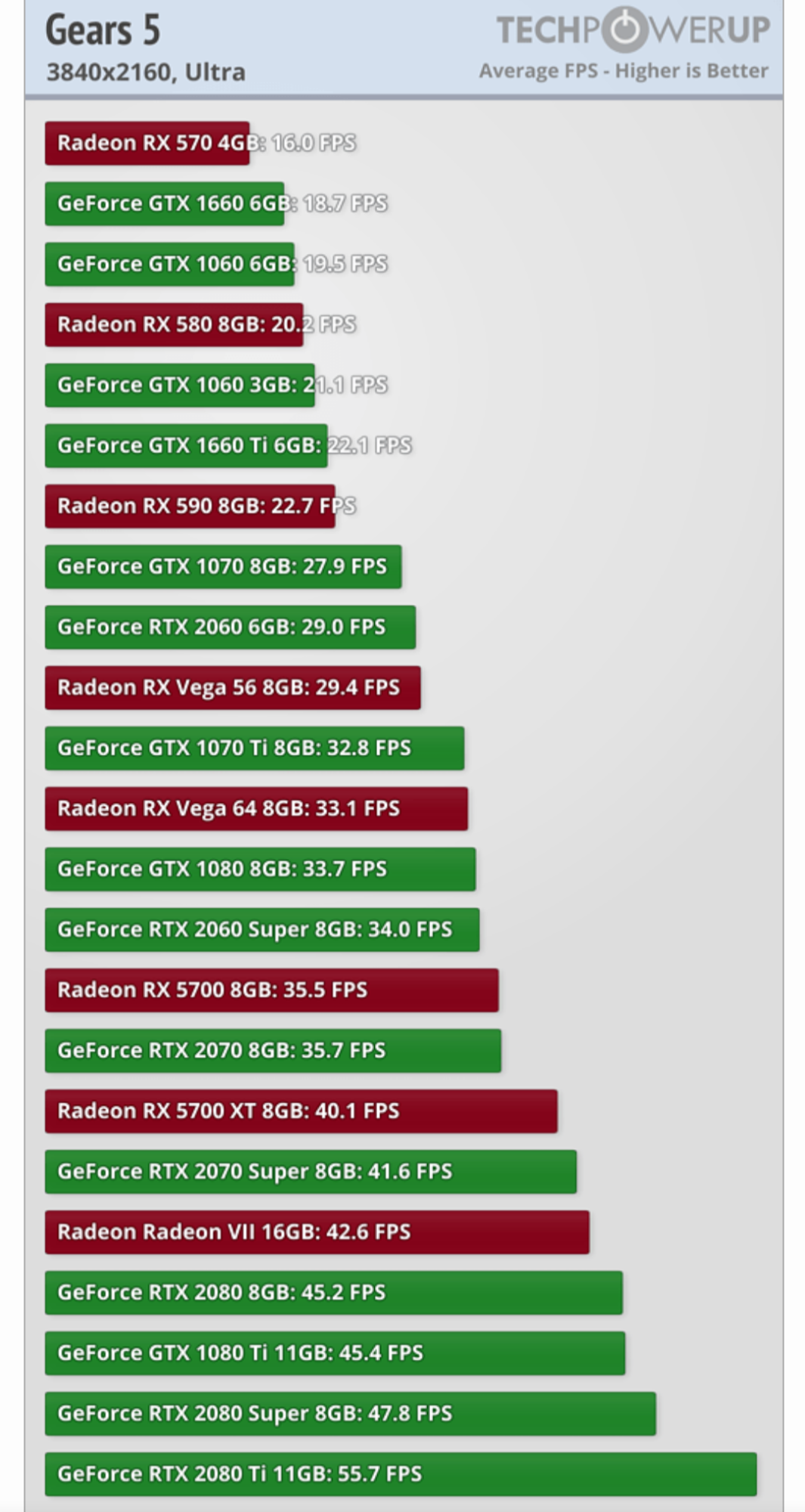

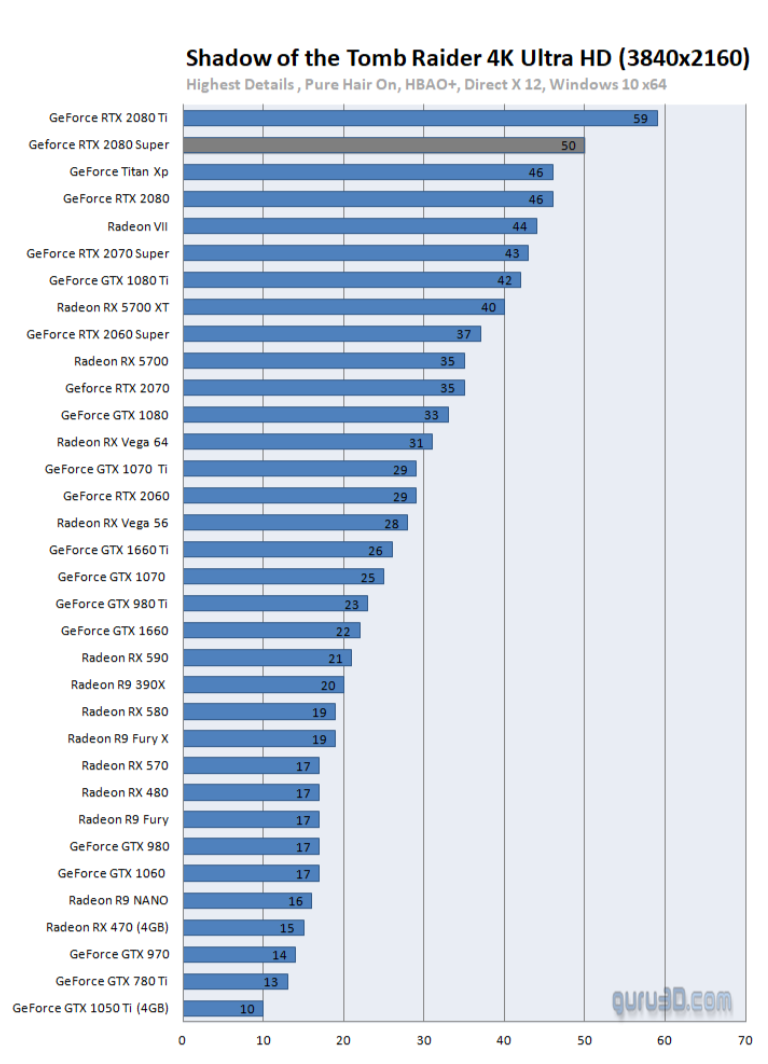

Gears 5 and Tomb Raider both benefit from having more than 8GB. The 1080 Ti beats the 2080 in these cases.

Gears 5 and Tomb Raider both benefit from having more than 8GB. 1080TI defeats 2080 in these cases.

I'm not sure I agree with those examples. When you run out of memory, your 0.1% lows take a shit, and you also get a lot of hitching. At least that's my experience with my older cards.
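Since averages can hide this, here is a minimal way to get average FPS plus 1% and 0.1% lows from a frame-time log. It uses one common definition (average the slowest N% of frames and convert to FPS); benchmarking tools differ, and some use a percentile instead. `fps_summary` is a hypothetical helper, and the frame times are made up to mimic a few VRAM-spill hitches.

```python
def fps_summary(frame_times_ms: list[float]) -> tuple[float, float, float]:
    """Return (average FPS, 1% low FPS, 0.1% low FPS) for a list of frame times in ms.

    "Low" here means the average frame rate over the slowest N% of frames (at least one).
    """
    n = len(frame_times_ms)
    avg_fps = 1000.0 * n / sum(frame_times_ms)
    slowest_first = sorted(frame_times_ms, reverse=True)

    def low(fraction: float) -> float:
        k = max(1, int(n * fraction))
        return 1000.0 * k / sum(slowest_first[:k])

    return avg_fps, low(0.01), low(0.001)


# Made-up run: 995 smooth 10 ms frames plus 5 hitches of 100 ms each.
frames = [10.0] * 995 + [100.0] * 5
avg, low1, low01 = fps_summary(frames)
print(f"avg {avg:.1f} FPS, 1% low {low1:.1f} FPS, 0.1% low {low01:.1f} FPS")
```

In this made-up run the average only drops to about 96 FPS, while the 1% low falls to about 18 FPS and the 0.1% low to 10 FPS, which is why lows are the better place to look for running out of memory.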

N4CR

Supreme [H]ardness

- Joined

- Oct 17, 2011

- Messages

- 4,947

Maybe in this case, if RAM speed is comparable and the GPU is already relatively taxed with 8GB, then it's less important. In most situations where I have run into this in the past (on flagships), the VRAM killed them before they were too slow.

Yes, this is what I am referring to. Except for situations where a card has stupidly slow but larger RAM, or a mediocre GPU to begin with, capacity has typically been the limiting factor for most cards I've owned (when the RAM is of similar speed). My 7970 was an OC monster, but I ran out of VRAM. 780 Ti owners know what I mean too.

290X same thing eventually.

My X800 XT was the same: it didn't have enough VRAM in the end. Hell, even when it was new, I couldn't run Ultra in Doom 3 smoothly at 1024x768. When you weren't VRAM-limited, the GPU still had enough power.

OKC Yeakey Trentadue

[H]ard|Gawd

- Joined

- Nov 24, 2021

- Messages

- 1,205


SotTR needed a few updates to take advantage of the new hardware. In both cases, the 8GB 2080 Super still managed to beat the 1080 Ti.

Again, I'm not denying that there are a handful of games that take a dive in performance due to a lack of VRAM at PLAYABLE settings for that performance level. It's just often assumed that this is the case when the cause may in fact be architectural or bandwidth limits.

I don't see how this shows that having more VRAM is helping.

OKC Yeakey Trentadue

[H]ard|Gawd

- Joined

- Nov 24, 2021

- Messages

- 1,205

I don't remember the 7970 running out of VRAM very often.

In fact, the 4 GB GTX 680, a card of similar performance, was rarely faster than the 2 GB version. I scanned the internet for any real performance advantages of the 6 GB 7970 over the 3 GB version and did not find any. Could be wrong though; it is a big internet.

Sir Beregond

Gawd

- Joined

- Oct 12, 2020

- Messages

- 943

I'm not saying there aren't exceptions to the rule, but that's just what they are: exceptions.

Don't get me wrong, I will always advocate for more VRAM if given a choice, like I think the 3070 should have been a 10GB card with the 3080 being a 12GB card from the get-go for example.

crazycrave

[H]ard|Gawd

- Joined

- Mar 31, 2016

- Messages

- 1,878

I don't have a lot of VRAM headroom at 1080p with my 3070, but I like that it can run a lot of stuff.

I believe it was the 8800 series when they started to get funky about bandwidth.

Sir Beregond

Gawd

- Joined

- Oct 12, 2020

- Messages

- 943

The 200-series.

GTX 280 had a 512-bit bus and the GTX 260 and 275 had a 448-bit bus.

8800-series I believe was a combo of 256-bit and 384-bit cards depending on the model (GT, GTX, etc.)

jobert

[H]ard|Gawd

- Joined

- Dec 13, 2020

- Messages

- 1,575

The 8800 GTS had a 320-bit bus.

No such thing as too much VRAM. I've been gaming on PC for decades and I have never once had a problem with too much VRAM, and neither have you.

Modred189

Can't Read the OP

- Joined

- May 24, 2006

- Messages

- 16,318

And the 6500 XT appears to prove everyone's point in this thread.

Not enough vram. Too slow vram. Too little bandwidth.

Not enough vram. Too slow vram. Too little bandwidth.

I wish that I could forget Vista as well.

OKC Yeakey Trentadue

[H]ard|Gawd

- Joined

- Nov 24, 2021

- Messages

- 1,205

Prior to the GPU price hikes, the claim of "too much VRAM" did not literally mean too much VRAM; it simply meant that the excess made the card unnecessarily expensive. Historic examples are the 6 GB 7970 and the 12 GB Maxwell Titan.

Modred189

Can't Read the OP

- Joined

- May 24, 2006

- Messages

- 16,318

I might disagree with you on the 12 GB Titan. For gaming, I tend to agree, but for those who used it for double duty (gaming and productivity), there were uses where it came in handy.

Now those cases were almost as rare as the number of people who bought the card, so I'm not sure how much that means.

jobert

[H]ard|Gawd

- Joined

- Dec 13, 2020

- Messages

- 1,575

The POINT of more VRAM would be that the smaller amount was already hitting limitations, but that seems to go over many people's heads. For instance, if a 3070 Ti 16GB comes out, you will see non-stop idiotic comments saying you will never need 16GB on a card like that. These people can't comprehend that if 8GB is already starting to be a limitation, then going with 16GB is the only option. 12GB would be more suitable, and GDDR6 can technically offer 1.5GB chips, so you could end up with 12GB on a 256-bit bus, but AFAIK nobody actually made that size.
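The bus width largely dictates which capacities are practical: each GDDR6 chip normally occupies a 32-bit slice of the bus, so capacity is the number of slices times the chip density. This sketch ignores clamshell mode (two chips sharing one slice, which doubles the chip count) and uses 1, 1.5 and 2 GB densities; `capacity_gb` is a hypothetical helper, and whether 1.5 GB parts were ever shipped is as uncertain here as in the post.

```python
def capacity_gb(bus_width_bits: int, chip_density_gb: float) -> float:
    """VRAM capacity assuming one chip per 32-bit slice of the bus (no clamshell)."""
    chips = bus_width_bits // 32
    return chips * chip_density_gb


for bus in (192, 256):
    options = [capacity_gb(bus, density) for density in (1.0, 1.5, 2.0)]
    print(f"{bus}-bit: " + ", ".join(f"{c:g} GB" for c in options))
```

This prints 6, 9 and 12 GB for 192-bit and 8, 12 and 16 GB for 256-bit, which is why a 192-bit card like the 2060 tends to land on 6 or 12 GB and a 256-bit card like the 3070 on 8 or 16 GB unless 1.5 GB chips are used.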

Sir Beregond

Gawd

- Joined

- Oct 12, 2020

- Messages

- 943

Yeah, but at a certain point VRAM capacity is bottlenecked by the chip, the memory interface, or both. I can't imagine a 16GB 3060 Ti making any sense. Are people actually running out of VRAM and stuttering at 1080p/1440p? Can the card actually make full use of that?

Yeah but at a certain point the VRAM capacity is bottlenecked by either the chip or the memory interface itself or both. I can't imagine a 16GB 3060 Ti makes any sense. Are people actually running out of VRAM and stuttering at 1080p/1440p? Can the card actually make full use of that?

The only place I've seen issues on my 3060 Ti is when it has to deal with the extra overhead involved in VR.

Once you've got the Oculus and SteamVR frameworks sitting on top of each other, you've chopped a pretty big chunk out of your VRAM. And between the low complexity of VR scenes and the frame-doubling capability of the VR framework, you can get a card punching well above its weight on the resolution front. At that point, you're trying to cram the up-to-5K framebuffer for the Quest 2 into ~5GB of available VRAM, and 8GB starts feeling awfully confining.
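For a sense of scale, render targets can be estimated as pixels times bytes per pixel times the number of buffers (and MSAA samples). The 5120x2880 resolution below is only a stand-in for "up to 5K" (the real Quest 2 render resolution depends on the runtime's settings), 4 bytes per pixel assumes RGBA8 color and 32-bit depth, and the triple-buffered swapchain is an assumption; `render_target_mib` is a hypothetical helper.

```python
def render_target_mib(width: int, height: int, bytes_per_pixel: int = 4,
                      buffers: int = 1, msaa_samples: int = 1) -> float:
    """Memory for a set of identical render targets, in MiB."""
    return width * height * bytes_per_pixel * buffers * msaa_samples / 2**20


# Assumed "5K" stand-in: 5120x2880, RGBA8 color, triple-buffered swapchain.
color = render_target_mib(5120, 2880, buffers=3)
# Assumed single 32-bit depth buffer, no MSAA.
depth = render_target_mib(5120, 2880, bytes_per_pixel=4)
print(f"color {color:.2f} MiB + depth {depth:.2f} MiB = {color + depth:.2f} MiB")
```

Under these assumptions the buffers come to about 225 MiB; the figure scales linearly with pixel count and MSAA samples, and whatever remains of the budget is shared by the runtimes' own overhead and the game's assets.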

Yes. The latest trend in some games is to have zero load times; think God of War and Guardians of the Galaxy. The way they pull this off is to load up your VRAM, and even your system RAM, to stream in textures. The more VRAM you have, the longer you can go before new textures need to be streamed in.

16GB seems like a bit much, though. But it was only a year ago that everyone thought 8GB wouldn't be needed, yet I kept stumbling onto games where I would have been hurting for more with less than 8GB. So at this point I think 8GB is the minimum for mid-range gamers.
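The VRAM-as-streaming-cache idea can be sketched as a least-recently-used (LRU) cache: textures stay resident until the budget is full, then the oldest unused ones are evicted and must be streamed in again when revisited. Real engines stream individual mip levels and predict what comes next, so treat this as a simplified model; `count_stream_loads`, the texture names, the 512 MB sizes and the budgets are all hypothetical.

```python
from collections import OrderedDict


def count_stream_loads(requests: list[str], sizes_mb: dict[str, int], budget_mb: int) -> int:
    """Count texture loads needed when VRAM is managed as an LRU cache of a fixed size."""
    resident: OrderedDict[str, int] = OrderedDict()  # least recently used first
    used_mb = 0
    loads = 0
    for tex in requests:
        if tex in resident:
            resident.move_to_end(tex)  # hit: mark as most recently used
            continue
        loads += 1  # miss: the texture has to be streamed in
        size = sizes_mb[tex]
        # Evict least recently used textures until the new one fits (or nothing is left).
        while resident and used_mb + size > budget_mb:
            _, evicted_mb = resident.popitem(last=False)
            used_mb -= evicted_mb
        resident[tex] = size
        used_mb += size
    return loads


# Player walks area A -> B -> A -> C -> A; each area needs two 512 MB textures.
path = ["a1", "a2", "b1", "b2", "a1", "a2", "c1", "c2", "a1", "a2"]
sizes = {name: 512 for name in set(path)}
for budget in (1024, 2048):
    print(f"{budget} MB budget: {count_stream_loads(path, sizes, budget)} loads")
```

With a 1024 MB budget every step misses (10 loads); doubling the budget keeps area A resident and drops it to 6, the "longer before new textures are streamed in" effect described above.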


This. I had a GTX 780 with 3GB of RAM (I still have it and am getting ready to sell it). It is faster than an RX 570... except when RAM became an issue, which was everywhere. Having more than 3GB of RAM meant that I could literally play games on the RX 570 that the GTX 780 would struggle with. I would experience severe stuttering, even though the GPU on the 780 was technically faster.

havoc lingers

Limp Gawd

- Joined

- Nov 4, 2021

- Messages

- 129

Is the 2060 12GB enough for next-generation games?

One possible caveat: He did do the test using 32 GB of system ram. I would like to see the test done with 16 GB of ram as well. Reason being is that the 3 GB GTX 1060 using less system ram while the 6 GB GTX did not. Curious to see if something similar happens at a larger scale.

I highly doubt that the system RAM would have made a difference. I can't think of a single gaming situation where 16GB isn't sufficient right now. RAM is something you need ENOUGH of. Having more than you need just sitting there won't improve your framerate.

While everyone is arguing about VRAM amounts being good enough or not, I will say this. For those of us who have long upgrade cycles and don't upgrade our GPUs every two years, VRAM matters. I got more use out of my R9 280X than GTX 770 users got out of their cards because of the extra 1GB of VRAM. It remained playable for longer, and that mattered to me.

We all know that 8GB has been the standard in the mid-to-upper range for a long time, and some titles are revealing that it's getting long in the tooth. In that vein, I elected to sit out this upgrade cycle specifically because Nvidia launched their cards with insufficient VRAM, in my opinion; otherwise I would have upgraded on day 1. (I saw inventory at a local store and passed it by, and this was before the real insanity hit, so my only regret is that I probably could have made serious money reselling that card had I had a crystal ball.) It's not unusual for me to go 5-6 years between upgrades, so in my opinion, launching the 3080 with 10GB of VRAM was a mistake. It's fine to have that opinion when you have long upgrade cycles, even though some technogeeks will defend the honour of their preferred Fortune 500 corporation by insisting it's totally fine and no one should ever worry about it because there is no good reason to. Maybe there isn't for you, the person who upgrades every cycle, but it matters to me because I have long upgrade cycles, and even Steve said as much in his initial video.
