
Folding@Home bigadv EOL 3rd Anniversary Challenge: [H] vs TAAT

- Thread starter biodoc


I gave ya the like, but you're coming up on me fast. My best rigs are off FAH until the challenge for utility-bill reasons, unless things suddenly pick up. If things finally do, I'll be more of a presence on FAH and BOINC again. Thx for all the help.

Just let me know if there's anything else I can do to help, but otherwise, I'll keep folding as long as I can.

BWG

n00b

- Joined

- Apr 19, 2016

- Messages

- 44

I am going to reconfigure everything on my benches over my 4-day weekend to put things into the best slots for maximum PPD. A few things were moved around for an NVLink experiment that quickly faded. (I just wanted to beat Bastiaan_NL on Superposition easily, but it wouldn't work on 3xxx with the mod for SLI.)

One thing I remember from OCN days is how well versed [H] is in Linux. So, I set up Ubuntu 20.04 on everything I have (dual boot). I then enabled coolbits=28, and I'm able to control my fans/clock speeds on each GPU. What I cannot control through the NVIDIA X Server Settings UI is power limits (unless selecting maximum performance does that; doubt it). So, who all has controlled power limits in Ubuntu? If I use the terminal, I think I could possibly control the power limit with this command in bash?

Code:

nvidia-smi -i 1 -pl xxx

Replace xxx with the max watts. But is 1 the GPU number? If I have 6, do I need 6 entries (0, 1, 2, 3, 4, 5 replacing 1 in each line)?

But I'd like to figure this all out and do some test runs before the event starts. I hope this one is different for you guys, and that it remains interesting until the end. Also, thanks for the nomination, but I have literally done nothing to this point. Maybe an event organizer or my recruiter, firedfly, would be more qualified for the award.


Yes, that nvidia-smi command will adjust the power limit. If you have 6 cards, they will be numbered seemingly at random. You also need a flag I can't remember offhand to have the configuration applied at boot; otherwise it resets every reboot.
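Since the index order can look random, one option is to target each card by PCI bus ID, which the nvidia-smi `-i` option accepts alongside an index or UUID. Below is a minimal Python sketch, assuming nvidia-smi is on PATH. The helper names and the `watts_by_bus_id` mapping are hypothetical, and the sketch only builds the commands; it does not run them, since setting a limit needs root.

```python
import subprocess

# Field names as listed by `nvidia-smi --help-query-gpu`.
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,pci.bus_id,name,power.limit",
    "--format=csv,noheader,nounits",
]


def query_gpus():
    """Run the query on this machine and return the raw CSV text."""
    return subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout


def parse_gpus(csv_text):
    """Turn 'index, bus_id, name, limit' rows into dicts."""
    gpus = []
    for line in csv_text.strip().splitlines():
        index, bus_id, name, limit = (field.strip() for field in line.split(","))
        try:
            limit_w = float(limit)
        except ValueError:  # e.g. "[N/A]" when the card reports no limit
            limit_w = None
        gpus.append({"index": int(index), "bus_id": bus_id,
                     "name": name, "power_limit_w": limit_w})
    return gpus


def power_limit_commands(gpus, watts_by_bus_id):
    """Build one `nvidia-smi -i <bus id> -pl <watts>` command per listed GPU.

    Keying on the PCI bus ID ties each limit to a physical slot rather
    than to an index that may not match the slot order.
    """
    commands = []
    for gpu in gpus:
        watts = watts_by_bus_id.get(gpu["bus_id"])
        if watts is not None:
            commands.append(["nvidia-smi", "-i", gpu["bus_id"], "-pl", str(watts)])
    return commands
```

Usage: run `parse_gpus(query_gpus())` on the rig to see which bus ID sits in which slot. Then pass a mapping such as `{"00000000:01:00.0": 300}` to `power_limit_commands` and run each resulting command with sudo.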


BWG

n00b

- Joined

- Apr 19, 2016

- Messages

- 44

Did they ever build in GPU voltage control, that you know of?

Not that I know of, but I've only used it on a 1080 Ti and a 1660 Ti.

BWG, it has been a while since I set power limits in Linux, but I don't recall having to specify anything to make it apply to the 2 cards in my rigs. Might try it simply as: sudo nvidia-smi -pl xxx

Then you would also want to run: sudo nvidia-smi -pm 1 (makes it persist after reboot). You should be able to verify the clocks, but I forget how, lol.

Edit: I checked my notes, and this was exactly how I ran it before to make the power limit apply and persist on rigs with 2 GPUs. Nothing was required to specify the GPU number.

Edit 2: I think maybe... if you just run nvidia-smi -pl (no xxx) afterwards, it should simply tell you the current power level for each card... maybe.
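The two commands above can be wrapped in a small Python sketch so they are easy to rerun. Rerunning them after each boot, for example from a boot-time job, is an assumption here, since the thread disagrees about whether `-pm 1` carries the limit across a reboot. The function names are hypothetical, and `dry_run` defaults to True so nothing changes until you opt in.

```python
import subprocess


def all_gpu_commands(watts, persistence=True):
    """Commands from the post: with no `-i`, nvidia-smi applies to every GPU."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    commands = [["sudo", "nvidia-smi", "-pl", str(watts)]]
    if persistence:
        # Persistence mode, as suggested in the post. Whether this keeps
        # the limit across a reboot is disputed in the thread.
        commands.append(["sudo", "nvidia-smi", "-pm", "1"])
    return commands


def apply(commands, dry_run=True):
    """Print each command, and execute it only when dry_run is False."""
    for command in commands:
        print(" ".join(command))
        if not dry_run:
            subprocess.run(command, check=True)
```

For example, `apply(all_gpu_commands(250))` prints the two commands. `apply(all_gpu_commands(250), dry_run=False)` runs them, and nvidia-smi rejects a wattage outside the card's allowed range.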


That syntax isn't valid. Just running nvidia-smi will give the power limit as part of the output, though.
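Plain nvidia-smi shows the limit in its table. For a scriptable check that also covers the clocks mentioned earlier, `--query-gpu` can print just the needed fields. Below is a minimal sketch. The target wattage, the tolerance, and the function names are hypothetical.

```python
import subprocess

FIELDS = ["index", "power.limit", "power.draw", "clocks.sm"]


def read_status():
    """Query limit, current draw and SM clock for every GPU (needs the NVIDIA driver)."""
    command = [
        "nvidia-smi",
        "--query-gpu=" + ",".join(FIELDS),
        "--format=csv,noheader,nounits",
    ]
    return subprocess.run(command, capture_output=True, text=True, check=True).stdout


def mismatched_limits(csv_text, target_w, tolerance_w=1.0):
    """Return indices of GPUs whose reported power limit is not target_w (within tolerance)."""
    mismatched = []
    for line in csv_text.strip().splitlines():
        row = dict(zip(FIELDS, (value.strip() for value in line.split(","))))
        try:
            limit_w = float(row["power.limit"])
        except ValueError:  # "[N/A]" and similar are treated as a mismatch
            mismatched.append(int(row["index"]))
            continue
        if abs(limit_w - target_w) > tolerance_w:
            mismatched.append(int(row["index"]))
    return mismatched
```

After setting the limits, `mismatched_limits(read_status(), 250)` returns an empty list if every card reports a 250 W limit.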

BWG

n00b

- Joined

- Apr 19, 2016

- Messages

- 44

Yeah, it worked. Thanks, guys. I ordered a 1600W P+ to replace the 1300W G+ my two 3090s are in, so I have headroom to OC those more.

Have some monster water blocks on those?

BWG

n00b

- Joined

- Apr 19, 2016

- Messages

- 44


Nah, not sure if I will or not, quite honestly, but I did look at some. Micro Center had a few of the Bitspower ones in stock. I just got the 2nd one in December on a step-up from a 3070 Ti. Looks like USPS is bringing the PSU today. I wasn't able to do the reconfig over the long weekend because my whole house finally got bit by COVID-19. All is well, I think. Still recovering.

pututu

[H]ard DC'er of the Year 2021

- Joined

- Dec 27, 2015

- Messages

- 3,094

Time to run more FAH covid projects this coming Sunday

BWG

n00b

- Joined

- Apr 19, 2016

- Messages

- 44

Do you guys have a Discord official/unofficial? I'm only in IRC lol

I turned em on at stock clocks for a test run. http://bwg.42web.io/#/summary


pututu

[H]ard DC'er of the Year 2021

- Joined

- Dec 27, 2015

- Messages

- 3,094

Holy shit. That's a lot of GPUs... We might need you for the upcoming May BOINC Pentathlon challenge also.


OK, some folks here might be jealous of you for having so many cards.

BWG

n00b

- Joined

- Apr 19, 2016

- Messages

- 44

All I did was queue for EVGA cards 24 hours before release (Elite), and I also won a few in the Newegg Shuffle. I remember refreshing and trying to queue for my first 3080 Ti. It was an hour-long venture of 16 tabs in Edge and Chrome.

I'll be a competitor in that if I do it. Ehw or Ocn.


OK Horde, I've got the GTX 1050 & 1060 rockin' a few hours before midnight, so the WUs should drop early on the 23rd. My 3060 is, uh, busy. I'm 217 turns into a strat game and I just... can't... stop... It's only using 65% GPU, so I'll add some light folding until I can't stay awake, then I'll switch it to full. Got the workshop CPU on GROMACS too for a few bonus WUs. Let's go!

Apparently there are database issues at F@H, and they have been working on it since Friday. Hopefully they will get this sorted out sometime today. See StefanR5R's post in the AnandTech forum for links.

You guys know what's going on at F@H and EOC? Been 0 for a while now.

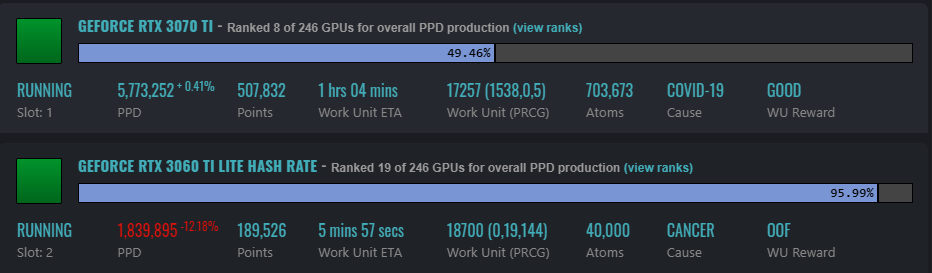

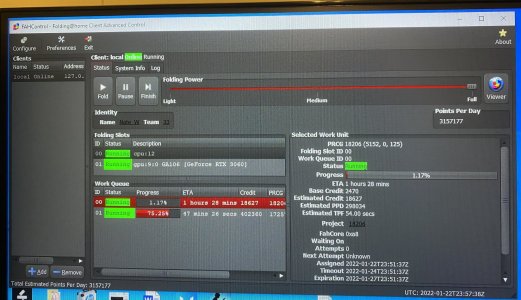

Finally paused the mining operation and got all the cards moved around. This is the best I can get right now, as I don't have the CPU horsepower to feed all these beastly cards!


pututu

[H]ard DC'er of the Year 2021

- Joined

- Dec 27, 2015

- Messages

- 3,094

Send some of the cards to me, then. I have Haswell Xeon and EPYC machines with a few unused PCIe lanes.

Dang, strike that. I only have a 650W PSU. At one point I mined with two VIIs on a 650W PSU for 3 months.


Ducrider748

Limp Gawd

- Joined

- Jul 25, 2011

- Messages

- 308

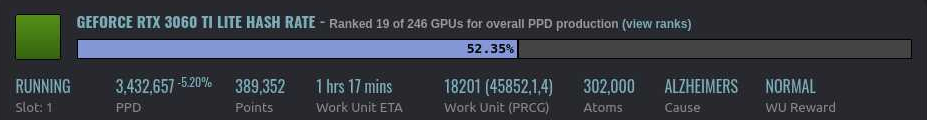

The PPD on those 3060 Tis is pretty low. You should be getting close to 3 million each. Are you running the current drivers?

pututu

[H]ard DC'er of the Year 2021

- Joined

- Dec 27, 2015

- Messages

- 3,094

He is running off an x1 lane. The rig is set up for mining, but I won't complain, as this is better than nothing, lol.

Older 472 drivers. CPU is pegged at 100% for those two cards. It's roughly 15 million more PPD, so don't complain! I halted mining to participate, had to reconfigure the cards, and had issues getting them all going again!

The x1 lane doesn't seem to affect their PPD very much. I just don't have enough CPU horsepower to feed all of them. The 2080 Super does 2 million or so on an x1 link. The 3070 does about the same on an x1 riser card. I was able to get 5 million PPD from a 3080 Ti on an x1 riser card when I did some testing before the race started.


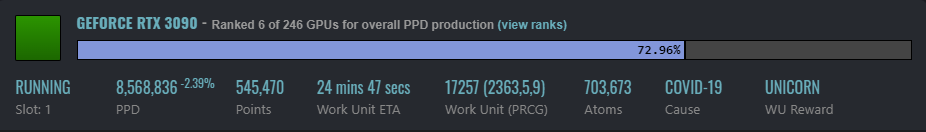

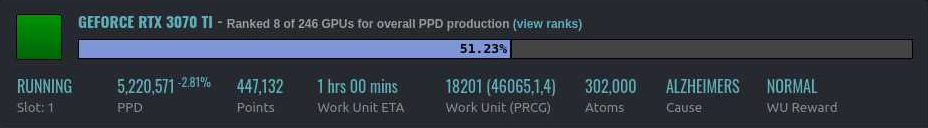

Just about to head to bed, but I wanted to get some of the heavy hitters crunching. My 3090 seems to have stabilized around 5.2 mil, and my 3070 Ti & 3060 Ti (both LHR) rig seems to be clocking in around 6 mil combined. Tomorrow I will finish the new rig, which will bring another 3060 Ti online, and on Tuesday a 3090 FE will join in. I wish I could put my two 2070 Supers to use, but they are on risers (and that rig has 5 GPUs with a lowly AMD 965 BE; not enough CPU power to push them!)

Is there any easy way to monitor all the ppd of my machines at once?


My server is running the older 472 drivers, as that's the current Win 2008 R2/Win 7 driver, and it has fairly up-to-date CUDA. I didn't notice an appreciable change with slightly newer CUDA on my Win 10 rigs, so it's staying back where it belongs. Older CUDA definitely showed a drop-off for folding, but YMMV. You should be good to go with that. If it ain't broke...

HFM.net
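HFM.net is the ready-made answer. For a quick scripted total instead, here is a rough sketch. It assumes FAHClient v7, whose command interface listens on TCP port 36330 and has a `ppd` command, and that each client accepts command connections from the machine running the script. The reply framing (a `PyON 1 ppd` line, then the value, then `---`) is an assumption about the format, so check it against your own client before relying on it. Host names are hypothetical.

```python
import socket

FAH_PORT = 36330  # FAHClient v7 command port (assumption: v7 client)


def parse_pyon_ppd(reply):
    """Pull the value out of a reply assumed to contain 'PyON 1 ppd', <value>, '---'."""
    lines = [line.strip() for line in reply.splitlines()]
    for i, line in enumerate(lines[:-1]):
        if line.startswith("PyON") and line.endswith(" ppd"):
            return float(lines[i + 1].strip('"'))
    raise ValueError("no ppd message in reply")


def query_ppd(host, port=FAH_PORT, timeout=5.0):
    """Send 'ppd' then 'exit' to one client and parse everything it sends back."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(b"ppd\nexit\n")
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # the client closed the session after 'exit'
                break
            chunks.append(data)
    return parse_pyon_ppd(b"".join(chunks).decode("utf-8", "replace"))


def total_ppd(hosts):
    """Sum PPD over several machines and list the ones that could not be read."""
    total, failed = 0.0, []
    for host in hosts:
        try:
            total += query_ppd(host)
        except (OSError, ValueError):  # unreachable, timed out, or unexpected reply
            failed.append(host)
    return total, failed
```

For example, `total_ppd(["rig1.local", "rig2.local"])` returns the combined estimate plus any hosts that did not answer.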


Remember that when you compare PPD, the size (atom count) of the WU can vary significantly. When they benchmark they try to strike a balance, but larger cards will see higher PPD on larger WUs. On smaller WUs, the delta between higher and lower end cards will shrink.
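Besides WU size, the other big lever in PPD comparisons is the Quick Return Bonus. Here is a minimal sketch, assuming the bonus formula Folding@home publishes (credit = base × max(1, √(k × deadline / elapsed))) and a WU of 100 frames. The base credit, k, and deadline are per-project values, and any numbers plugged in are hypothetical.

```python
import math

SECONDS_PER_DAY = 86400.0


def wu_points(base, k, deadline_days, elapsed_days):
    """Credit for one WU: base * max(1, sqrt(k * deadline / elapsed))."""
    bonus = max(1.0, math.sqrt(k * deadline_days / elapsed_days))
    return base * bonus


def estimated_ppd(base, k, deadline_days, tpf_seconds, frames=100):
    """PPD if the card keeps finishing WUs at this time per frame (TPF)."""
    elapsed_days = tpf_seconds * frames / SECONDS_PER_DAY
    return wu_points(base, k, deadline_days, elapsed_days) / elapsed_days
```

With the bonus in effect, PPD scales with elapsed time to the power of -1.5. Halving TPF therefore multiplies PPD by about 2.83 (2^1.5), which is one reason faster cards pull ahead so sharply.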

You should be able to run one of them and get about 2 million or so PPD. I have an Athlon 435 triple-core that is overwhelmed by two 3060 Tis, but it is good for 2+ million PPD.


I am going to get a massive point update when they do get it online, if all the points have been saved but just aren't updating the user point totals.

We will start the race when the F@H/EOC stats are back up. Since we passed the original start date and time, no more Team Anandtech members can sign up for the race.

BWG

n00b

- Joined

- Apr 19, 2016

- Messages

- 44

We're winning! Woooohooo!

Ducrider748

Limp Gawd

- Joined

- Jul 25, 2011

- Messages

- 308

Yes, we all know the stats are down! (01.23.22, 9:07am CST)

It's not on my end; the stats feed hasn't updated due to an upstream issue with the F@H servers. According to a Twitter post from yesterday (@foldingathome):

So we all just have to be patient and let them fix the problem.

pututu

[H]ard DC'er of the Year 2021

- Joined

- Dec 27, 2015

- Messages

- 3,094

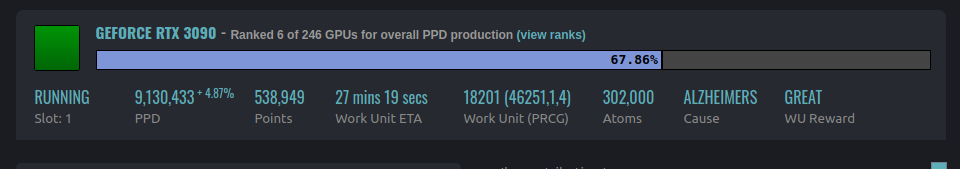

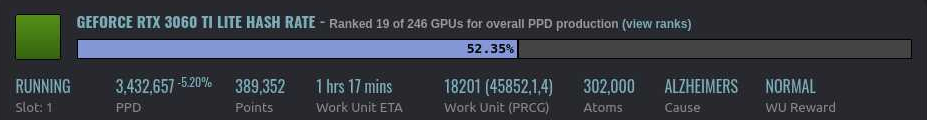

Just to keep the challenge entertaining, let's post the PPD of some WUs that have completed at least 50% (screenshots preferred). It's best to install the FAH web client that works in Chrome. Include OS | power settings as a minimum, as these two are known to significantly affect PPD. Assuming you have one full CPU core for each task, there shouldn't be any significant CPU bottleneck; otherwise, it might be useful to add a note. If you are not running half to full PCIe lanes, please indicate that too.

TAAT members, please feel free to post it here.

Here is mine to get started.

Ubuntu 20.04 | 205W

Ubuntu 20.04 | 155W


Fardringle

n00b

- Joined

- Jan 9, 2021

- Messages

- 2

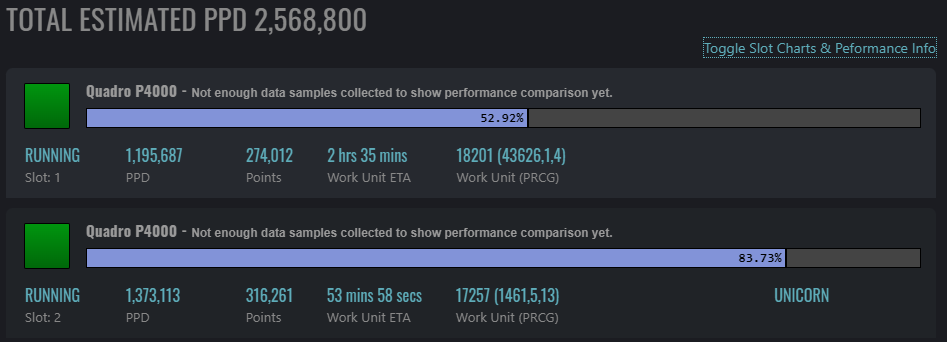

Not going for any records here. Just some personal amusement from having four-year-old GPUs that don't exist in the PPD rank database yet (plus a UNICORN).

Running Windows 10 with no power/curve adjustments because Quadro cards don't have those options. Using about 105W each according to GPU-Z.
