Nenu

[H]ardened

- Joined

- Apr 28, 2007

- Messages

- 20,315

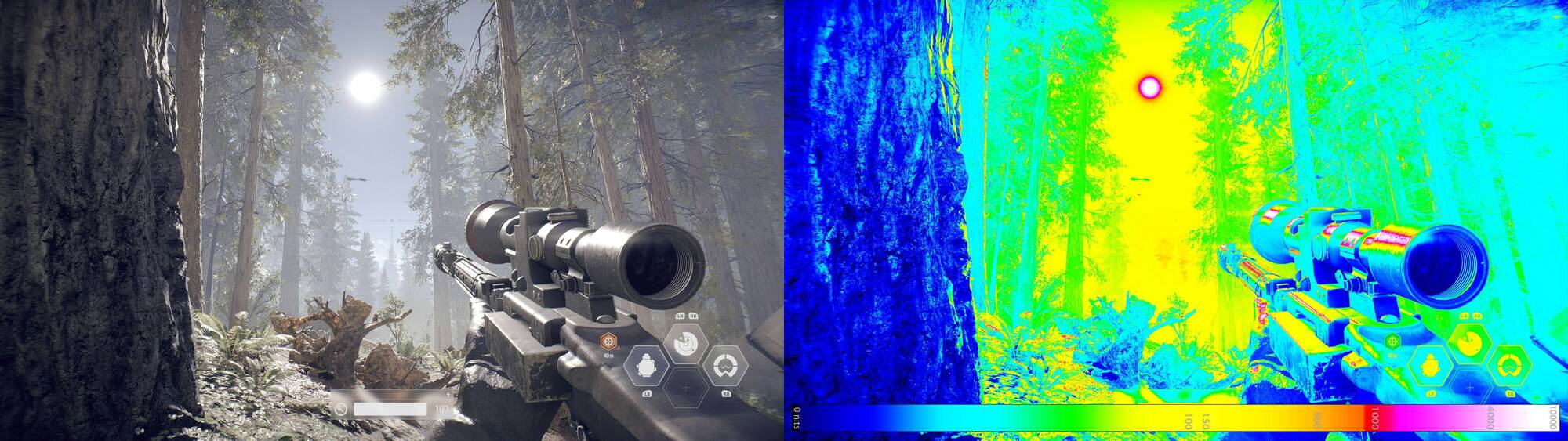

Shadow of the Tomb Raider. OK, wanna give me a game that supposedly isn't too bright with HDR then? And who said I am blaming the display? I am saying that in general I don't see the need for 10,000-nit displays. Nobody said anything about blaming, I just don't think it's necessary.

HDR isn't supposed to make the whole frame much brighter all the time, only the few things in the frame that should be bright.

I didn't say you were blaming that one display, I said you are blaming all displays that can go brighter.

After all, you asked what the point of brighter HDR is.

I blamed the game and demonstrated you don't understand how it should work.

(I made an assumption you must have played other HDR games without complaint)

You expect everything to work 100% and blew up because perhaps one game doesn't.

The problem may even be that you haven't calibrated that display and it is too bright.

edit

Corrected Rise of the Tomb Raider to Shadow of the Tomb Raider.

RoTT doesn't use HDR.

