Venturi


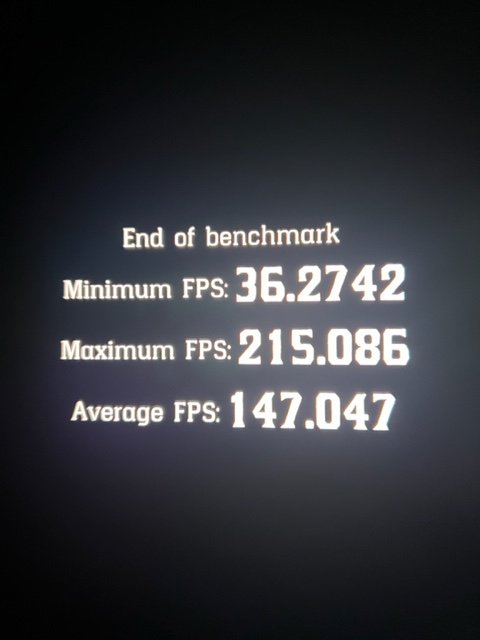

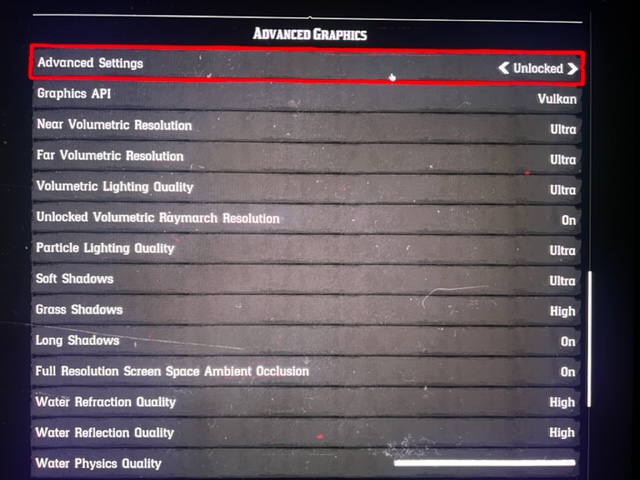

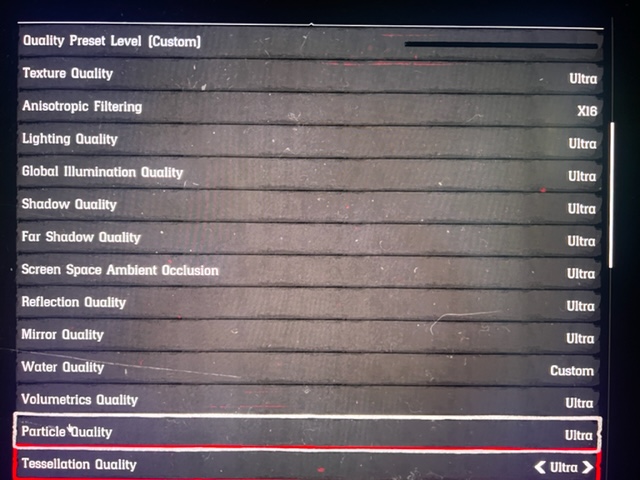

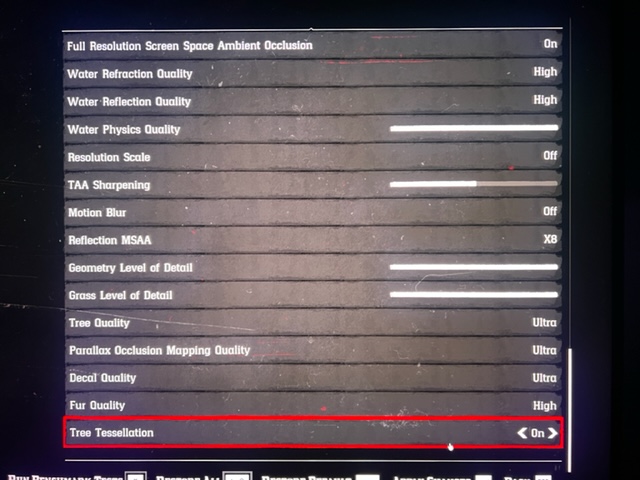

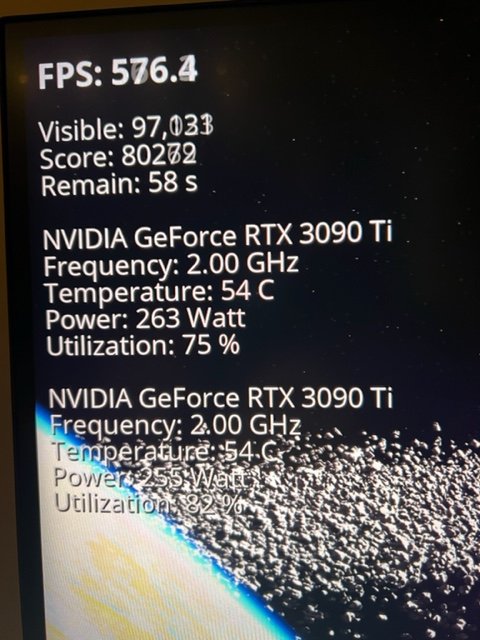

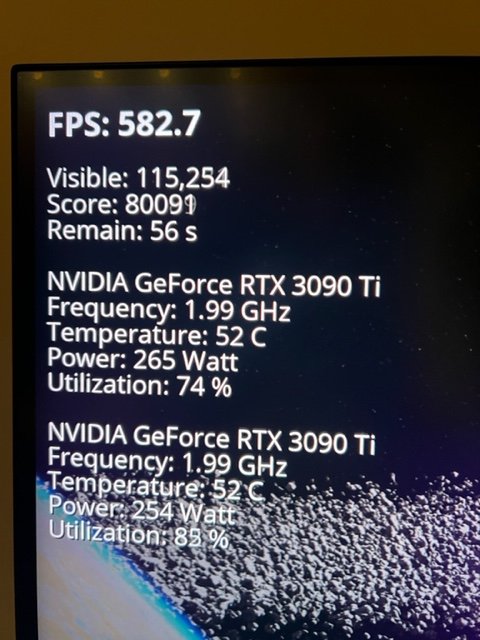

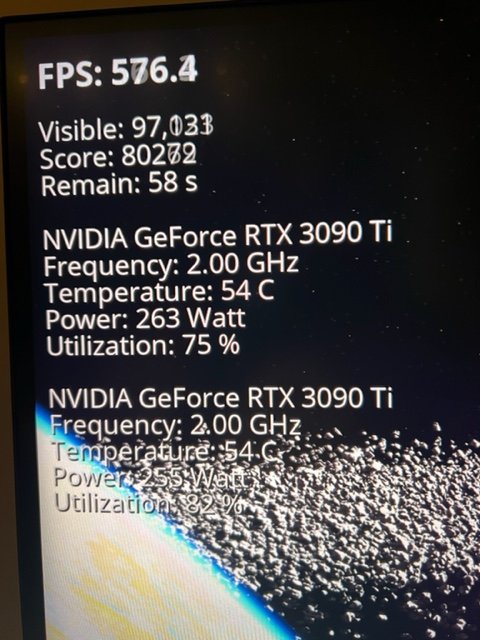

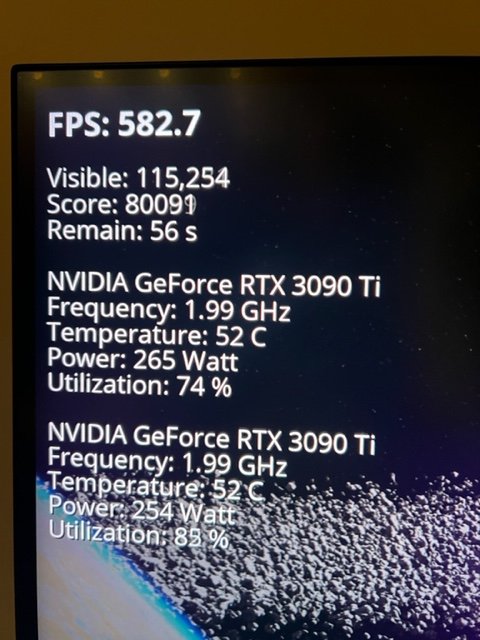

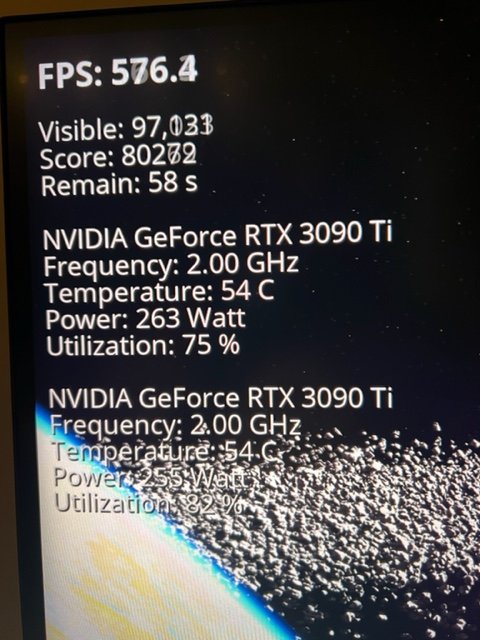

Well, here 'tis in its first few passes, before any optimizations, in 4K.

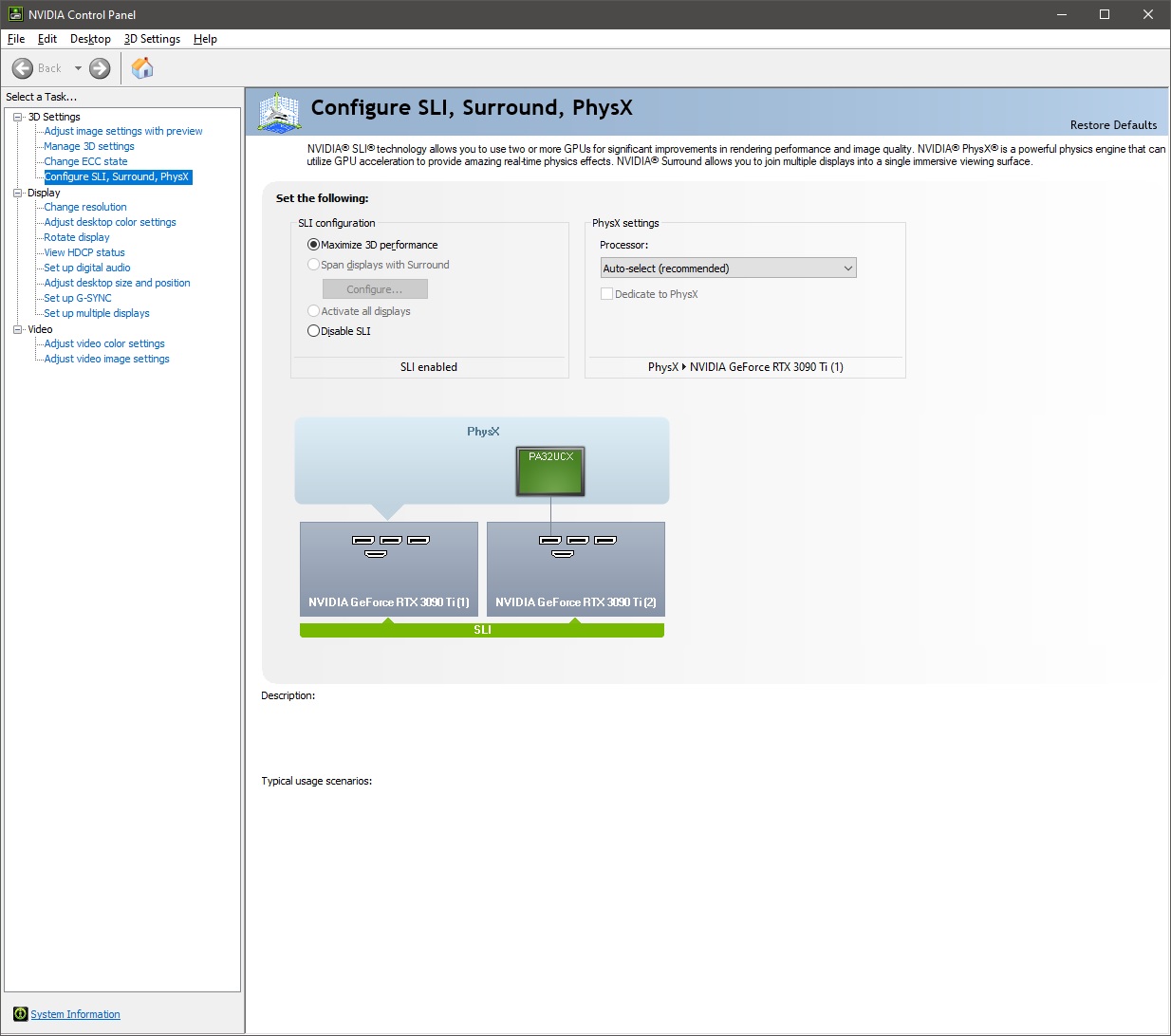

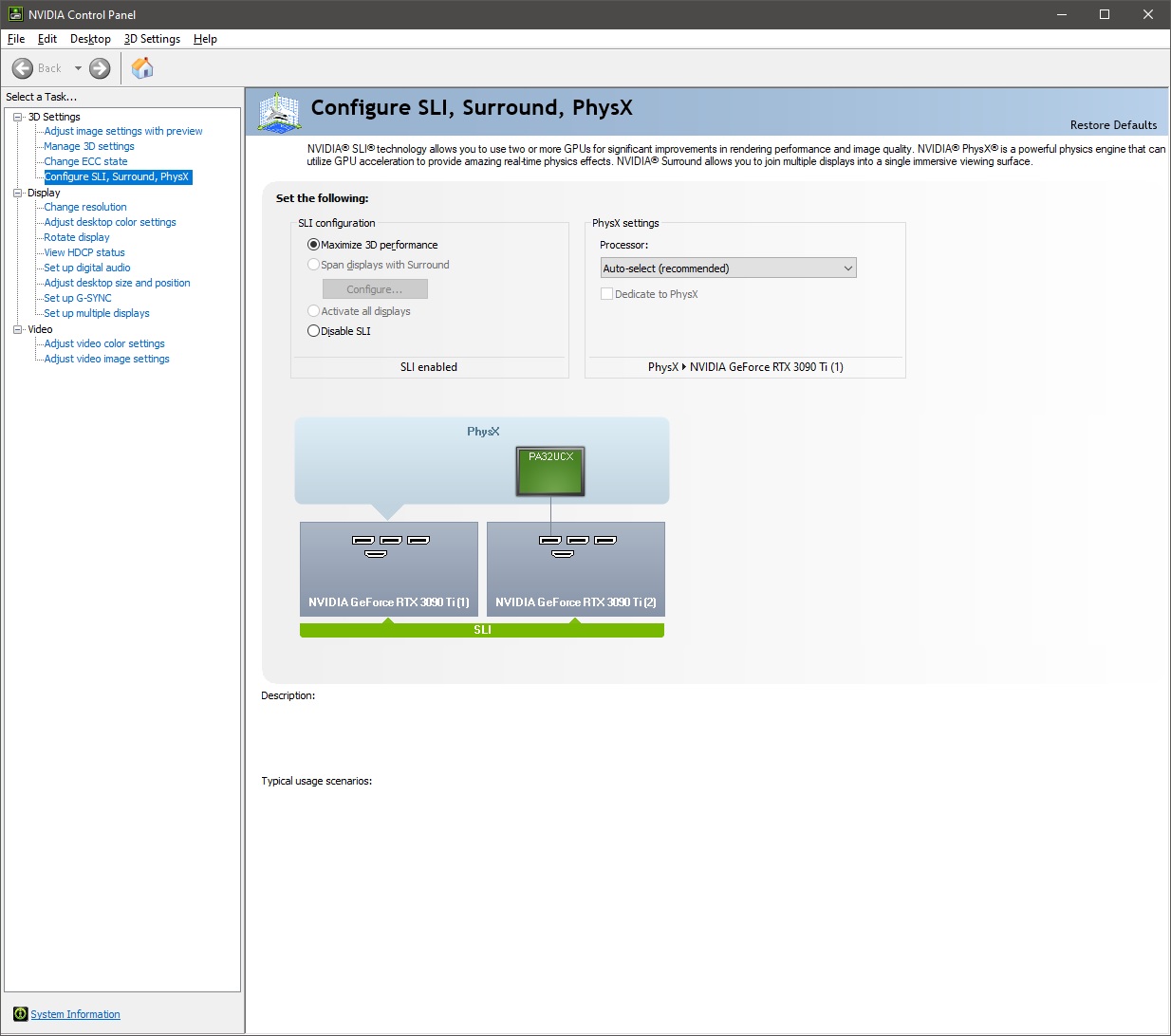

Dual 3090 Ti FE, NVLink

So here are some early benchmarks, temps, power draw, etc., without optimizations. See the system spec for the PC configuration. Yes... it's an SFF case.

System spec:

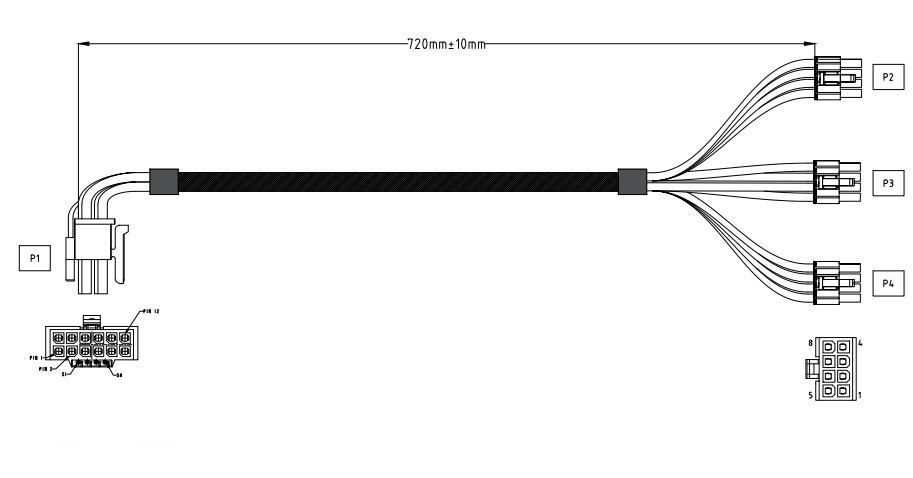

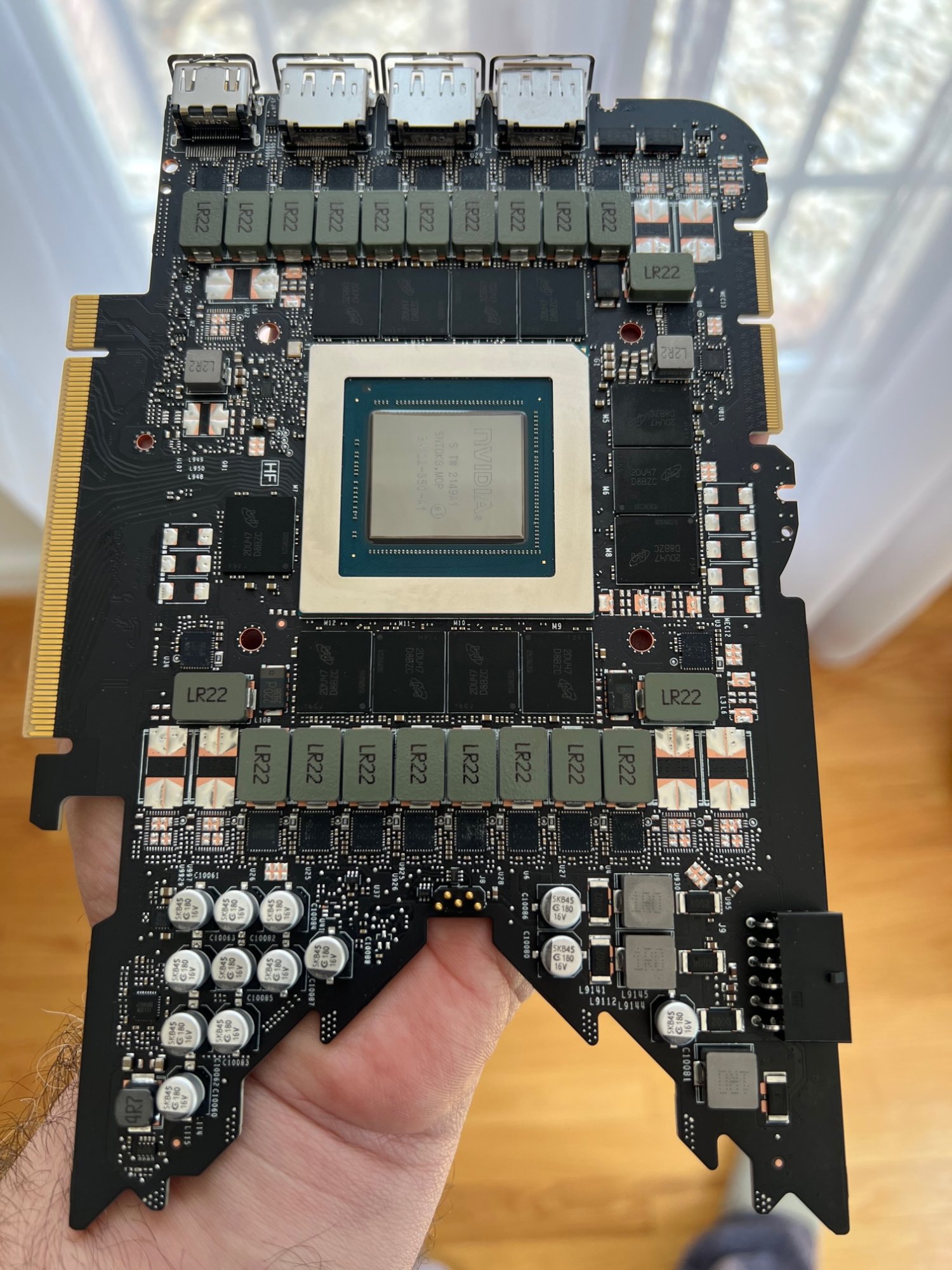

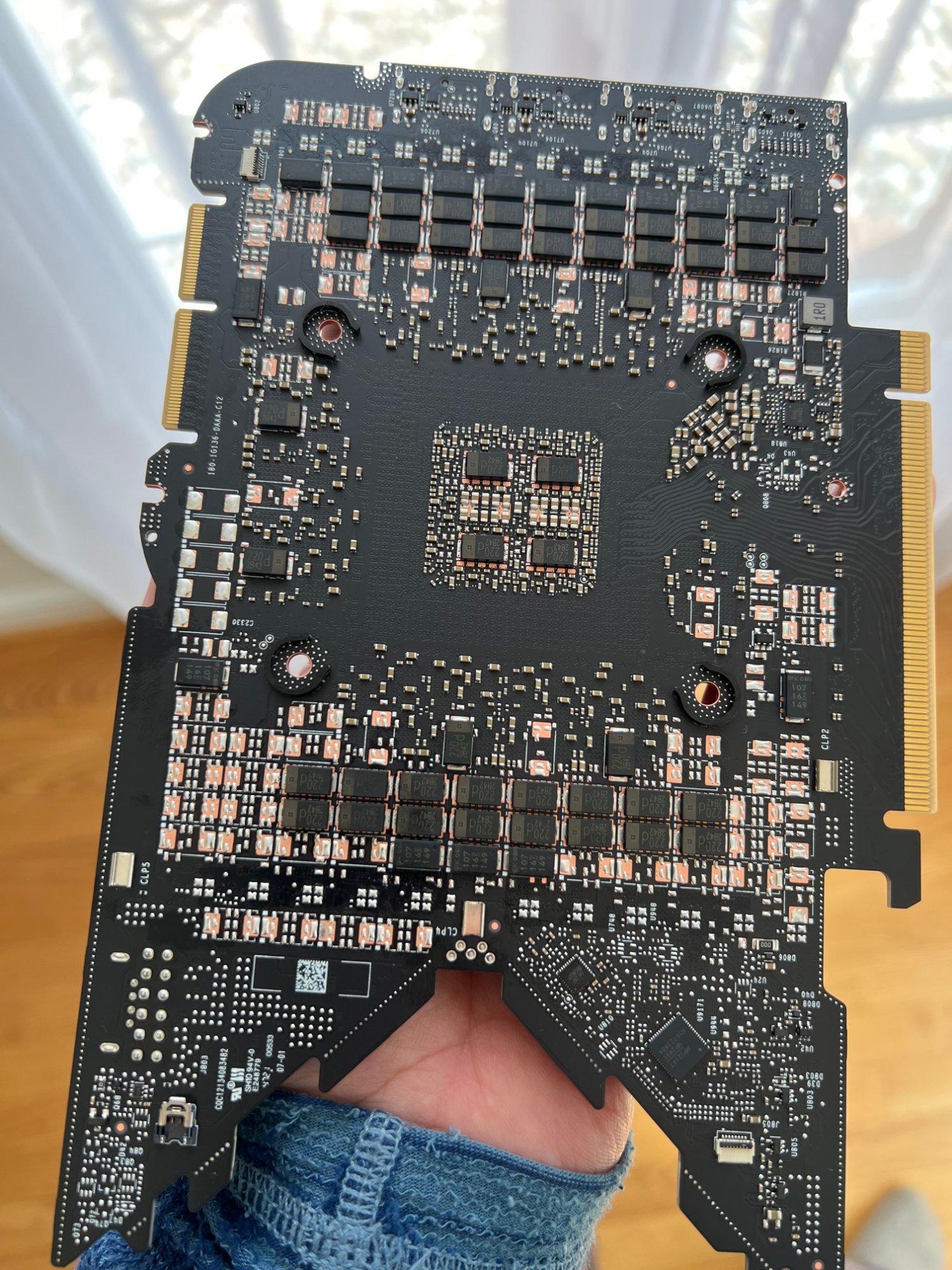

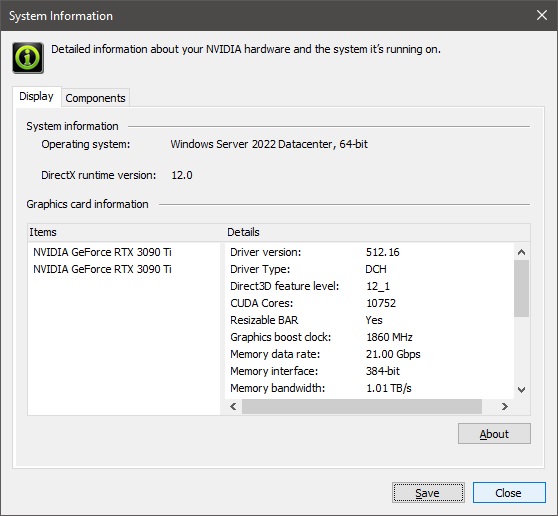

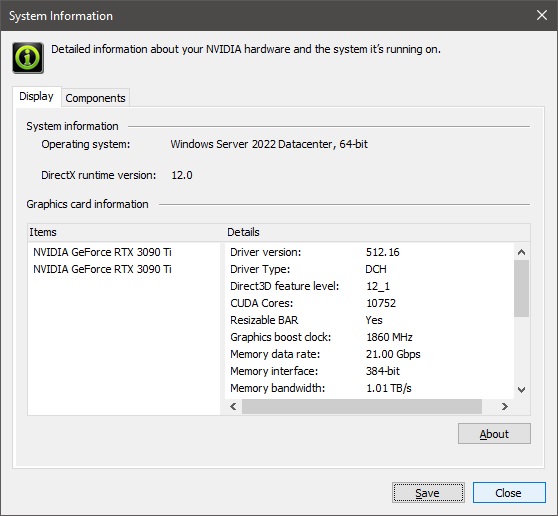

2x RTX 3090 Ti Founders Edition & SLI/NVLink bridge

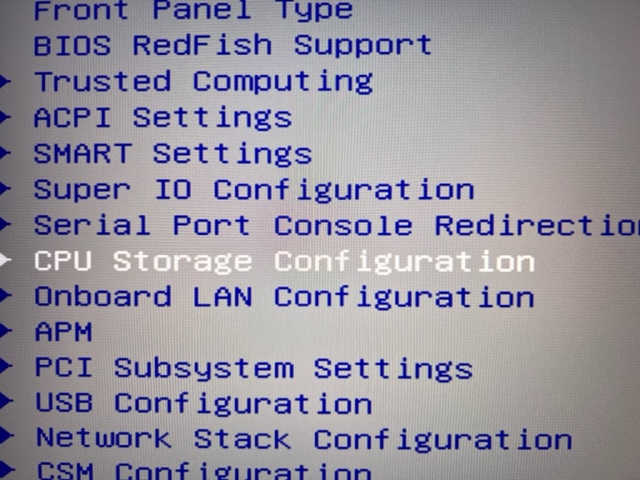

2x Xeon Platinum 8280L (56 cores/112 threads total), Asus C621 Sage dual-socket board, BIOS 6605

1.5 TB RAM (DDR4 ECC LRDIMMs)

1600W silent digital power supply

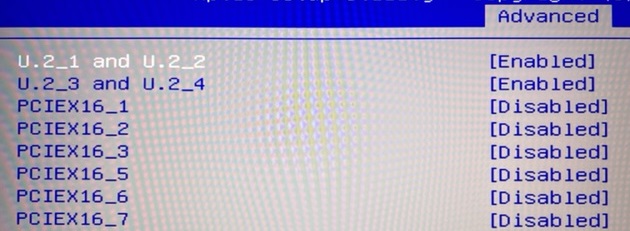

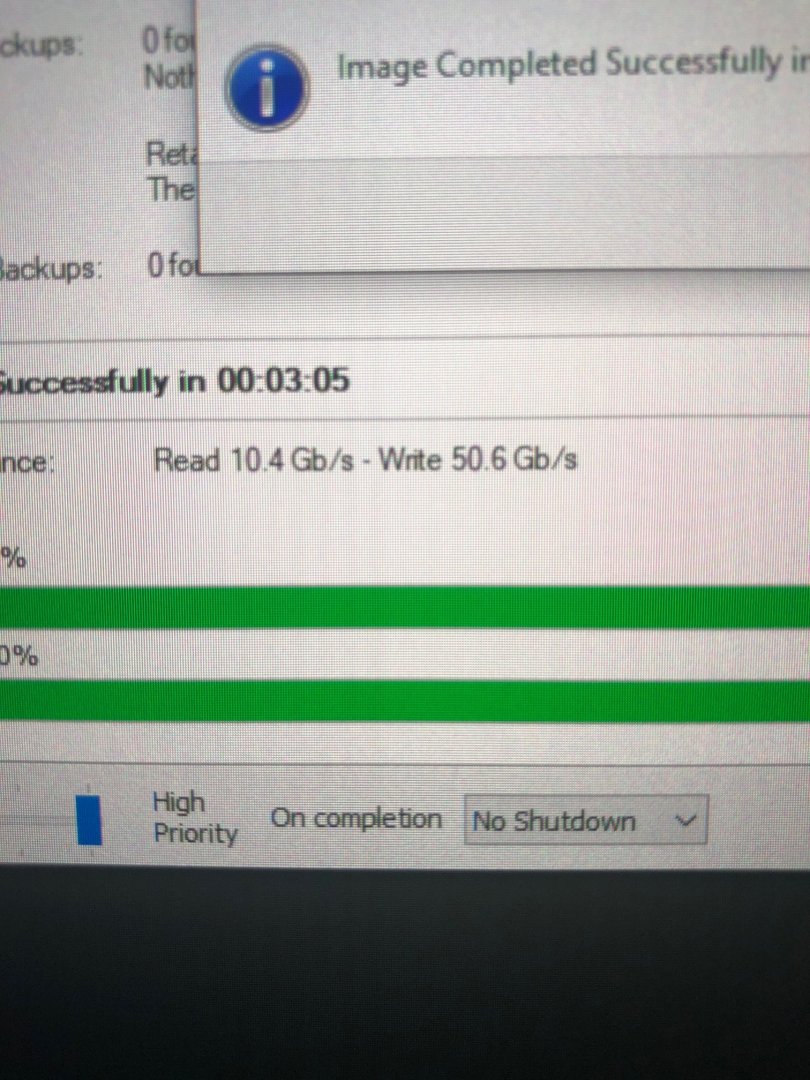

(Data drive) 4x Micron 9300 Max in VROC RAID 0 (51.2 TB volume)

(OS drive) Sabrent Rocket 4 Plus (8 TB)

Asus PA32UCG-K monitor

TT SFF case, MS Data Center 2022 (customized) & Ubuntu (customized)

This is a 3-minute test; I took measurements two-thirds of the way through.

