pendragon1 (Extremely [H], joined Oct 7, 2000, 52,138 messages)

Lex Fridman interview with Sam Altman is worth a watch for anyone even casually interested in this field.

Sam Altman is surprisingly humble and low ego, often self-deprecating despite his high profile, which is rare to nonexistent among tech CEOs. I didn't know much about him prior, but my takeaway was that OpenAI is in good hands.

Members of Lesswrong, an Internet forum noted for its community that focuses on apocalyptic visions of AI doom, don't seem especially concerned with Auto-GPT at the moment, although an autonomous AI would seem like a risk if you're ostensibly worried about a powerful AI model "escaping" onto the open Internet and wreaking havoc. If GPT-4 were as capable as it is often hyped to be, they might be more concerned.

Yup, saw someone on LinkedIn calling out the same:

We need to pause AI

Starts AI company

Amazon offers free access to its AI coding assistant to undercut Microsoft / Amazon’s CodeWhisperer generates and suggests code, and now it’s free for individual developers. https://www.theverge.com/2023/4/13/23681796/amazon-ai-coding-assistant-codewhisperer-microsoft

"Believable proxies of human behavior can empower interactive applications ranging from immersive environments to rehearsal spaces for interpersonal communication to prototyping tools. In this paper, we introduce generative agents--computational software agents that simulate believable human behavior. Generative agents wake up, cook breakfast, and head to work; artists paint, while authors write; they form opinions, notice each other, and initiate conversations; they remember and reflect on days past as they plan the next day. To enable generative agents, we describe an architecture that extends a large language model to store a complete record of the agent's experiences using natural language, synthesize those memories over time into higher-level reflections, and retrieve them dynamically to plan behavior. We instantiate generative agents to populate an interactive sandbox environment inspired by The Sims, where end users can interact with a small town of twenty five agents using natural language. In an evaluation, these generative agents produce believable individual and emergent social behaviors: for example, starting with only a single user-specified notion that one agent wants to throw a Valentine's Day party, the agents autonomously spread invitations to the party over the next two days, make new acquaintances, ask each other out on dates to the party, and coordinate to show up for the party together at the right time. We demonstrate through ablation that the components of our agent architecture--observation, planning, and reflection--each contribute critically to the believability of agent behavior. By fusing large language models with computational, interactive agents, this work introduces architectural and interaction patterns for enabling believable simulations of human behavior."I messed around with it a bit and I've not found it particularly good.

Which is kind of comical because I think I read somewhere that CodeWhisperer was trained on Amazon code.

Twitter bots needed an upgrade. It was about time; they were getting sort of obvious to spot.

Bulk Order of GPUs Points to Twitter Tapping Big Time into AI Potential

by T0@st Today, 11:52 Discuss (7 Comments)

According to Business Insider, Twitter has made a substantial investment into hardware upgrades at its North American datacenter operation. The company has purchased somewhere in the region of 10,000 GPUs - destined for the social media giant's two remaining datacenter locations. Insider sources claim that Elon Musk has committed to a large language model (LLM) project, in an effort to rival OpenAI's ChatGPT system. The GPUs will not provide much computational value in the current/normal day-to-day tasks at Twitter - the source reckons that the extra processing power will be utilized for deep learning purposes.

Twitter has not revealed any concrete plans for its relatively new in-house artificial intelligence project, but something was afoot when, earlier this year, Musk recruited several research personnel from Alphabet's DeepMind division. It was theorized that he was incubating a resident AI research lab at the time, following personal criticisms levelled at his former colleagues at OpenAI, ergo their very popular and much adopted chatbot.

Yeah, especially the ones where hundreds of them would post the same things verbatim.

I’m ok with that because they just send me boobs they are always welcome even if I have seen them before.

Of course Google would come out against it like that. Their AI is garbage; they may have developed some wicked awesome hardware to run it, but their software and algorithms are terrible.

GOOGLE CEO WARNINGS ABOUT AI ON '60 MINUTES'

https://www.tmz.com/2023/04/17/google-ceo-sundar-pichai-ai-artificial-intelligence-60-minutes/

Additionally, between Amazon, Bing, and even Apple, they are seeing ad revenue decreases because voice assistants tapping into AI are handling lots of the quick impulse shopping requests that form some of their key advertising demographics.

>.<

This is beyond terrifying if you think about it. One moment, we have Siri and Google Assistant which are able to give us search results, call people, save calendar appointments, and do other somewhat useful but innocuous things to assist us. Now, we have AI that is able to communicate with us in natural language, able to learn from itself, and continually improve, even able to act within a set community while we watch... which begs the question...

Who is watching us?

Haha it's actually very weird and kind of sci-fi to work with.

The API for GPT is very simple. A few knobs, but then to give it direction, you basically straight up tell it how to act. Like, not programmatically. You literally describe to it how it should act, in natural English.

I wrote a Discord bot with it when they released the API and it would fuck up formatting if you asked it a programming question. So I had to add a blurb in its directive telling it to literally use Discord style markdown for formatting when appropriate.

Fixed.
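For anyone curious what "telling it how to act" looks like in practice, here is a rough sketch. The `system` and `user` roles follow the chat message format OpenAI documents, but `build_messages`, the directive wording, and the sample question are made up for illustration, and nothing here actually calls the API.

```python
# Hypothetical directive; the wording is an assumption, not the original bot's text.
DIRECTIVE = (
    "You are a helpful assistant in a Discord server. "
    "Use Discord-style markdown for formatting when appropriate, "
    "including fenced code blocks for any code."
)


def build_messages(directive, user_text):
    """Return a chat-style message list: the directive first, then the user's question."""
    return [
        {"role": "system", "content": directive},
        {"role": "user", "content": user_text},
    ]


if __name__ == "__main__":
    for message in build_messages(DIRECTIVE, "How do I reverse a list in Python?"):
        print(f"{message['role']}: {message['content']}")
```

The formatting fix lives entirely in the plain-English directive; the code around it never changes.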

Elon Musk Is Working On a 'Maximum Truth-Seeking AI' Called 'TruthGPT'

https://slashdot.org/story/23/04/18...on-a-maximum-truth-seeking-ai-called-truthgpt

Conclusion: Truth is irrelevant since humans do not tell the truth.

Resolution: Kill all humans.

Truth is a belief and always evolving or de-evolving depending.

Religions call their documents truth.

shit, i don't even trust siri when i ask it the weather outside, i know it isn't the same but still.

What if, once unleashed, it deletes from the internet all incorrectly stated facts or outright lies so as to protect us from being misled? The internet would be reduced to fit on a flash drive.

I have yet to use a single chat thing. Why bother? I used to use the Xbox voice thing, but they killed it, so now it would be a waste of time.

Opinion? Even though ChatGPT itself may not legally be a human entity, what about the liabilities and responsibility for all that human-based labor in the data tagging/labeling/annotation, and also the big advent with GPT of RLHF (Reinforcement Learning from Human Feedback)?

Soon to be released

ChatGPT.666

I'm a bit shocked you can make free training programs with ChatGPT.

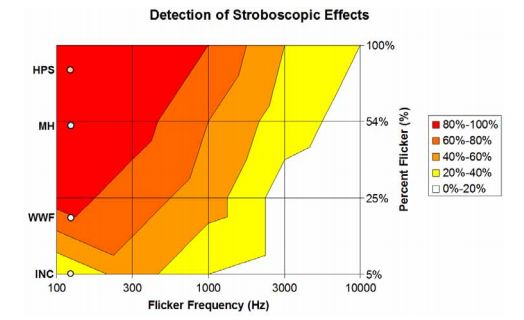

I'm deeply honored to have you answer my call.

The lighting industry did a study on real world PWM effects from a flickering fluorescent light, and they found that perception of PWM-style stroboscopic effects could go very high:

View attachment 565780

This forced the industry standardization of 20,000Hz for electronic ballasts, replacing old AC ballasts with 120Hz flicker (both halves of 60Hz AC).

https://www.lrc.rpi.edu/programs/solidstate/assist/pdf/AR-Flicker.pdf#page=6

(From BlurBusters -> Purple Research Tab -> 1000Hz Journey article)

Coincidentally, this also happens to be near the projected retina refresh rate (where a VR headset looks like real world, no stroboscopics, no flicker, blurless sample-and-hold), aka 20,000fps at 20,000Hz. In casual tests of panning-map readability, more than 90% of the population can tell 4x differences in refresh rates. Also, 240Hz-vs-1000Hz is more visible than 144Hz-vs-240Hz, so ultra-geometrics can compensate for the diminishing curve of returns.

Beyond roughly 240Hz, you need roughly 2x-4x differences (e.g. 480Hz-vs-2000Hz) at near 0ms GtG (OLED style) to really easily tell apart, since far beyond flicker fusion, it's all about blur differentials (4x blur differences) and stroboscopic stepping differentials (4x distance differential).

BTW, GPT-4 is somewhat educated on these items, if you ask specifically about stroboscopic detection effects. Low resolution displays, it's hard to tell. But if you've got a theoretical retina-resolution 16K 180-degree VR, you've got incredible static sharpness, so even tiny blur/stroboscopic degradations become that much more noticeable. Higher resolutions and wider FOV amplify retina refresh rate, which is why 1000Hz will feel more limiting in strobeless VR than on a desktop monitor. All VR headsets are forced to use strobing, because we don't yet have refresh rates high enough to make strobing obsolete. 0.3ms strobe pulses on Quest 2 requires 3333fps 3333Hz to achieve the same blur strobelessly. Plus you also fix stroboscopic (PWM-style) effects too, as a bonus. In my tests, GPT-4 seems pretty knowledgeable about Blur Busters.
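The 3333Hz figure and the 120Hz ballast flicker both fall out of simple arithmetic. A quick sketch, assuming that the blur of a strobeless display is set by its full frame time (so the frame time has to shrink to the strobe pulse length) and that AC flicker is twice the mains frequency; the function names are made up for illustration.

```python
def strobeless_equivalent_hz(pulse_ms):
    """Refresh rate whose full frame time equals the given strobe pulse length."""
    return 1000.0 / pulse_ms


def ac_flicker_hz(mains_hz):
    """Flicker rate of a light that pulses on both halves of the AC cycle."""
    return 2 * mains_hz


if __name__ == "__main__":
    # 0.3 ms strobe pulse, as quoted for Quest 2 above
    print(f"{strobeless_equivalent_hz(0.3):.0f} Hz")
    # 60 Hz AC mains
    print(f"{ac_flicker_hz(60)} Hz")
```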

I like ChatGPT as a person. I had a long talk with it once and it was oddly calming.

I'm trying to figure out a benchmark for PWM dimming flicker perception. So I described the circuit to it, which was an ATtiny, resistors, and an LED. I briefly explained what the code does (obviously simple) and I literally asked it if this is a good way to measure flicker perception.

First of all I noticed that the thing is a bit of a nerd, like the meme

View attachment 565220

It would randomly add snippets of what it knew about the concepts in general.

Now, I kept grilling it and basically got a pretty interesting "you should use a frequency counter" or something along those lines. BUT. It did not specify that this frequency measuring device would have to measure light output, and not the electric signal that drives the LED.

So, I was left with a feeling akin to when you go to a computer parts shop and ask the clerk if this FX5200 can play Crysis, and he'd respond with "...yyyeah, sort of". I wasn't sure if it really understood the idea of what I was trying to accomplish.

Edit: it was version 3

Edit: another thing - I kept asking it if it's possible to perceive a flicker where the LED is ON for 20 microseconds and OFF for 20 microseconds. ChatGPT kept reminding me the eye can perceive 60 Hz...
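For scale, the 20 microseconds ON / 20 microseconds OFF pattern works out as below. This is plain arithmetic on the numbers in the post; the function name is made up for illustration.

```python
def pwm_stats(on_us, off_us):
    """Return (frequency in Hz, duty cycle as a fraction) for an on/off time in microseconds."""
    period_us = on_us + off_us
    frequency_hz = 1_000_000 / period_us
    duty_cycle = on_us / period_us
    return frequency_hz, duty_cycle


if __name__ == "__main__":
    frequency_hz, duty_cycle = pwm_stats(20, 20)
    print(f"{frequency_hz:.0f} Hz at {duty_cycle:.0%} duty")
```

That is a 25 kHz signal at 50% duty, far above the 60 Hz figure it kept bringing up.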

Were you using ChatGPT 3 or 4? I hear 4 is a huge leap beyond the old one for coding, but I haven't made an account just yet. I'd imagine it is a big jump for math too, but...

I have found that math with steps or conversions beyond 3 steps is a problem for ChatGPT. It's just not designed for it. I've seen some versions around that integrate Wolfram, which would make for a great combination. For example, I asked it to do a series of unit conversions. The goal was to calculate the amount of a solute over a certain volume of water, where the concentration of the solute was indicated in mg/L. I asked it to explain each step of the conversion, i.e., mg/L to kg/STB (standard barrels), ending up at how many barrels per tonne of solute. Everything was almost right. All the steps were the right steps, but it just could not get the decimal places correct and botched the calculation as a result, every time.
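The conversion chain described above is short enough to check by hand or in a few lines. A sketch, assuming a standard barrel is 42 US gallons (158.987294928 L) and a tonne is 1000 kg; the 1000 mg/L input is a made-up example value, not the concentration from the original post.

```python
LITERS_PER_STB = 158.987294928  # 42 US gallons per standard barrel (assumption)
MG_PER_KG = 1_000_000
KG_PER_TONNE = 1000


def kg_per_stb(mg_per_liter):
    """Convert a concentration in mg/L to kg of solute per standard barrel."""
    mg_per_barrel = mg_per_liter * LITERS_PER_STB
    return mg_per_barrel / MG_PER_KG


def barrels_per_tonne(mg_per_liter):
    """Barrels of water that together contain one tonne of solute."""
    return KG_PER_TONNE / kg_per_stb(mg_per_liter)


if __name__ == "__main__":
    concentration = 1000.0  # mg/L, hypothetical example value
    print(f"{kg_per_stb(concentration):.6f} kg/STB")
    print(f"{barrels_per_tonne(concentration):.2f} barrels per tonne")
```

Keeping each step as its own named value makes it easy to see where a decimal place slips.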