Asus P5E-VM HDMI: best matx ever?

- Thread starter pvhk

- Start date

yes this graphic card really rocks!!!

http://i209.photobucket.com/albums/bb261/pvhk/700.jpg

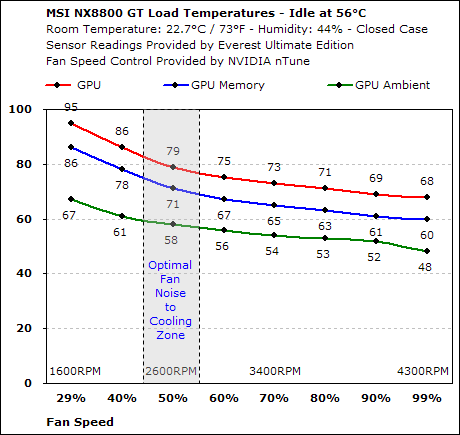

There's a lot of discussion about the loudness of the 8800GT under load, especially when playing the Crysis demo. What's your opinion on the noise of the 8800GT under load, pvhk? Supposedly it gets pretty loud once the fan goes over 45% speed. I read that people have been fixing the speed at just under 45% for heavy 3D gameplay.

The fan speed is @29% by default!

@100% it is very loud!

But it is possible via RivaTuner or other software to set the fan speed to 40%, the best compromise between noise and cooling.

Does anybody have any idea how the PCIe lanes are distributed? I mean is the main PCIe x16 slot full speed even if integrated graphics is used (with one or two displays, even) and does the gigabit ethernet chip use PCIe or PCI (not good for speed). The manual didn't mention this, unlike the excellent Gigabyte manuals I skimmed through while checking out the GA-G33M variants (-S2H was a letdown, it has integrated DVI but the main PCIe is only x4 and ethernet was on PCI).

According to the board's manual, there are three PCI-E slots (1@16x, 2@1x), the PEG slot being full-speed. I don't think you'll have any problems with that one.

There is one thing, though... The Intel IGP doesn't usually work with external GPUs. As soon as a PCI-E GPU is put in the PEG slot, the IGP disables itself. On the GA-G33M-DS2R, the manual clearly states that using ANY GPU on ANY PCI-E slot will result in the IGP being disabled... Not so on the X1250 IGP, which supports "Surround View" with ATI cards.

If somehow you found a way to have both the PCI-E and the IGP working on Intel chipsets, that may well be the cause of the PEG slot speed reduction, since it's not a supported feature, AFAIK.

As for the LAN chip, it is PCI-E-based, again according to the manual. I do, however, have to say something about PCI-based NICs. In 10/100 LAN environments, there is no performance loss between PCI and PCI-E; the bandwidth is just not a factor. In Gigabit environments, although the latest PCI-E NICs can go as high as 900Mbps throughput (600~700Mbps for PCI-based ones), you do have to consider that packet handling at Gigabit speeds takes a HUGE amount of CPU power. And HDDs usually top out at around 30~40MBps sustained (usually even less, because of fragmentation and whatever), so you'll usually stay well below 500Mbps, which most PCI Gigabit NICs can handle...

Also, do keep in mind that the PCI bus is becoming increasingly "free". Nowadays, on an Intel chipset, you only have the legacy IO chip on that bus, which is not that much... Everything else is either PCI-E or has dedicated buses (like HD Audio). On this particular board, only the Firewire chip is PCI-based (along with the legacy IO controller), and there is only one PCI slot, so you wouldn't have a hard time with PCI bandwidth issues. Unless you frequently use multiple external Firewire HDDs (again, capped at 40MBps each) to transfer data via Gigabit...
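
As a rough sanity check on the numbers above, here is a minimal Python sketch that converts MB/s to Mbps and compares the disk rates quoted in this post with the NIC throughput figures. The 133 MB/s value is the theoretical peak of a 32-bit/33 MHz PCI bus; it is not stated in the post and is added here as an assumption. The function and variable names are made up for illustration.

```python
# Rough bandwidth arithmetic; illustrative only.

def mbytes_to_mbits(mb_per_s: float) -> float:
    """Convert megabytes per second to megabits per second (8 bits per byte)."""
    return mb_per_s * 8

# Figures quoted in the post above.
HDD_SUSTAINED_MB = (30, 40)        # MB/s, typical sustained disk rate
PCI_NIC_THROUGHPUT = (600, 700)    # Mbps, PCI-based Gigabit NICs
PCIE_NIC_THROUGHPUT = 900          # Mbps, best PCI-E Gigabit NICs

# Assumption (not from the post): 32-bit / 33 MHz PCI peaks at about 133 MB/s,
# shared by every device on the bus.
PCI_BUS_PEAK_MB = 133

hdd_low, hdd_high = (mbytes_to_mbits(x) for x in HDD_SUSTAINED_MB)
pci_bus_peak_mbits = mbytes_to_mbits(PCI_BUS_PEAK_MB)

print(f"HDD sustained: {hdd_low:.0f}-{hdd_high:.0f} Mbps")
print(f"PCI bus theoretical peak: {pci_bus_peak_mbits:.0f} Mbps")
print(f"Disk rate fits within a PCI NIC's throughput: {hdd_high <= PCI_NIC_THROUGHPUT[0]}")
```

With a single disk as the bottleneck, the PCI versus PCI-E difference for the NIC does not come into play, which is the point the post is making.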

Cheers.

Miguel

Damn, this sucks. I've never used systems with integrated graphics so I wasn't up to date on this behaviour; that it still works like this. It bloody well should be supported. I'm thinking of driving three or more monitors with a microATX system, with a large GPU cooler on the primary discrete card - there might not be room or free slots for another discrete graphics card.

Indeed, I'm just planning for the future in case I build a fileserver over Gigabit LAN someday. That would or could be faster than a local disc if the CPUs on both ends are up to it.

Using a PCI graphics card is, I guess, my only hope and even that isn't fool-proof - first I'd have to find a PCI graphics card from NVIDIA, have a free slot for it and then hope the OS doesn't play dumb when I try to use separate, different drivers for it and the main card.

For three monitors, I think only the X1250/690G ATI, and perhaps the 6000 and 7000 series NVIDIA chipsets can handle IGP and GPU (from the same manufacturer, mind you) at the same time. NVIDIA is also preparing something called "Hybrid SLI" that can also help you there. As for Intel, the multi-GPU approach is not available because... well, Intel doesn't manufacture dedicated GPUs... hehehe

Unless HDD technology changes a lot in the next few years, or you use big RAID arrays for sequential reads, you won't be able to max out the Gigabit bandwidth that easily. Sustained 75MBps (600Mbps) is not that easy to achieve...

Well, good luck with that one. Do keep in mind that it seems Vista only allows one VGA driver, and the FX5200 (the only cards I know of that are available in PCI format and work with Vista) uses a different driver than the newer 6, 7 and 8 series...

In XP it is easier. I've had multiple VGA cards working hand-in-hand in XP, and with different GPUs. Do steer clear of S3 cards, though, since the only way to make them work in XP is with them being the primary display adapter, which is usually not a very good idea...

Cheers.

Miguel

I find it hard to believe this is so complicated. On the other hand, multi-monitor support is probably usually provided for professionals and business environments by Quadro or other such cards, and gaming cards have never been considered part of the equation... The setup I need is probably two monitors on the main card (some NVIDIA card possibly, like I now have) and a TV on some second card, with hopefully a digital connection to eliminate analog worries. Finding an old, supported card that can do that is the problem there. It might also have to be a PCIe x1/x4 card for these new mATX boards.

Which wouldn't be the goal either, but just something faster than a 100Mbit LAN with some bandwidth in use already.

I'm steering well clear of Vista anyway. The only problem may be with having to install drivers from different releases of ForceWare - I have no idea if the newest one's control panel can still talk to older drivers from previous versions. They do drop older cards from the package from time to time, don't they?

Good to hear. Is there anything special involved with installing cards of different models, and even makes? Do they all still support hardware acceleration (something I was told might not work, but I have no time or hardware at hand to test these things at the moment). Pretty much the only requirement for such a PCI card I need is a DVI connector in the back and high resolution support, at 1920x1080 (or 1200). (I've tried S3 cards with older machines by the way and I know they're a pain in the ass to use.)

There are some industrial cards dedicated exactly to that. I've seen reports of cards with support for up to nine monitors (which is the maximum Windows currently supports), if memory serves me right.

If you want to use XP, you have many more options, since several VGA drivers are allowed. Your only problem becomes driver or hardware incompatibility.

So, since I'm on the "good news" side, I have to tell you there are also PCI-E 1x GPUs, so you have just opened up many more possibilities. And much more if you consider options like the GA-G33M-DS2R (and the G31 "little brother"), which has both a PCI-E 16x and a PCI-E 4x slot (open-ended). Not to mention you can even CF these cards...

As for NVIDIA drivers, the 5200 series driver support ended on the 5x.xx Detonator series (not really sure, but there are several cards whose support ended long ago). And I don't know how the drivers behave with different versions at the same time...

As for your last paragraph, I have to say the last time I have messed around with multi-monitor support was a few years ago with TNT2 and S3 cards, so "hardware acceleration" wasn't an issue... lol If you stick with GPUs from the same manufacturer (and same driver), you should be fine and keep all the features, since the drivers already support multi-monitor configurations.

One last note: I've never seen NVIDIA-based PCI-E 1x cards, only ATI-based, and from the X1000 series, if memory serves me right. However, I do know most ATI cards (well, at least those that use dedicated power connections) work in reduced bandwidth scenarios (there was an article on that last year on Anandtech or Tom's Hardware that tested an X1950XTX). So, even with an open-ended 4x or 1x ("custom" open-ended 1x, if you know what I mean... hehehe) you should be able to use other GPUs on the same system.

Cheers.

Miguel

P.S.: Sorry for the HUGE off-topic

CrimandEvil

"Anyone got theirs yet?"

Are they even out (in the States) yet?

I haven't seen it yet. I'm also keeping an eye on it; I don't know if I should replace my abit F-I90HD with this one. I never had a problem with my board, unlike many others, but maybe I should not push my luck further.

Also, I emailed abit twice to ask if this board would run the new 45nm Penryn and I never got an answer.

Now I read there is also the ASUS P5N-EM HDMI with nForce 630i coming, but I can't find any other specs or an official announcement. Is the nForce 630i a better solution than Intel G35 / ICH9R? I have no idea.

Glad pvhk you'll get it 1st since I see it is listed in your country. I'll base my decision on your board evaluation and your 1st thought. Hopefully it will be available soon here also.

Now I need to decide if I will match it with an 8800GT or an HD 3870. I know the 8800GT has a little more power, but the 3870 runs cooler and more quietly. I run my current integrated ATI with the driver only, as I hate CCC, and I wonder if I will have to use CCC to run the HD 3870 if I don't plan to play with frequencies and all. I think the NVIDIA driver panel is lighter than the AMD/ATI CCC.

Geesh it's really hard to decide!

If you're happy with the F-I90HD, I don't see why you should change it. But if you DO want to change it, I won't mind taking it for free.

The nForce 630i P5N-EM HDMI does not match the P5E-VM HDMI in terms of performance!

Now, if the G35-based mobos are ANYTHING like the G33, they will be performance monsters (for mATX, of course... hehehe). G33 are already good, but if G35 is even more enthusiast-friendly, then the full-ATX standard will start to have problems keeping up (well, the low-to-mid-end, at least...).

As for GeForce 7xxx-based mobos, there is good news and bad news. The good news is that the IGP seems to be somewhat similar to the (low-end) 6xxx series in terms of performance. The bad news is that the 7xxx IGP is apparently worse than the 6xxx IGP.

As a sidenote, right now the only IGP-enabled (it seems, at least on Intel chipsets, all of them have the IGP logic in them, only not enabled...) northbridges available that support dual-channel memory mode are anything Intel-based (starting with the 865G, which can support up to 1066MHz C2Ds, though only 800MHz ones for the IGP; more than that needs an AGP graphics card) and the X1250, from ATI. Everything else (and trust me, I've checked every one of them) is only single-channel if it has an IGP.

Kind of lame, if you ask me... You either choose performance or price, there is almost nothing in the middle... I mean, you can get something VIA-based for like 30~40, and for 50~65 you have 945G/GC boards (I can't really understand why they are so popular these days...). The good stuff (X1250 and G33) starts at 90~150, which is rather expensive...

So, in short, the best IGP chipset right now is G33+ICH9(R), followed by X1250+SB600. Those are also the only OC-friendly boards you have (although some 945G/GC and G31-based mobos might disagree on that, at least as far as the X1250 is concerned... hehehe). They all also have severe "quirks" (lack of memory dividers for Gxx, poor memory performance for 945G/GC, lack of RAID southbridges, iffy memory slots for X1250...). Hopefully G35 will be an über-northbridge, and answer all those issues.

Cheers.

Miguel

This is from a post made by Gary Key over at AT.

"The G35 board is the ASUS P5E-VM HDMI, no issues with getting a Q6600 up to 9x400 on it if that is in anyone's plan, the BIOS is fairly extensive for overclocking the board, video output quality is much improved over G31/G33, and the one drawback of the board so far is that ASUS used the Realtek ALC883 codec, not a big fan of the 883 when the 888T and 889A are much better if they had to use Realtek."

The entire thread can be found here:

http://forums.anandtech.com/messageview.aspx?catid=29&threadid=2120540&enterthread=y
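
For anyone unsure what "9x400" works out to, the core clock is simply the CPU multiplier times the FSB base clock. A minimal Python sketch follows. The Q6600's stock setting (9 x 266 MHz nominal, roughly 2.4 GHz) is not in the quoted post and is included here as an assumption for comparison; the function name is made up for illustration.

```python
def core_clock_mhz(multiplier: float, fsb_mhz: float) -> float:
    """Core clock = CPU multiplier x FSB base clock (in MHz)."""
    return multiplier * fsb_mhz

overclocked = core_clock_mhz(9, 400)  # the 9x400 setting from the quote
stock = core_clock_mhz(9, 266)        # assumed stock Q6600 setting (nominal 266 MHz FSB)

print(f"Overclocked: {overclocked:.0f} MHz")
print(f"Stock (assumed): {stock:.0f} MHz")
print(f"Gain over stock: {100 * (overclocked / stock - 1):.0f}%")
```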

Meh, that's not too bad of a problem. At least you can add your own soundcard. What we need to know about are the unfixable problems this board might have. With the exception of the PCI slot arrangement (what do I need two 4x PCIE slots for?), this board is looking pretty perfect to me.

Got the P5E-VM HDMI today and I'm somewhat disappointed.

I thought the onboard voltage damper would eliminate Vdroop but it didn't.

When set to 1.7 vcore it gives 1.48 real volts... guess we need to pencil mod this sucker too. I haven't updated to the latest BIOS yet and am still on version 0202; maybe 0301, which is the latest, fixes this... Will post back soon...

Not 100% sure, but I think in that post Gary said they were waiting on newer BIOSes from the manufacturers that sent mobos in, including the P5E-VM HDMI. Next week, I think he said. Which brings up the question: why are manufacturers sending products out to be reviewed when they knowingly don't have a good working BIOS? That's just plain wrong to my thinking. AT needs to take these manufacturers to task about such silliness.

That's weird!!

Allsop, Does it have the same or better max fsb compared to the p5k-vm?

What is the module upon the pcie16x slot?

Have not tried any FSB higher than 450 due to instability of my Q6600 at the 9x multiplier...

About the module, I don't know; there is nothing about it in the manual, and its only ID is ASM_1 and ASM2....

And it's soldered to the board and not removable.

Anyway, I don't know why Asus releases an MB such as this with Voltage Damper and a max vcore of 1.7 in the BIOS, when it doesn't live up to its expectations.

1.48 max vcore or 1.58 with vdroop mod is far from acceptable... shame!
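
To put those readings in perspective, here is a small Python sketch of the gap between the BIOS setting and the measured voltage, using only the figures reported in these posts (1.7 V set, 1.48 V measured, 1.58 V after the pencil mod). The helper name is hypothetical, and the gap lumps together any fixed offset and load-dependent droop, since the posts do not separate the two.

```python
def shortfall(set_v: float, measured_v: float) -> tuple[float, float]:
    """Return (absolute gap in volts, gap as a percentage of the set value)."""
    gap = set_v - measured_v
    return gap, 100 * gap / set_v

# Readings reported in the thread for a 1.7 V BIOS setting.
for label, measured in [("stock board", 1.48), ("after pencil mod", 1.58)]:
    gap, pct = shortfall(1.7, measured)
    print(f"{label}: {gap:.2f} V below setting ({pct:.1f}%)")
```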

How did you perform the vdroop mod, like the p5k-vm?

could you post a screen of the resistor to pencil?

Thxs allsop!

btw: did you test all the 3 bios available including the 1st beta?

The board came with bios 0202 and now I'm on the latest 0301...

In the picture below you see which resistor to pencil, it's the one marked red.

Alternatively, you could pencil the resistor to the left of it.....

drazendead

Would performing the pencil mod on both resistors improve anything (increase the voltage a bit more)?

Thx

Already tried that... didn't bump the voltage....

drazendead

I'll try this: http://sg.vr-zone.com/?i=3904 with your Vmod ASAP

Thx allsop

That's only for the default voltage the CPU reports to the MB... it helps on MBs that don't offer vcore adjustments, but on my board I already have that possibility.

I would still be stuck at about 1.6 volts as I am now with the vdroop mod.

Thxs again for your vdroop mod!

When I receive mine, it will be done after some tests with my X6800!

"Curious to know, if I use the integrated HDMI/VGA and a PCI (non E) graphics card, will this work ok with Vista Aero? Or would it still be a problem since Vista only likes one driver?"

Your biggest problem there will be finding a PCI card which is Vista-compatible. The only ones I know of are FX5200-based NVIDIA cards, which are VERY rare (I think they are made by Club3D, but I'm not sure).

And then, yes, you'll have the major pain of getting them to work together in Vista. XP is already a tough nut to crack, Vista is like an adamantium nut.

So, in short, yes, you'll have a blast trying to get two non-SLI/CF cards (well, technically, those also have problems... lol) working in Vista. If you manage to get hold of one of those FX5200 cards and want to give it away, pass it to me, I've got a small P3-1GHz server just waiting for one of those (no AGP slot).

Cheers.

Miguel

I'm using this Radeon X1550 in one of my old Shuttle XPCs:

http://www.newegg.com/Product/Product.aspx?Item=N82E16814103031

It's PCI and is Vista compatible
