erek

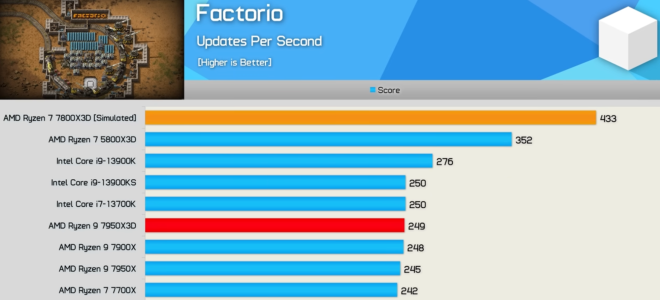

Should I stick with my AM4 5950X?

Honestly, I'll be sticking with my 5800X3D, it seems. I thought this would show better gains, but it really doesn't.


The IHS for AM5 is indeed thicker.

We don't, and I doubt that it is true, given that literally no one complained about having to spend a few bucks on a new mounting bracket for their existing coolers back when AM4 was introduced. Apart from that, I imagine that increasing socket thickness would be the more obvious solution for that problem.

I find it far more likely that the primary reason for the extra vertical space is the cache in the 3D versions of the CPU. If I remember correctly, the previous-gen 5800X3D required quite a bit of effort to obtain thinner chiplets so that they could add the cache on top within the existing AM4 spec.

smaller package makes it significantly harder to dissipate heat due to the lack of surface area. this is the key problem both Intel and AMD are going to run into as processes get smaller. the actual heat being dissipated through the IHS is much lower than what the actual cores themselves are running at. it was one of the reasons AMD made the IHS thicker, to act like a heat sponge so to speak, since the heat from the dies transfers more efficiently to the IHS than the IHS does to the cooler when going from an idle state to a loaded state.

What's most interesting to me is how the AMD CPU when scaled to a certain W is somehow always hotter than the Intel CPU at the same W. I wonder what's going on there.

That's what I'm doing. This thing is impressive, but not $1000+ system upgrade impressive.

Should I stick with my AM4 5950X?

Great point, I hadn't given that enough thought. I'll just chalk it up to being a bit tired after work today.

Don't we already know that the Zen4 chips have an extra thick IHS to maintain compatibility with AM4 coolers? Delidding, or even shaving off a mm or so, takes a huge chunk of temp off.

IDK what you mean. AMD blows them away in efficiency, so not sure what you mean by what he is drinking lmao.

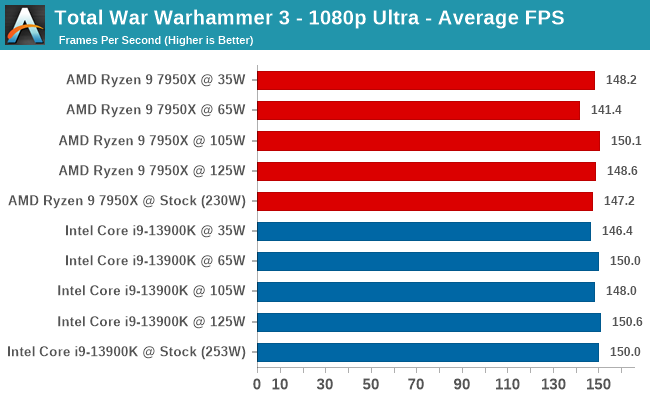

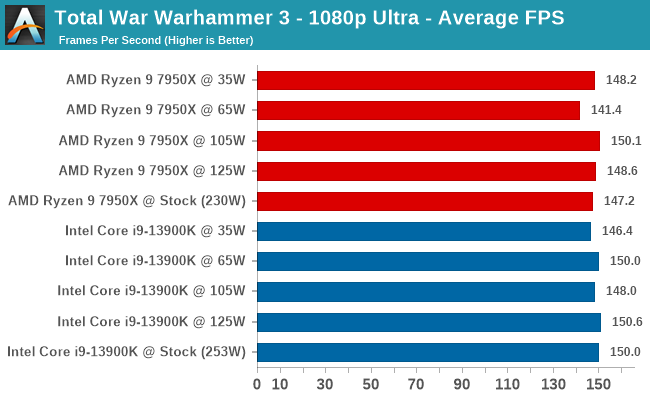

Wow, so just ignore the link I posted to spoon feed you. Let me spoon feed you actual images then.

He has no idea what he's talking about. And the closest thing he can come up with to a retort is to reference some power scaling numbers from the 45w-higher-TDP 7950X.

AMD could choose to juice the 7950X3D with another 100w of maximum headroom, take away every marginal win Intel currently holds by doing so, and still come in with a lower total power draw.

Don't we already know that the Zen4 chips have an extra thick IHS to maintain compatibility with AM4 coolers?

Cool, let me know when we can play this game. In all seriousness, Cinebench has become a meme of a benchmark that spits out numbers that can't be interpreted into actual performance, just like Ashes before it. Seems the benches that favor AMD all end up in the same manner, who would have thunk.

These are scaling charts where the CPUs are basically measured against themselves on a wattage basis. You seem to be dismissive of numbers only when it doesn't fit your narrative.

Cool, let me know when we can play this game. In all seriousness, Cinebench has become a meme of a benchmark that spits out numbers that can't be interpreted into actual performance, just like Ashes before it. Seems the benches that favor AMD all end up in the same manner, who would have thunk.

I understand full well what those numbers mean, and yet unfortunately, they don't really apply to performance deltas when power scaling in any other application. So those numbers only apply to this one specific application and that really doesn't mean much at all when the application doesn't actually have any tangible purpose, now does it.

These are scaling charts where the CPUs are basically measured against themselves on a wattage basis. You seem to be dismissive of numbers only when it doesn't fit your narrative.

I see, so since Warhammer and Cinebench don't match up 1:1 - the Cinebench scaling numbers are meaningless - and Warhammer is the intuitive benchmark for watts to performance? I could barely get that typed out before laughing out loud for real.

I understand full well what those numbers mean, and yet unfortunately, they don't really apply to performance deltas when power scaling in any other application. So those numbers only apply to this one specific application and that really doesn't mean much at all when the application doesn't actually have any tangible purpose, now does it.

Cool, let me know when we can play this game. In all seriousness, Cinebench has become a meme of a benchmark that spits out numbers that can't be interpreted into actual performance, just like Ashes before it. Seems the benches that favor AMD all end up in the same manner, who would have thunk.

AMD and Intel aren't even close to equal on performance/power right now. There's a lot of data on the net, even on power normalized graphs outside of these Cinebench and AT's game benchmarks you showed (where it's entirely GPU limited btw) that Intel consumes way more power for the same amount of work. If Cinebench and Blender aren't enough, try all the other benchmarks which actually use the cores and see which CPU scales better when power normalized. Power consumption is one of the key reasons AMD are winning server contracts at the rate they are.

I understand full well what those numbers mean, and yet unfortunately, they don't really apply to performance deltas when power scaling in any other application. So those numbers only apply to this one specific application and that really doesn't mean much at all when the application doesn't actually have any tangible purpose, now does it.

I was reading the 7800X3D is likely better for most people since it's homogeneous and the 7950X3D doesn't spool up right in some games. So you're probably spot on.

What I'm noticing with these benchmark reviews is how good the 5800X3D still is. Pure gamers shouldn't even consider the 7950X3D. Stay with the 5800X3D or wait for the 7800X3D.

I do that too. I also get teased about turning on the desktop icons first thing after a Windows install.

To be expected since the 7800X3D is one CCD with 3D cache.

I must be old school in that I close everything else when I game.

I'd honest to God love a SimCity 4 comparison, lol. That game is notoriously locked to a single core that can get really bogged down later in the game.

It's just ridiculous that Hardware Unboxed is the only reviewer that still benchmarks CPUs using a CPU-reliant game - and they only use one game! Factorio - that's it. So many simulation/tycoon/strategy games out there that are bogged down by CPU performance - and the reviewers ignore all of them in favor of what fits their typical BS narrative, "CPUs don't really matter anymore, put your money into this (way overpriced) GPU instead (that won't actually noticeably increase game performance - just graphics)."

It looks like the 7000 series doesn't benefit quite as much from the extra cache but the results are still nice and the efficiency looks great. It looks like the 7800x3d will be the AM5 cpu to get for gaming and the 7950x is a better value for production but this generally gives you the best of both worlds for not too much more than the 7950x.

I don't really care for the asymmetrical design or especially that it requires software with updated profiles to work properly. I'm not a fan of Intel going big/little and this seems worse due to the extra software requirements and complexity involved in assigning the cores based on the type of task rather than just how demanding it is.

I'm going to think it over some, but I'll probably just grab a 5800X3D since it would be a drop-in upgrade for me and still isn't far behind the best in gaming. The only thing holding me back is that I'll probably upgrade to 32GB of RAM, and then I'll be a motherboard away from updating my secondary PC, so I'll probably still end up buying just as many components. However, a DDR4 kit and B450 board would be a lot cheaper than the DDR5 kit and X670 board that I'd get if I made the jump to AM5.

On the Intel side the 13900k is still a top end gaming cpu and the 13600k is a really nice bang for the buck cpu but they're unlikely to have nearly as many upgrade options available as an AM5 system which is a big factor for me when everything else seems fairly equal.

It's just ridiculous that Hardware Unboxed is the only reviewer that still benchmarks CPUs using a CPU-reliant game - and they only use one game! Factorio - that's it. So many simulation/tycoon/strategy games out there that are bogged down by CPU performance - and the reviewers ignore all of them in favor of what fits their typical BS narrative, "CPUs don't really matter anymore, put your money into this (way overpriced) GPU instead (that won't actually noticeably increase game performance - just graphics)."

it's a limitation of the game, doesn't matter what cpu you have you'll still hit the same problem, and to do that would take forever in a benchmark scenario for a game that maybe 1000 people on a good day still play.

I'd honest to God love a SimCity 4 comparison, lol. That game is notoriously locked to a single core that can get really bogged down later in the game.

The AMD FX-62 was $1,031. Intel's top CPU at the time was the Pentium Extreme 965, which was $999. Just a couple months after the FX-62 released was when Intel launched the first products in the Core product line, using the Conroe microarchitecture. The top of that product line, the X6800, was also $999. AMD responded by pushing the Windsor architecture further with the FX-74 and FX-76, but couldn't make up the performance deficit. Both of those processors launched at the $999 price point to compete with Intel. With the launch of Phenom the following year, the period where AMD competed on price instead of raw performance began. Intel Core really disrupted the CPU market and led to their market dominance for the next decade.

But wasn't AMD's flagship CPU always cheaper than Intel's flagship?

Yes yes, I know. It was just a "what if" type of wish, lol. Wouldn't want to get in the way of the millionth triple digit fps CS:GO benchmark at 720p.

it's a limitation of the game, doesn't matter what cpu you have you'll still hit the same problem, and to do that would take forever in a benchmark scenario for a game that maybe 1000 people on a good day still play.

I think it is more about difficulty than impossibility. Which just means the reviewers are complacent (at least the big ones that have time/money) - it's their job to do the difficult tasks involved with reviews. Some games allow extensive custom modding, map/scenario editing, save game editing, etc... Can create something that is as linear and scaled-up as possible while still simulating the calculations involved with a dynamic game.

simulation games are very hard to benchmark properly due to the dynamic nature of most modern simulation games. if there's no way to do an in game rendered replay, which is how most of them benchmark FS2020, you can't get apples to apples numbers, so you have to do multiple run throughs to get a +-5% average which is still too large of a gap. while i definitely enjoy playing simulation/strategy games i can't think of a single one that's worth wasting benchmark time on and that includes FS2020.

It's even more ridiculous when you read the comments and people are crying about 720p/1080p benchmarks. Like they can't grasp that you are trying to see how fast the CPU is relative to others with the GPU limits removed. Like say a CPU is 40% faster than another at a low resolution but equal in 4K. You can bet a game will come along eventually that needs that difference in performance and you will have an idea of how they will compare.

It's just ridiculous that Hardware Unboxed is the only reviewer that still benchmarks CPUs using a CPU-reliant game - and they only use one game! Factorio - that's it. So many simulation/tycoon/strategy games out there that are bogged down by CPU performance - and the reviewers ignore all of them in favor of what fits their typical BS narrative, "CPUs don't really matter anymore, put your money into this (way overpriced) GPU instead (that won't actually noticeably increase game performance - just graphics)."
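The low-resolution argument can be put in a few lines of Python. This is only a toy model, assuming the frame rate you see is simply the slower of what the CPU and the GPU can each deliver; the function name and every number below are made up for illustration, not benchmark results.

```python
def delivered_fps(cpu_fps, gpu_fps):
    """Toy model: the slower of the two stages sets the frame rate."""
    return min(cpu_fps, gpu_fps)

# Hypothetical limits: cpu_fast can prepare 40% more frames than cpu_slow.
cpu_limits = {"cpu_slow": 100.0, "cpu_fast": 140.0}
# Hypothetical GPU ceilings at a low and a high resolution.
gpu_limits = {"720p": 400.0, "4K": 90.0}

for resolution, gpu_fps in gpu_limits.items():
    for cpu, cpu_fps in cpu_limits.items():
        fps = delivered_fps(cpu_fps, gpu_fps)
        print(f"{resolution} {cpu}: {fps:.0f} fps")
```

With these placeholder numbers the two CPUs separate at 720p and tie at 4K, which is the whole reason for testing at low resolution: the gap is still there, the GPU is just hiding it.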

The AMD FX-62 was $1,031.

That's cool and all, but can you really blame people for wanting to see some real world differences today? Again, reviewers should make more effort in showing off CPU heavy games instead of trotting out the same old FPS/1st person RPG spread.

You can bet a game will come along eventually that needs that difference in performance and you will have an idea of how they will compare.

Gamers Nexus has FFXIV at least, which does benefit from newer architectures and cache quite a bit.

It's just ridiculous that Hardware Unboxed is the only reviewer that still benchmarks CPUs using a CPU-reliant game - and they only use one game! Factorio - that's it. So many simulation/tycoon/strategy games out there that are bogged down by CPU performance - and the reviewers ignore all of them in favor of what fits their typical BS narrative, "CPUs don't really matter anymore, put your money into this (way overpriced) GPU instead (that won't actually noticeably increase game performance - just graphics)."

Did not notice it was an MMORPG - good to see something different than the single-player first-person benchmarks. But looks like the cache didn't help too much. 5800X3D is beaten by the 7700X. And we can't really determine anything definitively with the 7950X3D because, as Hardware Unboxed already showed, it appears the split design of the CPU cache reduces performance of the cache - and Gamers Nexus did not run any "simulated" cache-only tests with the non-cache cores disabled. Have to wait for the 7800X3D to see how the cache really fares in these benchmarks.

Gamers Nexus has FFXIV at least, which does benefit from newer architectures and cache quite a bit.

I think it is more about difficulty than impossibility. Which just means the reviewers are complacent (at least the big ones that have time/money) - it's their job to do the difficult tasks involved with reviews. Some games allow extensive custom modding, map/scenario editing, save game editing, etc... Can create something that is as linear and scaled-up as possible while still simulating the calculations involved with a dynamic game.

That is one of the other things that drive me nuts. Benchmarks for games like Total War and Civilization that measure only in FPS! If you're not measuring turn time too, don't bother including those games at all.

It really shouldn't be any harder than playing a CPU intensive turn based game like Civilization.... saving a game at a point where there are many things to calculate. Load same save game up with different systems... hit next turn button, use a stopwatch if you have to. A lot of people do play simulation games... when it comes to a CPU I would like to know how fast it is calculating a next turn. There are some games that do really take a bit to calculate every turn. They aren't sexy enough to grab YouTube eyeballs I guess. I don't really care how many FPS Civilization is running at, any decent GPU will take care of that end. I want to know if I can look forward to late game 20s or 5s next turn clicks. I would have to think it's exactly those types of games that would do well with massive cache.

I was thinking that. Is it really so hard to just load a savegame later into a large sim game and see how end turn/assets load? I get a bit tired of CPU benchmarks using almost the same exact metrics as GPU benchmarks.

It really shouldn't be any harder than playing a CPU intensive turn based game like Civilization.... saving a game at a point where there are many things to calculate. Load same save game up with different systems... hit next turn button, use a stopwatch if you have to. A lot of people do play simulation games... when it comes to a CPU I would like to know how fast it is calculating a next turn. There are some games that do really take a bit to calculate every turn. They aren't sexy enough to grab YouTube eyeballs I guess. I don't really care how many FPS Civilization is running at, any decent GPU will take care of that end. I want to know if I can look forward to late game 20s or 5s next turn clicks. I would have to think it's exactly those types of games that would do well with massive cache.

I mean thinking about it, I guess a reviewer could argue... well, the game AI will do something a little different every run. However that should also be easily controlled for. I mean how long would it really take to reload the game and hit next turn 10 times for each CPU and average them.

I was thinking that. Is it really so hard to just load a savegame later into a large sim game and see how end turn/assets load? I get a bit tired of CPU benchmarks using almost the same exact metrics as GPU benchmarks.
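For what it's worth, the "reload, hit next turn 10 times, average" idea is easy to script once the timings are written down. A rough Python sketch, assuming the turn times were taken by hand with a stopwatch; the helper name and all the numbers are hypothetical placeholders, not measurements. It reports the average plus the run-to-run spread, so you can see whether AI variance is small compared to the gap between CPUs.

```python
from statistics import mean, stdev

def summarize_turn_times(seconds):
    """Return (mean, relative spread) for a list of next-turn timings in seconds."""
    avg = mean(seconds)
    # Sample standard deviation as a fraction of the mean: a quick check on
    # how much the game AI varies from one reload to the next.
    spread = stdev(seconds) / avg if len(seconds) > 1 else 0.0
    return avg, spread

# Hypothetical stopwatch timings: 10 reloads of the same save per CPU.
runs = {
    "cpu_a": [20.0, 21.0, 19.0, 20.0, 20.0, 21.0, 19.0, 20.0, 20.0, 20.0],
    "cpu_b": [5.0, 5.5, 4.5, 5.0, 5.0, 5.5, 4.5, 5.0, 5.0, 5.0],
}

for name, times in runs.items():
    avg, spread = summarize_turn_times(times)
    print(f"{name}: {avg:.1f}s average, +/-{spread:.1%} run-to-run")
```

If the spread comes out at a few percent and the CPUs differ by a lot more than that, the "AI does something different every run" objection mostly goes away.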

This man just said that in power normalized test intel consumes more power. I can't even make this shit up.

AMD and Intel aren't even close to equal on performance/power right now. There's a lot of data on the net, even on power normalized graphs outside of these Cinebench and AT's game benchmarks you showed (where it's entirely GPU limited btw) that Intel consumes way more power for the same amount of work.

Cool, latch on to the mistake I made and ignore the rest of my comment, or all the other people who corrected you on your argument that AMD and Intel have the same power/performance. Let me correct myself: on power normalized graphs, Intel has way less performance for the same power. Or vice versa, you know, because it works both ways anyway.

This man just said that in power normalized test intel consumes more power. I can't even make this shit up.

It's as if you have no clue what a power normalized test is, holy shit.
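To spell out what "power normalized" means in this argument: both chips get capped to the same package power and you compare the score each one manages at that cap. A quick Python sketch; the chip names, scores, and the 100 W cap are hypothetical placeholders, not real results.

```python
def perf_per_watt(score, watts):
    """Benchmark score divided by the power cap it was measured at."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return score / watts

def compare_at_cap(scores, watts):
    """Rank chips by perf/W at one shared power cap, best first.

    scores maps a chip name to its score at that cap. Because the watts are
    the same for every chip, ranking by score and by perf/W give the same order.
    """
    return sorted(
        ((name, perf_per_watt(score, watts)) for name, score in scores.items()),
        key=lambda item: item[1],
        reverse=True,
    )

# Hypothetical scores with both chips limited to the same 100 W cap.
ranking = compare_at_cap({"chip_x": 30000, "chip_y": 24000}, watts=100)
for name, ppw in ranking:
    print(f"{name}: {ppw:.0f} points per watt")
```

That is also why "uses more power for the same work" and "does less work for the same power" describe the same gap: hold one side fixed and the difference shows up on the other.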

I mean let's be honest here. Doesn't matter what benchmark anyone finds. Intel uses more power than AMD. Some tests are better than others, but there is no dancing around the fact that Intel sucks at power/performance compared to AMD.

This man just said that in power normalized test intel consumes more power. I can't even make this shit up.

It's as if you have no clue what a power normalized test is, holy shit.