erek


Idk what this is all about but seems to be causing quite the stir elsewheres

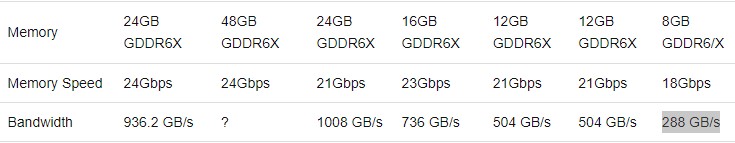

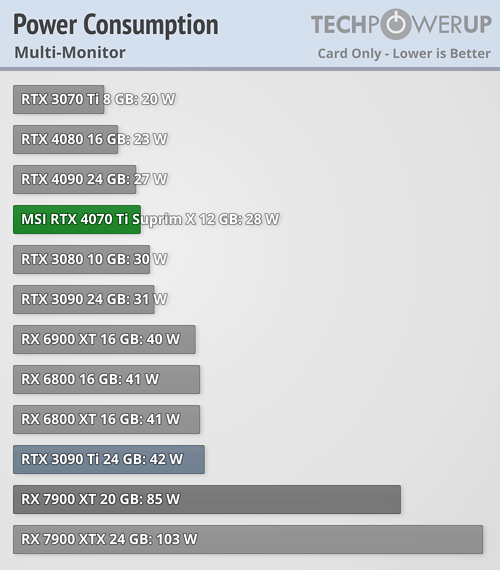

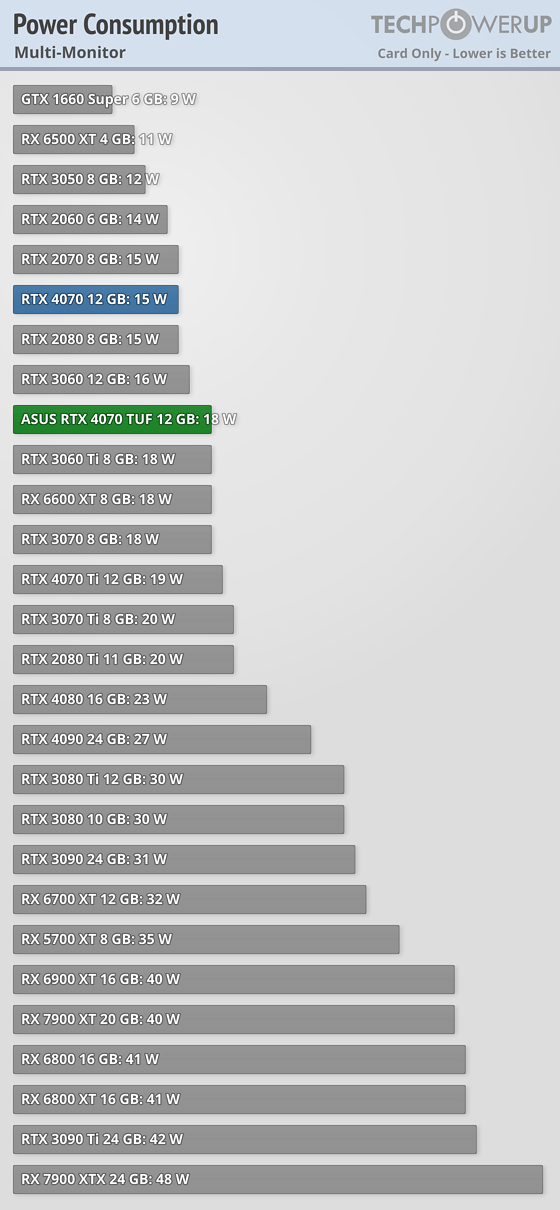

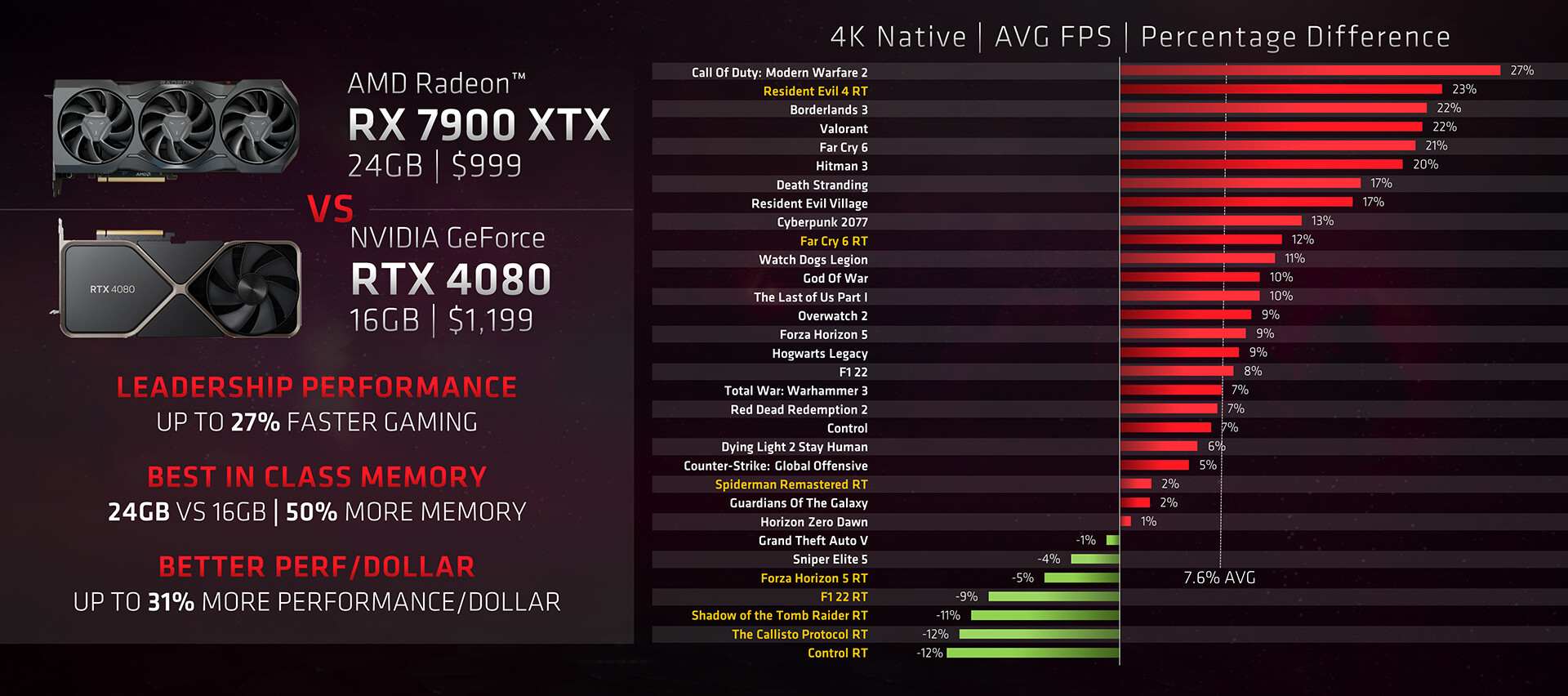

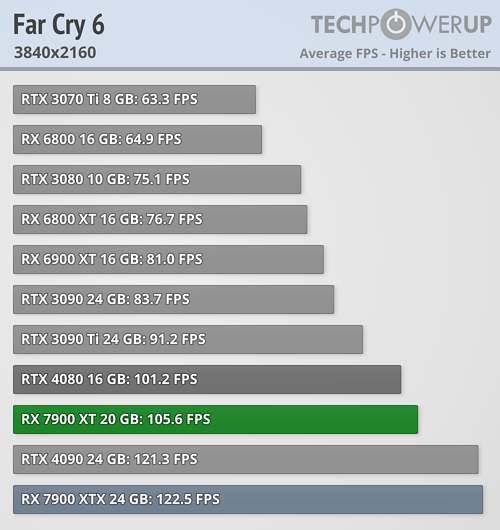

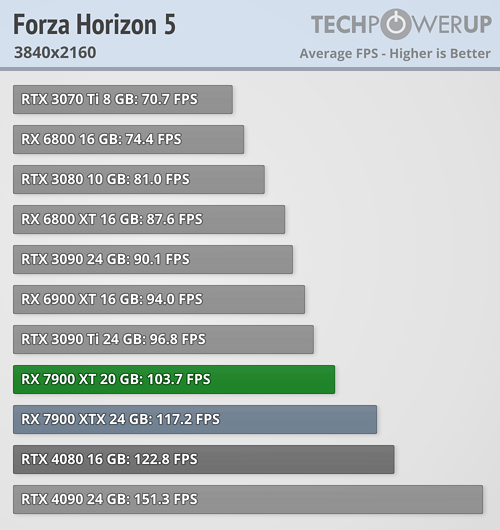

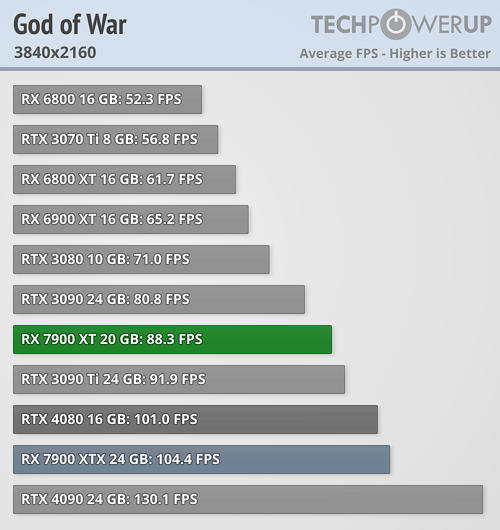

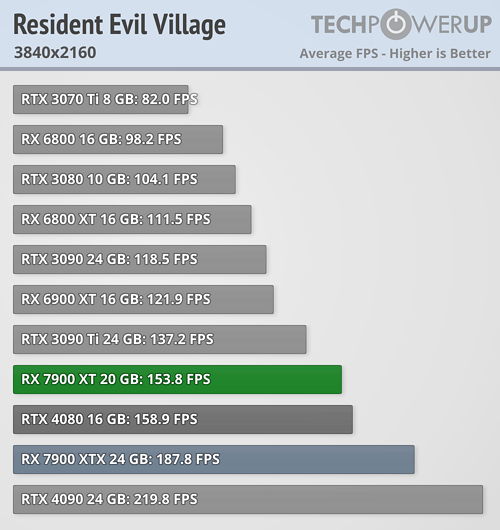

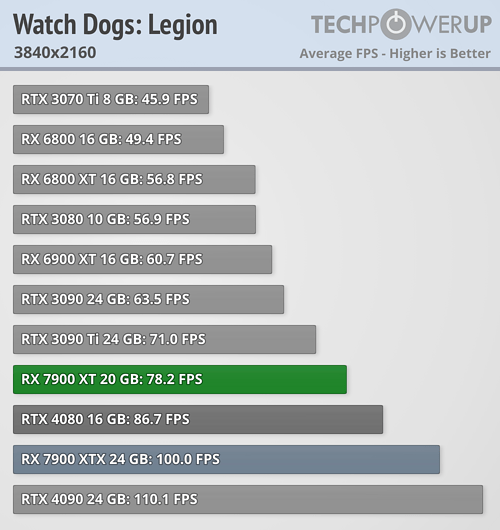

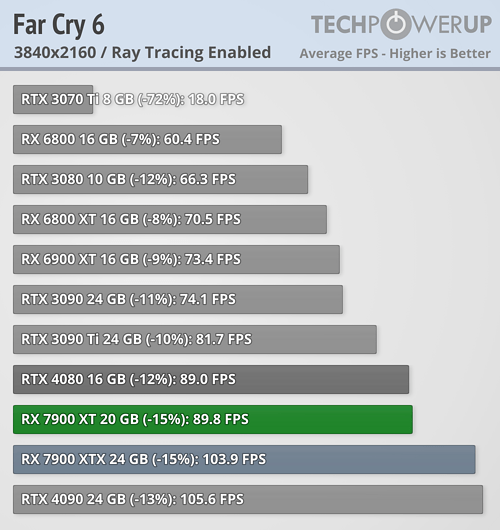

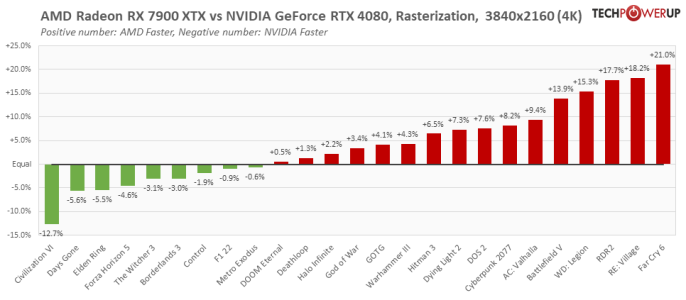

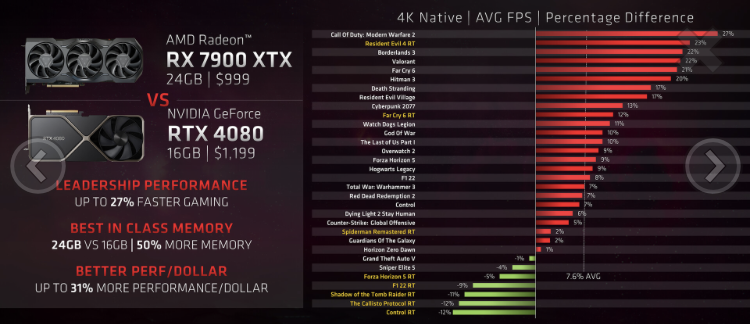

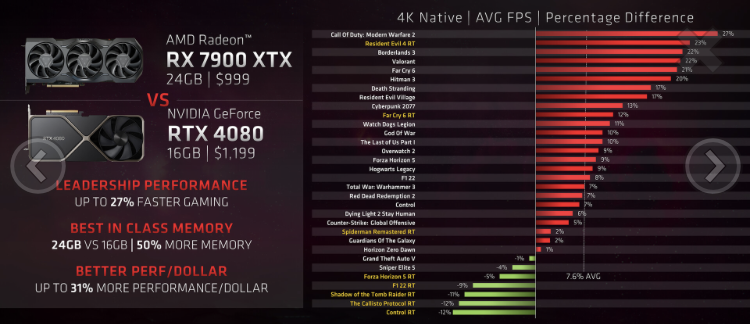

“AMD does have a point here, but as the company has as yet to launch anything below the Radeon RX 7900 XT in the 7000-series, AMD is mostly comparing its 6000-series of cards with NVIDIA's 3000-series of cards, most of which are getting hard to purchase and potentially less interesting for those looking to upgrade their system. That said, AMD also compares its two 7000-series cards to the NVIDIA RTX 4070 Ti and the RTX 4080, claiming up to a 27 percent lead over NVIDIA in performance. Based on TPU's own tests of some of these games, albeit most likely using different test scenarios, the figures provided by AMD don't seem to reflect real world performance. It's also surprising to see AMD claims its RX 7900 XTX beats NVIDIA's RTX 4080 in ray tracing performance in Resident Evil 4 by 23 percent, where our own tests shows NVIDIA in front by a small margin. Make what you want of this, but one thing is fairly certain and that is that future games will require more VRAM, but most likely the need for a powerful GPU isn't going to go away.”

Source: https://www.techpowerup.com/307110/amd-plays-the-vram-card-against-nvidia

