IdiotInCharge

NVIDIA SHILL

Joined: Jun 13, 2003 · Messages: 14,675

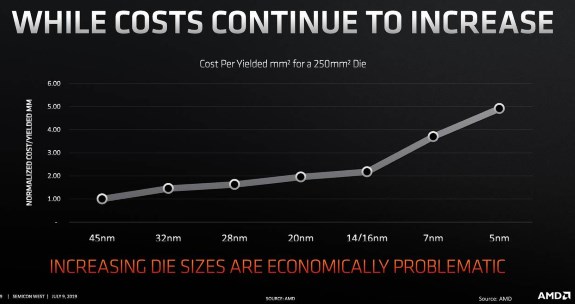

> Yes, thank you! Nvidia is an a-hole because they raised the cost of GPUs for no reason other than that they could at the time. Now they're f***ed because their chip is too big and has no headroom, while AMD has a much smaller chip that in some benchmarks matches the performance of Nvidia's $700 card. So, to recap: AMD's new GPU scales up and Nvidia's doesn't, and Nvidia's cards cost a lot more to produce, so they can't cut prices. So the AMD story is totally plausible.

Literally none of this is correct, and I don't know why you quoted me.

> Bad assumption how? I'm serious here, because everything points to it being cheaper: every deep dive I have read, and every insider who mentions costs per die or per wafer, says it will be cheaper for AMD. Some suggest it's significantly cheaper, while others suggest it's only marginally so. Nowhere have I seen anyone suggest it would cost more.

We can't just apply simple math: we don't know what TSMC is charging Nvidia per wafer, nor do we know what each working GPU costs Nvidia once yields are factored in. Nvidia could be paying less, and likely is, since they're a pretty bullish company. That's just basic negotiation.

> Price has to do with

A lot of things. I get your line of thought and agree that it applies; I just see it as incomplete, and we'll never get the complete picture. The argument that Nvidia is paying more and the argument that they're paying less are both supportable with the available evidence and reasoning.
