Benchmarks and source article here from PCWorld: https://www.pcworld.com/article/178...-3080-vs-radeon-rx-6800-xt-which-gpu-buy.html

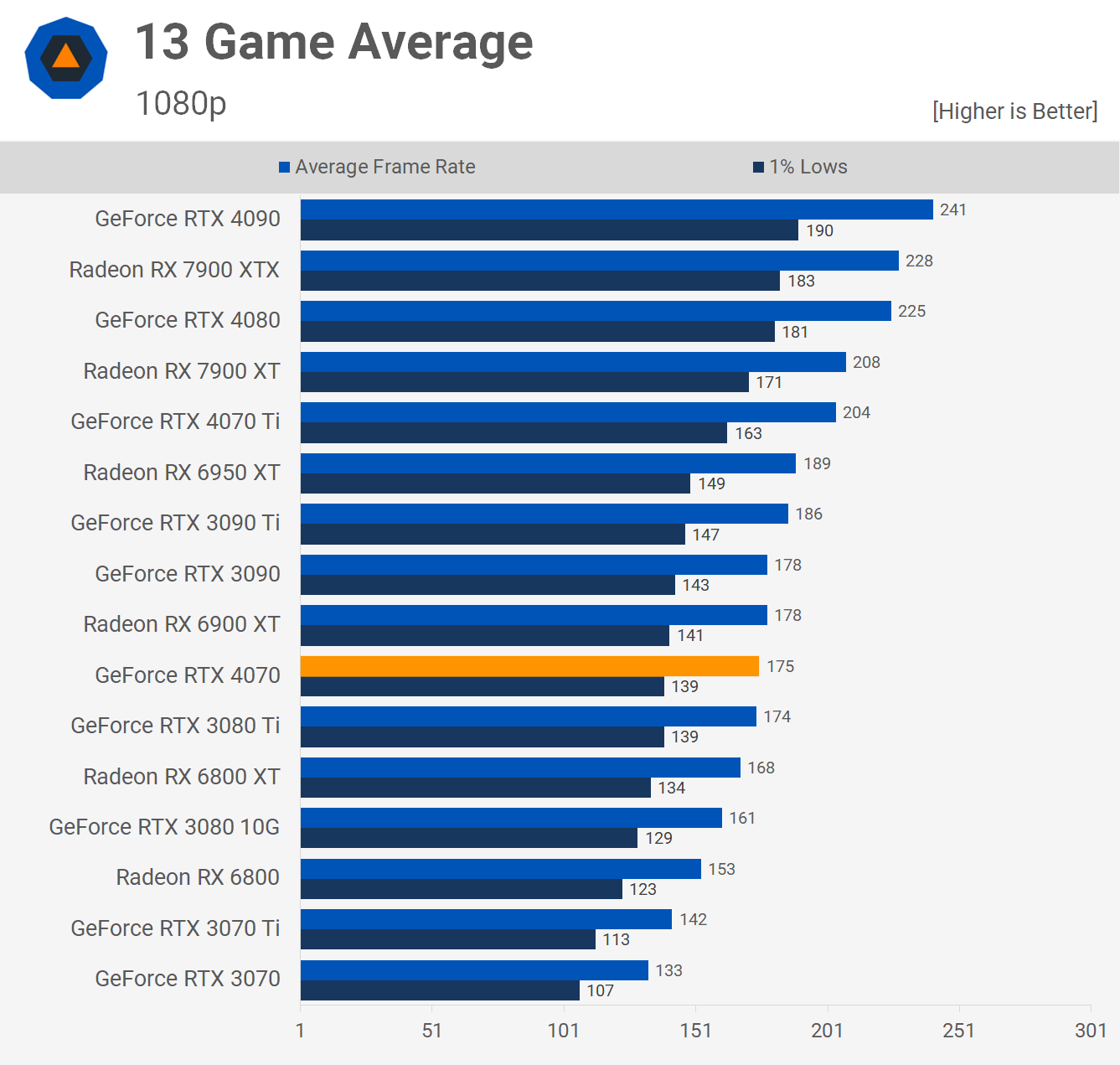

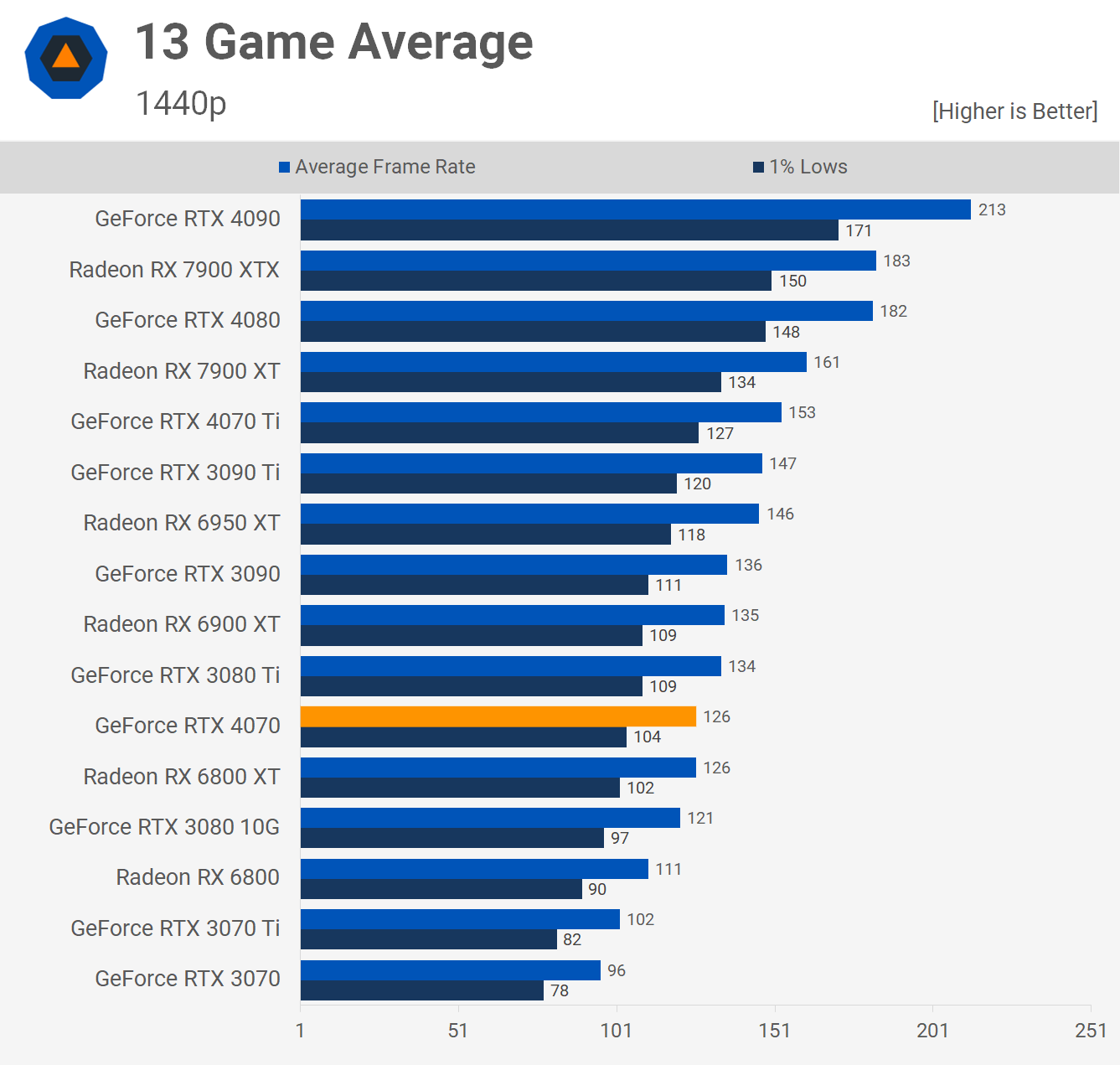

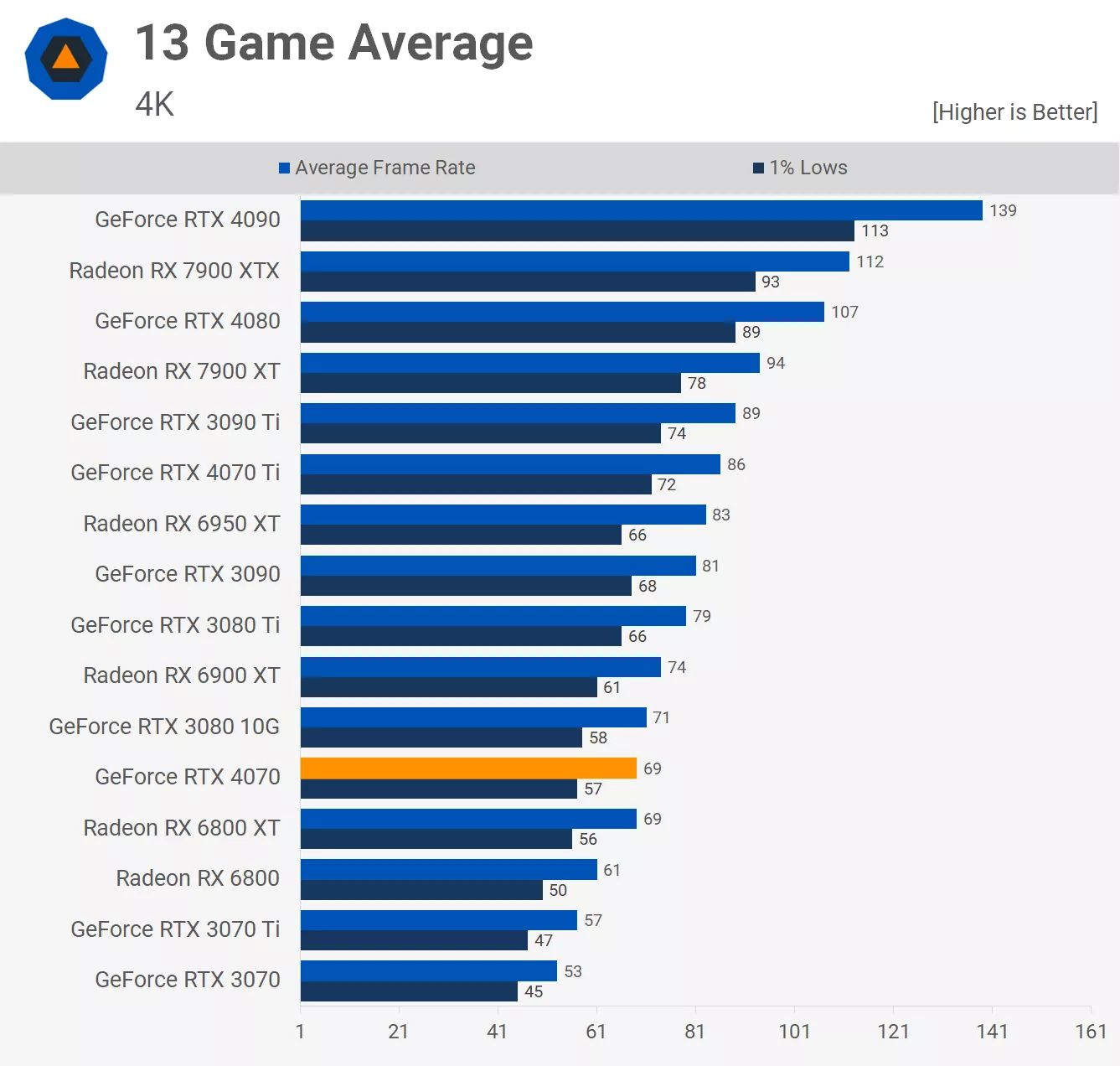

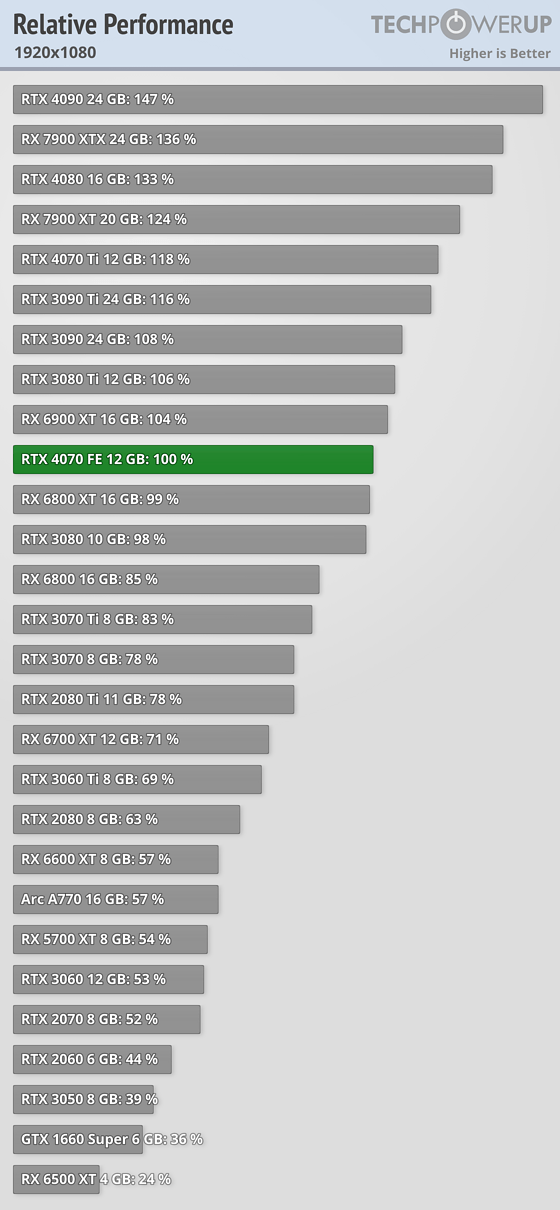

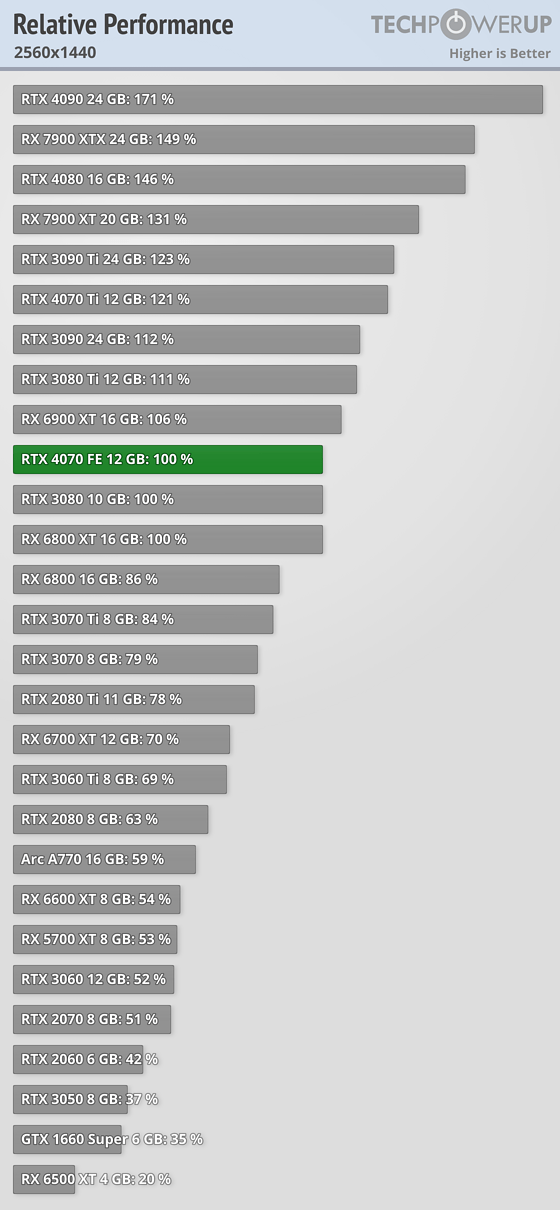

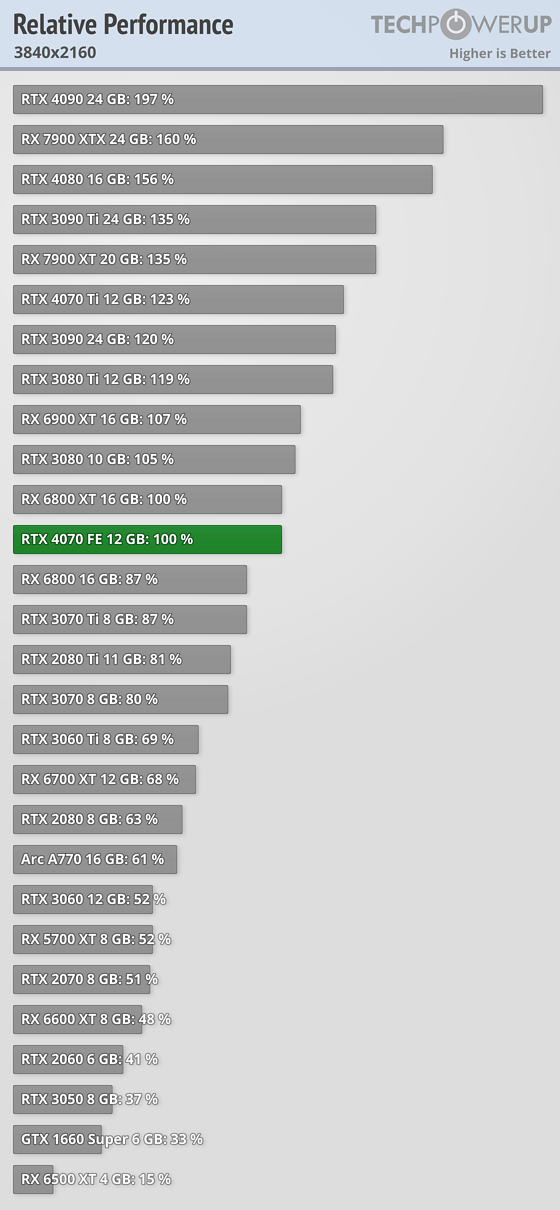

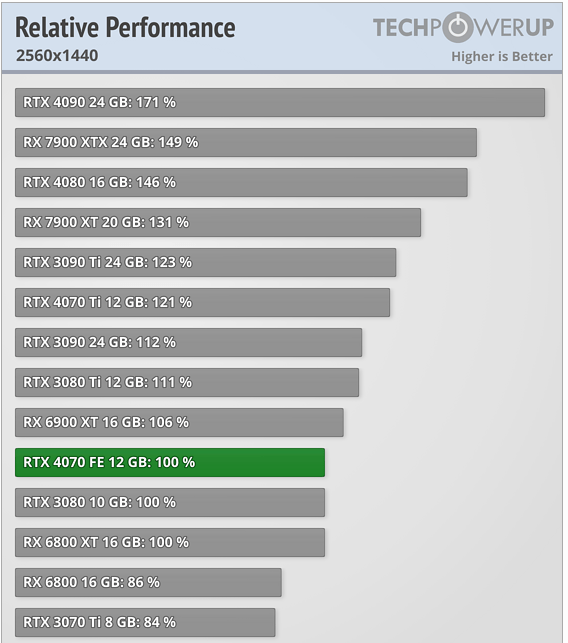

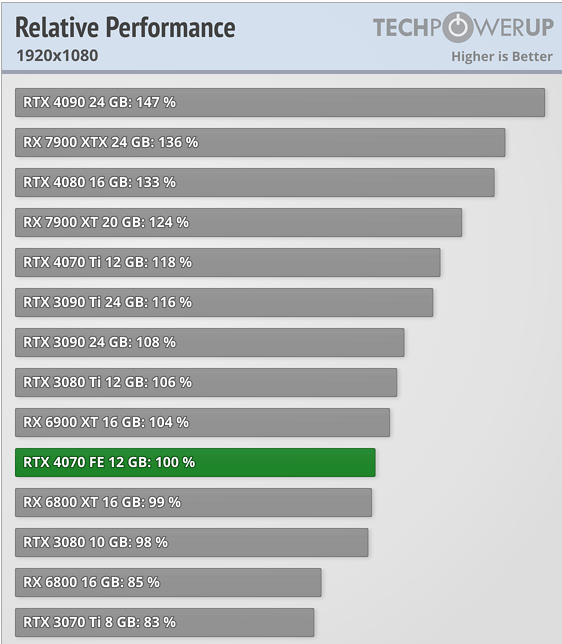

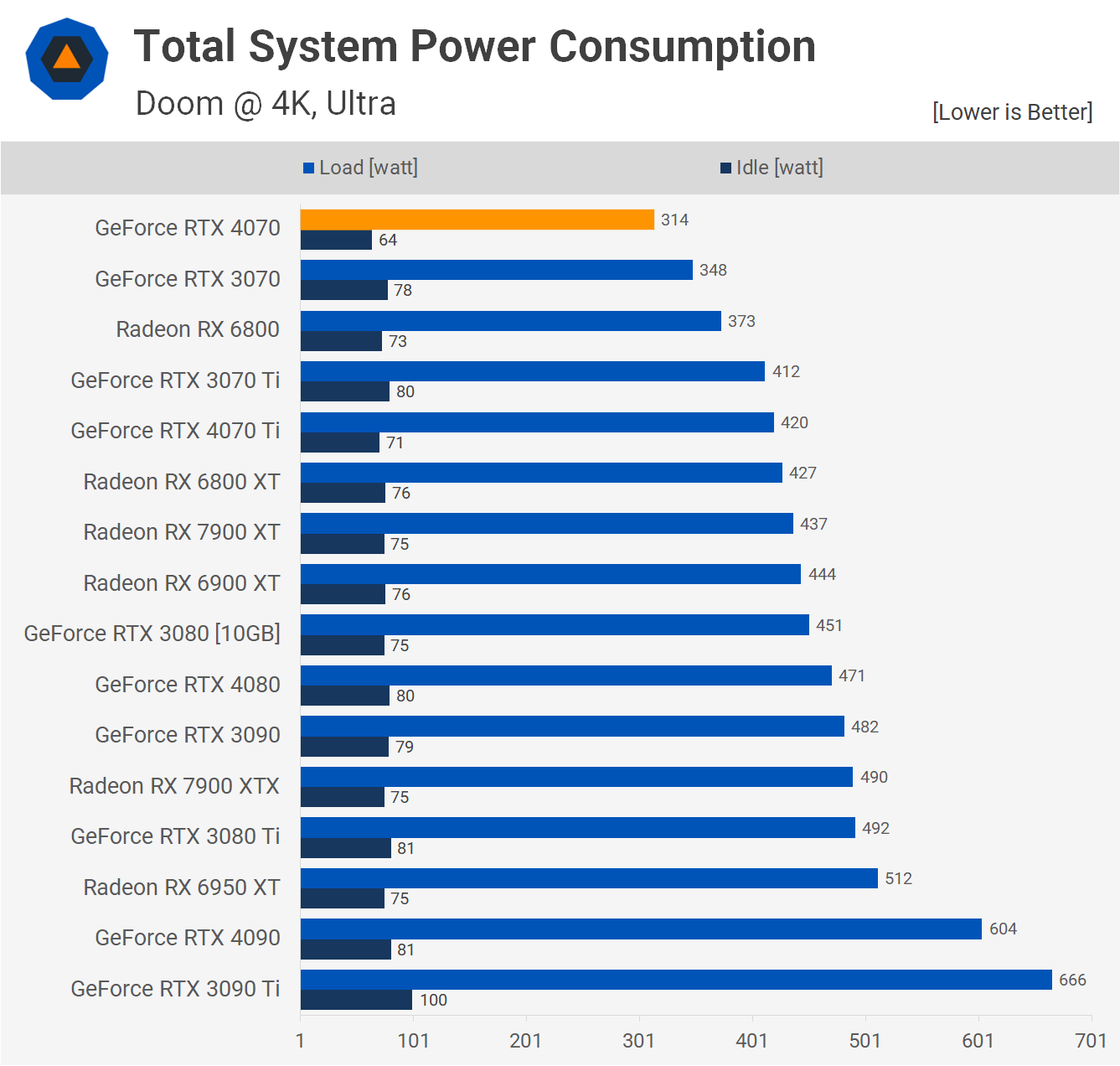

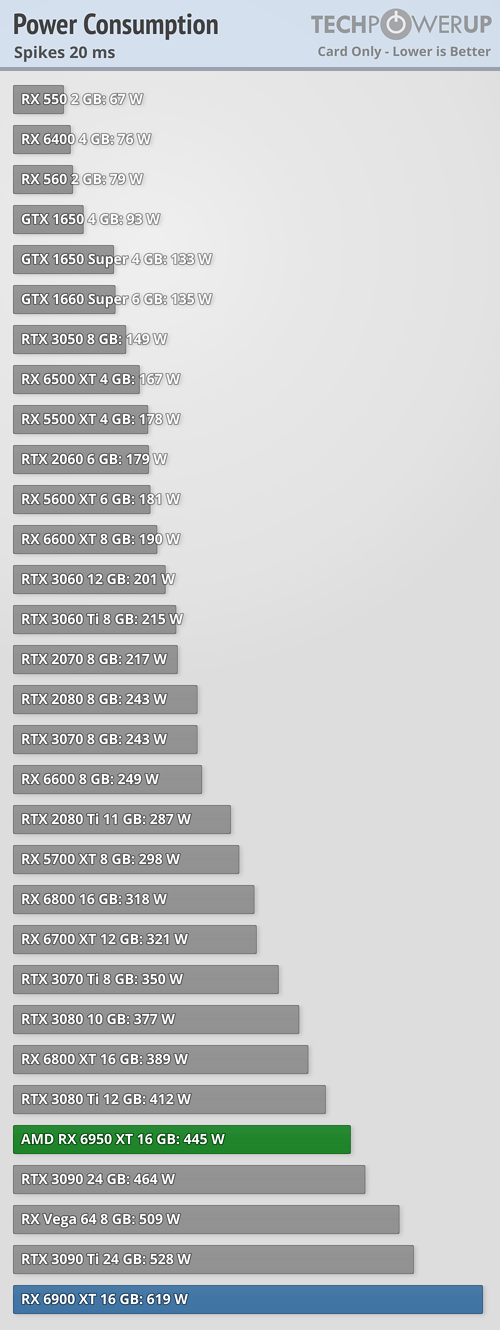

Long story short, in rasterized games the 4070 loses to the 6800 XT, which can be found for under $550 on Newegg. Meanwhile the 4070 is priced at $599, which used to be xx80-series pricing.

Is Nvidia just out of touch or too greedy for their own good?

The good news is that the 4070 does manage to beat the 6800 XT when ray tracing is enabled in games that support it. But that's about all the good news.

Is anyone seriously going to buy this card? It feels like the lame duck out of the Nvidia 40xx series offerings.

The 4090 is the top end card, so all the people who want top of market performance will gladly pay nosebleed prices. The price isn't even relevant to them.

The 4080 is the next best card. It's relatively bad value at $1200, but it's the next tier down for people who have to respect a budget and will begrudgingly pay over the odds for an upgrade.

Meanwhile, what is even the point of the 4070? It loses to cheaper cards in purely rasterized games, and it doesn't offer top-end performance. It honestly just feels like a card Nvidia sells because the chips couldn't reach 4080 specs, i.e. factory-reject 4080s get "cut down" and sold as 4070s. Thoughts?
