> In case you would sell some FW900 parts, please let me know! I am interested.

Likely going to keep a full set of boards for spare parts, but I just re-read his messages and realized he said he has an extra set of boards for his FW900 that he's also going to give me. I shouldn't realistically need two full sets of backup boards, so I will keep you posted!


24" Widescreen CRT (FW900) From Ebay arrived, Comments.

- Thread starter mathesar


Xar

Limp Gawd

- Joined: Dec 15, 2022
- Messages: 227

Have any of you tried retro games modded with NvTrueHDR v1.3 on your CRT?

I'm away and can't test it. I've heard it looks a lot better than Windows 11 Auto HDR, and gorgeous on self-emissive display tech like OLED and plasma. I assume that's the case with CRTs like the F520, FW900, 2070SB, P1130, and P275 as well.

Comixbooks

Fully [H]

- Joined: Jun 7, 2008
- Messages: 22,019

rabidz7

[H]ard|Gawd

- Joined: Jul 24, 2014
- Messages: 1,331

Is LAGRUNAUER still in business selling CRTs?

cesarioFL71

Limp Gawd

- Joined: Nov 26, 2021
- Messages: 372

> Is LAGRUNAUER still in business selling CRTs?

Last I heard he was still in the "trade", but he was out of FW900s and had few spare parts.

> Was able to secure another FW900 for parts (damaged tube, but the boards are supposed to be fine). Only aiming to swap out the S board for now, to see if it brings my dynamic convergence back to life.
>
> Will let you all know how that goes.

Update: The S board has been stuck in customs for 7 days now. I think they think it's a bomb. Lol.

Edit: It arrived the day after I posted this, and appears to be in pristine condition! Wish me luck with the disassembly; I always hate that part.


insanelyinnocent

n00b

- Joined: Feb 26, 2012
- Messages: 10

> I see StarTech has a relatively new adapter that uses the Synaptics VMM2322, which is the chip in the Sunix DPU3000, with its ~545MHz pixel clock.
>
> https://media.startech.com/cms/pdfs/mdp2dvid2_datasheet.pdf
>
> Only problem is it's only advertised as a dual-link DVI adapter. But some of the pictures show it has a DVI-I connector. Maybe it's wishful thinking to believe the analog pins might be wired up?
>
> Then, there are other pictures that show it with a DVI-D connector.
>
> If it doesn't support analog VGA in any of its revisions, I'm wondering if it might still be possible to wire up the 5 analog RGB pins to the chip, then flash it with Sunix firmware.
>
> It's a little too expensive to buy one just to try. I have the tools to do it, though.

I bought this on Amazon for about £100 after googling that it uses the Synaptics VMM2322. I tried it with my FW900 and a very old Dell LCD panel: I get no picture, but both are recognised in Windows and macOS, meaning the EDID is being passed through. I'm tempted to open it up and see if maybe the VGA pins simply aren't soldered, or maybe I got a dud. I already have the cheaper StarTech DP2VGAHD20, which works at 1920x1200@80Hz, but the picture quality is rubbish.

Enhanced Interrogator

[H]ard|Gawd

- Joined: Mar 23, 2013
- Messages: 1,429

> I bought this on Amazon for about £100 after googling that it uses the Synaptics VMM2322. I tried it with my FW900 and a very old Dell LCD panel: I get no picture, but both are recognised in Windows and macOS, meaning the EDID is being passed through. I'm tempted to open it up and see if maybe the VGA pins simply aren't soldered, or maybe I got a dud. I already have the cheaper StarTech DP2VGAHD20, which works at 1920x1200@80Hz, but the picture quality is rubbish.

So it does have the DVI-I layout? Some of the pictures I've seen had the DVI-D layout instead.

Definitely take some pictures if you open it up. I'm guessing the analog pins aren't soldered.

Would be really nice if we could find a datasheet for this chip.

insanelyinnocent

n00b

- Joined: Feb 26, 2012
- Messages: 10

> So it does have the DVI-I layout? Some of the pictures I've seen had the DVI-D layout instead.

Yeah, I thought I could find one easily online, but no luck. I will try emailing Synaptics today. Not too keen on opening it yet, as it wasn't cheap, and if I can't get VGA out of it I'll have no use for it.

> Anybody here doing interlacing? 2304x1440i @120Hz is pretty darn amazing.

Prefer running 1512x945 @ 120Hz and then using Nvidia DLDSR to push the res to 2268x1418 @ 120Hz: less faffing about with a secondary card, and more compatible imo.

> Prefer running 1512x945 @ 120Hz and then using Nvidia DLDSR to push the res to 2268x1418 @ 120Hz: less faffing about with a secondary card, and more compatible imo.

That sounds like a nice alternative res, I'll definitely give that a shot! I'm already running a 1080 Ti and have high-bandwidth HDMI-to-VGA DAC options, but there are some games that just say "No, I will not let you interlace". I usually just settle for a lower refresh rate in those cases, but I'm interested in seeing how games take to 1512x945 @ 120Hz + DLDSR on my RTX rig.

Hey y'all, I just picked up a Sun GDM-5410, which is only 4:3 but has a whopping 121kHz horizontal scan rate, letting me push high refresh rates out of it. It powers on and lights the power button LED, but the LED blinks orange (instead of green) and no picture appears... There isn't any sound from the guts of the display when I turn it on, but my PC detects it as connected at 720p 60Hz, which is well within this monitor's range. The cable and PC are good; I tested them on another CRT. I took it apart and didn't get any voltage from the flyback to the anode, so I'm thinking it's as simple as a bad flyback. This model shares the same flyback as the G500 and the FW900, which is why I'm here... Is there anything I could do to make sure it is indeed the flyback before I go begging for parts? lol
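A rough sketch of why a 121kHz horizontal scan rate matters: a CRT has to sweep every line of a frame, blanking included, on each refresh, so its maximum progressive refresh rate is roughly the horizontal scan limit divided by the total lines per frame. The ~5% vertical blanking used below is an illustrative assumption, not a measured GDM-5410 timing, and `max_refresh_hz` is just a hypothetical helper.

```python
def max_refresh_hz(h_scan_hz: float, total_lines: int) -> float:
    """Highest progressive refresh rate a CRT can sustain for a frame
    of total_lines lines (active + vertical blanking)."""
    # The beam sweeps h_scan_hz lines per second and must cover every
    # line of the frame once per refresh.
    return h_scan_hz / total_lines

# Assumption: ~5% extra lines for vertical blanking (illustrative only).
for active in (768, 1200, 1536):
    total = round(active * 1.05)
    print(f"{active} active lines ({total} total): ~{max_refresh_hz(121_000, total):.1f} Hz")
```

Real CRU/GTF timings will shift these numbers a bit, but the scaling holds: more lines per frame means proportionally fewer refreshes per second at the same scan rate.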


As soon as the LED is blinking orange, that means a fault has been detected and the power supply is shut down. So yes, there won't be any anode voltage; that makes sense. And BTW, trying to measure the anode voltage is stupidly risky: do you realize it is normally several TENS of KILOVOLTS?

insanelyinnocent

n00b

- Joined: Feb 26, 2012
- Messages: 10

> Yeah, I thought I could find one easily online, but no luck. I will try emailing Synaptics today. Not too keen on opening it yet, as it wasn't cheap, and if I can't get VGA out of it I'll have no use for it.

I've had no reply from Synaptics. I took a closer look at the StarTech adapter, and as it turns out, the analog pins in the DVI socket aren't populated. So at least I know I don't just have a dud.

> I bought this on Amazon for about £100 after googling that it uses the Synaptics VMM2322. I tried it with my FW900 and a very old Dell LCD panel: I get no picture, but both are recognised in Windows and macOS, meaning the EDID is being passed through. I'm tempted to open it up and see if maybe the VGA pins simply aren't soldered, or maybe I got a dud. I already have the cheaper StarTech DP2VGAHD20, which works at 1920x1200@80Hz, but the picture quality is rubbish.

I find the image quality with the DP2VGAHD20 to be quite pleasing. Bought a spare or two.

jbltecnicspro

[H]F Junkie

- Joined: Aug 18, 2006
- Messages: 9,541

> I'm the oddball running 2235x1397 @ 83Hz, calculated to a 375MHz pixel clock. No flickering, and silky smooth when locked to the refresh rate. I'm also on the StarTech DP2VGAHD20, and I do have a spare just in case.

My man. Another reason CRT rocks: it will draw whatever you tell it to, provided it's in range.
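For context on that 375MHz figure: pixel clock = horizontal total × vertical total × refresh rate, where both totals include blanking. The actual porch and sync values weren't posted, so only the overall ratio of total to visible pixels can be recovered from the quoted numbers. A minimal sketch; `blanking_overhead` is a hypothetical helper, not part of any tool.

```python
def blanking_overhead(active_w: int, active_h: int, refresh_hz: float, pixel_clock_hz: float) -> float:
    """Ratio of total pixel slots per frame (active + blanking) to visible pixels.

    pixel_clock = h_total * v_total * refresh, so
    h_total * v_total = pixel_clock / refresh.
    """
    total_pixels_per_frame = pixel_clock_hz / refresh_hz
    return total_pixels_per_frame / (active_w * active_h)

ratio = blanking_overhead(2235, 1397, 83, 375e6)
print(f"total/active = {ratio:.3f}")  # each frame carries ~45% more pixel slots than are visible
```

That factor of about 1.45 reflects the generous blanking intervals CRT timings reserve for beam retrace, which is why a CRT mode's pixel clock is well above width × height × refresh.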

> Anybody here doing interlacing? 2304x1440i @120Hz is pretty darn amazing.

How do you do it? The Nvidia Control Panel doubles the bandwidth, making it impossible, and CRU adds it, but when I click Apply in the control panel nothing happens.

> Prefer running 1512x945 @ 120Hz and then using Nvidia DLDSR to push the res to 2268x1418 @ 120Hz: less faffing about with a secondary card, and more compatible imo.

How do you do it? The Nvidia Control Panel doubles the bandwidth, making it impossible, and CRU adds it, but when I click Apply in the control panel nothing happens.

> My man. Another reason CRT rocks: it will draw whatever you tell it to, provided it's in range.

How do you do it? The Nvidia Control Panel doubles the bandwidth, making it impossible, and CRU adds it, but when I click Apply in the control panel nothing happens. (Sorry for the spam... I've been looking forward to a reply from someone... now impatiently, haha)

jbltecnicspro

[H]F Junkie

- Joined: Aug 18, 2006
- Messages: 9,541

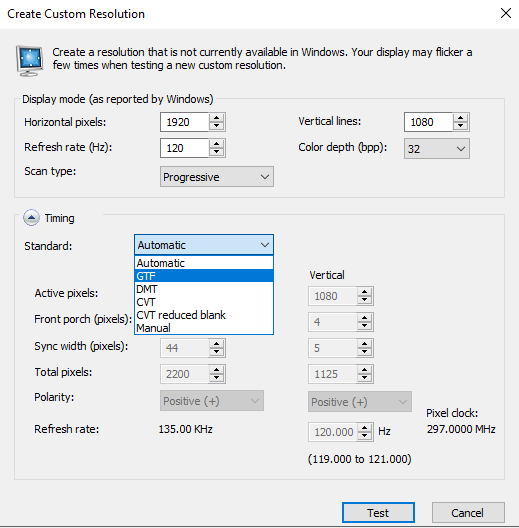

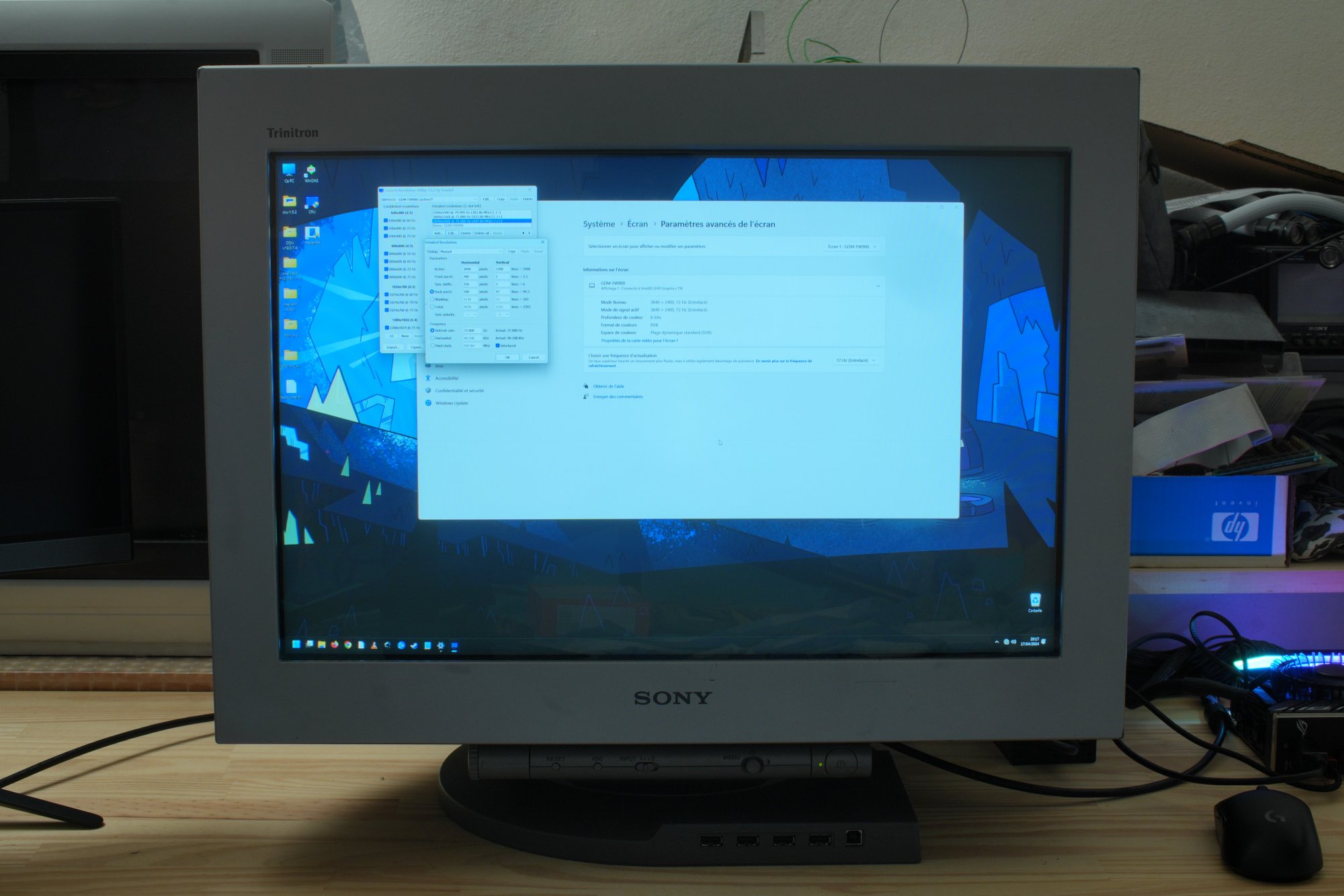

Nvidia gives you options as to which timing formula to use. I had good results using the GTF formulas, if I recall correctly:How do you do it ? Nvidia control panel doubles the bandwidth, making it impossible, and, CRU adds it, but, when I click apply on the CP nothing happens. (sorry for spam... been looking forward to a reply from someone...now impatiently haha)

etienne51

Limp Gawd

- Joined: Mar 20, 2015
- Messages: 130

> How do you do it? The Nvidia Control Panel doubles the bandwidth, making it impossible, and CRU adds it, but when I click Apply in the control panel nothing happens. (Sorry for the spam... I've been looking forward to a reply from someone... now impatiently, haha)

Timings are either CVT or GTF. CVT always works fine; I seem to remember that for GTF it depends on the resolution.

---

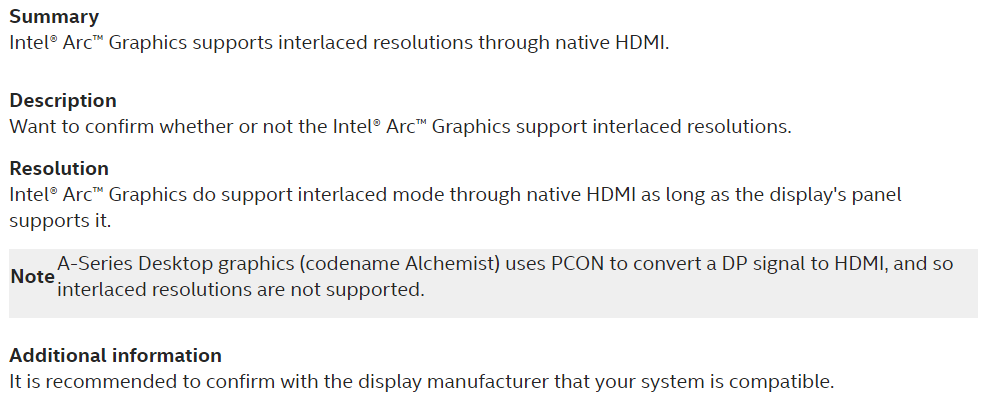

A few days ago I got my hands on an Intel ARC A380, so I could test interlaced resolution support myself. I found no real reports out there, and some contradictory information.

I did a complete write-up on Reddit; I don't know if I'm allowed to post links here, but I'll try.

https://www.reddit.com/r/crtgaming/comments/1c0w4w3/interlaced_on_intel_arc_a380_sort_of_working/

Short version: no support at all on the latest drivers (31.0.101.5382), but when I tried the very first drivers supporting the A380 (30.0.101.1736), interlaced resolutions work!

It looks like HDMI caps at 1080i60; beyond that, the resolutions just don't show up in Windows anymore. It might be an error on my part, but I had a bunch of issues with the HDMI port, even on LCD displays, so I just moved to DisplayPort for further testing.

On DisplayPort, though, any interlaced resolution entered in CRU shows up in the Windows display settings. The issue is a limitation at around 220MHz pixel clock: past that point the picture starts to get messed up from the bottom, and the higher the pixel clock, the further the corruption creeps toward the top.

I was able to make the following resolutions work flawlessly:

- 1920x1440i, the highest 100% working refresh rate was 110.33Hz (221.71MHz pixel clock)

- At 1600x1200i, it was 154.46Hz (223.63MHz pixel clock, a bit higher for some reason, limitation might not be pixel clock then)

- Also tested 2880x1800i at 60Hz, working fine very close to the Intel ARC limit as well (still far from the monitor limit of 140Hz...)
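Why interlacing stretches the pixel-clock budget: each field carries only half of a frame's lines, so at the same refresh (field) rate an interlaced mode needs about half the pixel clock of the progressive equivalent. The sketch below inverts that relation to recover the total pixels per frame from the 1920x1440i result above, then computes what the same timings would need in progressive mode. It assumes identical blanking in both cases, which is an approximation, and `pixel_clock_hz` is a hypothetical helper.

```python
def pixel_clock_hz(frame_total_pixels: float, refresh_hz: float, interlaced: bool) -> float:
    """Pixel clock for a mode whose full frame (active + blanking) holds
    frame_total_pixels = h_total * v_total pixels.

    For interlaced modes, refresh_hz is the field rate: each field scans
    only half of the frame's lines, so the clock is half the progressive one.
    """
    pixels_per_refresh = frame_total_pixels / 2 if interlaced else frame_total_pixels
    return pixels_per_refresh * refresh_hz

# Reported ARC result: 1920x1440i at 110.33Hz needed 221.71MHz.
# Invert the interlaced formula to recover h_total * v_total.
frame_pixels = 2 * 221.71e6 / 110.33

print(f"total/active ratio: {frame_pixels / (1920 * 1440):.3f}")
print(f"same timings, progressive: {pixel_clock_hz(frame_pixels, 110.33, interlaced=False) / 1e6:.2f} MHz")
```

This is also one plausible reading of the Nvidia "doubled bandwidth" confusion in this thread: a readout that applies the progressive formula to an interlaced mode would show exactly twice the real clock. That is a guess, not confirmed driver behavior.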

So I would much rather use 1920x1440 at 90Hz progressive than at 110Hz interlaced. In the current state of things, while ARC supports interlaced, it might only be interesting for people wanting to run lower resolutions, like driving a 1080i HD CRT TV, or maybe 480i (I haven't tested that yet...).

So this was an interesting test for sure, and I'm glad I was finally able to get that information. At least we now know the ARC hardware supports interlaced, contrary to what Intel claims (see the Note section on the page linked below). It's once again a driver issue...

But I don't think any future update will change that. And I'm a bit worried about 15th gen Intel CPUs, but I guess I'll give one a test when they're released.

(https://www.intel.com/content/www/us/en/support/articles/000094488/graphics.html)

Next up, I'm expecting delivery of an Asus B660-I motherboard early next week, with a temporary Intel Pentium G7400 (Intel UHD 710, same driver as the UHD 770 found in the higher-end i5-i9).

I've heard a few reports of interlaced working on the iGPU, but again with older driver versions. It should not be a problem, so I'm going to give this a try and figure out what the limits are.

If I can finally run 1920x1440i at 170Hz with these iGPUs, I will put my RTX 4060 Low Profile in it, start testing some recent games at high res, and soon get a proper CPU (since it's going in a tiny case, probably the i5 13600K).

I will report back when I'm done testing.


Enhanced Interrogator

[H]ard|Gawd

- Joined: Mar 23, 2013
- Messages: 1,429

> How do you do it? The Nvidia Control Panel doubles the bandwidth, making it impossible, and CRU adds it, but when I click Apply in the control panel nothing happens. (Sorry for the spam... I've been looking forward to a reply from someone... now impatiently, haha)

If I remember correctly, Nvidia will show double the bandwidth in the UI, but when it actually displays the resolution, it outputs the correct (meaning half) bandwidth.

> Nvidia gives you options as to which timing formula to use. I had good results using the GTF formulas, if I recall correctly:

GTF is what doubles it...

> If I remember correctly, Nvidia will show double the bandwidth in the UI, but when it actually displays the resolution, it outputs the correct (meaning half) bandwidth.

Well, when I click Apply it just says "test failed" and "...not supported by your display".

> Timings are either CVT or GTF. CVT always works fine; I seem to remember that for GTF it depends on the resolution.

CVT didn't work either... thanks for your replies, though.

> How do you do it? The Nvidia Control Panel doubles the bandwidth, making it impossible, and CRU adds it, but when I click Apply in the control panel nothing happens.

Sorry for the late reply. Nvidia's DLDSR only works on the highest currently available resolution, so I keep several preset CRU bins with my desired resolutions and refresh rates.

I tend to swap between different resolutions depending on the game I’m playing.

For example, I'm currently playing Persona 3 Reload on Xbox Game Pass, which supports 120Hz. This gives me two options. I can load CRU with only 1512x945 @ 120Hz (no other resolutions); I actually find this resolution perfectly fine for P3R. Or I can bump it up via Nvidia DLDSR by going into the Nvidia Control Panel, selecting "Manage 3D Settings", and under "DSR - Factors", choosing 2.25x DL Scaling.

This should enable a resolution of 2268x1418 @ 120Hz.
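Where 2268x1418 comes from: the 2.25x DLDSR factor multiplies the total pixel count, so each axis is scaled by √2.25 = 1.5 (1512 × 1.5 = 2268, 945 × 1.5 = 1417.5). A small sketch of that arithmetic; rounding the half pixel up to 1418 matches the figure above, but how the driver rounds in general is an assumption here, and `dldsr_resolution` is a hypothetical helper.

```python
import math

def dldsr_resolution(width: int, height: int, factor: float) -> tuple[int, int]:
    """Render resolution for a DSR/DLDSR factor.

    The factor multiplies the total pixel count, so each axis is scaled
    by sqrt(factor). Rounding half up to a whole pixel is an assumption,
    not documented driver behavior.
    """
    scale = math.sqrt(factor)
    return math.floor(width * scale + 0.5), math.floor(height * scale + 0.5)

print(dldsr_resolution(1512, 945, 2.25))  # (2268, 1418)
```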

You can either leave the desktop at 1512x945, as the new resolution should now be selectable in-game, or switch to the new resolution straight away if the game doesn't recognize it.

Tip: Make sure the resolution you want to upscale is the highest available resolution. Also, when I'm gaming on the FW900, I tend to set it as the main display.

I have attached a zip file with the pre-made CRU bins, along with a list of the refresh rates at native resolutions and their corresponding DLDSR resolutions, to help you choose.

Most of the CRU bins work fine on the Delock 62967; however, the 2800+ resolutions only work on the LK7112. The Delock caps out at 2700 at 60Hz, but your mileage may vary. The 160Hz options should include 640x400, which is perfect for Sea of Stars.

So yeah, I find these bins perfect for me. I can switch to the best 60Hz option for games that are locked to 60Hz; the other options depend on whether my 3080 Ti can hit those frame rates, with DLDSR as a fallback if I want to explore higher resolutions.


etienne51

Limp Gawd

- Joined: Mar 20, 2015
- Messages: 130

> Next up, I'm expecting delivery of an Asus B660-I motherboard early next week, with a temporary Intel Pentium G7400 (Intel UHD 710, same driver as the UHD 770 found in the higher-end i5-i9).

Alright, here it is! Today I was finally able to test the latest-gen Intel integrated graphics with interlaced modes.

This time I went straight to DisplayPort, tried the default drivers installed by Windows Update, and as expected... no interlacing. No surprise there.

So I used DDU to remove them and downgraded to a random version, 30.0.101.207, that I found online. My thinking was that version 31 seems to have dropped interlaced support, so probably any 30.x version would work. Sure enough, it works!

With ARC I was limited to 110Hz at 1920x1440 interlaced, but here... it went up to 170Hz without the slightest issue! Working perfectly, awesome! What a relief!

Next it was time to test higher resolutions and find the limits. I remember that with my Intel 9700K I couldn't display high resolutions; the horizontal pixel count was capped, if I'm not mistaken. So I was expecting something similar here.

But to my surprise... I was able to display a whopping 4080x2550i (16:10) at 62Hz!! Or, maybe more realistically, 2200x1650i (4:3) at 165Hz, for example. I also tried 4K 16:9, 3840x2160i at 77Hz. I suppose 72Hz would be best for multimedia playback.

Absolutely mind-blowing! I always wanted access to interlaced resolutions that high, so I could display stuff like 4K. Especially with the already crazy-high 540MHz bandwidth of the Sunix DPU3000, there is a lot of room for high resolutions at really good refresh rates when using interlaced!

Now, unfortunately, there is still a limitation, as usual. Here, the pixel clock is capped at precisely 458.49MHz on DisplayPort. Go just one step over in the last decimal place, and the custom resolution won't show up anymore. I tested that exact limit with a few different resolutions, and it stays the same.

HDMI is super limited once again; I can't go over 1080i 60 or so. I didn't test much longer or look for the exact limits, since it looked pointless to me.

So I'm curious whether ToastyX could work something out for Intel drivers, if he gets access to the hardware. It would be insane if the limit could be lifted here, allowing the full bandwidth of the adapters for both progressive and interlaced resolutions! Maybe lift the HDMI limit too, I don't know.

Note that at resolutions as high as 4K, the odd and even lines are almost on top of each other, and you just can't see it's interlaced anymore. I looked very closely and couldn't tell.

Also, it's crazy that at 4080x2550i on my GDM-F520, with Windows UI scaling set to 100%... I was still just barely able to read fine text in the Windows Settings app, looking closely at the screen. It's super tiny and just barely readable, but you can see the F520 is the sharpest Trinitron tube, and it shows! I haven't tested on the FW900 yet.

Now, to me this is the best solution I've ever tried for using interlaced on a recent PC. Sadly, I really dislike the latest-gen Intel CPUs, and would much rather wait for next-gen AMD & Intel... But with results like that, I'll be getting a

One more thing I need to test is actually playing at high resolutions and refresh rates with a rendering GPU passing frames to the iGPU for display. It's not great to test that with a super slow Intel Pentium Gold, but I'm sure I can try older games where it will get lots of fps. Hopefully nothing bottlenecks here, but I'll find out, I guess.

Big compromise with this solution though... Now I'm stuck with one CPU generation, which is why I really hoped Intel ARC would be just as good... Then I could upgrade to Intel 15th gen or next-gen Ryzen, just plug in ARC, and keep going. Now I can't upgrade, at least not this PC.

I'm not sure Intel 15th gen iGPUs will behave similarly, considering even the current gen has no interlaced support with recent drivers. I really don't understand that; it was working so well, so why just remove it with an update like that?

It's weird, because they say interlaced works on their iGPUs, when it really doesn't with the latest drivers. Maybe it'll come back with a future update, no idea. I would be very curious to test a 15th gen CPU.


etienne51

Limp Gawd

- Joined: Mar 20, 2015
- Messages: 130

Awesome to see another video on that legendary thing! And it looks like more is to come in the future on those Siemens black-and-white models!

https://www.youtube.com/watch?v=asfViYLpShM

etienne51

Limp Gawd

- Joined: Mar 20, 2015
- Messages: 130

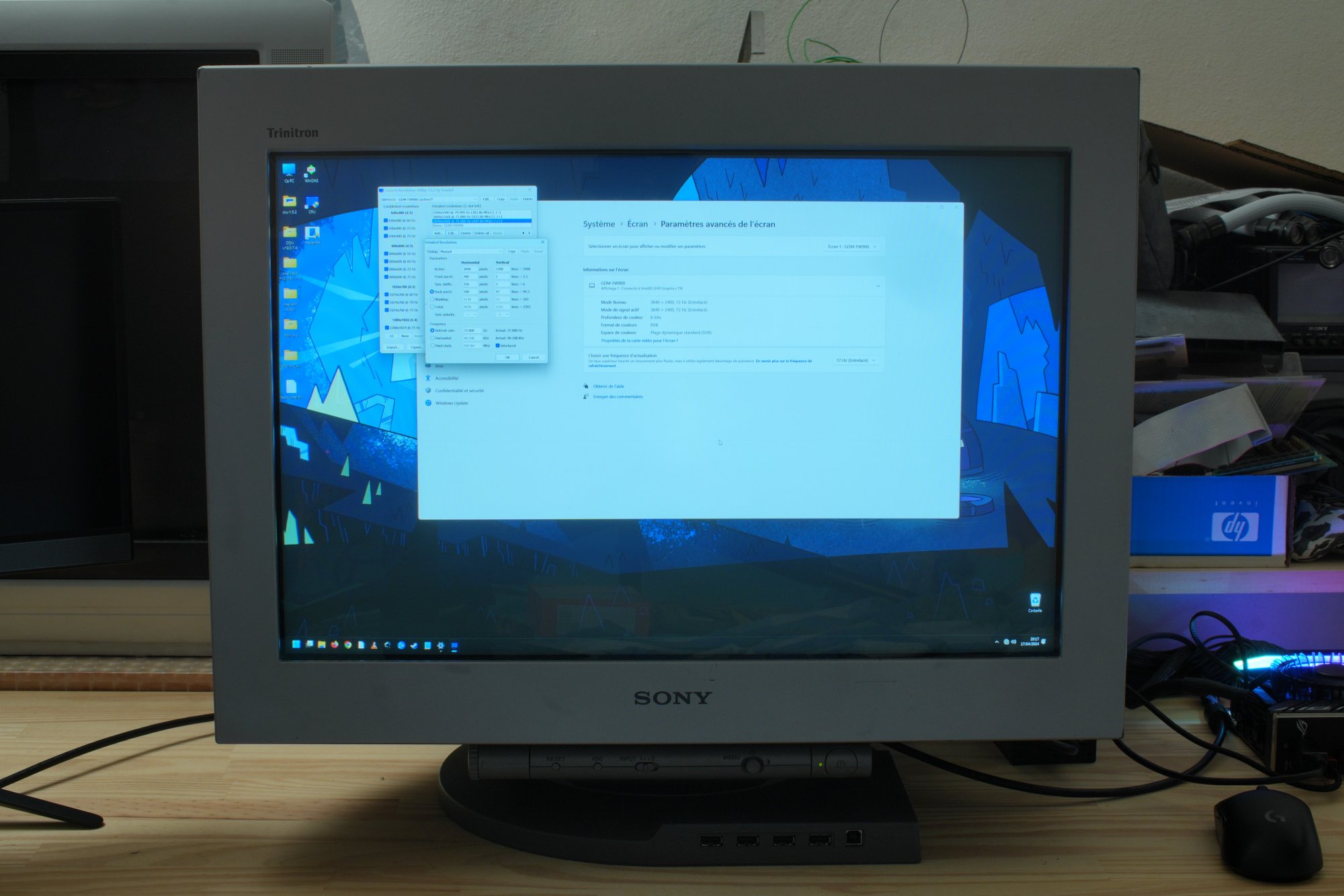

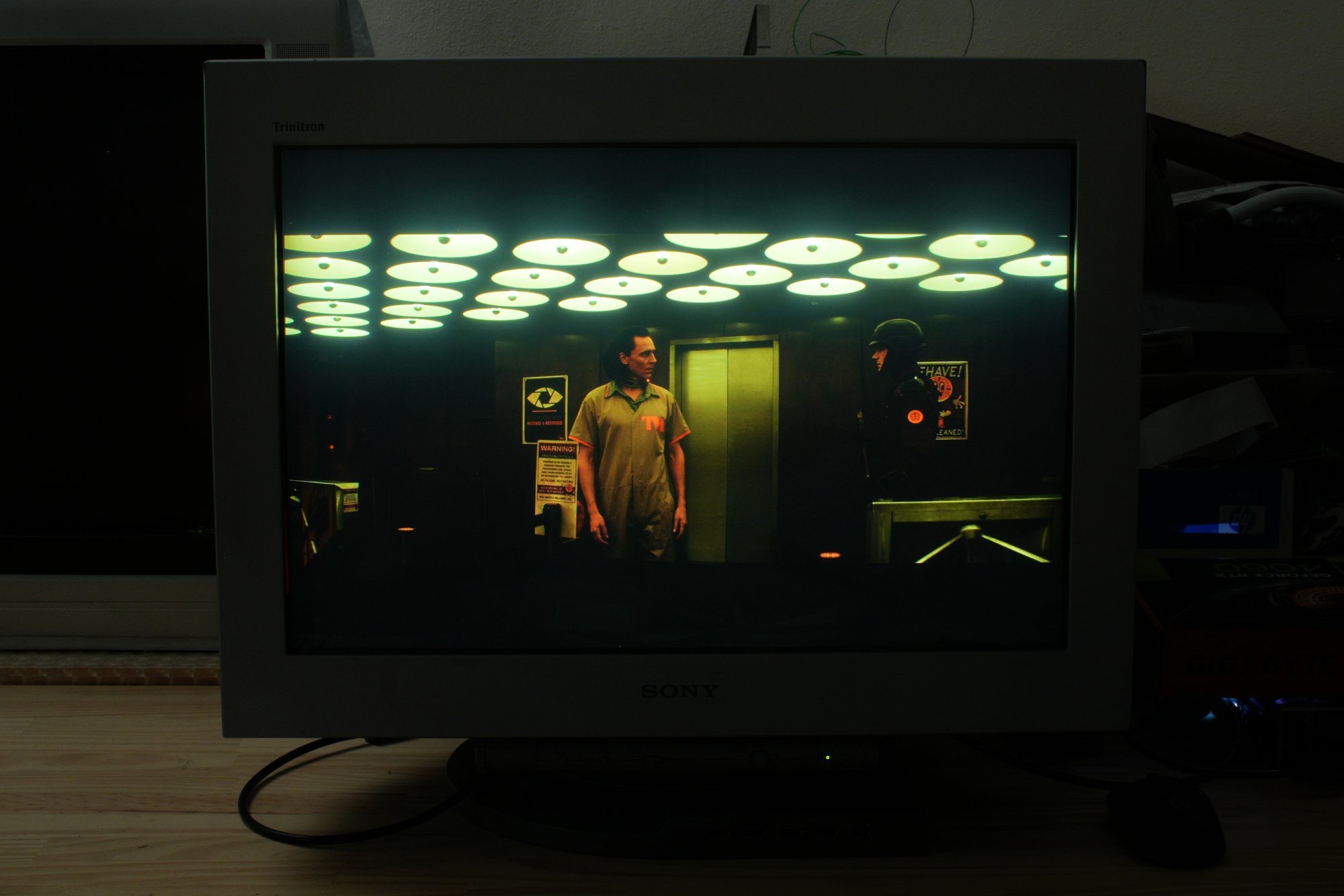

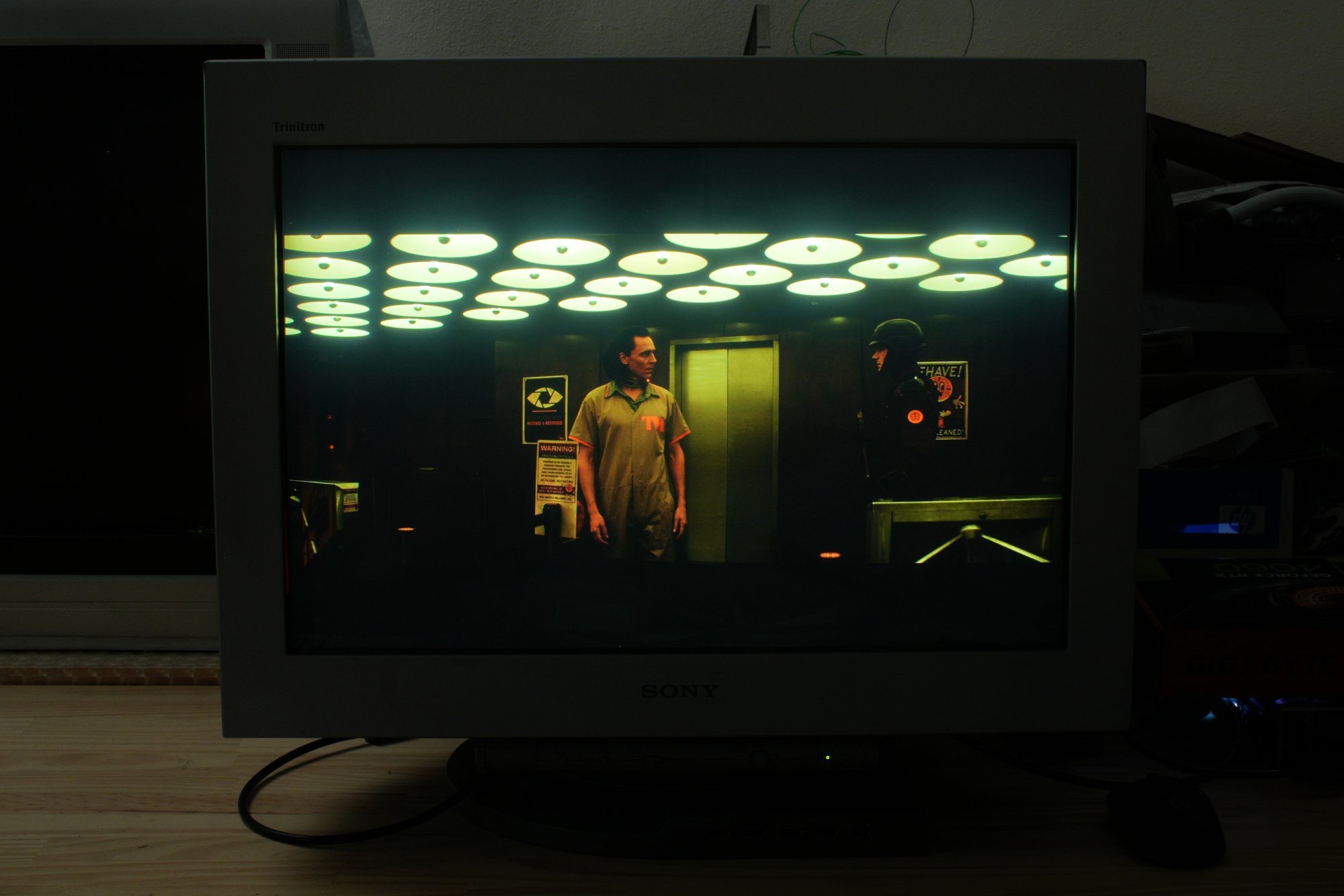

Today I hooked up the FW900 to the Intel iGPU, and 3840x2400i at 72Hz looks absolutely stunning on it! I had to tweak the timings a little to get below the 458.49MHz limit, but it worked flawlessly. Crystal clear picture!

At only 90.1kHz and 72Hz, it's far from this monitor's maximum limits, which is quite impressive for such a high resolution!
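Those figures can be cross-checked. For an interlaced mode, the horizontal frequency equals lines per field × field rate, and the pixel clock equals horizontal total × horizontal frequency, so a pixel-clock cap directly bounds the horizontal total. The exact totals used for 3840x2400i weren't posted; this sketch only derives what the stated 90.1kHz, 72Hz and 458.49MHz figures imply, and `interlaced_budget` is a hypothetical helper.

```python
def interlaced_budget(h_freq_hz: float, field_rate_hz: float, pixel_clock_cap_hz: float) -> tuple[float, float, int]:
    """Back out timing limits for an interlaced mode.

    Returns (lines per field, lines per frame, largest whole h_total
    whose pixel clock stays at or under the cap).
    """
    lines_per_field = h_freq_hz / field_rate_hz  # lines scanned in one field
    frame_lines = 2 * lines_per_field            # two fields make one frame
    # pixel clock = h_total * horizontal frequency, so the cap bounds h_total.
    max_h_total = int(pixel_clock_cap_hz // h_freq_hz)
    return lines_per_field, frame_lines, max_h_total

lpf, frame_lines, max_h = interlaced_budget(90.1e3, 72, 458.49e6)
print(f"~{lpf:.0f} lines/field, ~{frame_lines:.0f} lines/frame, h_total <= {max_h}")
```

With 2400 active lines, that leaves roughly 100 lines of vertical blanking per frame, and at most about 1248 pixels of horizontal blanking beyond the 3840 active pixels. Since 90.1kHz is a rounded figure, treat these as estimates.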

I watched a couple of episodes of Loki on it!

> Today I hooked up the FW900 to the Intel iGPU, and 3840x2400i at 72Hz looks absolutely stunning on it! I had to tweak the timings a little to get below the 458.49MHz limit, but it worked flawlessly. Crystal clear picture!

I love you.

> Today I hooked up the FW900 to the Intel iGPU, and 3840x2400i at 72Hz looks absolutely stunning on it! I had to tweak the timings a little to get below the 458.49MHz limit, but it worked flawlessly. Crystal clear picture!

The fantastic FW900 does not disappoint.

> Sorry for the late reply. Nvidia's DLDSR only works on the highest currently available resolution, so I keep several preset CRU bins with my desired resolutions and refresh rates.

I'm not looking to upscale, just looking to interlace.
