BigZuesz

n00b

- Joined: Jan 11, 2022
- Messages: 17

I plan on upgrading to a whole new system in the next month, but I want to use this setup temporarily until I get the rest of my parts. I'm going from micro ATX to mini ITX. Correct me if I'm wrong, but I'm not sure whether the smaller power supply that mini ITX builds use will fit in a big case.

My current Specs are B450 Motherboard micro atx,

16 gb ddr4 3100 Mhz corsair vengeance ram,

1tb M.2 SSD,

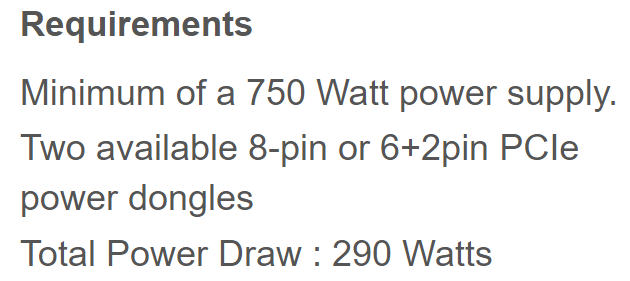

gtx 1050 ti GPU, "my rtx 3070 ti Ftw3 is coming in a few days"

Ryzen 5 2600X

EVGA 500 BR Bronze Power Supply

